7 ways SRE teams can reduce incident management MTTR

TL;DR: Reducing MTTR by up to 80% requires eliminating coordination overhead, not just typing faster. Coordination typically consumes more time than actual repair work (assembling teams, finding context, switching tools). Automate responder assembly to cut 10-15 minutes per incident. Adopt Slack-native ChatOps to eliminate context-switching. Deploy AI SREs that automate up to 80% of incident response through autonomous investigation and fix suggestions. Auto-capture timelines to eliminate 60-90 minutes of post-mortem reconstruction. Teams using this approach see median P1 MTTR drop from 48 minutes to under 30 minutes.

Most SRE teams see median P1 MTTR between 45 and 60 minutes. Here's a typical 48-minute breakdown: 12 minutes assembling the team and gathering context, 20 minutes troubleshooting the actual issue, 4 minutes on mitigation, and 12 minutes cleaning up (updating status pages, creating tickets, starting the post-mortem). The problem isn't the technical fix. The problem is everything else.

The typical incident response process creates massive coordination overhead. You check PagerDuty to find who's on-call, open Datadog for metrics, coordinate in Slack, take notes in Google Docs, create Jira tickets, and update Statuspage. Six tools. Twelve minutes of logistics before troubleshooting starts. Each tool switch loses context. Each manual lookup slows response. Each "who should I page?" decision burns time while customers are impacted.

This is the coordination tax. Reducing MTTR isn't about hiring faster engineers. It's about removing the friction that slows them down. By automating team assembly, centralizing context in Slack, and using AI to handle the grunt work, teams can reduce incident resolution time significantly.

What is MTTR and why is it the metric that matters?

Mean Time to Resolution measures the average time it takes to fully resolve an incident, from detection through recovery. MTTR includes multiple phases: detection, acknowledgment, coordination, investigation, repair, and recovery.
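The definition above describes a mean, while the rest of this article quotes median P1 MTTR. A minimal sketch, assuming hypothetical incident records with detection and resolution timestamps (the Incident type and its fields are illustrative), computes both statistics. Note how one long outage pulls the mean far above the median.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean, median


@dataclass
class Incident:
    # Hypothetical record shape; real platforms expose their own fields.
    severity: str
    detected_at: datetime
    resolved_at: datetime

    @property
    def minutes_to_resolve(self) -> float:
        return (self.resolved_at - self.detected_at).total_seconds() / 60


def mttr_minutes(incidents: list[Incident], severity: str) -> tuple[float, float]:
    """Return (mean, median) time to resolution in minutes for one severity."""
    durations = [i.minutes_to_resolve for i in incidents if i.severity == severity]
    if not durations:
        raise ValueError(f"no incidents with severity {severity!r}")
    return mean(durations), median(durations)


start = datetime(2024, 1, 1, 9, 0)
incidents = [
    Incident("P1", start, start + timedelta(minutes=30)),
    Incident("P1", start, start + timedelta(minutes=48)),
    Incident("P1", start, start + timedelta(minutes=180)),  # one long outage
    Incident("P2", start, start + timedelta(minutes=90)),
]
print(mttr_minutes(incidents, "P1"))  # mean 86.0, median 48.0
```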

MTTR matters because downtime is expensive. For mid-market SaaS companies, one hour of downtime can cost $25,000-$100,000 in lost revenue, customer trust, and team productivity. Multiply that by 15-20 incidents per month, and you see why optimizing MTTR is business-critical.

Elite-performing teams restore service faster because they eliminate coordination waste, not because they have better engineers. Analysis of over 100,000 real incidents across companies of all sizes shows that coordination and investigation phases consume the majority of incident time, while the actual technical repair is often quick once the right team has the right context.

The hidden components of MTTR:

- Time to Detect (MTTD): How long until you know there's a problem

- Time to Acknowledge (MTTA): How long until someone starts working on it

- Coordination time: Assembling the right responders, establishing communication channels, gathering context

- Time to Repair (MTTRr): Actually fixing the issue

- Recovery time: Verifying the system is healthy again

- Documentation time: Writing the post-mortem so the issue doesn't recur
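To see where the minutes go, each phase can be timed from the timestamp that marks its start. This sketch assumes hypothetical phase boundaries and reuses the 12/20/4/12-minute breakdown from the introduction; real data would come from your incident platform's timeline.

```python
from datetime import datetime, timedelta

# Hypothetical phase names; each phase runs from its own timestamp to the next one.
PHASES = ["assembly", "troubleshooting", "mitigation", "cleanup"]


def phase_minutes(boundaries: list[datetime]) -> dict[str, float]:
    """Split an incident into phases given len(PHASES) + 1 ordered timestamps."""
    if len(boundaries) != len(PHASES) + 1:
        raise ValueError("need one timestamp per phase start plus the end time")
    return {
        phase: (end - begin).total_seconds() / 60
        for phase, begin, end in zip(PHASES, boundaries, boundaries[1:])
    }


t0 = datetime(2024, 1, 1, 2, 47)
offsets = [0, 12, 32, 36, 48]  # minutes after the alert: 12 + 20 + 4 + 12
durations = phase_minutes([t0 + timedelta(minutes=m) for m in offsets])
total = sum(durations.values())
overhead = durations["assembly"] + durations["cleanup"]  # non-technical work
print(durations)
print(f"{overhead:.0f} of {total:.0f} minutes are coordination overhead")
```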

The goal: eliminate the waste in every phase except repair, because that's the only phase adding technical value.

1. Automate responder assembly to eliminate the “who is on-call” scramble

At 2:47 AM, PagerDuty fires an alert. You wake up, check the alert, realize this is a database issue, and now you're hunting for who's on-call for the database team. You check the Google Sheet. It's out of date. You ping someone on Slack. They're asleep. You try their backup. Another 15 minutes gone.

Manual on-call lookup creates what we call the assembly tax. Industry data shows that teams spend on average a third of their time responding to disruptions, and a significant chunk of that goes to figuring out who should be working on the problem.

The fix: automated escalation policies that trigger on alert attributes.

Modern incident management platforms parse the alert payload, identify the affected service from your catalog, and page the right on-call engineer automatically. No spreadsheets. No guessing. No manual lookups.
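A minimal sketch of that routing step, assuming alerts carry a service:<name> tag and using a hypothetical in-memory catalog and on-call map (SERVICE_CATALOG, ON_CALL and the fallback rotation are illustrative, not any vendor's API):

```python
# Hypothetical catalog: service name -> owning team, and team -> current on-call.
SERVICE_CATALOG = {
    "checkout-api": {"team": "payments"},
    "orders-db": {"team": "database"},
}
ON_CALL = {"payments": "alice", "database": "bob"}
FALLBACK_RESPONDER = "sre-primary"  # hypothetical catch-all rotation


def parse_tags(tags: list[str]) -> dict[str, str]:
    """Turn 'key:value' tags into a dict (split once, so values may contain ':')."""
    return dict(tag.split(":", 1) for tag in tags if ":" in tag)


def route_alert(alert: dict) -> str:
    """Return who to page for an alert payload that has a 'tags' list."""
    service = parse_tags(alert.get("tags", [])).get("service")
    entry = SERVICE_CATALOG.get(service)
    if entry is None:
        # Unknown or untagged service: page the catch-all rotation instead of guessing.
        return FALLBACK_RESPONDER
    return ON_CALL[entry["team"]]


alert = {"title": "High p99 latency", "tags": ["env:prod", "service:checkout-api"]}
print(route_alert(alert))
```

The fallback matters: an untagged alert still pages someone, which is why tagging every alert with a service identifier is the first implementation step.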

"The Slack integration is very well done, and allows both technical teams and stakeholders to collaborate effectively without constant context switching." - Verified user review of incident.io

When a Datadog alert fires for API latency in your checkout service, incident.io creates a dedicated Slack channel #inc-2847-api-latency, pages the on-call SRE, pulls in the service owner from the catalog, and starts capturing the timeline. Total assembly time: under 2 minutes. Compare that to 15 minutes of manual coordination.

How to implement this:

- Map alerts to services: Tag your Datadog, Prometheus, or New Relic alerts with service identifiers

- Define escalation paths: Primary on-call has 5 minutes to acknowledge; if they don't, the secondary gets paged automatically

- Auto-create incident channels: No more manually typing /create-incident in Slack

- Pull in stakeholders based on severity: P1 incidents auto-notify VP Engineering, P2s stay within the team
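The escalation path can be modeled as an ordered list of tiers, each with an acknowledgment timeout. This is a hypothetical simulation (tier names, timeouts and the escalate helper are illustrative) that shows how long a page sits before someone owns it:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Tier:
    responder: str
    ack_timeout_min: int  # how long this tier gets before the page escalates


def escalate(tiers: list[Tier], ack_after_min: dict[str, float]) -> tuple[Optional[str], float]:
    """Walk the policy in order; return (who acknowledged, minutes since the first page).

    ack_after_min maps responder -> minutes after *their* page that they would
    acknowledge; a responder missing from the map never acknowledges.
    """
    elapsed = 0.0
    for tier in tiers:
        ack = ack_after_min.get(tier.responder)
        if ack is not None and ack <= tier.ack_timeout_min:
            return tier.responder, elapsed + ack
        elapsed += tier.ack_timeout_min  # timed out: page the next tier
    return None, elapsed  # nobody acknowledged


policy = [Tier("primary-oncall", 5), Tier("secondary-oncall", 5), Tier("eng-manager", 10)]
print(escalate(policy, {"secondary-oncall": 2}))  # primary asleep, secondary acks
```

With the primary asleep, the secondary owns the page 7 minutes after the alert: the 5-minute primary timeout plus 2 minutes to acknowledge.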

Across 20 incidents per month, saving 10 minutes per incident reclaims 200 minutes monthly, or 3.3 hours of engineering time.

2. Centralize context with a unified incident management platform

You're troubleshooting an API timeout. The error logs are in Datadog. The service ownership is in your wiki. The recent deploys are in GitHub. Similar past incidents are in Jira. The runbook is in Confluence. Five tools. Five context switches. Each switch pulls your attention away from solving the actual problem.

When responders can see service owners, dependencies, recent changes, and past incidents in one view, they troubleshoot faster. Research shows that coordination overhead drops significantly when context is centralized instead of scattered across multiple tools.

The service catalog is the foundation.

A service catalog is a living registry of your infrastructure: who owns each service, what it depends on, recent deploys, health metrics, and runbooks. During an incident, this context surfaces automatically instead of requiring manual detective work.
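Under the hood, a service catalog is a graph. As a sketch, assuming a hypothetical dictionary-based catalog (the service names, owners and runbook paths are made up), inverting the depends_on edges lists every service that depends, directly or transitively, on a failing component:

```python
from collections import deque

# Hypothetical catalog entries: owner, runbook, and the services each one calls.
CATALOG = {
    "web-frontend": {"owner": "web", "runbook": "runbooks/web", "depends_on": ["checkout-api"]},
    "checkout-api": {"owner": "payments", "runbook": "runbooks/checkout",
                     "depends_on": ["orders-db", "payments-gw"]},
    "orders-db": {"owner": "database", "runbook": "runbooks/orders-db", "depends_on": []},
    "payments-gw": {"owner": "payments", "runbook": "runbooks/payments-gw", "depends_on": []},
}


def impacted_by(failing: str) -> set[str]:
    """Breadth-first search over reversed edges: who calls `failing`, and who calls them."""
    dependents: dict[str, list[str]] = {name: [] for name in CATALOG}
    for name, entry in CATALOG.items():
        for dep in entry["depends_on"]:
            dependents[dep].append(name)
    seen: set[str] = set()
    queue = deque([failing])
    while queue:
        for caller in dependents[queue.popleft()]:
            if caller not in seen:
                seen.add(caller)
                queue.append(caller)
    return seen


def incident_context(service: str) -> dict:
    """Bundle the catalog facts a responder would want pinned in the incident channel."""
    entry = CATALOG[service]
    return {"owner": entry["owner"], "runbook": entry["runbook"],
            "impacted": sorted(impacted_by(service))}


print(incident_context("orders-db"))
```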

"incident.io makes incidents normal. Instead of a fire alarm you can build best practice into a process that everyone can understand intuitively and execute." - Verified user review of incident.io

When an incident channel is created for your checkout API, the service catalog automatically displays:

| Context Type | Source | Value During Incident |
|---|---|---|
| Service ownership | Catalog | Know who to page immediately |
| Recent deploys | GitHub/GitLab integration | Identify likely culprit (bad deploy) |
| Dependencies | Catalog + monitoring | See upstream/downstream impact |
| Past incidents | Historical incident data | Learn from similar issues |
| Runbooks | Confluence/Notion integration | Follow established procedures |
| Health metrics | Datadog/Prometheus | See real-time impact |

incident.io's integration capabilities connect Datadog, Jira, GitHub, Confluence, and 100+ other tools so context flows into the incident channel automatically. You're not replacing your monitoring stack; you're centralizing the coordination layer.

3. Adopt ChatOps to stop the “tab-switching tax”

Tab-switching during an incident isn't just annoying. It's cognitively expensive. Every switch from Slack to PagerDuty to Datadog to your web-based incident management UI pulls your attention away from the problem. You lose the mental model you were building.

Research on disruption response shows that tool sprawl is one of the primary drivers of extended MTTR. Teams using Slack-native incident management eliminate this overhead.

Slack-native means the incident lives entirely in the channel.

Instead of managing the incident in a web UI that sends updates to Slack, you manage the incident entirely in Slack using slash commands:

- /inc escalate @database-team: Page the DB team

- /inc assign @sarah: Make Sarah the incident commander

- /inc severity high: Escalate to P1

- /inc update API response times still elevated, investigating connection pool: Log a status update

- /inc resolve fixed by increasing connection pool size: Close the incident
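Part of why these commands are easy to adopt is that the grammar is tiny: an action followed by an optional argument. The parser below is a hypothetical sketch of that grammar using the five actions shown above (IncCommand and parse_inc are illustrative names); it is not how incident.io actually implements /inc.

```python
from dataclasses import dataclass

# Actions taken from the examples above; the rest of this parser is hypothetical.
KNOWN_ACTIONS = {"escalate", "assign", "severity", "update", "resolve"}


@dataclass
class IncCommand:
    action: str
    argument: str  # a mention, a severity, or a free-text note, depending on the action


def parse_inc(text: str) -> IncCommand:
    """Split '/inc <action> [argument]' into its parts, keeping the argument verbatim."""
    parts = text.strip().split(maxsplit=2)
    if len(parts) < 2 or parts[0] != "/inc":
        raise ValueError("expected '/inc <action> [argument]'")
    action = parts[1]
    if action not in KNOWN_ACTIONS:
        raise ValueError(f"unknown action {action!r}")
    argument = parts[2] if len(parts) == 3 else ""
    return IncCommand(action, argument)


cmd = parse_inc("/inc update API response times still elevated, investigating connection pool")
print(cmd.action, "->", cmd.argument)
```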

"incident.io strikes just the right balance between not getting in the way while still providing structure, process and data gathering to incident management." - Verified user review of incident.io

These commands feel like natural Slack messages because they are Slack messages. New engineers see experienced responders using /inc commands and copy the pattern. Onboarding time for on-call rotation drops from 2-3 weeks to 2-3 days.

Why Slack-native architecture matters:

- Zero training required: Engineers already know how to use Slack

- No context-switching: Everything happens in one tool

- Faster adoption: 178 reviews on G2 specifically praise the Slack-native workflow and ease of use

- Mobile-friendly: Respond from your phone using the Slack app you already have

The difference between Slack-native and Slack-integrated is architectural. Most incident management tools are web-first with Slack bolted on. incident.io built the entire platform to be Slack-native from day one.

4. Deploy AI SREs for autonomous investigation and root cause analysis

For decades, incident response has followed the same pattern: alert fires, human investigates, human identifies root cause, human implements fix. The bottleneck is human availability and cognitive load. At 3 AM, even senior engineers need time to orient themselves, pull logs, correlate metrics, and pattern-match against past incidents.

AI SREs change this equation. They don't just summarize logs or correlate alerts. They autonomously investigate, identify likely root causes, and suggest specific fixes.

What is an AI SRE?

An AI SRE is an agentic AI assistant that operates with a "human-on-the-loop" model rather than "human-in-the-loop." It takes autonomous actions (querying logs, analyzing metrics, identifying code changes) and presents findings for human approval, rather than waiting for humans to prompt each step.

"The AI features really reduce the friction of incident management... It's easy to set up, and easy to use." - Verified user review of incident.io

incident.io's AI SRE autonomously handles investigation by:

- Identifying the likely change behind incidents: Correlates alert timing with recent deploys, config changes, or infrastructure updates

- Opening pull requests directly in Slack: Suggests code fixes and can generate PRs for review

- Pulling relevant metrics and logs: Gathers diagnostic data without manual queries

- Suggesting next steps based on past incidents: Pattern-matches against your incident history to recommend proven solutions

| Capability | Traditional Tools | AI SRE (Agentic) |
|---|---|---|
| Alert correlation | Manual, based on rules | Autonomous, based on patterns |
| Root cause identification | Engineer investigates | AI suggests likely culprit with confidence score |
| Fix suggestions | Runbook lookup | AI generates specific fix PRs |
| Learning from history | Manual post-mortem review | AI applies learnings automatically |

A practical guide to AI SRE tools shows that modern platforms are moving beyond basic log correlation to autonomous investigation. If an AI SRE can identify "Deploy #4872 to checkout-api at 14:32 UTC" as the likely culprit within 30 seconds, you skip 15-20 minutes of manual correlation work.
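The core of "correlate alert timing with recent deploys" can be illustrated with a deliberately simple heuristic: pick the most recent change to the alerting service within a lookback window. This is an assumption-laden sketch (the Change type, the 30-minute window and the deploy data are hypothetical), not how any AI SRE actually scores suspects; it produces no confidence score and ignores changes to other services.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Change:
    change_id: str
    service: str
    at: datetime  # all times UTC in this example


def likely_culprit(alert_service: str, alert_at: datetime, changes: list[Change],
                   window: timedelta = timedelta(minutes=30)) -> Optional[Change]:
    """Most recent change to the alerting service within `window` before the alert."""
    candidates = [
        c for c in changes
        if c.service == alert_service and alert_at - window <= c.at <= alert_at
    ]
    return max(candidates, key=lambda c: c.at, default=None)


changes = [
    Change("Deploy #4871", "checkout-api", datetime(2024, 5, 1, 13, 5)),
    Change("Deploy #4872", "checkout-api", datetime(2024, 5, 1, 14, 32)),
    Change("Config change #88", "search-api", datetime(2024, 5, 1, 14, 30)),
]
suspect = likely_culprit("checkout-api", datetime(2024, 5, 1, 14, 35), changes)
print(suspect.change_id if suspect else "no recent change")
```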

Trust and the "human-on-the-loop" model:

AI SRE suggests actions ("I believe Deploy #4872 caused this. Shall I open a rollback PR?"), but humans approve execution. You maintain control while offloading investigation toil.
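The human-on-the-loop model boils down to separating proposal from execution. In this hypothetical sketch (ApprovalGate and ProposedAction are illustrative names, not a real API), the agent can queue actions, but the side effect only runs when an authorized human approves it.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ProposedAction:
    summary: str                # what the AI wants to do, shown to the humans
    execute: Callable[[], str]  # the side effect, deferred until approval
    approved_by: list[str] = field(default_factory=list)


class ApprovalGate:
    """The agent may propose actions, but only an approver can run them."""

    def __init__(self, approvers: set[str]):
        self.approvers = approvers
        self.pending: list[ProposedAction] = []

    def propose(self, action: ProposedAction) -> None:
        self.pending.append(action)

    def approve(self, action: ProposedAction, user: str) -> str:
        if user not in self.approvers:  # check before touching the queue
            raise PermissionError(f"{user} cannot approve actions")
        self.pending.remove(action)
        action.approved_by.append(user)
        return action.execute()


gate = ApprovalGate(approvers={"sarah"})
rollback = ProposedAction("Open a rollback PR for Deploy #4872", lambda: "rollback PR opened")
gate.propose(rollback)
print(gate.approve(rollback, "sarah"))
```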

Watch the AI SRE introduction video for a full walkthrough of autonomous investigation in action.

5. Automate status page updates to reduce communication toil

During a P1 incident, your support team gets flooded with "is this fixed yet?" tickets. Your VP of Engineering is asking for updates every 10 minutes. Customers are checking your status page and seeing nothing because you forgot to update it in the chaos of troubleshooting.

Manual status page updates are a coordination failure. They require someone to remember to switch contexts from fixing the problem to communicating about the problem.

The fix: link incident severity to automatic status page updates.

Modern platforms update your status page automatically based on incident state:

- Incident declared with severity "high" → Status page posts "Investigating: API response times elevated"

- Incident updated with /inc update identified database connection pool issue → Status page updates to "Identified: Connection pool exhaustion, implementing fix"

- Incident resolved with /inc resolve → Status page updates to "Resolved: API response times back to normal"

Zero manual effort. Zero forgotten updates.
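The state-to-message mapping can be expressed as a small lookup. A minimal sketch, assuming hypothetical severity labels and a rule (in line with the severity-to-visibility practice in this section) that only P1 and P2 incidents are customer-facing:

```python
from typing import Optional

# Hypothetical rules: which severities are public, and the status label per incident state.
PUBLIC_SEVERITIES = {"P1", "P2"}
STATUS_FOR_STATE = {
    "declared": "Investigating",
    "identified": "Identified",
    "resolved": "Resolved",
}


def status_page_update(severity: str, state: str, message: str) -> Optional[str]:
    """Return the status page text for an incident change, or None if it stays internal."""
    if severity not in PUBLIC_SEVERITIES:
        return None
    return f"{STATUS_FOR_STATE[state]}: {message}"


print(status_page_update("P1", "declared", "API response times elevated"))
print(status_page_update("P3", "declared", "Internal batch job delayed"))
```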

"incident.io is a game-changer for incident response... packed with powerful features like automated timelines, role assignments, and customizable workflows." - Verified user review of incident.io

Status page best practices:

- Map severity to visibility: Not every P3 incident needs a customer-facing update

- Auto-subscribe stakeholders: VP Engineering gets notifications on P1s automatically

- Template your messaging: "API response times elevated" reads better than "database is broken"

- Close the loop: Status page resolves automatically when you type /inc resolve

incident.io's status pages for startups walk through setup in under 5 minutes.

6. Auto-capture timelines to eliminate post-mortem archaeology

Three days after a P1 incident, you sit down to write the post-mortem. You scroll back through the incident Slack channel trying to remember what happened. You check PagerDuty for the alert timestamps. You look at Datadog for when metrics spiked. Ninety minutes later, you have an incomplete, probably inaccurate post-mortem.

This is post-mortem archaeology. You're reconstructing the crime scene after the evidence is cold.

Documented playbooks can cut MTTR by up to 80%, but only if people actually write them. The problem: writing post-mortems from memory is painful, time-consuming, and often skipped entirely when the team is busy shipping features.

The fix: capture the timeline in real-time, not after the fact.

Every Slack message in the incident channel, every /inc command, every role assignment, every status update, every decision made on a call is captured automatically. When you type /inc resolve, the timeline is already complete. AI then drafts the post-mortem using this captured data. You spend 10-15 minutes refining it, not 90 minutes writing it from scratch.
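A timeline that is already complete at resolve time is just an append-only log written as events happen. This hypothetical sketch (TimelineEvent and Timeline are illustrative names) records events as they arrive and sorts them by timestamp when rendering, since integrations may deliver them slightly out of order:

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class TimelineEvent:
    at: datetime
    actor: str
    kind: str  # e.g. "message", "command", "role", "status"
    text: str


class Timeline:
    """Append-only: events are recorded as they happen and never edited."""

    def __init__(self) -> None:
        self._events: list[TimelineEvent] = []

    def record(self, event: TimelineEvent) -> None:
        self._events.append(event)

    def render(self) -> list[str]:
        # Sort by timestamp because events can arrive slightly out of order.
        ordered = sorted(self._events, key=lambda e: e.at)
        return [f"{e.at:%H:%M} {e.actor} [{e.kind}] {e.text}" for e in ordered]


tl = Timeline()
tl.record(TimelineEvent(datetime(2024, 5, 1, 14, 40), "sarah", "command", "/inc assign @sarah"))
tl.record(TimelineEvent(datetime(2024, 5, 1, 14, 35), "bot", "status", "Incident declared (P1)"))
tl.record(TimelineEvent(datetime(2024, 5, 1, 15, 2), "sarah", "command",
                        "/inc resolve rolled back to 2.4.0"))
print("\n".join(tl.render()))
```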

"The velocity of development and integrations is second to none. Having the ability to manage an incident through raising - triage - resolution - post-mortem all from Slack is wonderful." - Verified user review of incident.io

A comparison of automated post-mortems across incident.io, FireHydrant, and PagerDuty shows that platforms using AI-drafted post-mortems reduce documentation time by 75%, with post-mortems published within 24 hours instead of 3-5 days.

The Scribe Advantage:

incident.io's Scribe AI transcribes incident calls in real-time via Google Meet or Zoom integration. When someone says "We deployed version 2.4.1 at 14:30 and that's when the errors started," Scribe captures it. When the team decides "Let's roll back to 2.4.0," Scribe notes it. No designated note-taker needed.

Watch how note-taking during incident response works without pulling someone away from the technical work.

7. Use data-driven insights to identify reliability risks

Your VP Engineering asks: "Are we getting better at incidents?" You spend 4 hours exporting Jira tickets, correlating Slack channels, and building a spreadsheet because your incident data is scattered across three tools.

Visibility into incident patterns is critical for improving reliability, but most teams lack it because their data is fragmented.

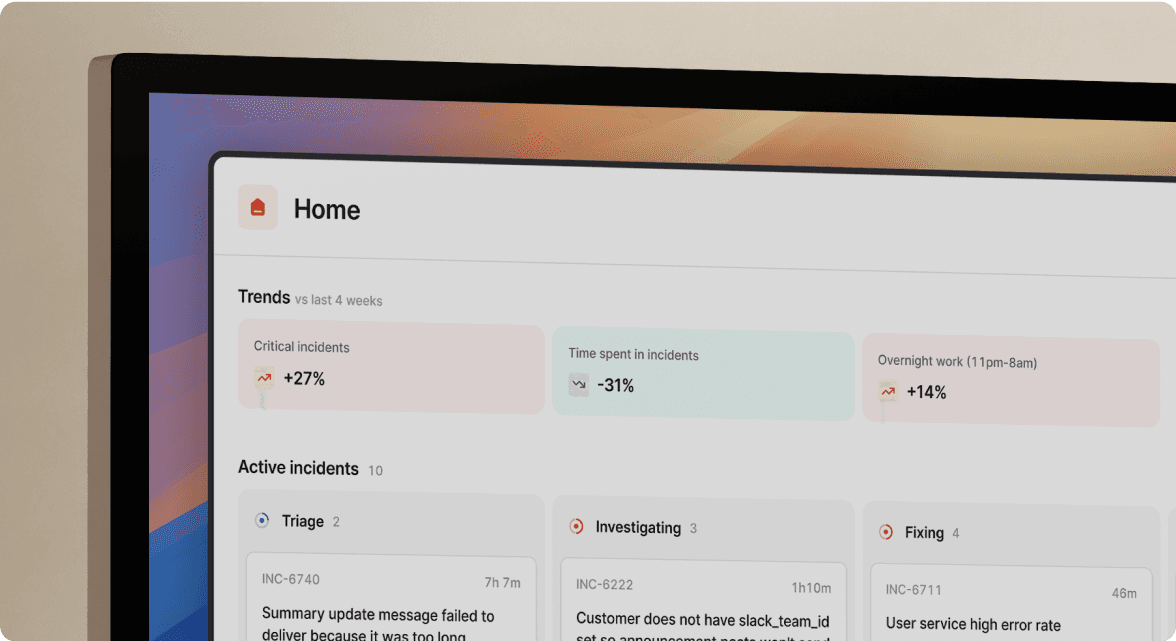

The Insights dashboard: MTTR trends, incident patterns, and ROI proof.

Modern platforms track incident metrics automatically because they're managing the incidents. No manual exports. No spreadsheet wrangling. The data flows from your incident workflow into dashboards showing:

- MTTR trends over time: Are we getting faster at resolution? Which teams are improving?

- Incident frequency by service: Which services are generating the most incidents?

- Top incident types: Are we seeing patterns (database timeouts, API rate limits, deploy failures)?

- Response time by severity: Are P1s being handled with appropriate urgency?

- On-call burden: Who's handling the most incidents? Is the load balanced?
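Because the platform already holds every incident record, these views are simple aggregations. A sketch over a hypothetical export of (service, severity, responder, minutes to resolve) tuples, computing incident frequency by service and a rough on-call burden check:

```python
from collections import Counter

# Hypothetical export of resolved incidents: (service, severity, responder, minutes)
incidents = [
    ("checkout-api", "P1", "alice", 42),
    ("checkout-api", "P2", "alice", 25),
    ("orders-db", "P1", "bob", 31),
    ("checkout-api", "P1", "carol", 18),
    ("search-api", "P3", "alice", 60),
]

by_service = Counter(service for service, _, _, _ in incidents)
by_responder = Counter(responder for _, _, responder, _ in incidents)

# Share of all incidents handled by the busiest responder: a quick load-balance check.
busiest, busiest_count = by_responder.most_common(1)[0]
print(by_service.most_common(1))
print(f"{busiest} handled {busiest_count / len(incidents):.0%} of incidents")
```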

Analyzing the entire incident lifecycle means tracking more than just resolution speed, but resolution speed is still the easiest improvement to quantify. For example, if your median P1 MTTR dropped from 48 to 30 minutes after adopting a new platform, that's a 37.5% improvement. Across 15 incidents per month, that's 270 minutes saved monthly, or 4.5 hours of engineering time reclaimed.
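The arithmetic in this example, as a small helper. Like the text, it treats the drop in median MTTR as the saving on every incident, which is an approximation:

```python
def mttr_improvement(before_min: float, after_min: float,
                     incidents_per_month: int) -> tuple[float, float, float]:
    """Return (percent improvement, minutes saved per month, hours saved per month)."""
    saved_per_incident = before_min - after_min
    pct = saved_per_incident / before_min * 100
    minutes = saved_per_incident * incidents_per_month
    return pct, minutes, minutes / 60


print(mttr_improvement(48, 30, 15))  # (37.5, 270, 4.5)
```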

"Great company, amazing product, world-class support. We've eliminated 3 other vendors and unified services onto Incident.io... The AI insights are super cool." - Verified user review of incident.io

Proving ROI to Leadership:

When your CTO asks "What's the ROI on this tooling investment?", the Insights dashboard provides concrete answers: 270 minutes saved per month (54 hours a year) at a $150/hour loaded cost = $8,100/year in reclaimed engineering time, plus reduced downtime impact and $5,400/year in tool consolidation savings from replacing PagerDuty + Statuspage.
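The ROI figure annualizes the monthly time saving. A sketch of that calculation, assuming the $150 loaded cost is an hourly rate (the only reading under which 270 minutes a month comes to $8,100 a year) and leaving out the harder-to-measure downtime impact:

```python
def annual_time_value(minutes_saved_per_month: float, loaded_cost_per_hour: float) -> float:
    """Dollar value per year of reclaimed engineering time."""
    hours_per_year = minutes_saved_per_month / 60 * 12
    return hours_per_year * loaded_cost_per_hour


time_value = annual_time_value(270, 150)  # 54 hours/year at $150/hour
consolidation = 5_400                      # tool-consolidation figure quoted above
print(f"${time_value:,.0f} time + ${consolidation:,} consolidation "
      f"= ${time_value + consolidation:,.0f}/year")
```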

See the full platform walkthrough for a demo of Insights in action.

Comparison: AI SRE capabilities vs. traditional SRE approaches

The shift from manual to AI-assisted incident response changes what work humans do during an incident. Instead of gathering logs and correlating metrics, engineers focus on decisions that require human judgment: which fix to apply, who else to involve, how to communicate impact.

| Capability | Traditional SRE (Manual) | AI SRE (Agentic) |
|---|---|---|
| Root cause analysis | Engineer manually correlates logs, metrics, and recent changes (15-20 mins) | AI suggests likely culprit within 30 seconds with confidence score |
| Timeline creation | Designated note-taker types updates into Google Doc (pulls them away from troubleshooting) | AI captures every Slack message, command, and call transcription automatically |
| Status page updates | Engineer context-switches to web UI, writes customer-facing copy (2-3 mins per update) | Automatic updates based on incident severity and state changes (0 minutes) |
| Fix suggestions | Engineer searches runbooks, checks past incidents, pattern-matches from memory | AI analyzes incident history, suggests proven solutions, generates fix PRs |
| Post-mortem generation | 60-90 minutes writing from memory 3-5 days after incident | 10-15 minutes editing AI-drafted post-mortem within 24 hours |

Pros and cons of AI-driven incident management

No solution is perfect. AI-driven incident management offers significant benefits, but it requires trust, integration work, and organizational buy-in.

Pros:

- Speed: Significant automation of incident response tasks means faster resolution and less manual toil

- Consistency: AI doesn't forget to update the status page or skip documentation

- Reduced burnout: On-call rotations become less painful when AI handles the grunt work

- Better learning: Auto-captured timelines and AI-drafted post-mortems mean every incident becomes a learning opportunity

- Scalability: Junior engineers can participate effectively because the platform guides them

Cons:

- Trust gap: Teams need time to build confidence in AI suggestions through repeated validation

- Integration overhead: AI SREs work best when integrated with your full toolchain (monitoring, source control, incident management), which requires setup

- Human oversight required: The "human-on-the-loop" model means someone still reviews AI actions before execution

- Dependency on data quality: If historical incident data is incomplete or inaccurate, AI suggestions may be less relevant

- Cost considerations: AI-powered platforms typically sit in higher pricing tiers (Pro/Enterprise plans)

The decision framework: if your team handles 10+ incidents per month and MTTR is a business-critical metric, the ROI on AI-driven incident management is clear. If you handle 1-2 incidents per quarter, manual processes are probably sufficient.

Learn more about automated incident response and where it's headed in 2025 and beyond.

Ready to reduce your MTTR by eliminating coordination overhead? Schedule a demo and run your first incident in Slack.

Key terminology

AI SRE: An agentic AI assistant that autonomously investigates incidents, identifies root causes, and suggests fixes using the human-on-the-loop model.

Agentic AI: AI that takes autonomous actions and presents findings for human approval, rather than waiting for humans to prompt each step.

Incident management platform: Software that unifies the complete incident lifecycle from detection through resolution, including on-call scheduling, response coordination, status pages, and post-mortems.

Mean Time to Resolution (MTTR): The average time it takes to fully resolve an incident, from detection through recovery and verification.

Service catalog: A living registry of your infrastructure showing service owners, dependencies, recent deploys, health metrics, and runbooks.

ChatOps: Managing operations (like incident response) directly in chat applications like Slack using slash commands instead of separate web UIs.

Timeline capture: Automatic recording of every action, decision, and status update during an incident for later analysis and post-mortem generation.

Human-on-the-loop: An AI operating model where AI takes autonomous actions but presents findings for human approval before execution.
