Incident management tool integration

Picture the scene: a high‑severity alert fires, Slack lights up, and dashboards scream red. You’re juggling Datadog, PagerDuty, Jira, and status pages while trying to coordinate fixes. The problem isn’t a lack of tools; it’s that they aren’t talking to each other. This guide explains why incident management tool integration matters, how it cuts response times, and where to start.

Why tool integration matters

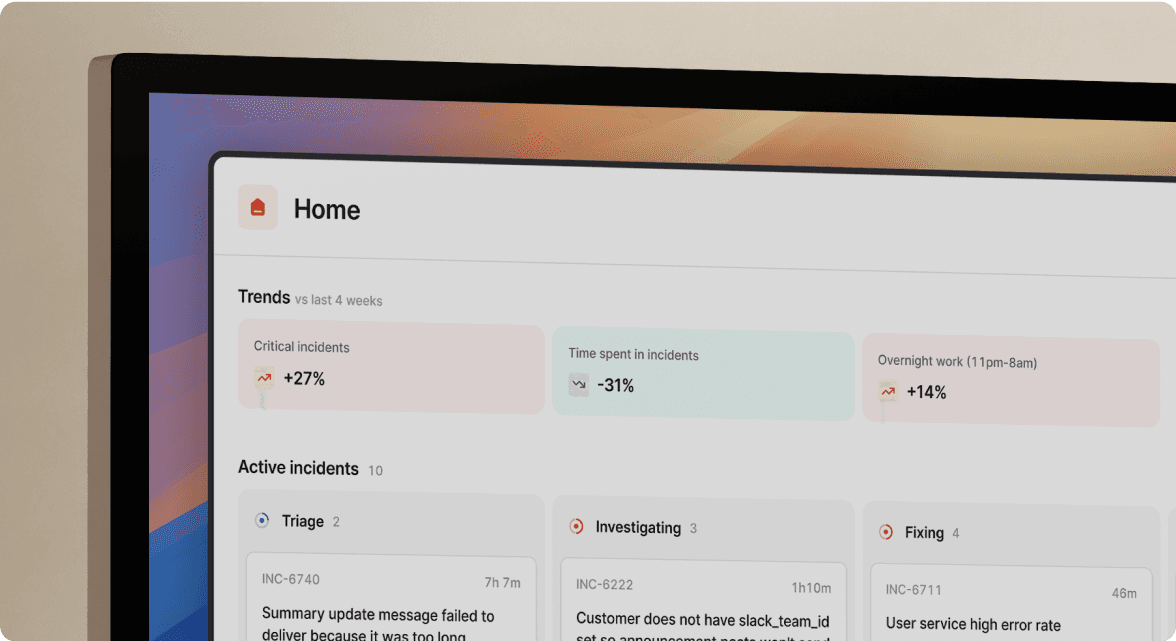

- Less context‑switching. Every hop between chat, paging, and monitoring costs seconds that add up. Integrated workflows surface alerts, metrics, and runbooks inside the incident channel so responders stay focused.

- Faster hand‑offs. When role assignments, escalations, or stakeholder updates trigger automatically, no one waits for a manual nudge. The next engineer or exec has the information they need the moment they join.

- Single source of truth. If alert details, decisions, and follow‑up tasks sync across systems, you avoid duelling updates and missing action items. Clean timelines make post‑incident reviews easier and more accurate.

- Healthier teams. Automating repetitive chores like posting updates, creating Jira tickets, and closing Slack channels reduces midnight busywork and burnout.

Core pieces of an integrated stack

| Lifecycle stage | Typical tooling | Integration win |
|---|---|---|
| Alerting and detection | Datadog, CloudWatch, Sentry | Auto-declare incidents when critical monitors fire; include graphs in chat for instant context |
| Paging and escalation | incident.io On-call, PagerDuty, Opsgenie | Trigger pages or overrides from the incident channel; reflect acknowledge and resolve status in the timeline |
| Communication | Slack, Microsoft Teams | Spin up a dedicated channel with pre-filled context; route status updates to exec-only channels |
| Tracking and follow-up | Jira, Linear, Asana | Create follow-up tickets from chat; sync status both ways so nothing falls through the cracks |
| Customer visibility | incident.io status pages, Atlassian Statuspage | Push incident state and the latest updates automatically, without copying text |
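To make the "auto-declare" win concrete, here is a minimal sketch of the glue between a monitor and a chat tool. It assumes a hypothetical alert payload with `id`, `title`, `severity`, `monitor`, and `graph_url` fields (real Datadog or CloudWatch payloads differ), and it only builds the declaration. Actually creating the channel or paging anyone is left to whichever connector you use.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

# Assumption: only these severities open an incident automatically; tune to your own scale.
AUTO_DECLARE_SEVERITIES = {"critical"}


@dataclass
class IncidentDeclaration:
    channel_name: str
    summary: str
    context: dict = field(default_factory=dict)


def slugify(text: str) -> str:
    """Lowercase text and collapse every run of non-alphanumeric characters into one hyphen."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def declare_from_alert(alert: dict) -> Optional[IncidentDeclaration]:
    """Map a hypothetical monitor payload to an incident, or return None to stay quiet."""
    if alert.get("severity") not in AUTO_DECLARE_SEVERITIES:
        return None  # lower-severity alerts stay in the monitoring tool
    # Slack limits channel names to 80 characters, so trim and drop a dangling hyphen.
    channel = f"inc-{alert['id']}-{slugify(alert['title'])}"[:80].rstrip("-")
    return IncidentDeclaration(
        channel_name=channel,
        summary=f"[{alert['severity'].upper()}] {alert['title']}",
        # Pre-filled context so responders see the graph without opening a dashboard.
        context={
            "monitor": alert.get("monitor"),
            "graph_url": alert.get("graph_url"),
        },
    )
```

Calling `declare_from_alert` with a critical alert titled "Checkout API: p99 latency > 2s" and id `4812` yields a channel named `inc-4812-checkout-api-p99-latency-2s`; a `warning` returns `None`. Keeping the severity check inside the glue code means noisy monitors never reach the incident channel in the first place.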

A real‑world example

At Intercom, engineers linked PagerDuty, Slack, and Jira into a single workflow. When PagerDuty fires, a Slack incident channel appears with the alert payload and a pre‑assigned lead. As responders chat, slash commands log follow‑up actions that land in Jira. According to Intercom’s SRE team, this cut their median time to assemble a response crew by roughly 40 percent and made retros far cleaner.
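Intercom's own implementation isn't described here, so the following is only a hypothetical sketch of the "slash command lands in Jira" step. It assumes a made-up `/inc follow-up <title>` command and a placeholder `INC` project key, and it produces a dict loosely shaped like the `fields` object of Jira's create-issue request. Check the Jira REST documentation for your version before sending it anywhere.

```python
from typing import Optional


def parse_follow_up(text: str, channel: str, project_key: str = "INC") -> Optional[dict]:
    """Turn '/inc follow-up <title>' into a ticket payload, or None if the text doesn't match.

    The command name and the 'INC' project key are hypothetical placeholders.
    """
    parts = text.strip().split(maxsplit=2)
    if len(parts) < 3 or parts[0] != "/inc" or parts[1] != "follow-up":
        return None  # not a follow-up command, or no title supplied
    title = parts[2].strip()
    # Shape loosely follows Jira's create-issue "fields" object; verify against your instance.
    return {
        "fields": {
            "project": {"key": project_key},
            "summary": title,
            "issuetype": {"name": "Task"},
            # Labelling with the channel name links the ticket back to the incident.
            "labels": ["incident-follow-up", channel],
        }
    }
```

Parsing in one small function keeps the chat-side syntax independent of the ticketing tool, so swapping Jira for Linear later only touches the payload shape.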

Steps to integrate without the headache

- Map your flow. List every touchpoint from alert to post‑mortem: where alerts appear, how paging works, and what ticketing system records tasks.

- Pick obvious wins first. High-frequency manual chores, such as creating chat rooms, paging responders, or copying alert details into chat, deliver quick value when automated.

- Favour native integrations or webhooks. Off‑the‑shelf connectors keep maintenance low. For niche tools, lightweight webhooks often beat full custom code.

- Keep humans in the loop. Automated escalations should still tap a person for judgment calls. Aim for assistance, not autopilot repairs.

- Measure improvements. Track metrics such as time to assemble responders or the number of missed updates before and after each integration. Data helps justify deeper work later.
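For the "measure improvements" step, a before-and-after comparison of median time to assemble responders is a few lines of Python. This sketch assumes you can already export per-incident assembly times in minutes; the sample numbers in the usage note are made up for illustration.

```python
from statistics import median


def summarize(before: list[float], after: list[float]) -> dict:
    """Compare median time-to-assemble (minutes) before and after an integration ships."""
    b = median(before)
    a = median(after)
    return {
        "median_before": b,
        "median_after": a,
        # Negative means faster; rounded to one decimal place for reporting.
        "change_pct": round((a - b) / b * 100, 1),
    }
```

With illustrative samples `[12, 18, 9, 25, 14]` before and `[9, 11, 8, 15, 10]` after, the medians are 14 and 10 minutes, a change of about -28.6 percent. The median is used rather than the mean so that one unusually slow incident doesn't dominate the comparison.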

Common pitfalls

- Treating integration as a one‑off project. Systems change, so keep a health check to ensure alerts still fire and timelines still sync.

- Over‑automating. If every low‑priority blip creates an incident, responders tune out. Gate integrations with severity filters.

- Ignoring post‑incident workflows. The follow‑up phase is where learning happens. Automate exporting timelines, action items, and graphs so retrospectives start with accurate data.
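One way to keep an integration from quietly rotting is a scheduled health check. This minimal sketch assumes you record when each integration last delivered an event (for example, a periodic synthetic test alert); the integration names and the 24-hour threshold in the usage note are hypothetical.

```python
from datetime import datetime, timedelta


def stale_integrations(
    last_seen: dict[str, datetime],
    expected: set[str],
    now: datetime,
    max_age: timedelta,
) -> list[str]:
    """Return expected integrations that have never reported or have been silent too long."""
    stale = []
    for name in sorted(expected):
        ts = last_seen.get(name)
        # Missing entirely, or last event older than the allowed age: flag it.
        if ts is None or now - ts > max_age:
            stale.append(name)
    return stale
```

If `pagerduty` has never reported and `jira-sync` last fired 30 hours ago, a 24-hour `max_age` flags both, while integrations seen minutes ago pass. Running this on a schedule and posting the result to a team channel turns "keep a health check" into something that happens without anyone remembering to do it.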

Takeaway

Incident management tool integration turns a pile of siloed software into a cohesive workflow. Start with your biggest friction points, plug systems together with the lightest‑weight connectors you can, and iterate. Your future self, awake at 2 a.m., will thank you.
