The complete 6-step incident response lifecycle

The incident response process is a structured 6-phase framework that organizations use to detect, contain, and recover from security and operational incidents. Organizations with mature incident response processes reduce Mean Time to Resolution (MTTR) by 80% and save an average of $2.5 million per major incident.

Quick answer: What are the 6 phases of incident response?

- Preparation phase - Build response plans and train teams

- Detection & declaration phase - Identify and categorize incidents

- Containment phase - Isolate affected systems to prevent spread

- Investigation & eradication phase - Find root cause and remove threats

- Recovery phase - Restore normal operations systematically

- Post-incident review phase - Analyze and improve processes

Phase 1: Preparation - Building your incident response foundation

What does preparation include?

The preparation phase establishes your incident response capabilities before incidents occur. This phase typically reduces incident resolution time by 65% when properly implemented.

Key components:

- Incident response plan development
  - Create severity-specific playbooks (SEV1, SEV2, SEV3)
  - Define clear escalation paths
  - Establish communication protocols
- Team training & drills
  - Monthly tabletop exercises
  - Quarterly full-scale simulations
  - Role-specific training programs
- Tool & technology setup
  - Deploy monitoring systems
  - Configure alerting thresholds
  - Integrate communication platforms
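Configuring alerting thresholds usually means deciding how long a metric must stay bad before anyone is paged. The sketch below is a minimal illustration, not any specific tool's API: the `ThresholdAlert` class and the sample values are hypothetical, and it assumes one metric sample per evaluation interval. It fires only after several consecutive breaches, similar in spirit to the `for` duration in Prometheus alerting rules.

```python
from collections import deque


class ThresholdAlert:
    """Fires when a metric exceeds `threshold` for `required_breaches` consecutive samples.

    Hypothetical helper for illustration only.
    """

    def __init__(self, threshold: float, required_breaches: int) -> None:
        self.threshold = threshold
        self.required_breaches = required_breaches
        # Only the most recent samples matter, so keep a bounded window of breach flags.
        self.recent: deque[bool] = deque(maxlen=required_breaches)

    def observe(self, value: float) -> bool:
        """Record one sample and return True if the alert should fire now."""
        self.recent.append(value > self.threshold)
        return len(self.recent) == self.required_breaches and all(self.recent)


# Example: error rate (%) sampled once per minute, alert after 3 consecutive breaches.
alert = ThresholdAlert(threshold=5.0, required_breaches=3)
samples = [2.0, 7.5, 3.1, 6.0, 8.2, 9.4]
fired = [alert.observe(v) for v in samples]
print(fired)  # [False, False, False, False, False, True]
```

Raising `required_breaches` trades a slightly later alert for fewer pages caused by one-off spikes.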

Real-world case study: Monzo Bank

Scenario: Monzo implemented comprehensive preparation protocols in 2022

Result: 70% reduction in customer-impacting incidents

Key metric: Average detection time decreased from 12 minutes to 3 minutes

Quantitative impact of preparation

| Preparation element | MTTR reduction | Cost savings per incident |
|---|---|---|
| Documented playbooks | 45% | $125,000 |
| Regular training drills | 30% | $87,000 |
| Automated alerting | 55% | $156,000 |
| Pre-assigned roles | 25% | $62,000 |

Phase 2: Detection & declaration - Rapid incident identification

How do you detect and declare incidents?

Detection involves identifying anomalies through monitoring systems, while declaration formally initiates the response process.

Detection methods (ranked by share of incidents detected):

- Automated monitoring (detects 78% of incidents)
  - Application performance monitoring
  - Infrastructure health checks
  - Security information and event management (SIEM)
- Customer reports (detects 15% of incidents)
  - Support ticket escalation
  - Social media monitoring
  - Status page feedback
- Internal reports (detects 7% of incidents)
  - Engineer observations
  - Routine maintenance discoveries
  - Performance testing results

Declaration decision matrix

| Indicator | SEV1 (Critical) | SEV2 (Major) | SEV3 (Minor) |
|---|---|---|---|
| Users affected | >10,000 | 1,000–10,000 | <1,000 |
| Revenue impact | >$100K/hour | $10K–$100K/hour | <$10K/hour |
| Data risk | Customer data exposed | Internal data at risk | No data risk |
| Response time | <5 minutes | <15 minutes | <1 hour |
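The matrix can be encoded as a small function so responders get a consistent first severity call. This is a sketch under two assumptions the table does not state: the highest severity reached by any single indicator wins, and boundary values (exactly 1,000 or 10,000 users, exactly $10K or $100K per hour) fall into the SEV2 band. `ImpactAssessment`, `classify_severity`, and `RESPONSE_TIME_MINUTES` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Literal

# "customer" = customer data exposed, "internal" = internal data at risk, "none" = no data risk
DataRisk = Literal["customer", "internal", "none"]

# Response-time target per severity, in minutes (respond in under this many minutes)
RESPONSE_TIME_MINUTES = {"SEV1": 5, "SEV2": 15, "SEV3": 60}


@dataclass
class ImpactAssessment:
    users_affected: int
    revenue_impact_per_hour: float  # USD
    data_risk: DataRisk


def classify_severity(impact: ImpactAssessment) -> str:
    """Return the highest severity that any single indicator qualifies for."""
    if (
        impact.users_affected > 10_000
        or impact.revenue_impact_per_hour > 100_000
        or impact.data_risk == "customer"
    ):
        return "SEV1"
    if (
        impact.users_affected >= 1_000
        or impact.revenue_impact_per_hour >= 10_000
        or impact.data_risk == "internal"
    ):
        return "SEV2"
    return "SEV3"


severity = classify_severity(ImpactAssessment(2_500, 5_000, "none"))
print(severity, RESPONSE_TIME_MINUTES[severity])  # SEV2 15
```

Letting the worst indicator win errs toward over-declaring, which is usually cheaper than under-declaring; a team could instead require two indicators to agree.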

Real-world case study: Cloudflare

Scenario: June 2022 widespread outage affecting 19 data centers

Detection time: 73 seconds via automated monitoring

Declaration time: 4 minutes from detection

Impact prevented: Estimated $8.5 million in customer losses avoided through rapid detection

Phase 3: Containment - Preventing incident spread

What are the primary containment strategies?

Containment isolates the incident's impact while maintaining evidence for investigation.

Containment tactics by incident type (typical time to apply):

- Security incidents
  - Network segmentation (2-5 minutes)
  - Account suspension (immediate)
  - Traffic filtering (1-3 minutes)
- Performance incidents
  - Load balancer redistribution (30 seconds)
  - Cache clearing (immediate)
  - Database connection limiting (1-2 minutes)
- Data incidents
  - Access revocation (immediate)
  - Backup isolation (5-10 minutes)
  - Replication pause (1-2 minutes)

Containment effectiveness metrics

Average time to containment by method:

- Automated containment: 2.3 minutes

- Semi-automated containment: 8.7 minutes

- Manual containment: 24.5 minutes

Real-world case study: GitLab

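Scenario: January 2017 accidental deletion of the primary production database's data directory

Containment action: The engineer stopped the deletion command as soon as the mistake was spotted, but only about 4.5 GB of roughly 300 GB of data remained

Result: The database was restored from an LVM snapshot taken about six hours earlier, so roughly six hours of database changes (issues, merge requests, comments, and user accounts) were lost; Git repositories were not affected

Key learning: Several backup mechanisms had been failing silently, so fast containment alone could not prevent data loss; backups and restores need to be tested before an incident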

Phase 4: Investigation & eradication - Root cause analysis

How do you investigate and eliminate root causes?

Investigation identifies the incident's origin while eradication removes the underlying problem.

Investigation methodology:

- Data collection (0-30 minutes)
  - System log analysis
  - Performance metrics review
  - Change log correlation
- Timeline reconstruction (30-60 minutes)
  - Event sequence mapping
  - Trigger identification
  - Impact assessment
- Root cause identification (1-4 hours)
  - 5-whys analysis
  - Fishbone diagram creation
  - Hypothesis testing
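Change log correlation and timeline reconstruction can be partly automated. The following sketch merges events from several sources into one ordered timeline and lists the changes that landed shortly before the first error. The `Event` type, the source labels, and the 30-minute lookback are illustrative assumptions; the output is a list of hypotheses to test, not a root cause.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Event:
    timestamp: datetime
    source: str  # e.g. "deploy", "config", "app-log" (illustrative labels)
    description: str
    is_error: bool = False


def build_timeline(*streams: list[Event]) -> list[Event]:
    """Merge events from several sources into one chronologically ordered timeline."""
    return sorted((e for stream in streams for e in stream), key=lambda e: e.timestamp)


def suspect_changes(
    timeline: list[Event], lookback: timedelta, change_sources: set[str]
) -> list[Event]:
    """Return change events that happened within `lookback` before (or at) the first error."""
    first_error = next((e for e in timeline if e.is_error), None)
    if first_error is None:
        return []
    window_start = first_error.timestamp - lookback
    return [
        e
        for e in timeline
        if e.source in change_sources
        and window_start <= e.timestamp <= first_error.timestamp
    ]


t0 = datetime(2024, 1, 1, 12, 0)
changes = [
    Event(t0 - timedelta(hours=3), "deploy", "api v41 rolled out"),
    Event(t0 - timedelta(minutes=10), "config", "DB pool size lowered"),
]
logs = [
    Event(t0, "app-log", "DB connection timeouts", is_error=True),
    Event(t0 + timedelta(minutes=2), "app-log", "5xx rate at 12%", is_error=True),
]
timeline = build_timeline(changes, logs)
for e in suspect_changes(timeline, timedelta(minutes=30), {"deploy", "config"}):
    print(e.timestamp.isoformat(), e.source, e.description)
# 2024-01-01T11:50:00 config DB pool size lowered
```

Each suspect then feeds the hypothesis-testing step: revert or reproduce the change and check whether the symptoms follow.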

Eradication success rates by method

| Eradication method | Success rate | Average time | Recurrence rate |
|---|---|---|---|
| Code rollback | 95% | 5 minutes | 5% |
| Configuration fix | 88% | 15 minutes | 12% |
| Infrastructure patch | 92% | 30 minutes | 8% |
| Complete system rebuild | 99% | 2–4 hours | 1% |

Real-world case study: Amazon Web Services

Scenario: US-EAST-1 outage affecting S3 service (February 2017)

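Investigation finding: A typo in a command entered while debugging the S3 billing system removed a larger set of servers than intended

Eradication action: AWS changed the capacity-removal tool to remove capacity more slowly and to block removals that would take any subsystem below its minimum required capacity

Result: Safeguards against repeating the same error; third-party estimates put losses to S&P 500 companies during the outage at around $150 million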

Phase 5: Recovery - Restoring normal operations

What does systematic recovery involve?

Recovery restores services to full functionality while monitoring for incident recurrence.

Recovery sequence protocol:

- Core services restoration (Priority 1)
  - Authentication systems
  - Database connections
  - Network connectivity
- Application layer recovery (Priority 2)
  - API services
  - User interfaces
  - Third-party integrations
- Full functionality verification (Priority 3)
  - Performance benchmarking
  - User acceptance testing
  - Monitoring threshold validation
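The recovery sequence amounts to restoring in tiers and verifying health before moving on. A minimal sketch, assuming hypothetical `restore` and `is_healthy` callbacks supplied by your own tooling and illustrative step names; for verification steps, `restore` would run the check and `is_healthy` would report whether it passed. The loop halts instead of pushing ahead when a tier fails, which is the point of restoring in order.

```python
from typing import Callable

# Illustrative step names for each priority tier; replace with your own.
RECOVERY_PLAN: list[tuple[str, list[str]]] = [
    ("Priority 1: core services", ["auth", "database", "network"]),
    ("Priority 2: application layer", ["api", "web-ui", "integrations"]),
    ("Priority 3: verification", ["benchmarks", "acceptance-tests", "alert-thresholds"]),
]


def run_recovery(
    plan: list[tuple[str, list[str]]],
    restore: Callable[[str], None],
    is_healthy: Callable[[str], bool],
) -> list[str]:
    """Restore tiers in order and stop at the first tier with an unhealthy step.

    Returns the steps confirmed healthy before recovery stopped or finished.
    """
    confirmed: list[str] = []
    for tier_name, steps in plan:
        for step in steps:
            restore(step)
        unhealthy = [s for s in steps if not is_healthy(s)]
        if unhealthy:
            print(f"Halting at {tier_name}: unhealthy {unhealthy}")
            break
        confirmed.extend(steps)
    return confirmed


# Simulated run where third-party integrations are still failing.
healthy = {"auth", "database", "network", "api", "web-ui"}
done = run_recovery(RECOVERY_PLAN, restore=lambda step: None, is_healthy=lambda s: s in healthy)
# prints: Halting at Priority 2: application layer: unhealthy ['integrations']
print(done)  # ['auth', 'database', 'network']
```

The same loop works if the tiers are geographic regions rather than service layers.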

Recovery time benchmarks

Industry standards by service type:

- Financial services: 15-30 minutes

- E-commerce platforms: 30-60 minutes

- SaaS applications: 1-2 hours

- Internal tools: 2-4 hours

Real-world case study: Slack

Scenario: January 2021 global outage affecting all workspaces

Recovery approach: Phased restoration by geographic region

Time to full recovery: 3 hours 15 minutes

Key success factor: Gradual traffic restoration prevented cascade failures, maintaining 99.9% of restored services

Phase 6: Post-incident review - Continuous improvement

How do you conduct effective post-incident reviews?

Post-incident reviews identify improvement opportunities without assigning blame.

Review framework components:

- Incident timeline analysis
  - Detection delay assessment
  - Response time evaluation
  - Communication effectiveness review
- Impact quantification
  - Customer minutes lost
  - Revenue impact calculation
  - Reputation damage assessment
- Improvement action items
  - Process enhancements
  - Tool upgrades
  - Training needs identification
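Customer minutes lost, one of the impact measures in the review framework, is typically computed as the number of affected customers multiplied by how long they were affected, summed over each stretch of impact. A short sketch with made-up numbers, assuming impact is recorded as (start, end, customers affected) segments; the helper name is hypothetical:

```python
from datetime import datetime


def customer_minutes_lost(segments: list[tuple[datetime, datetime, int]]) -> float:
    """Sum (customers affected x minutes of impact) over each impact segment."""
    total = 0.0
    for start, end, customers in segments:
        minutes = (end - start).total_seconds() / 60
        total += customers * minutes
    return total


# Hypothetical incident: 20-minute full outage, then 40 minutes of partial degradation.
segments = [
    (datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 9, 20), 5_000),
    (datetime(2024, 1, 1, 9, 20), datetime(2024, 1, 1, 10, 0), 1_200),
]
print(customer_minutes_lost(segments))  # 148000.0
```

Splitting the incident into segments matters: treating the whole hour as a full outage for 5,000 customers would overstate the impact at 300,000 customer minutes.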

Post-incident review ROI metrics

| Review focus area | Average improvement | Future incident prevention rate |
|---|---|---|
| Process gaps | 40% MTTR reduction | 35% |
| Tool limitations | 50% detection improvement | 35% |
| Training needs | 30% response acceleration | 25% |
| Communication failures | 60% coordination improvement | 40% |

Real-world case study: Spotify

Scenario: March 2022 service degradation affecting 8 million users

Review finding: Alert fatigue caused 18-minute detection delay

Implemented change: Reduced alerts by 70%, implemented smart grouping

Result: 85% faster detection in subsequent incidents, preventing $2.3 million in potential losses
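The case study does not say how Spotify's grouping works. One common approach, sketched here with a hypothetical `Alert` record and a fixed five-minute window, collapses repeated alerts for the same service and alert name into a single notification:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Alert:
    fired_at: datetime
    service: str
    name: str


def group_alerts(alerts: list[Alert], window: timedelta) -> list[list[Alert]]:
    """Group alerts with the same (service, name) firing within `window` of the group's first alert."""
    groups: list[list[Alert]] = []
    latest_group: dict[tuple[str, str], list[Alert]] = {}
    for alert in sorted(alerts, key=lambda a: a.fired_at):
        key = (alert.service, alert.name)
        group = latest_group.get(key)
        if group is not None and alert.fired_at - group[0].fired_at <= window:
            group.append(alert)  # same problem, still inside the window: no new page
        else:
            group = [alert]  # first alert of a new group: this one gets paged
            latest_group[key] = group
            groups.append(group)
    return groups


t = datetime(2024, 1, 1, 10, 0)
alerts = [
    Alert(t, "playback", "HighLatency"),
    Alert(t + timedelta(minutes=1), "playback", "HighLatency"),
    Alert(t + timedelta(minutes=2), "search", "ErrorRate"),
    Alert(t + timedelta(minutes=3), "playback", "HighLatency"),
    Alert(t + timedelta(minutes=20), "playback", "HighLatency"),
]
groups = group_alerts(alerts, timedelta(minutes=5))
print(len(alerts), "alerts ->", len(groups), "notifications")  # 5 alerts -> 3 notifications
```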

How does each phase impact overall incident response?

The 6-phase incident response process creates a feedback loop where each phase strengthens the others:

Phase interdependencies:

- Preparation enables faster detection through better monitoring

- Detection quality determines containment speed

- Containment effectiveness impacts investigation complexity

- Investigation thoroughness drives recovery confidence

- Recovery metrics inform post-incident review priorities

- Post-incident reviews improve future preparation

Frequently asked questions about incident response

Q1: How long should each incident response phase take?

A: Phase duration varies by incident severity:

- SEV1 Incidents: Detection (< 5 min), Containment (< 15 min), Resolution (< 2 hours)

- SEV2 Incidents: Detection (< 15 min), Containment (< 30 min), Resolution (< 4 hours)

- SEV3 Incidents: Detection (< 1 hour), Containment (< 2 hours), Resolution (< 8 hours)
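These targets are easy to check automatically after each incident. A sketch, assuming each duration is measured from the start of the incident and that the targets are strict upper bounds; `PHASE_TARGETS` and `missed_targets` are hypothetical names:

```python
from datetime import timedelta

PHASES = ("detection", "containment", "resolution")

# Upper bounds per severity: (detection, containment, resolution)
PHASE_TARGETS = {
    "SEV1": (timedelta(minutes=5), timedelta(minutes=15), timedelta(hours=2)),
    "SEV2": (timedelta(minutes=15), timedelta(minutes=30), timedelta(hours=4)),
    "SEV3": (timedelta(hours=1), timedelta(hours=2), timedelta(hours=8)),
}


def missed_targets(severity: str, actual: dict[str, timedelta]) -> list[str]:
    """Return the phases whose actual duration was not strictly below its target."""
    targets = dict(zip(PHASES, PHASE_TARGETS[severity]))
    return [phase for phase in PHASES if actual[phase] >= targets[phase]]


actual = {
    "detection": timedelta(minutes=9),
    "containment": timedelta(minutes=41),
    "resolution": timedelta(hours=3),
}
print(missed_targets("SEV2", actual))  # ['containment']
```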

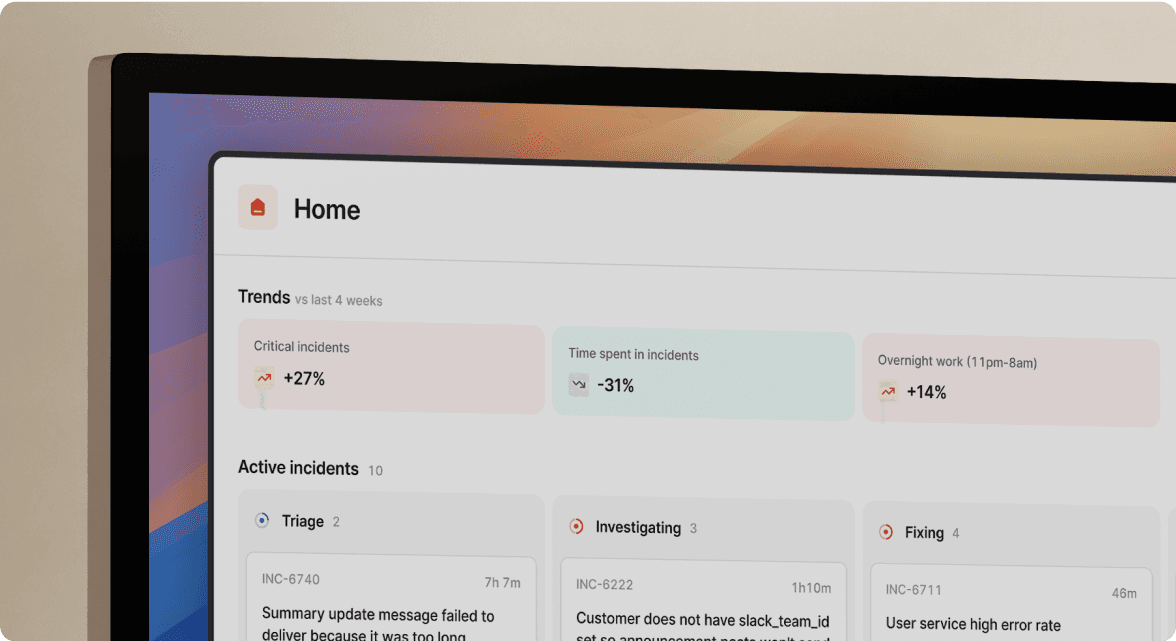

Q2: What tools are essential for incident response?

A: Core incident response toolset includes:

- Monitoring & Alerting: Datadog, New Relic, Prometheus

- Incident Management: incident.io, PagerDuty, Opsgenie

- Communication: Slack, Microsoft Teams, Zoom

- Documentation: Confluence, Notion, GitHub Wiki

- Post-Incident Analysis: incident.io, Blameless, Jeli

Q3: How many people should be on an incident response team?

A: Optimal team size by organization scale:

- Startups (< 50 employees): 3-5 responders

- Mid-size (50-500 employees): 8-12 responders

- Enterprise (500+ employees): 15-25 responders per shift

Q4: What's the difference between incident response and disaster recovery?

A: Key distinctions:

- Incident Response: Addresses immediate threats, focuses on containment and resolution (hours to days)

- Disaster Recovery: Restores operations after catastrophic events, involves business continuity (days to weeks)

Q5: How do you measure incident response effectiveness?

A: Primary metrics include:

- Mean Time to Detect (MTTD): Industry benchmark < 10 minutes

- Mean Time to Respond: Industry benchmark < 1 hour (not to be confused with Mean Time to Resolution, the MTTR figure used elsewhere in this article)

- Customer Impact Duration: Target < 30 minutes for critical services

- Incident Recurrence Rate: Target < 5% within 30 days
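A minimal sketch of how MTTD, Mean Time to Resolution, and the recurrence rate could be computed from incident records. The `IncidentRecord` fields are assumptions: both durations are measured from when impact began, and a recurrence is an incident whose root-cause label matches one seen within the previous 30 days.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class IncidentRecord:
    started_at: datetime  # when impact began
    detected_at: datetime  # when monitoring or a person noticed it
    resolved_at: datetime  # when normal service was restored
    root_cause: str  # label used to spot recurrences (hypothetical field)


def summarize(
    incidents: list[IncidentRecord], recurrence_window: timedelta = timedelta(days=30)
) -> dict[str, float]:
    """Compute MTTD, MTTR (Mean Time to Resolution), and recurrence rate.

    Assumes at least one incident.
    """
    ordered = sorted(incidents, key=lambda i: i.started_at)
    mttd = mean((i.detected_at - i.started_at).total_seconds() / 60 for i in ordered)
    mttr = mean((i.resolved_at - i.started_at).total_seconds() / 60 for i in ordered)
    last_seen: dict[str, datetime] = {}
    recurrences = 0
    for incident in ordered:
        previous = last_seen.get(incident.root_cause)
        if previous is not None and incident.started_at - previous <= recurrence_window:
            recurrences += 1
        last_seen[incident.root_cause] = incident.started_at
    return {
        "mttd_minutes": mttd,
        "mttr_minutes": mttr,
        "recurrence_rate": recurrences / len(ordered),
    }


incidents = [
    IncidentRecord(datetime(2024, 5, 1, 10, 0), datetime(2024, 5, 1, 10, 4),
                   datetime(2024, 5, 1, 10, 50), "db-pool-exhaustion"),
    IncidentRecord(datetime(2024, 5, 10, 14, 0), datetime(2024, 5, 10, 14, 10),
                   datetime(2024, 5, 10, 15, 10), "expired-certificate"),
    IncidentRecord(datetime(2024, 5, 20, 9, 0), datetime(2024, 5, 20, 9, 1),
                   datetime(2024, 5, 20, 9, 31), "db-pool-exhaustion"),
]
s = summarize(incidents)
print(f"MTTD {s['mttd_minutes']:.1f} min, MTTR {s['mttr_minutes']:.1f} min, "
      f"recurrence {s['recurrence_rate']:.0%}")
# MTTD 5.0 min, MTTR 50.3 min, recurrence 33%
```

Recurrence detection is only as good as the root-cause labels, so keeping them consistent across post-incident reviews matters more than the arithmetic.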

Key takeaways: Building effective incident response

- Preparation reduces incident resolution time by 65% through playbooks, training, and tools

- Automated monitoring detects 78% of incidents, versus 15% from customer reports and 7% from internal reports

- Automated containment takes 2.3 minutes on average, compared with 24.5 minutes for manual containment

- Root cause analysis guides the eradication method, and recurrence ranges from 1% (complete system rebuild) to 12% (configuration fix)

- Phased recovery, restoring core services before the application layer, prevents cascade failures

- Post-incident reviews that address process gaps deliver a 40% MTTR reduction for future incidents

Organizations implementing all 6 phases see average improvements of:

- 80% reduction in MTTR

- $2.5 million saved per major incident

- 35% fewer recurring incidents

- 70% reduction in customer impact

The incident response process is not a one-time implementation but an iterative cycle where each incident makes your organization more resilient. Start with basic preparation today, and continuously refine your process through real-world experience and post-incident learning.
