Opsgenie integrations migration guide: Datadog, Prometheus, AWS, and Grafana

Updated December 31, 2025

TL;DR: Atlassian is shutting down Opsgenie by April 5, 2027. Migrating your Datadog, Prometheus, AWS CloudWatch, and Grafana alerts to incident.io takes days, not months. Use a 14-day parallel run where both systems receive alerts simultaneously to validate routing rules without risk. Generate new webhook URLs in incident.io, configure dual-send from your monitoring tools, test escalation paths, then cut over. This guide covers webhook mapping, payload transformation, routing rule translation, and a validation checklist to ensure zero alert loss during the switch.

Why this migration matters now

Atlassian set a hard deadline in the Opsgenie sunset timeline: sales ended June 4, 2025, and the platform shuts down April 5, 2027. You have a forced migration ahead, but the real question is not whether to migrate but where and how to do it without dropping alerts.

The migration looks daunting at first. Datadog fires alerts into Opsgenie, Prometheus routes through Alertmanager, AWS CloudWatch pushes SNS notifications, Grafana dashboards wire into on-call schedules. Rewiring all of this feels like changing engines mid-flight. But modern alert integrations are mostly webhooks with JSON payloads. If you migrate strategically using a parallel-run approach, you validate your new setup while keeping Opsgenie as a safety net. No downtime. No missed pages. No 3 AM surprises.

This guide walks through the technical specifics of reconnecting your monitoring stack to incident.io: webhook URL generation, payload mapping, routing rule translation, and a step-by-step validation checklist.

Understanding the Opsgenie sunset timeline

Atlassian has set clear deadlines in their shutdown announcement: new Opsgenie purchases stopped June 4, 2025, and the platform shuts down entirely April 5, 2027. Existing customers can renew subscriptions and add users until the final shutdown, but Atlassian actively pushes teams toward Jira Service Management or Compass.

The problem with the JSM migration path is that it is service-desk-first, not purpose-built for real-time incident response. Complex JSM implementations often take months to configure, and the platform lacks the Slack-native coordination that modern SRE teams expect.

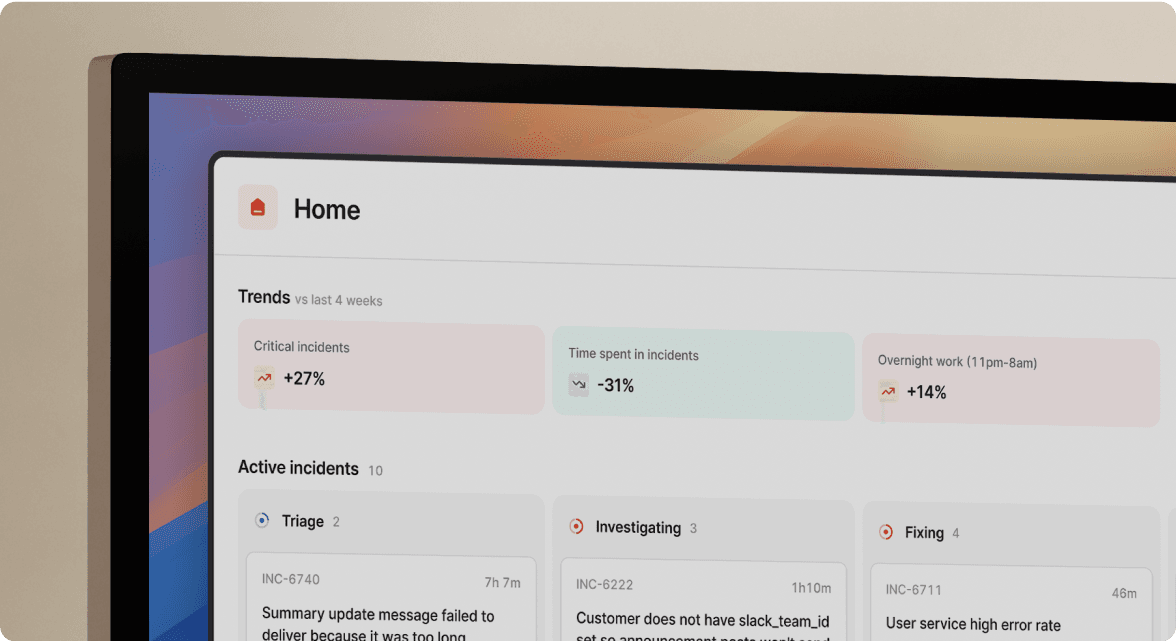

We built incident.io as a Slack-native alternative that unifies on-call scheduling, alert routing, incident response, and post-mortem generation in one platform. You get the full incident lifecycle in Slack, not just alert notifications. The migration from Opsgenie to incident.io typically takes days, not months, because the core alert integrations are straightforward webhook configurations.

The 14-day parallel run strategy

The safest way to migrate alert integrations is to run both systems simultaneously for a validation period. We recommend between one day and two weeks, depending on your incident volume and team size; the plan below uses the full 14 days.

Here is how the parallel run works in practice:

- Phase 1: Dual-send setup (Day 1). Configure your monitoring tools to send alerts to both Opsgenie and incident.io simultaneously. You make no changes to your existing Opsgenie configuration. You are simply adding a second webhook destination in Datadog, Prometheus, AWS SNS, or Grafana.

- Phase 2: Shadow validation (Days 2-7). We receive alerts and create incidents automatically, but your team continues responding in Opsgenie. Use this week to verify that alerts route correctly, escalation paths trigger as expected, and no alerts are dropped.

- Phase 3: Live cutover (Days 8-14). Switch your team to respond in incident.io while keeping Opsgenie as a backup. If any routing issues surface, you still have Opsgenie running to catch missed pages.

- Phase 4: Decommission (Day 15). Disable Opsgenie webhooks and cancel your subscription.

Validation checkpoints before cutover

Before disabling Opsgenie completely, confirm these four checkpoints:

- Alert Delivery: Every alert source (Datadog, Prometheus, CloudWatch, Grafana) successfully forwards alerts to incident.io with no webhook errors.

- On-Call Schedule Accuracy: The on-call schedules in incident.io match your Opsgenie configuration exactly, including rotation times and override handling.

- Escalation Path Testing: Send test alerts and verify that escalations notify the correct people at the correct times with the correct urgency (Slack message vs. SMS vs. phone call).

- Status Page Automation: If you use status pages, confirm they update automatically when incidents resolve in incident.io.
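One way to check the alert delivery checkpoint during shadow validation is to compare the alerts each system recorded over the same time window. The sketch below assumes you have already exported a list of alert identifiers (for example, deduplication keys) from each system; the function name and the shape of the input are illustrative, not part of either product's API.

```python
from typing import Dict, Iterable, List


def find_missing_alerts(opsgenie_keys: Iterable[str],
                        incidentio_keys: Iterable[str]) -> Dict[str, List[str]]:
    """Compare alert identifiers seen by each system during the parallel run.

    Both inputs are assumed to be identifiers (such as deduplication keys)
    collected over the same time window.
    """
    old = set(opsgenie_keys)
    new = set(incidentio_keys)
    return {
        # Alerts Opsgenie received that never reached incident.io.
        "missing_in_incidentio": sorted(old - new),
        # Alerts only incident.io saw (often a source not wired to Opsgenie).
        "missing_in_opsgenie": sorted(new - old),
    }
```

An empty `missing_in_incidentio` list over several days of real traffic is reasonable evidence that dual-send is working for every source.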

Step-by-step: Migrating key integrations

Migrating Datadog alerts

Datadog is the most common monitoring tool integrated with Opsgenie. We built native Datadog integration that auto-parses alert payloads to populate incident details, so you spend zero time mapping fields manually.

Configuration Steps:

- Generate webhook URL in incident.io

- Navigate to Alerts > Sources in the incident.io dashboard.

- Click New Alert Source and select Datadog.

- Copy the unique webhook URL provided.

- Add Webhook in Datadog

- In your Datadog account, go to Integrations > Webhooks.

- Click New to create a webhook.

- Name it "incidentio" so that it matches the @webhook-incidentio handle used in the notification example below.

- Paste the incident.io webhook URL into the URL field.

- Leave authentication empty (the URL contains the token).

- Update alert notification rules

- Edit your existing Datadog monitors.

- Add the new incident.io webhook as a notification target alongside your existing Opsgenie integration.

- Example notification configuration during the parallel run: `@webhook-incidentio @opsgenie-api-integration`

- Payload mapping

Datadog's alert payload automatically maps to our fields:

- `$EVENT_TITLE` → Incident title

- `$ALERT_STATUS` → Incident status (firing/resolved)

- `$HOSTNAME` and `$TAGS` → Custom attributes for routing

- Test the integration

- Trigger a test alert in Datadog.

- Verify it appears in our Alerts tab.

- Confirm the alert creates an incident and routes to the correct team.
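If you have many monitors, adding the second notification handle by hand gets tedious. The sketch below shows only the string handling: it appends a handle to a monitor message when it is not already present. The function name is hypothetical, and fetching and updating monitors through the Datadog API is left out.

```python
def add_notification_handle(message: str, handle: str = "@webhook-incidentio") -> str:
    """Append a notification handle to a monitor message if it is missing.

    The default handle assumes the Datadog webhook was named "incidentio".
    Existing handles (such as the Opsgenie one) are left untouched so both
    systems keep receiving the alert during the parallel run.
    """
    if handle in message.split():
        return message  # already dual-sending, nothing to change
    return f"{message.rstrip()} {handle}".strip()
```

Running it twice on the same message returns the same result, so it is safe to re-run across all monitors.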

Watch our full platform demo to see how alerts flow from Datadog into incident.io and trigger Slack channels automatically.

Migrating Prometheus and Alertmanager

Prometheus uses Alertmanager to route alerts. We built a native Alertmanager notifier that simplifies the integration to a few lines in your alertmanager.yml config.

Configuration Steps:

- Generate alert source in incident.io

- Go to Alerts > Sources in incident.io.

- Create a new alert source for Prometheus.

- Copy the webhook URL and alert source token.

- Edit `alertmanager.yml`

Add a new receiver to your Alertmanager configuration:

    receivers:
      - name: 'incidentio-notifications'
        incidentio_configs:
          - url: '<your-incident-io-webhook-url>'
            alert_source_token: '<your-alert-source-token>'

- Update routing rules

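Configure your `route` section to direct alerts to the new receiver:

    route:
      receiver: 'incidentio-notifications'
      routes:
        - match:
            severity: 'critical'
          receiver: 'incidentio-notifications'

During the parallel run, keep your existing Opsgenie receiver and routes in place as well. Alertmanager stops at the first matching route unless that route sets `continue: true`, so set it on whichever route comes first if you want an alert to reach both receivers.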

- Reload Alertmanager

Apply the configuration changes:

    kill -HUP $(pidof alertmanager)

- Verify alert flow

- Trigger a test alert from Prometheus.

- Check that it appears in incident.io.
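To exercise the new receiver without waiting for a real rule to fire, you can also post a synthetic alert straight to Alertmanager's v2 API, which bypasses Prometheus itself. The sketch below only builds the request body; the alert name, labels, and helper name are placeholders chosen for illustration.

```python
import json
from datetime import datetime, timedelta, timezone


def build_test_alert(alertname="MigrationTestAlert", severity="critical",
                     minutes=5, now=None):
    """Build a body for POST /api/v2/alerts on Alertmanager.

    The labels are placeholders; use labels that your routing tree
    actually matches on (for example severity=critical).
    """
    now = now or datetime.now(timezone.utc)
    return [{
        "labels": {"alertname": alertname, "severity": severity},
        "annotations": {"summary": "Synthetic alert for the migration parallel run"},
        # Alertmanager expects RFC 3339 timestamps.
        "startsAt": now.isoformat(),
        "endsAt": (now + timedelta(minutes=minutes)).isoformat(),
    }]


body = json.dumps(build_test_alert())
```

Send `body` with `Content-Type: application/json` to your Alertmanager instance, for example `http://localhost:9093/api/v2/alerts` on a default local install. The alert resolves on its own once `endsAt` passes.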

If you are new to the Prometheus and Grafana architecture, this five-minute video breakdown shows how alerts flow from Prometheus through Alertmanager to external systems.

Migrating AWS CloudWatch alarms

AWS CloudWatch uses SNS (Simple Notification Service) to push alerts to external systems. The migration involves creating an SNS topic that forwards to an incident.io webhook.

Configuration Steps:

- Generate webhook URL in incident.io

- Navigate to Alerts > Sources.

- Click New Alert Source and select AWS SNS.

- Copy the HTTPS webhook URL.

- Create SNS topic

- In the AWS Management Console, go to SNS > Topics.

- Click Create topic and select Standard.

- Name it "incident-io-alerts."

- Create HTTPS subscription

- Within your new SNS topic, click Create subscription.

- Set Protocol to HTTPS.

- Paste the incident.io webhook URL as the Endpoint.

- AWS sends a subscription confirmation request to the webhook. We automatically confirm it, so you see the status change to Confirmed within seconds.

- Update CloudWatch alarm actions

- In CloudWatch > Alarms, edit your existing alarms.

- In the Notifications section, add your new SNS topic as an action for the `ALARM` and `OK` states.

- Test with a test alarm

- Create a test alarm that triggers immediately.

- Verify the alert reaches incident.io.
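When debugging this path, it helps to know what SNS actually delivers: a JSON envelope whose `Message` field is itself a JSON string describing the alarm. The sketch below unpacks that envelope and maps the alarm state to a firing/resolved status. The mapping is an illustration of the payload shape, not a description of how incident.io parses it internally.

```python
import json

# Illustrative mapping from CloudWatch alarm states to alert statuses.
STATE_TO_STATUS = {"ALARM": "firing", "OK": "resolved"}


def summarize_cloudwatch_notification(sns_body: str) -> dict:
    """Extract the alarm name, status, and reason from an SNS notification."""
    envelope = json.loads(sns_body)
    alarm = json.loads(envelope["Message"])  # Message is a JSON-encoded string
    state = alarm["NewStateValue"]
    return {
        "title": alarm["AlarmName"],
        # Any other state (such as INSUFFICIENT_DATA) is passed through lowercased.
        "status": STATE_TO_STATUS.get(state, state.lower()),
        "description": alarm.get("NewStateReason", ""),
    }
```

Because only the `ALARM` and `OK` states are wired to the topic in the steps above, other states should not normally arrive.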

We published a recap of AWS re:Invent 2025 observability sessions that covers modern alerting patterns for CloudWatch, EventBridge, and SNS.

Migrating Grafana alerts

Grafana's alerting system can send notifications to external webhooks. The setup is similar to other integrations.

Configuration Steps:

- Generate webhook URL in incident.io

- In Alerts > Sources, create a new alert source for Grafana.

- Create webhook contact point in Grafana

- Go to Alerting > Contact points in Grafana.

- Click New contact point.

- Select Webhook as the type.

- Paste the incident.io webhook URL.

- Configure notification policies

- Navigate to Notification policies.

- Create or edit a policy to use the incident.io contact point for alerts matching specific labels (e.g., `severity=critical`).

- Test alert delivery

- Trigger a test alert from Grafana.

- Confirm it creates an incident in incident.io.

Handling custom webhooks

If you have in-house monitoring tools or custom scripts that currently send alerts to Opsgenie, you can configure them to send to incident.io using the generic HTTP alert source.

Configuration Process:

- Generate HTTP alert source

- In incident.io, go to Alerts > Sources and select HTTP.

- Copy the webhook URL and authentication token.

- Configure your custom tool

- Send an HTTP POST request to the incident.io URL.

- Include the `Authorization` header with your token: `Bearer <your-token>`.

- Set `Content-Type: application/json`.

- Construct the JSON payload

Example payload (`title`, `status`, and `deduplication_key` are the required fields; `description` and `metadata` add optional context):

    {
      "title": "Database connection pool exhaustion on prod-db-01",
      "status": "firing",
      "deduplication_key": "prod-db-01-connection-pool",
      "description": "Connection pool utilization at 98% for 5 minutes",
      "metadata": {
        "host": "prod-db-01",
        "service": "postgres",
        "team": "platform"
      }
    }

- Test and validate

- Send a test payload to the webhook.

- Verify the incident appears in incident.io with the correct metadata.
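The request described above can be sketched with the Python standard library alone. The URL and token are whatever your HTTP alert source shows; the helper name is hypothetical, and the example only builds the request so you can inspect it before sending.

```python
import json
import urllib.request


def build_alert_request(url: str, token: str, payload: dict) -> urllib.request.Request:
    """Build the POST request for a generic HTTP alert source.

    url and token come from the alert source you created in incident.io.
    """
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To send it, pass the result to `urllib.request.urlopen(request, timeout=10)` and check for a 2xx response. Reusing the same `deduplication_key` with `"status": "resolved"` is the natural way to close the alert from the same script.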

For additional examples of custom webhook integrations, see the groundcover documentation for payload structures and error handling patterns.

Replicating routing rules and schedules

Opsgenie routing rules and escalation policies need to be manually recreated in incident.io. While this requires some effort, it is also an opportunity to clean up outdated routing logic and eliminate "zombie alerts" that nobody acts on.

Terminology mapping: Opsgenie to incident.io

Here is how Opsgenie concepts map to incident.io:

| Opsgenie Term | incident.io Equivalent | Key Difference |
|---|---|---|
| Team | Team | Direct 1:1 mapping. Import your Opsgenie teams using the automated importer. |
| Routing Rule | Alert Route | Our routing is more flexible, supporting catalog-based routing (by service, team, or feature). |
| Escalation Policy | Escalation Path | We support priority-based escalation and working-hours awareness. Must be recreated manually. |
| Alert Deduplication | Alert Grouping | We use `deduplication_key` in payloads and intelligent grouping logic to bundle related alerts. |

Migrating on-call schedules

We built an automated schedule importer that handles user accounts and on-call rotations from Opsgenie, so you do not spend days manually recreating shifts.

Import Process:

- Export from Opsgenie

- Use the Opsgenie REST API to export schedules: `GET /v2/schedules`.

- Export users: `GET /v2/users`.

- Export teams: `GET /v2/teams`.

- Import into incident.io

- In incident.io, go to Settings > Import from Opsgenie.

- Provide your Opsgenie API key.

- The importer fetches users, teams, and schedules and recreates them automatically.

- Manually recreate escalation paths

- Escalation policies are not automatically imported.

- Go to Escalations > New Escalation Path.

- Recreate each Opsgenie escalation policy as an incident.io escalation path, configuring notification urgency (Slack, SMS, phone call) and time-to-acknowledge thresholds.

Read our guide on designing smarter on-call schedules to learn how to reduce burnout and improve response times once your migration is complete.

Translating routing rules

Opsgenie's team-based routing can be replicated using our alert routes, but you can also take advantage of our catalog-based routing to make rules more dynamic.

Example translation:

Opsgenie Rule: "If an alert has the tag service:api and priority is P1, escalate to the API On-Call schedule."

incident.io configuration:

- Create a custom attribute named `service` in your alert source.

- Create an alert route that matches alerts where `service` equals `api` and `priority` equals `P1`.

- Link this route to an escalation path that notifies the API On-Call schedule.
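Before recreating rules in the UI, it can help to write them down as data and check them against sample alerts. The sketch below models the translation above as simple equality conditions evaluated in order. It is a way to reason about your rules, not incident.io's routing engine, and the escalation path names are placeholders.

```python
def route_matches(attributes: dict, conditions: dict) -> bool:
    """True when every condition equals the alert's attribute value."""
    return all(attributes.get(key) == value for key, value in conditions.items())


def pick_escalation(attributes: dict, routes: list, default=None):
    """Return the target of the first route whose conditions all match."""
    for conditions, target in routes:
        if route_matches(attributes, conditions):
            return target
    return default


# The Opsgenie rule from the example, expressed as (conditions, target).
ROUTES = [
    ({"service": "api", "priority": "P1"}, "API On-Call"),
]
```

Feeding a handful of real alert attribute sets through `pick_escalation` makes gaps visible early, such as alerts that match no route and fall through to the default.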

Watch our on-call setup walkthrough to see how to configure escalation paths, notification urgency, and time-to-acknowledge thresholds.

Data export and historical context

When migrating from Opsgenie, you will need to export historical data for compliance or post-mortem reference.

Critical data to export

Export the following in priority order. Only the Priority 1 items are required for incident.io to function correctly:

Priority 1 (Required for operations):

- Users (API: `GET /v2/users`)

- Teams (API: `GET /v2/teams`)

- Schedules (API: `GET /v2/schedules`)

- Escalation Policies (API: `GET /v2/escalations`)

Priority 2 (Useful for historical analysis):

- Incidents (API: `GET /v1/incidents`; the Opsgenie Incident API is versioned separately from the v2 endpoints above): Export the last 12 months for trending analysis.

Priority 3 (Nice to have):

- Runbooks and playbooks - Copy any documentation linked in Opsgenie to incident.io's service catalog.
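The list endpoints above return paged results, so an export script needs to follow the paging links until none remain. The sketch below assumes the standard Opsgenie base URL (EU accounts use a different host), `GenieKey` authentication, and a `paging.next` link in each response; the function names are illustrative, and the fetcher is injectable so the paging logic can be tested offline.

```python
import json
import urllib.request

OPSGENIE_API = "https://api.opsgenie.com"  # assumption: US instance


def fetch_json(url: str, api_key: str) -> dict:
    """GET one page from the Opsgenie REST API."""
    request = urllib.request.Request(
        url, headers={"Authorization": f"GenieKey {api_key}"})
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def export_all(path: str, api_key: str, fetch=fetch_json) -> list:
    """Collect every item from a list endpoint such as /v2/users."""
    items = []
    url = OPSGENIE_API + path
    while url:
        page = fetch(url, api_key)
        items.extend(page.get("data", []))
        # Follow the next-page link until the API stops returning one.
        url = page.get("paging", {}).get("next")
    return items
```

For example, `export_all("/v2/schedules", api_key)` returns a list you can write to disk with `json.dump` and keep as a record of the pre-migration configuration.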

Our full Opsgenie migration playbook includes API export scripts and guidance on what data to preserve vs. what to leave behind.

Minimizing downtime and ensuring adoption

The technical migration is only half the challenge. Your team needs to adopt the new system without confusion or resistance.

Training and onboarding

We designed incident.io to be intuitive, but your team still needs to understand the new workflow.

Week 1: Announce and demonstrate

- Send a company-wide announcement explaining the migration timeline and rationale.

- Host a 20-minute demo showing how to declare an incident, escalate, and resolve using `/inc` commands in Slack.

Week 2: Shadow mode

- Run the parallel system with Opsgenie still active.

- Encourage engineers to declare lower-severity incidents in incident.io using `/inc declare` to get comfortable with the workflow.

- Keep responding to P0/P1 incidents in Opsgenie until Week 3.

Week 3: Go live

- Cut over to incident.io as the primary system.

- Keep Opsgenie running for one more week as a backup.

Week 4: Decommission

- Disable Opsgenie webhooks and cancel the subscription.

Read our 2025 guide to preventing alert fatigue to learn how to reduce noise and improve on-call experience after your migration.

Migration comparison: incident.io vs. JSM vs. PagerDuty

| Feature | incident.io | Jira Service Management | PagerDuty |
|---|---|---|---|
| Setup Time | Days | Months for complex setups | Varies by configuration |
| Cost (100 users) | $4,500/month (Pro + On-call) | $2,000-2,100/month (Standard) | Typically higher with add-ons |
| Slack-Native | Yes, entire workflow in Slack | No, service desk first | Notifications only |
| Migration Support | Automated importer for schedules | Automated tool from Atlassian | Manual configuration |

We published a detailed pricing and ROI comparison showing the total cost of ownership for incident.io vs. PagerDuty vs. Opsgenie over 12 months, including hidden costs like per-incident fees and add-on charges.

Troubleshooting common migration issues

Failed webhooks: 401 unauthorized

Symptom: Monitoring tool reports webhook failures with HTTP 401 status.

Root Cause: Invalid or expired API token in the Authorization header.

Resolution:

- Generate a new API token in incident.io settings.

- Update the webhook configuration in your monitoring tool with the new token: `Authorization: Bearer <your-token>`.

- Test with a sample alert.

Failed webhooks: 422 unprocessable entity

Symptom: Webhooks rejected with HTTP 422 and error message {"error":"invalid_payload"}.

Root Cause: JSON payload does not match our expected schema.

Resolution:

- Inspect the raw payload being sent by your monitoring tool.

- Compare it to our alert source API documentation for required fields (`title`, `status`, `deduplication_key`).

- Correct the payload structure and resend.
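A quick local check catches most of these rejections before the payload ever leaves your tool. The sketch below only verifies that the three required fields named above are present and non-empty; it does not replicate the full server-side schema, and the function name is hypothetical.

```python
REQUIRED_FIELDS = ("title", "status", "deduplication_key")


def missing_required_fields(payload: dict) -> list:
    """Return the required fields that are absent or empty in the payload."""
    return [field for field in REQUIRED_FIELDS if not payload.get(field)]
```

Calling it in the sending script and logging the result before each POST makes it obvious which field a custom tool is dropping.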

Alerts received but not routing correctly

Symptom: Alerts appear in our Alerts tab but do not trigger the correct escalation path.

Root Cause: Alert route conditions do not match the incoming alert's attributes.

Resolution:

- Click on the alert in incident.io to view its full JSON payload and extracted attributes.

- Review your alert route conditions and ensure they match the actual attributes.

- Use our alert route testing feature to validate your logic before sending live alerts.

Watch our AI-powered alert grouping demo to see how we reduce noise by intelligently bundling related alerts based on service, timing, and common attributes.

Moving forward with confidence

Migrating alert integrations from Opsgenie to incident.io is not a high-risk operation when you use a parallel-run strategy. By running both systems for 14 days, you validate routing rules, test escalation paths, and ensure zero alert loss before cutting over. The technical work of reconnecting Datadog, Prometheus, AWS CloudWatch, and Grafana is mostly webhook configuration and JSON payload mapping. The harder part is getting your team comfortable with the new system, which is why the parallel run is so valuable. Your engineers explore incident.io during lower-severity incidents while knowing Opsgenie is still running as a backup.

If you are facing the Opsgenie sunset deadline, now is the time to start planning your migration. Book a demo to see our platform in action and get a customized migration plan for your team.

Key terminology

Alert Route: A configuration in incident.io that determines where an incoming alert should be sent based on its attributes (severity, service, team, etc.). Equivalent to Opsgenie's routing rules but with more flexible catalog-based routing options.

Escalation Path: A multi-level notification sequence that defines who gets paged and when if an alert is not acknowledged. Equivalent to Opsgenie's escalation policies but with more granular control over notification urgency.

Deduplication Key: A unique identifier in an alert payload that prevents duplicate incidents from being created for the same underlying issue. We use this to group related alerts.

Parallel Run: A migration strategy where both the old and new systems operate simultaneously for a validation period, allowing teams to verify the new setup without risk of missed alerts.

Webhook: An HTTP endpoint that receives JSON payloads from monitoring tools when alerts fire. We generate unique webhook URLs for each alert source.

Alert Grouping: The process of bundling related alerts together to reduce noise and prevent alert fatigue. We automatically group alerts based on deduplication keys and service metadata.

Service Catalog: A centralized inventory of services, systems, and features in incident.io that enables dynamic routing based on service ownership rather than static team assignments.

Time to Acknowledge (TTA): The maximum time an on-call engineer has to acknowledge an alert before it escalates to the next level of the escalation path.
