5 best on-call features for SRE teams: How to choose the best incident management platform

Updated December 09, 2025

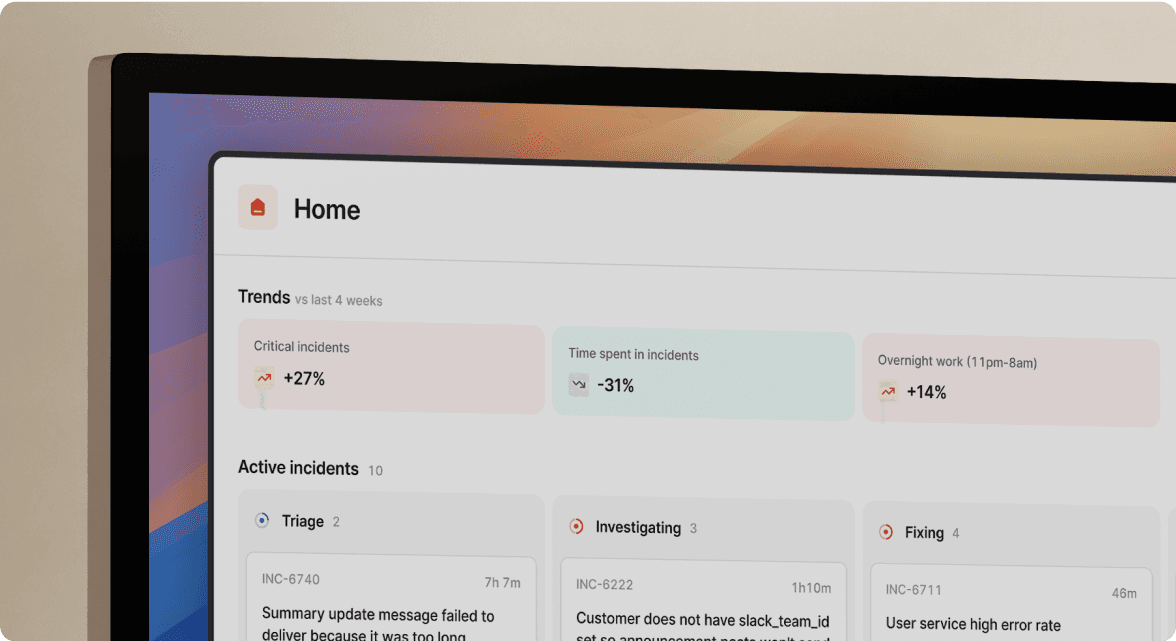

TL;DR: Traditional on-call tools create a "coordination tax" costing 10-15 minutes per incident before troubleshooting begins. That's up to 225 minutes monthly for teams handling 15 incidents. incident.io eliminates this by unifying on-call scheduling with Slack-native response workflows, reducing coordination overhead by up to 80% compared to PagerDuty (typically $30K-50K+ annually for 50 users). The key: intelligent escalation, burnout analytics, and slash commands that let engineers acknowledge, escalate, and resolve without leaving Slack.

How traditional on-call tools add 10-15 minutes to every incident

Traditional on-call tools create a "coordination tax" that costs engineering teams 10-15 minutes per incident before troubleshooting even begins. Here's what that looks like: PagerDuty fires an alert. You acknowledge it in the app. Then you manually open Slack to coordinate, switch to Datadog to check metrics, hunt through Confluence for the runbook, and finally start investigating. Twelve minutes have passed assembling context that should have been automatic.

For teams handling 15 incidents monthly, this overhead burns up to 225 minutes per month (15 incidents × 15 minutes). At a loaded engineer cost of $150 per hour, that's $562.50 monthly in pure coordination waste from context-switching. The gap between "getting paged" and "starting the fix" exists because vendors built traditional on-call tools for a single job: reliable alert delivery.
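The arithmetic behind those figures can be reproduced in a few lines. This is a minimal sketch: the function name `coordination_cost` is ours for illustration, and the inputs are the 10-15 minute range, 15 incidents, and $150 hourly rate quoted above.

```python
def coordination_cost(incidents_per_month, minutes_per_incident, hourly_rate):
    """Return (minutes lost, dollars lost) per month to coordination overhead."""
    minutes = incidents_per_month * minutes_per_incident
    dollars = minutes / 60 * hourly_rate
    return minutes, dollars


# Low and high ends of the 10-15 minute range, at $150 per loaded hour.
low = coordination_cost(15, 10, 150.0)
high = coordination_cost(15, 15, 150.0)
print(low)   # (150, 375.0)
print(high)  # (225, 562.5)
```

Plugging in your own incident volume and loaded rate gives a quick estimate of what the overhead costs your team.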

PagerDuty excels at getting you on the phone with sophisticated routing rules and multi-channel notifications. But once you acknowledge the alert, you're on your own to figure out what's broken, who can help, and where the relevant context lives. This is where the coordination tax compounds.

Modern incident management demands more. When Datadog fires an alert, the platform should automatically create a dedicated incident channel, pull in the on-call engineer and service owner, surface the runbook, show recent deployments, and start capturing a timeline. All of this should happen in the tool where your team already works, whether that's Slack or Microsoft Teams, not across five different applications.

The coordination tax isn't just frustrating. It increases Mean Time To Resolution (MTTR), burns out on-call engineers who spend more time context-switching than problem-solving, and creates gaps where critical information gets lost in Slack threads or never documented at all.

5 on-call features that prevent engineer burnout and reduce mean time to resolution

Automated escalation policies with round robin fairness

Escalation policies are your safety net when the primary on-call engineer doesn't acknowledge an alert. Basic time-based escalation (page person A, wait 5 minutes, page person B) is table stakes. Advanced policies distribute the workload fairly and route intelligently based on time and priority.

Round robin escalation rotates pages between team members to share the load more fairly. Instead of always escalating to the same senior engineer, the system distributes second-level pages across the team. This prevents a handful of people from becoming permanent firefighters while others never get paged.
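To make the rotation concrete, here is a minimal sketch of round robin selection at a single escalation level. The class name `RoundRobinLevel` and the responder names are hypothetical; this illustrates the general technique, not any vendor's implementation.

```python
from itertools import cycle


class RoundRobinLevel:
    """One escalation level that hands each new page to the next responder in turn."""

    def __init__(self, responders):
        if not responders:
            raise ValueError("need at least one responder")
        self._next = cycle(responders)

    def pick(self):
        # Each call advances the rotation by one person.
        return next(self._next)


level2 = RoundRobinLevel(["ana", "ben", "chi"])
picks = [level2.pick() for _ in range(5)]
print(picks)  # ['ana', 'ben', 'chi', 'ana', 'ben']
```

Over five escalations, no responder receives more than one page beyond any other, which is the fairness property described above.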

Smart escalation paths consider the time of day and incident priority. We built flowchart-style escalation policies that can be configured to escalate differently during business hours versus 3 AM. A low-priority database warning doesn't need to wake anyone up at night. A critical payment processing failure pages everyone immediately, day or night.
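A rough sketch of that branching logic follows. The business-hours window (09:00-18:00, Monday to Friday), the priority labels, and the action names are all assumptions chosen for illustration, not product configuration.

```python
from datetime import datetime, time

# Assumed business hours; adjust to your team's working pattern.
BUSINESS_START = time(9, 0)
BUSINESS_END = time(18, 0)


def route(priority, now):
    """Pick an escalation action from the alert priority and local time."""
    if priority == "critical":
        return "page_all"  # critical failures page everyone, day or night
    in_hours = now.weekday() < 5 and BUSINESS_START <= now.time() < BUSINESS_END
    if in_hours:
        return "page_primary"
    return "notify_channel"  # low priority off-hours: don't wake anyone


print(route("low", datetime(2025, 12, 9, 3, 0)))       # notify_channel
print(route("critical", datetime(2025, 12, 9, 3, 0)))  # page_all
```

The same low-priority alert pages the primary at 11 AM on a weekday but only posts to a channel at 3 AM.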

For incidents requiring specialized expertise, escalation policies should support functional routing, not just hierarchical escalation. If a Kubernetes networking issue fires, the policy should pull in the platform team, not just escalate up the management chain. The ability to escalate directly to a Slack channel allows anyone monitoring that channel to jump in, which is faster than waiting for a single person to acknowledge.

Calendar sync and easy overrides for work-life balance

Effective on-call management hinges on seamless calendar integration. Engineers need visibility into their on-call shifts without manually checking a separate dashboard. Most platforms offer basic one-way calendar sync through iCalendar feeds that you can subscribe to in Google Calendar or Outlook.

Advanced platforms go further. We integrate with HR systems like BambooHR, CharlieHR, HiBob, and Personio to import time-off calendars, keeping on-call schedules current automatically. This prevents the awkward situation where someone gets paged while on their honeymoon because nobody remembered to update the schedule.

PagerDuty's Google Calendar Extension reads out-of-office events from Google Calendar to identify scheduling conflicts, though this is a one-way sync. When you mark yourself as out-of-office in Google Calendar, the extension helps flag potential coverage gaps.

We take this further by incorporating holiday feeds directly into schedules, preventing engineers from being scheduled on public holidays without manual intervention. You can specify countries where team members are located, and the platform automatically accounts for regional holidays.

Shadow rotations are another critical feature for reducing stress:

- A backup person is always designated in case the primary can't respond

- Primary engineers know someone has their back during meetings, commutes, or personal emergencies

- This provides psychological relief without adding constant burden to the secondary

The best platforms make overrides trivially easy. If you need to swap shifts or request cover, you shouldn't need manager approval or complex workflows. We emphasize liberal use of overrides and built cover request features that make asking for help feel natural, not burdensome. This flexibility is essential for maintaining work-life balance and preventing resentment.

Slack-native actions that eliminate context switching

The defining feature of modern incident management is chat-native response. When an alert fires, the entire incident lifecycle should happen in Slack or Microsoft Teams, not in a web dashboard you have to remember to check.

Our Slack-native architecture means you can acknowledge alerts, escalate to another team, assign roles, update severity, and resolve incidents using slash commands. You type /inc escalate to pull in another team, /inc severity critical to raise the severity, and /inc resolve to close the incident. This isn't just convenience; it's a fundamental reduction in cognitive load during high-stress moments.

Compare this to the traditional flow: PagerDuty sends an alert to Slack, you click the link which opens a web browser, you log into the web UI (10-15 seconds), you acknowledge the alert there, then you return to Slack to coordinate. That's four context switches costing 30-45 seconds before you've even started investigating.

"Its seamless ChatOps integration makes managing incidents fast, collaborative, and intuitive—right from Slack." - Ari W. on G2

Another reviewer noted the platform is easy to set up and use, with particularly smooth Slack integration. For a full walkthrough of how this works, watch our end-to-end on-call demo showing how on-call engineers receive pages, acknowledge them, and coordinate response without leaving Slack.

Workload distribution metrics that surface burnout before it happens

Preventing engineer burnout requires visibility into who's carrying the on-call burden. Burnout happens when workload distribution is invisible until someone quits. Modern platforms track alert volume, incident time, and sleep-hour interruptions per engineer so you can spot imbalances before they cost you talent.

PagerDuty's Responder Report tracks the number of notifications each responder receives, categorized into business hour, off-hour, and sleep hour interruptions. This is critical data. If one engineer is getting paged at 3 AM three times a week while others are never interrupted at night, your rotation isn't fair. The report also measures "Response Effort," which quantifies the total time a responder is engaged with incidents, from acknowledgment to resolution.

What's fair distribution? Most SRE teams target no more than 3-4 sleep-hour interruptions per person per month and aim to keep on-call time variance within 20% across the team.
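Those two targets can be checked mechanically once you export per-engineer numbers. The sketch below is illustrative: the function name, the sample data, and the reading of "within 20%" as deviation from the team's mean on-call hours are all our assumptions.

```python
from statistics import mean


def flag_imbalance(sleep_pages, oncall_hours, max_sleep_pages=4, tolerance=0.20):
    """Return {person: [reasons]} for anyone outside the fairness targets."""
    avg = mean(oncall_hours.values())
    flagged = {}
    for person in sorted(oncall_hours):
        reasons = []
        if sleep_pages.get(person, 0) > max_sleep_pages:
            reasons.append("sleep-hour pages")
        # Relative deviation from the team mean, in either direction.
        if avg and abs(oncall_hours[person] - avg) / avg > tolerance:
            reasons.append("on-call hours")
        if reasons:
            flagged[person] = reasons
    return flagged


# Hypothetical monthly figures for a three-person rotation.
sleep_pages = {"ana": 6, "ben": 1, "chi": 2}
oncall_hours = {"ana": 130, "ben": 100, "chi": 70}
print(flag_imbalance(sleep_pages, oncall_hours))
# {'ana': ['sleep-hour pages', 'on-call hours'], 'chi': ['on-call hours']}
```

Note that the check flags under-loaded engineers too, since an unusually light share for one person means a heavier share for someone else.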

Opsgenie provided on-call analytics including a "Total On Call Times per User" report that visualized distinct on-call hours for each team member, filterable by business hours versus off-hours. Dashboard templates introduced in late 2023 provided deeper analytics on team productivity and on-call time distribution.

Our AI assistant generates instant workload analysis reports, surfacing which team members handled the most incidents in any given period. The assistant generates heatmaps to show when incidents most commonly occur, helping you identify patterns and potential "hot spots" in the on-call schedule.

The key is using this data proactively. If your analytics show someone handling double the incident load of their peers, it's time to rebalance the rotation or add more people to the schedule. Most teams find 6-8 people optimal for a single rotation, balancing frequency with familiarity.

Integrated service catalog context attached to every alert

Alerts without context waste 8-12 minutes per incident while engineers hunt for service owners, runbooks, and dependency maps. This is time you're losing to preventable coordination overhead.

The solution is a service catalog that automatically attaches relevant context to alerts. When the monitoring tool fires an alert, the incident management platform should immediately surface the service owner, recent deployments, runbook links, dependencies, and current health status of related services.

Our Service Catalog integration ensures that when we create an incident channel, all relevant service metadata is automatically pulled in. The on-call engineer sees exactly which team owns the affected service, what other services depend on it, and where to find troubleshooting documentation. No hunting through Confluence or asking in Slack threads.

This integration extends beyond just displaying information. We use service catalog data to intelligently notify the right people. If the payment processing service is affected, the payments team gets automatically pulled into the incident channel based on ownership data in the catalog. This eliminates the manual step of figuring out who needs to be involved.
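As a rough illustration of the idea, the sketch below enriches an incoming alert from an in-memory catalog. The `Service` fields, the catalog entries, and the example.com runbook URLs are hypothetical; a real catalog would sit behind an API rather than a dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class Service:
    name: str
    owner_team: str
    runbook_url: str
    depends_on: list = field(default_factory=list)


# Hypothetical catalog entries for illustration only.
CATALOG = {
    "payments": Service("payments", "payments-team", "https://example.com/runbooks/payments", ["postgres"]),
    "checkout": Service("checkout", "storefront-team", "https://example.com/runbooks/checkout", ["payments"]),
    "postgres": Service("postgres", "platform-team", "https://example.com/runbooks/postgres"),
}


def enrich(alert, catalog):
    """Attach owner, runbook, and dependent services to an alert dict."""
    svc = catalog.get(alert["service"])
    if svc is None:
        # Unknown service: keep the alert, but make the missing context explicit.
        return {**alert, "owner_team": None, "runbook_url": None, "dependents": []}
    dependents = sorted(s.name for s in catalog.values() if svc.name in s.depends_on)
    return {**alert, "owner_team": svc.owner_team,
            "runbook_url": svc.runbook_url, "dependents": dependents}


enriched = enrich({"service": "payments", "title": "5xx spike"}, CATALOG)
print(enriched["owner_team"], enriched["dependents"])  # payments-team ['checkout']
```

The `owner_team` value is what drives automatic notification: the team to pull into the channel comes from the catalog, not from someone's memory.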

PagerDuty vs incident.io vs Opsgenie: Which on-call tool reduces costs and coordination overhead?

Feature comparison: what you actually need

| Feature | incident.io | PagerDuty | Opsgenie | Splunk On-Call |
|---|---|---|---|---|
| On-Call Scheduling | Unlimited schedules (Pro), round robin, smart escalation | Unlimited schedules, advanced rules engine | Daily/weekly/custom rotations, 200+ integrations | Weekly/daily handoffs (max 12 days) |
| Calendar Sync | HR system import (BambooHR, HiBob), holiday feeds | WebCal, Google Calendar extension | iCal export (3-month window), basic WebCal | Basic iCal export |
| Slack/Teams Native | Full lifecycle in Slack/Teams via slash commands | Notifications and actions, web UI for config | Notifications and basic actions in Slack | Basic ChatOps integration |
| Escalation Policies | Flowchart-style, priority-aware, time-aware routing | Advanced rules engine with sophisticated routing | Comprehensive multi-level escalation | Powerful rules engine, alert deduplication |
| Burnout Metrics | AI-powered workload analysis, natural language queries | Responder Report (sleep hour interruptions, response effort) | Total On Call Times per User, productivity dashboards | Standard reporting |
| Post-Mortem Automation | AI-drafted from timeline (80% complete automatically) | Manual with templates | Manual | Manual |
| Base Pricing | $25/user/month (Pro, annual) | $41/user/month (Business plan)* | Free tier (5 users), then custom pricing | Contact sales |
| On-Call Add-On Cost | +$20/user/month (Pro, annual); 30 users in this example | Included in base plans | Included in paid plans | Included |
| Real TCO (50 users, annual) | $22,200 (Pro with on-call)** | $30,000-50,000+ (estimated)† | Unknown (sunset 2027) | $25,000-40,000+ (estimated) |
| Support Model | Shared Slack channels, critical bugs fixed in hours | Email and chat support (Business plan) | Standard ticketing | Praised as helpful, friendly |
| Status | Active, $62M Series B (April 2025) | Active, public company | Shutting down April 5, 2027 | Active under Splunk/Cisco |

*PagerDuty pricing based on publicly listed Business plan rate. Enterprise requires custom quote.

**Calculation: (50 users × $25/month × 12) + (30 on-call users × $20/month × 12) = $15,000 + $7,200 = $22,200

†PagerDuty estimates based on customer reports. Actual cost varies with add-ons for AI features, advanced analytics, and automation.

incident.io: unified, Slack-native incident response

We built incident.io for teams who want to manage the entire incident lifecycle in Slack or Microsoft Teams without switching to a web dashboard. Our platform combines on-call scheduling, alert routing, incident coordination, timeline capture, and post-mortem generation in one unified experience.

What sets us apart: We eliminate the coordination tax by running everything in Slack. When Datadog fires an alert, we auto-create a dedicated channel, page the on-call engineer, pull in the service owner based on catalog data, and start recording a timeline. Engineers use intuitive /inc commands to manage incidents without leaving the conversation.

"Incident.io was easy to install and configure. It's already helped us do a better job of responding to minor incidents that previously were handled informally and not as well documented." - Geoff H. on G2

One engineering team reported seamless rollout in under 20 days, with integrations to Linear, Google, New Relic, and Notion completed quickly. Another praised the platform's AI-powered automation that reduces friction in incident management.

Our AI SRE assistant automates up to 80% of incident response tasks (investigating, suggesting next steps, opening PRs), while the unified platform reduces coordination overhead, cutting MTTR by up to 80% for teams that previously used multiple fragmented tools. Post-mortems are pre-drafted from captured timeline data, which reduces documentation time.

Best for: Teams that live in Slack or Teams, want operational capability in days not quarters, and need transparent pricing without hidden add-ons.

Not for: Organizations not using Slack/Teams as their central hub, or teams needing deep microservice SLO tracking beyond incident response (we integrate with existing observability tools for that).

PagerDuty: expensive incumbent with deep alerting capabilities

PagerDuty is the established leader in on-call alerting, with advanced routing rules and 200+ integrations. The platform's WebCal feed provides calendar sync, and the Google Calendar Extension reads out-of-office events to help identify scheduling conflicts.

PagerDuty's Analytics Dashboard provides comprehensive reporting, including the Responder Report that tracks sleep hour interruptions and response effort per engineer. This visibility into workload distribution is valuable for preventing burnout.

The catch: While PagerDuty offers bidirectional Slack integration for incident actions, the platform's configuration and administration happen primarily through the web UI. When an alert fires, you get a Slack notification and can take many actions there, but the full incident management workflow often requires switching between Slack and the web dashboard. This creates coordination overhead that modern platforms eliminate.

Pricing is another pain point. PagerDuty publishes rates for Professional ($21/user/month) and Business ($41/user/month) plans, but Enterprise pricing requires custom quotes. Customers report that AI features, advanced analytics, and automation are gated behind higher-tier plans or sold as add-ons, pushing total cost of ownership well above simpler alternatives.

Best for: Large enterprises needing maximum alerting customization and willing to pay premium prices for an established vendor.

Not for: Teams wanting fully Slack-native workflows, transparent pricing, or responsive support without enterprise contracts.

Opsgenie: forced migration due to April 2027 shutdown

Atlassian announced in March 2025 that Opsgenie is shutting down, with new customer signups ending June 4, 2025 and the service terminating entirely on April 5, 2027. Teams currently using Opsgenie face a forced migration.

Before the shutdown announcement, Opsgenie offered comprehensive on-call analytics including the "Total On Call Times per User" report that visualized workload distribution. The platform supported daily, weekly, and custom rotation schedules with 200+ monitoring integrations.

The reality: Any organization evaluating Opsgenie today is making a short-term decision with a hard deadline. Atlassian is pushing customers to migrate to Jira Service Management, but JSM isn't purpose-built for real-time incident response the way Opsgenie was.

Best for: Nobody. The platform is being deprecated.

Recommendation: We offer migration assistance for Opsgenie customers, including schedule import and parallel-run capabilities to reduce risk during the transition.

Splunk On-Call: reliable but stagnant innovation

Formerly VictorOps, Splunk On-Call offers a powerful rules engine for alert deduplication and routing. The platform provides standard iCal export for calendar sync and supports weekly or daily on-call handoffs with a maximum of 12 days for daily rotations.

Customer feedback highlights helpful, friendly support and reliable multi-channel notifications. The ChatOps integration allows for basic incident coordination in Slack, though it's not as deep as our native implementation.

The limitation: Since the Splunk acquisition, customers report slower feature development compared to newer entrants. The interface feels dated compared to modern alternatives, and pricing is complex when bundled with Splunk's broader product suite. Teams not already invested in the Splunk ecosystem should evaluate more modern options.

Best for: Existing Splunk customers who want on-call capabilities integrated with their monitoring and observability stack.

Not for: Teams seeking cutting-edge features like AI-powered investigation or wanting transparent, standalone pricing.

How to migrate from Opsgenie to incident.io before the April 2027 shutdown without downtime

If you're currently using Opsgenie, you need to complete a migration before the April 5, 2027 shutdown deadline. This timeline creates urgency, but rushing the migration without a plan creates risk. Production incidents don't stop while you switch tools.

Steps to migrate your on-call rotation safely

Months 1-2: evaluation and planning

- Document your current state. How many schedules do you have? Which monitoring tools send alerts to Opsgenie? Who are your service owners?

- Define success criteria. What are the must-have features versus nice-to-haves? Clear success criteria are critical when evaluating incident management tools.

- Evaluate alternatives using the comparison table above. Schedule demos with your top 2-3 choices. Try incident.io to test Slack-native workflows with your actual team.

Months 2-3: proof of concept with parallel run

- Set up the new platform alongside Opsgenie. Import one on-call schedule and configure it to mirror your existing rotation.

- Run both platforms in parallel for 2-4 weeks. Configure both to receive the same alerts so you can validate routing rules match. If the new platform misses an alert or routes incorrectly, your existing Opsgenie setup catches it.

- Have a small team (5-10 engineers) handle incidents using both platforms. Gather feedback on usability, speed, and any gaps in functionality.
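One way to validate that routing rules match during the parallel run is to export who each platform paged for each alert and diff the results. The sketch below assumes you can produce a mapping of alert ID to paged responder from both tools; the function name, alert IDs, and responder names are hypothetical.

```python
def routing_mismatches(old_routes, new_routes):
    """Return {alert_id: (old_target, new_target)} wherever the platforms disagree.

    A target of None means that platform never routed the alert at all.
    """
    mismatches = {}
    for alert_id in sorted(old_routes.keys() | new_routes.keys()):
        old = old_routes.get(alert_id)
        new = new_routes.get(alert_id)
        if old != new:
            mismatches[alert_id] = (old, new)
    return mismatches


# Hypothetical exports from the existing and the new platform.
opsgenie_routes = {"a1": "ana", "a2": "ben", "a3": "chi"}
new_platform_routes = {"a1": "ana", "a2": "chi"}
print(routing_mismatches(opsgenie_routes, new_platform_routes))
# {'a2': ('ben', 'chi'), 'a3': ('chi', None)}
```

An empty result over the full 2-4 week window is a reasonable signal that the new configuration mirrors the old one.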

Month 4: full migration and cutover

- Migrate all on-call schedules to the new platform. Most modern tools offer CSV import or API-based migration for bulk schedule transfer.

- Update your monitoring tools (Datadog, Prometheus, New Relic) to send alerts to the new platform instead of Opsgenie. Do this service-by-service or team-by-team to limit blast radius.

- Maintain Opsgenie access for 1-2 weeks after cutover as a safety net. If something breaks in the new system, you can temporarily fall back while you fix it.

- Cancel Opsgenie subscription once you've confirmed the new platform is stable and all teams are successfully handling incidents.

Teams migrating can watch our PagerDuty migration webinar covering schedule import and integration setup.

On-call software pricing comparison: true cost of incident.io vs PagerDuty for 50-user teams

On-call features in incident management software typically follow one of two pricing models: per-user-per-month with tiered functionality, or base platform fee plus add-ons for on-call capabilities.

Understanding the real cost with add-ons

Our pricing is transparent, which is rare in this category. The Pro plan costs $25 per user per month on annual billing for incident response features. On-call scheduling is an add-on at $20 per user per month (annual). Real cost: $45 per user per month for complete on-call and incident response.

For a 50-person engineering team where 30 are on-call:

- Incident response for all 50: $15,000 annually ($25 × 50 × 12)

- On-call for 30: $7,200 annually ($20 × 30 × 12)

- Total: $22,200 per year

This tier includes unlimited workflows, Microsoft Teams support, AI-powered post-mortem generation, private incidents, and custom dashboards, features that teams need as they scale beyond basic incident response.
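The 50-user calculation above generalizes to any team size. A minimal sketch, using the $25 and $20 annual-billing rates quoted in this section as defaults (the function name is ours for illustration):

```python
def annual_cost(total_users, oncall_users, base_rate=25, oncall_rate=20):
    """Annual cost in dollars: base plan for everyone, on-call add-on for responders."""
    base = total_users * base_rate * 12
    oncall = oncall_users * oncall_rate * 12
    return base + oncall


total = annual_cost(50, 30)
print(total)            # 22200
print(total / 50 / 12)  # 37.0 (blended dollars per user per month)
```

Because only on-call responders need the add-on, the blended rate across the whole team comes in below the $45 per-user figure for a fully on-call seat.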

PagerDuty publishes pricing for Professional ($21/user/month) and Business ($41/user/month) plans on their pricing page. Based on user reports and ROI analysis, equivalent coverage for 50 users typically costs $30,000-$50,000+ annually. Costs reach the high end when you add AI features, advanced analytics, or automation, which are gated behind higher-tier plans or sold as separate add-ons.

Opsgenie offered a free tier for up to 5 users, then custom pricing for larger teams. This is now irrelevant given the shutdown timeline.

Splunk On-Call pricing is bundled with broader Splunk products and requires contact with sales. Standalone estimates for 50 users range from $25,000-40,000 annually based on third-party pricing data.

TCO beyond subscription costs

Total cost of ownership includes more than just subscription fees. Consider:

Implementation time: How long does it take to get operational? Our users report being live in days, not weeks. Complex platforms like PagerDuty may require 4-8 weeks of configuration and training.

Training overhead: Do engineers need extensive training to use the tool effectively? Slack-native platforms have minimal training requirements because engineers already know Slack. Web-first tools require documenting workflows and running training sessions.

Integration maintenance: Who maintains the integrations with monitoring tools, Jira, and status pages? Some platforms require custom scripting and ongoing maintenance as APIs change. Managed integrations reduce this burden.

Support responsiveness: When you hit a bug during a critical incident, how fast does the vendor respond? We offer shared Slack channels with our engineering team, and critical bugs are fixed in hours. Traditional vendors offer email support with 24-48 hour SLAs.

Choosing on-call management software: evaluation framework by company size and engineering team maturity

Evaluation checklist by company size and needs

Startup (5-20 engineers, Series A):

- Priority: Get operational fast with minimal configuration

- Must-haves: Basic on-call scheduling, Slack integration, straightforward pricing, responsive support

- Consider: incident.io Basic plan (free for core incident response without on-call scheduling) to start, or Pro plan at $45/user/month with on-call if you need scheduling and escalation policies

- Avoid: Enterprise platforms requiring 8-week implementations and complex pricing negotiations

Scale-up (50-200 engineers, Series B-C):

- Priority: Fair workload distribution, integration with existing tools (Datadog, Jira), post-incident learning

- Must-haves: Workload analytics, round robin escalation, service catalog integration, automated post-mortems, calendar sync with HR systems

- Consider: incident.io Pro plan ($45/user/month with on-call) for advanced features like AI post-mortems and Microsoft Teams support if needed

- Avoid: Tools that can't scale with your team or have per-incident pricing that penalizes high velocity

Enterprise (500+ engineers, pre-IPO or public):

- Priority: Compliance (SOC 2, GDPR), SAML/SCIM, dedicated support, advanced security controls, multi-region deployments

- Must-haves: Enterprise SSO, audit logs, SLA guarantees, customer success manager, sandbox environment for testing

- Consider: incident.io Enterprise plan (custom pricing), or PagerDuty Enterprise if maximum alerting customization is paramount and budget isn't constrained

- Avoid: Platforms without enterprise security certifications or those shutting down (Opsgenie)

Questions to ask during vendor demos

- Can you show me the entire incident lifecycle from alert to post-mortem in one tool? Watch for context-switching. Do they stay in Slack or constantly open web dashboards?

- How do I track who's getting paged at 3 AM? Ask to see burnout metrics and workload distribution reports. Can they show sleep hour interruptions per responder?

- What happens if someone is on vacation and gets paged? Test the calendar sync and override process. Is it automatic or manual?

- How long does implementation take, and what's required from my team? Calculate the real time-to-value including configuration, integration setup, and training.

- What's the all-in cost for 50 users including on-call? We publish our pricing openly, but many vendors don't. Push for transparent pricing including any add-ons for features you need.

- How fast do you respond to bugs and feature requests? Ask for customer references who can speak to support quality during incidents.

The real value is in unified workflows, not just better paging

You already know every platform can page you reliably. The differentiation is in what happens after you acknowledge the alert. Do you spend 15 minutes hunting for context and assembling the team? Or does the platform immediately provide the runbook, pull in the service owner, create a dedicated incident channel, and start capturing a timeline automatically?

We unified scheduling with incident response workflows specifically to eliminate coordination tax. When we page you, we also create the channel, assign roles based on your service catalog, and start documenting. You focus on solving, not coordinating. This is why teams using unified platforms can reduce coordination overhead by up to 80% compared to using separate tools for paging, communication, and documentation.

For teams currently using Opsgenie, the shutdown deadline creates urgency, but also an opportunity to upgrade rather than just migrate. For teams frustrated with PagerDuty's complexity and cost, Slack-native alternatives can reduce expenses significantly while improving response speed. And for teams cobbling together custom on-call bots and spreadsheets, professional platforms provide structure and automation that reduces MTTR while eliminating maintenance toil.

Book a demo with incident.io to learn more.

Key terminology

On-call rotation: A scheduled pattern where engineers take turns being the primary responder for production incidents during specific time periods (e.g., weekly shifts).

Escalation policy: Rules defining who gets notified if an incident isn't acknowledged within a specified timeframe, typically escalating through multiple levels or teams.

MTTR (Mean Time To Resolution): The average time from when an incident is detected to when it's fully resolved and services are restored.

Coordination tax: The time overhead spent assembling context, finding the right people, and switching between tools during incident response before actual troubleshooting begins.

Round robin escalation: A fair distribution method that rotates on-call pages between team members sequentially rather than always escalating to the same person.

Service catalog: A centralized database of services including ownership, dependencies, runbooks, and health status, automatically surfaced during incidents.

Shadow rotation: A backup on-call pattern where a secondary engineer is designated to respond if the primary is unavailable. This reduces pressure on the primary responder.

Responder report: Analytics showing individual engineer workload including incidents handled, sleep hour interruptions, and time spent on-call.

Slack-native: An architecture where the entire incident management workflow happens inside Slack using slash commands, not just notification delivery.

Follow-the-sun scheduling: A global rotation pattern that distributes on-call coverage across time zones so engineers are on-call during their daytime hours. This reduces nighttime pages.
