DevEx matters for coding agents, too

The speed at which you can go from making a change in your code, to understanding if it actually works, has long been a popular topic of discussion (and often, humour) for engineers.

This remains true in a world with AI. Developer experience isn't just important for humans anymore. Those agents we're all using hundreds of times a day? Feedback cycles matter just as much for them, if not more.

We've recently invested in dramatically speeding up our developer feedback cycles, for humans and agents alike, with many key build, codegen and linting tools sped up by up to 95% in the process.

In this post we’ll share more on what that journey looked like, why we did it and what it taught us about investing more deliberately for the era of AI coding agents.

Tools matter more with AI, not less

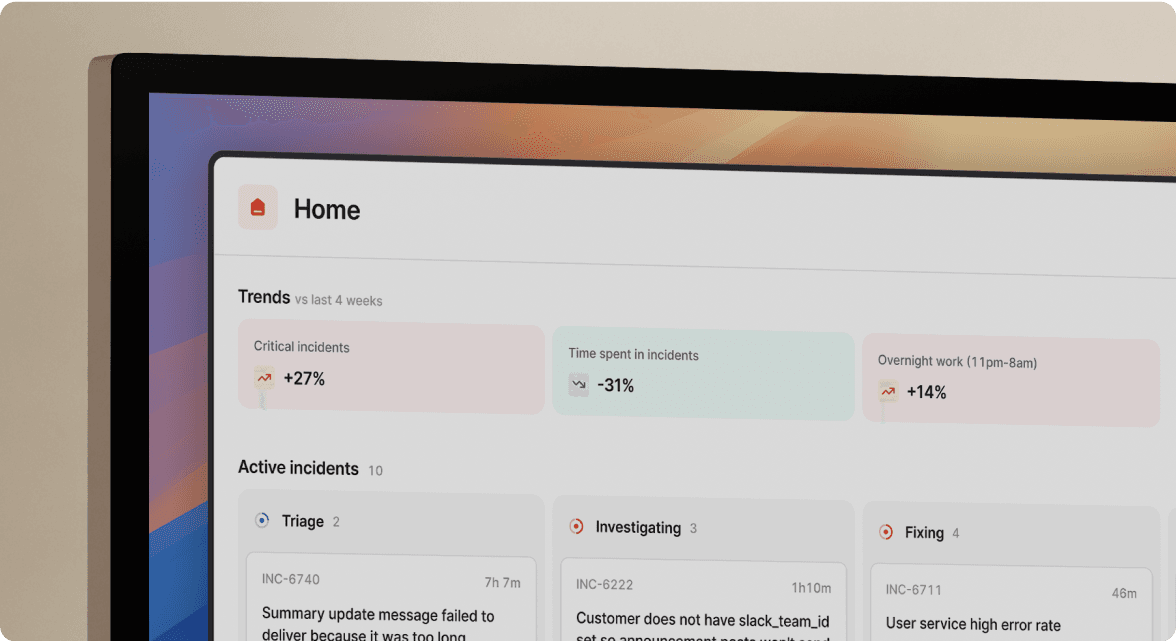

We've been using AI coding tools extremely heavily at incident this year. Claude Code, Cursor, and Codex are all part of our daily workflow now and we use over 50 billion tokens a month on development alone. It’s been transformative for us.

However, when you can dictate to Claude Code and have it write a React component in seconds, spending the next minute and a half waiting to find out whether it compiles, type-checks and passes your lint rules feels slow.

Surprisingly, rather than compromising our code quality and developer experience, our AI investments have instead made us obsessive about our developer tooling. When you can generate code ten times faster than before, every part of your toolchain that can’t match that speed becomes unbearable.

As Lawrence, who leads our AI team, put it: "Loads of people out there are worried about AI compromising the quality of engineering but we've seen the reverse at incident. It's been a massive motivator for us to improve our DevEx and tools, which helps both AI and engineers."

The Biome migration: choosing speed over perfection

At the start of 2025, our web dashboard - about half a million lines of TypeScript across many thousands of files - took about 90 seconds to type-check and format. That’s already quite slow but just about manageable if you’re writing code manually, getting some feedback from your IDE as you work, and frequently context-switching to other tasks or further development in between builds.

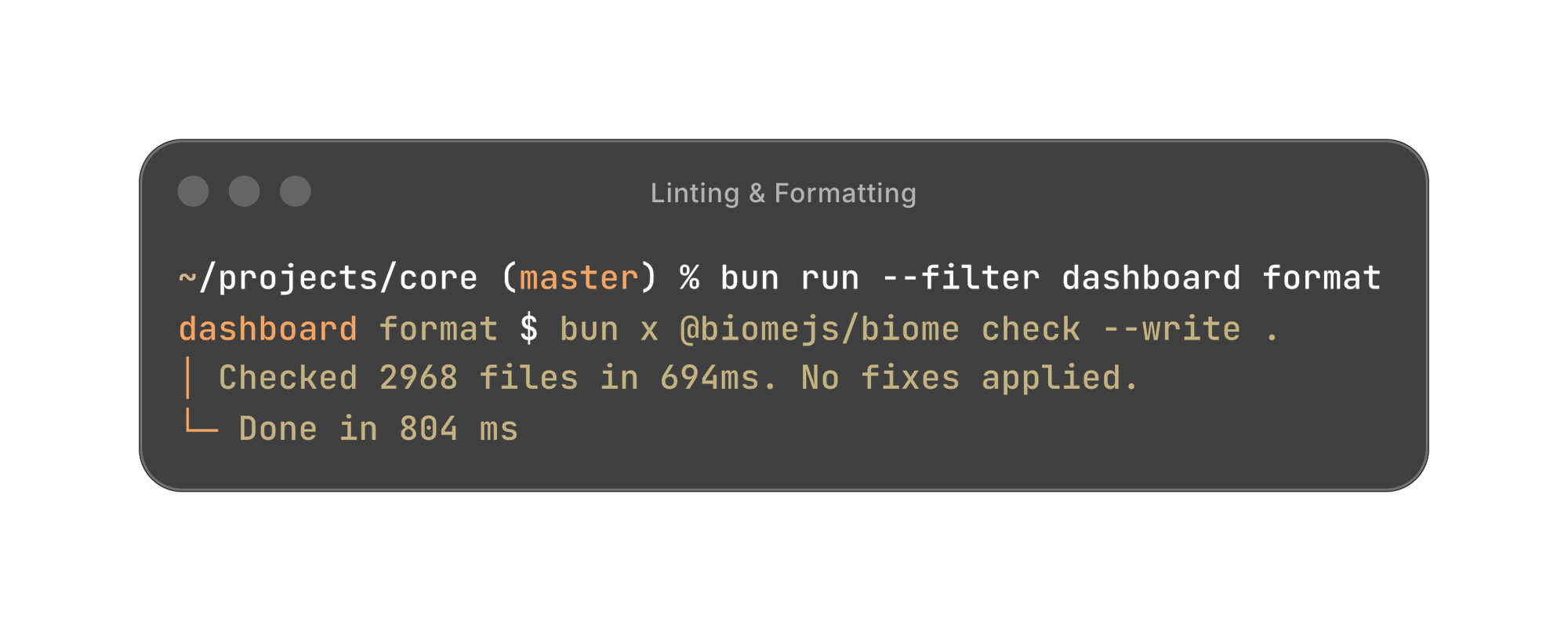

Let's start with the most pragmatic decision we made: switching from ESLint and Prettier to Biome. Our linting and formatting used to take 40 seconds (without caching). With Biome, it takes less than one second.

It wasn't all smooth sailing: Biome's IDE integration hasn't been great and you have to fiddle with VS Code and GoLand settings to get it working properly. The migration itself was also a bit tricky because Biome's rules are slightly different from ESLint's. We had to update thousands of files and put up with some short-term git-conflict pain, but AI makes it much more feasible to write the small migration tools that make these kinds of changes possible.

But when something is forty times faster, you can tolerate a few rough edges. Every time we run bun format, we now get sub-second feedback (we also switched from Yarn to Bun as our runtime for these tasks, which is itself 1.7x faster than Node/Yarn). This totally changes how you work: you can format aggressively and often, catching issues immediately rather than batching them up.

Perfection is expensive and sometimes it’s better to optimise for velocity when "good enough and blazingly fast" clearly beats "slightly better but significantly slower".

When using an alpha version of your compiler makes sense

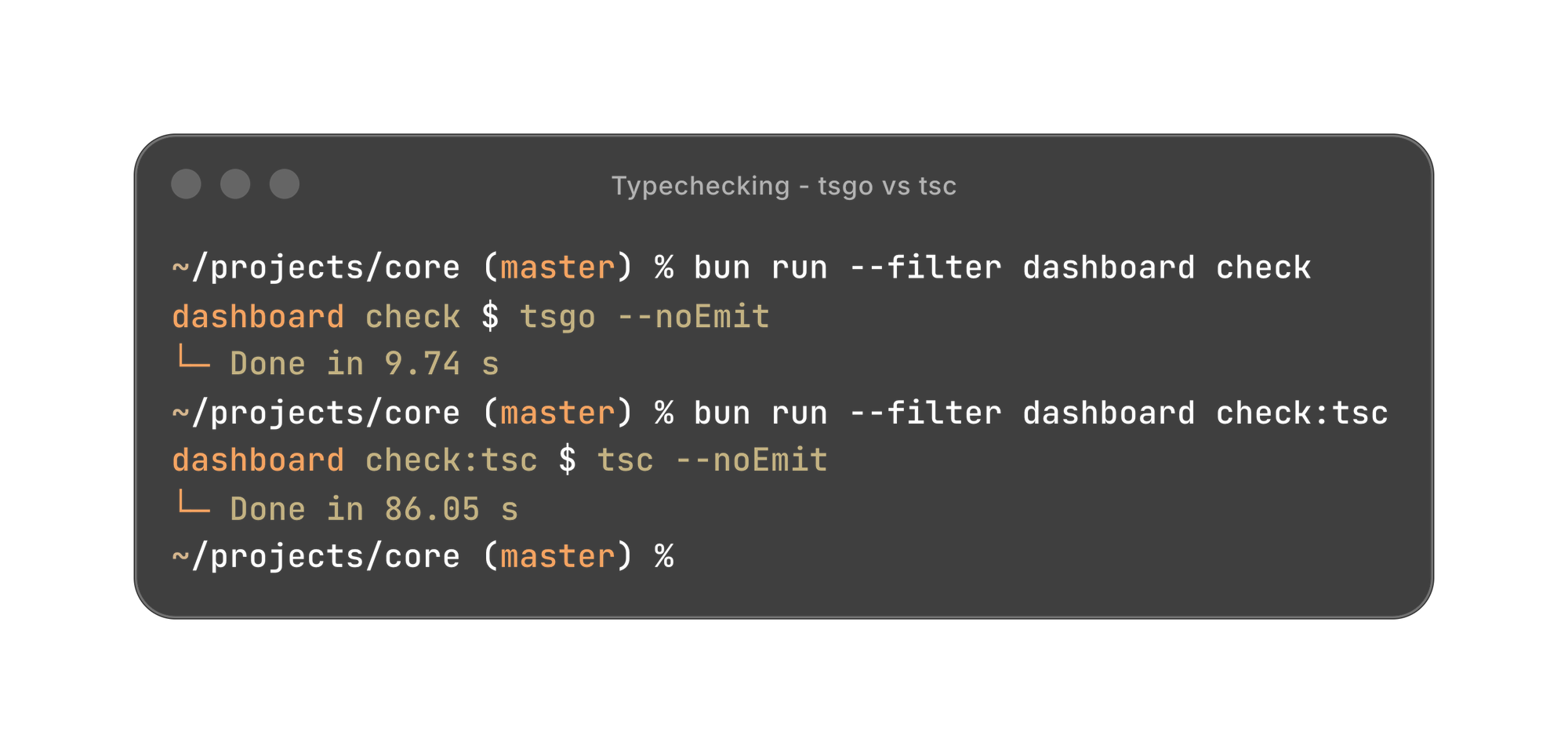

TypeScript's new Go-based compiler, tsgo, is still in preview. But it's fast. It checks half a million lines of TypeScript in 9.74 seconds. That's over 50k lines a second and up to 10x faster than tsc, the original TypeScript implementation.

We didn't just switch everyone over immediately. We made it opt-in first. bun check:tsgo ran alongside the regular bun check. We’ve long been using swc to generate the actual bundle we ship, so we were confident the actual build output wouldn’t change. A few people tried it out for a couple of weeks, reported back that it seemed fine, and then we flipped it to be the default.

The safety net: we still run both tsgo and tsc on CI. If there's ever a case where they disagree, CI catches it. This is the kind of pragmatic approach that lets you get speed wins immediately while maintaining confidence. Get the benefit now, keep the safety net just in case.

When AI generates code, that instant type feedback matters. You're not just checking your own work anymore—you're validating what the AI produced. Fast type-checking becomes part of the iteration loop, not a batch operation you run before committing.

Building our own OpenAPI generator

This one's my favourite, because it shows how the "obvious" solution isn't always the right one.

We use Goa for our Go API framework. Goa generates OpenAPI specs, and we use those specs to generate TypeScript clients for our dashboard. For years, we used the Java OpenAPI Generator for this. It worked fine—generated exactly the code structure we wanted.

But as our API grew, generation got slower. We now have over 100 services generating ~3,000 files through Goa, plus hundreds of API files (with thousands of individual operations) and many more thousands of model files for the TypeScript client.

Eventually the Java OpenAPI generator was taking upwards of 45 seconds per run.

So we did the "sensible" thing: we tried switching to modern JavaScript-based generators like hey-api. These tools are great! They're fast, well-maintained, actively developed.

Except... the generated output was different. Enums became union types instead of proper TypeScript enums. Casing conventions changed. Property names were formatted differently. The underlying fetch usage varied. None of these are wrong—they're just design choices. But these differences were far more significant than the Biome migration and often resulted in functionally different implementations. We ultimately decided that migrating thousands of lines of calling code to handle these differences was fiddly and risky.

These kinds of experiments happened a few times, and we soon noticed a pattern. Off-the-shelf tools started out great, but as we customised them with flags and configuration to get exactly the output we wanted, complexity compounded and they slowed down, just like the tool we started with, whilst still not quite giving us what we needed.

Eventually it made sense to debate: what if we just wrote our own? It’s not something we do often here - we prefer to buy vs build and lean heavily on open-source where we can.

However, we also understand our requirements exactly. We know what output structure we want. And with Bun and TypeScript, we realised we could replicate the relevant behaviour of the Java generator precisely, with almost no migration cost at all.

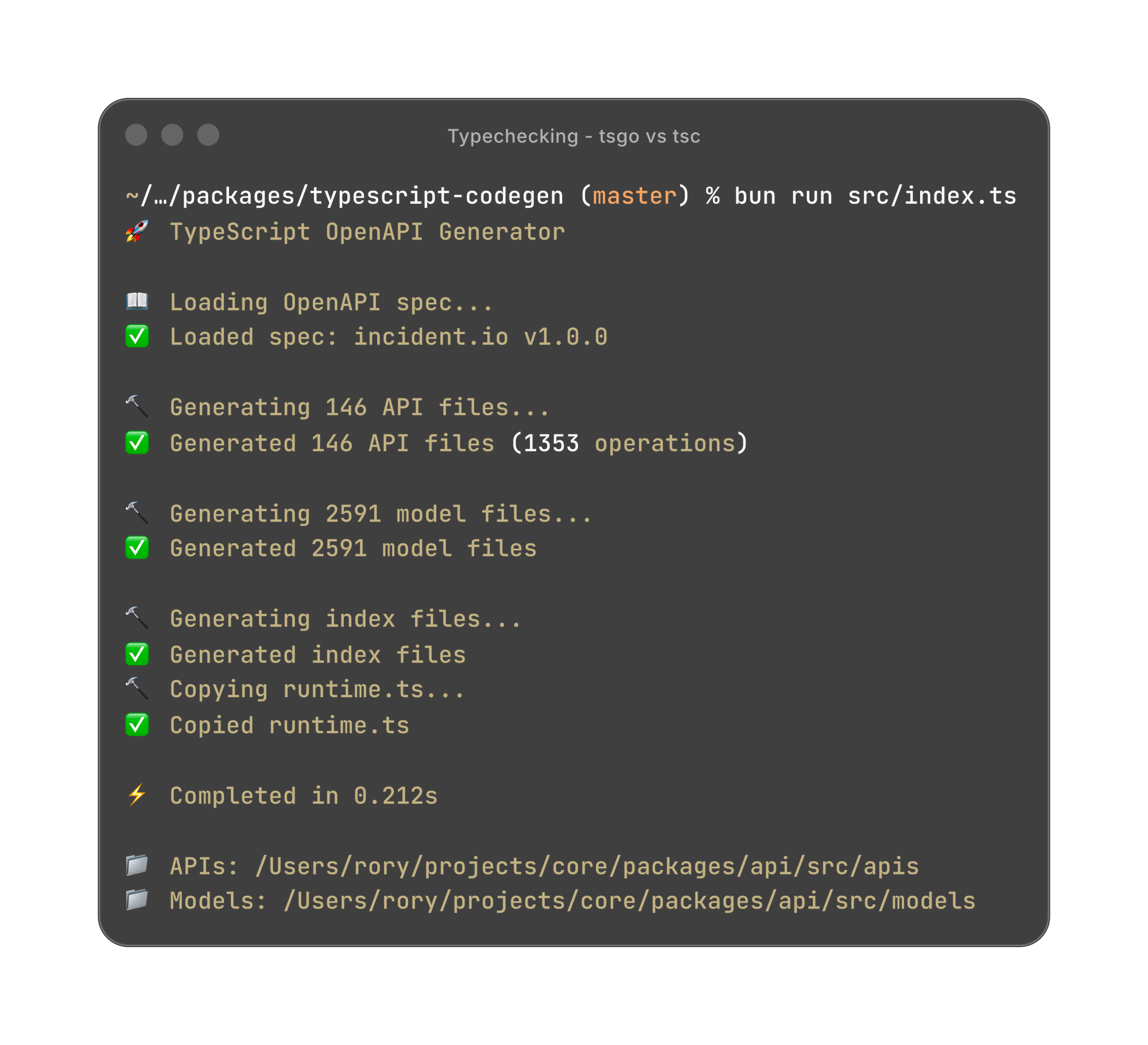

So, we built it. It ended up being far simpler than expected. We cloned the Java implementation, pointed Claude Code at our input and our expected output, and let it keep working until it generated like-for-like output. The result is around 700 lines of TypeScript that parse OpenAPI 2.0 specs and generate our TypeScript client code, running via Bun.
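To give a flavour of why a purpose-built generator can stay small, here is a minimal sketch of the core idea: walk the `definitions` of an OpenAPI 2.0 spec and emit TypeScript source as strings. This is not our actual generator. Every name in it (`SchemaObject`, `generateModels` and so on) is hypothetical, and it handles only a tiny subset of the spec, but it shows enum schemas being emitted as proper TypeScript enums rather than union types.

```typescript
// Illustrative only: a tiny subset of OpenAPI 2.0 schema objects.
// All type and function names here are hypothetical, not the real generator's.
interface SchemaObject {
  type?: string;
  enum?: string[];
  properties?: Record<string, SchemaObject>;
  required?: string[];
  items?: SchemaObject;
  $ref?: string;
}

interface Swagger2Spec {
  definitions: Record<string, SchemaObject>;
}

// Turn a schema into a TypeScript type expression.
function typeOf(schema: SchemaObject): string {
  if (schema.$ref) {
    // "#/definitions/Status" -> "Status"
    return schema.$ref.split("/").pop() ?? "unknown";
  }
  switch (schema.type) {
    case "string":
      return "string";
    case "integer":
    case "number":
      return "number";
    case "boolean":
      return "boolean";
    case "array":
      return `Array<${schema.items ? typeOf(schema.items) : "unknown"}>`;
    default:
      return "unknown";
  }
}

// "in_progress" -> "InProgress"
function enumKey(value: string): string {
  return value
    .split(/[^A-Za-z0-9]+/)
    .filter((part) => part.length > 0)
    .map((part) => part.charAt(0).toUpperCase() + part.slice(1))
    .join("");
}

// Emit a real TypeScript enum for enum schemas, otherwise an interface.
function generateModel(name: string, schema: SchemaObject): string {
  if (schema.enum) {
    const members = schema.enum.map((v) => `  ${enumKey(v)} = ${JSON.stringify(v)},`);
    return [`export enum ${name} {`, ...members, "}"].join("\n");
  }
  const required = new Set(schema.required ?? []);
  const fields = Object.entries(schema.properties ?? {}).map(
    ([prop, propSchema]) => `  ${prop}${required.has(prop) ? "" : "?"}: ${typeOf(propSchema)};`,
  );
  return [`export interface ${name} {`, ...fields, "}"].join("\n");
}

// Generate every model in sorted order so reruns produce identical output.
function generateModels(spec: Swagger2Spec): string {
  return Object.keys(spec.definitions)
    .sort()
    .map((name) => generateModel(name, spec.definitions[name] as SchemaObject))
    .join("\n\n");
}
```

Because the output is just deterministic strings, it can be diffed against the files an existing generator produces, which is what makes a like-for-like comparison practical.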

API client generation went from 45 seconds down to 0.21 seconds, an over 200x speedup!

Here's where the ROI really starts to become clear: we've had hundreds of commits in 2025 that touched our OpenAPI spec. Each of those API changes involved many regenerations as we fixed type errors and adjusted the implementation. If we assume 3 regenerations per commit, that's over a day of engineering time and thousands of CI credits saved.

On pure time alone, we've probably saved around 3 days of engineering time that was previously spent just... waiting. Factor in the context-switching costs and general friction of an ill-fitting developer experience and the cost of the slow tooling was likely higher still.
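The arithmetic behind that estimate is simple enough to sketch. The per-run timings (45 seconds before, 0.21 seconds after) and the 3 regenerations per commit come from the figures above. The commit count is an assumption: we only say "hundreds", so the 700 used here is a placeholder rather than a measured number.

```typescript
// Back-of-the-envelope estimate of time saved by faster client generation.
// `commits: 700` below is an assumed placeholder for "hundreds of commits".
interface RegenerationCost {
  commits: number;
  regenerationsPerCommit: number;
  secondsBefore: number;
  secondsAfter: number;
}

function hoursSaved(cost: RegenerationCost): number {
  const runs = cost.commits * cost.regenerationsPerCommit;
  const secondsSaved = runs * (cost.secondsBefore - cost.secondsAfter);
  return secondsSaved / 3600;
}

const saved = hoursSaved({
  commits: 700, // assumption, not a measured figure
  regenerationsPerCommit: 3,
  secondsBefore: 45,
  secondsAfter: 0.21,
});
console.log(`${saved.toFixed(1)} hours saved`);
```

Under those assumptions the saving comes out at roughly 26 hours, which is a little over one calendar day or about three eight-hour working days. Plug in your own commit count and timings to see how it changes.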

The cherry on the cake is that with AI, building these tools is also faster than ever, so it has already paid for itself. As we grow, that return will only increase, which leads us on to our next tip.

Developing with AI, to speed up developing with AI

As the OpenAPI example above shows, AI doesn't just create the need for faster tools. It can help you build them.

Isaac, one of our engineers, recently contributed a PR to the aforementioned Goa project which cut generation time from 52 seconds to 10 seconds, an 81% reduction. He added timing logs, implemented parallel file writing with a worker pool, and optimised import handling to write files once rather than multiple times.
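Isaac's change lives in Goa's Go code and isn't reproduced here. Purely to illustrate the worker-pool idea, here is a sketch of the same pattern in TypeScript: a fixed number of workers pull tasks from a shared queue, so many files can be written concurrently without starting an unbounded number of writes at once. The `runPool` helper and its parameters are hypothetical names for this example.

```typescript
// A bounded worker pool: at most `workers` handlers run at the same time.
// This illustrates the pattern only; it is not the Goa implementation.
async function runPool<T, R>(
  items: T[],
  workers: number,
  handler: (item: T) => Promise<R>,
): Promise<R[]> {
  const results: R[] = new Array<R>(items.length);
  let next = 0; // index of the next unclaimed item

  async function worker(): Promise<void> {
    // Claiming an index is synchronous, so two workers never take the same item.
    while (next < items.length) {
      const index = next++;
      results[index] = await handler(items[index] as T);
    }
  }

  const count = Math.max(1, Math.min(workers, items.length));
  await Promise.all(Array.from({ length: count }, () => worker()));
  return results; // same order as `items`
}
```

In a generator, `items` would be the list of files to emit and `handler` would write one file to disk. The pool size is worth tuning: too small leaves I/O idle, too large can exhaust file handles.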

Could Isaac have done this without AI? Absolutely. But it helped him understand Goa's internals much faster, prototype solutions more quickly, and validate his approach against the existing codebase with a rapid feedback loop.

Same with our custom OpenAPI generator. We used AI to understand the Java generator's behaviour, translate patterns to TypeScript, and handle edge cases.

There's a virtuous cycle here: fast tools make AI more effective, and AI helps you build fast tools.

It all adds up!

When we add it all up (Biome, tsgo, the custom API generator, Bun, rsbuild and more), our typical feedback loop for a simple lint + compile went from over 90 seconds to under 10 seconds.

We more than doubled our engineering team in 2025, and we're planning similar growth in 2026. At that scale, these investments compound across the team. Fast feedback loops don't just make one developer more productive - they make the entire team move faster.

But the leverage isn't just about time saved per developer. It's about what becomes possible. Engineers iterate more confidently when they get instant feedback. CI failures get fixed immediately rather than breaking your flow. Context-switching costs drop because you're not spending 90 seconds waiting and opening Slack.

It's the same for coding agents: whether you're working single-threaded or with multiple agents and work-trees, you get far faster results when the agent can quickly find out whether the code it wrote is any good, and fix it if not.

The work continues

Cutting feedback loops by almost 90%, from 90s to just 10s, is great, but developer experience is an ongoing challenge. We've made plenty more improvements along the way and there are many more we want to make in future.

We recently added build diagnostics to our Go backend hot-reloader so you can see exactly which files triggered rebuilds and where time is spent. We've set up goimports to run automatically on Go code, and Biome formatting on save for TypeScript. We've fixed database query patterns that caused timeouts for large customers. We've systematically removed barrel imports across the dashboard to improve tree-shaking.

Each improvement makes the next one easier. Good tooling compounds.

The culture around here is one of bottom-up engineering ownership and we make time for this work, because we all know how much it matters. When something's slow, we don't just accept it as "just how things are." We measure it, understand it, and either optimise it, replace it, or contribute upstream to make it faster for everyone. We have a whole channel just for sharing opportunities like the ones above and getting them shipped.

The goal isn't perfect tools—it's leverage. Better tools mean more time for customer problems. Faster feedback loops mean confident iteration.

Why this matters for hiring

If you're an engineer who values craft and compound leverage, you should talk to us.

We're doubling our engineering team again in 2026. We want people who:

- Believe that speed is a superpower and one worth investing in, not a luxury.

- Really feel a sense of ownership over their tools, and improve them relentlessly.

- Are constantly finding ways to use AI to accelerate themselves and their team.

- Want to spend time solving customer problems, not fighting slow builds.

Your AI is only as fast as your slowest tool. We're building tools that keep up.
