Incident management tools for DevOps: the Kubernetes & microservices guide

Updated January 19, 2026

TL;DR: Traditional incident management breaks in Kubernetes because pods are ephemeral and disappear with their logs, while failures cascade across distributed microservices. You need tools that parse Prometheus labels for intelligent routing, correlate alerts with deployments (nearly 80% of outages stem from changes), and capture timelines before evidence vanishes. incident.io provides Slack-native coordination with deep Datadog and Prometheus integration. PagerDuty handles enterprise alerting but requires context-switching. Grafana OnCall suits Grafana-centric stacks. Komodor excels at K8s troubleshooting but complements rather than replaces incident platforms.

Fifty Prometheus alerts fire simultaneously across ten microservices. API latency spikes to 5,000 ms. By the time you open Datadog, three pods have already crashed, entered CrashLoopBackOff, and restarted. Their logs are gone, and the evidence with them. This is the reality of Kubernetes incident management: the infrastructure you're debugging is constantly destroying and recreating itself.

Kubernetes incidents differ fundamentally from monolith failures. In traditional environments, you can SSH into a failing server and review its system logs. In Kubernetes, the container you need to inspect may already be gone: if an attacker exfiltrates data and the container shuts down, any record of the attack disappears along with the container.

This guide covers the specific tool requirements for DevOps teams managing incidents in distributed, container-orchestrated environments and compares the platforms built to handle this complexity.

Why traditional incident response breaks in Kubernetes environments

Ephemeral infrastructure destroys evidence

Kubernetes containers are short-lived by design: they are constantly created, replaced, and removed. When a container exceeds its memory limit, it is OOMKilled and Kubernetes restarts it. Your monitoring fires an alert, but by the time you open the incident channel, the replacement container is already running and your dashboard shows green. The only evidence is the alert itself and whatever logs you managed to capture before the restart.
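Some of that evidence survives in the container's *previous* state. The sketch below assumes you have already fetched the Pod object as JSON (for example with `kubectl get pod <name> -o json`). It flags containers whose last termination reason was `OOMKilled` even though they are running again, and builds the `kubectl logs --previous` command that retrieves logs from the terminated instance while they are still available. The sample pod, its names, and the helper function names are hypothetical.

```python
from typing import Any, Dict, List

# Hypothetical Pod object, shaped like `kubectl get pod <name> -o json` output.
SAMPLE_POD: Dict[str, Any] = {
    "metadata": {"name": "payment-api-7d9f8-abcde", "namespace": "payments"},
    "status": {
        "containerStatuses": [
            {
                "name": "app",
                "restartCount": 3,
                # The replacement container is already running, so dashboards look green...
                "state": {"running": {"startedAt": "2026-01-19T10:02:11Z"}},
                # ...but the previous instance was killed for exceeding its memory limit.
                "lastState": {
                    "terminated": {
                        "reason": "OOMKilled",
                        "exitCode": 137,
                        "finishedAt": "2026-01-19T10:02:09Z",
                    }
                },
            },
            {"name": "sidecar", "restartCount": 0, "state": {"running": {}}, "lastState": {}},
        ]
    },
}


def find_recent_oomkills(pod: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return containers whose previous run ended with reason OOMKilled."""
    findings = []
    for status in pod.get("status", {}).get("containerStatuses", []):
        terminated = status.get("lastState", {}).get("terminated") or {}
        if terminated.get("reason") == "OOMKilled":
            findings.append(
                {
                    "container": status["name"],
                    "restart_count": status.get("restartCount", 0),
                    "killed_at": terminated.get("finishedAt"),
                    "running_again": "running" in status.get("state", {}),
                }
            )
    return findings


def previous_logs_command(pod: Dict[str, Any], container: str) -> List[str]:
    """Build the kubectl command that prints logs from the previous container instance."""
    meta = pod["metadata"]
    return [
        "kubectl", "logs", meta["name"],
        "-c", container,
        "--previous",
        "-n", meta.get("namespace", "default"),
    ]


if __name__ == "__main__":
    for finding in find_recent_oomkills(SAMPLE_POD):
        print(finding)
        print(" ".join(previous_logs_command(SAMPLE_POD, finding["container"])))
```

Running a check like this as soon as the alert fires, and attaching its output to the incident, preserves the "why did it restart?" evidence before the pod is rescheduled or deleted.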

Microservices complexity masks root causes

The distributed nature of microservices makes it far more complex to identify what has gone wrong. When one service fails, it triggers alerts in five downstream dependencies. Your incident channel fills with dozens of simultaneous alerts from Prometheus, Datadog, and New Relic. Which alert represents the root cause? Which are symptoms?
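One simple heuristic for separating causes from symptoms uses the dependency graph: an alerting service whose own dependencies are all healthy is a root-cause candidate, while an alerting service that depends on another alerting service is more likely a symptom. The sketch below applies that rule to a hypothetical graph. It is a triage aid under those assumptions, not a substitute for investigation; for example, in a dependency cycle where every service is alerting, it reports no candidate at all.

```python
from typing import Dict, Set, Tuple

# Hypothetical dependency graph: service -> services it calls.
DEPENDS_ON: Dict[str, Set[str]] = {
    "frontend": {"checkout"},
    "checkout": {"payment-api", "inventory"},
    "payment-api": {"postgres"},
    "inventory": {"postgres"},
    "search": {"elasticsearch"},
    "postgres": set(),
    "elasticsearch": set(),
}


def classify_alerts(
    depends_on: Dict[str, Set[str]], alerting: Set[str]
) -> Tuple[Set[str], Set[str]]:
    """Split alerting services into (root-cause candidates, likely symptoms)."""
    candidates: Set[str] = set()
    symptoms: Set[str] = set()
    for service in alerting:
        failing_dependencies = depends_on.get(service, set()) & alerting
        if failing_dependencies:
            # Something this service relies on is also failing: probably a symptom.
            symptoms.add(service)
        else:
            # All of its dependencies look healthy: investigate this one first.
            candidates.add(service)
    return candidates, symptoms


if __name__ == "__main__":
    firing = {"frontend", "checkout", "payment-api", "postgres", "search"}
    roots, downstream = classify_alerts(DEPENDS_ON, firing)
    print("investigate first:", sorted(roots))
    print("likely symptoms:", sorted(downstream))
```

With five services alerting, the heuristic narrows the starting point to `postgres` and `search`, and marks `frontend`, `checkout`, and `payment-api` as probable downstream effects.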

According to Komodor's 2025 Enterprise Kubernetes Report, nearly 80% of production outages trace back to system changes. A bad ConfigMap change takes down your ingress controller, but your alerts scream about database connection failures. The actual culprit is buried in Kubernetes event logs three layers removed from where you're looking.

Data fragmentation increases cognitive load

Logs live in one place, metrics in another, traces in a third. During an active incident, you toggle between Grafana for metrics, Elasticsearch for logs, Jaeger for traces, Slack for coordination, and your alerting tool. Research shows context switching increases Mean Time to Resolution (MTTR) because teams lose critical thinking time rebuilding mental models across fragmented interfaces.

The typical coordination tax is 10-15 minutes per incident spent just assembling the team, finding context, and setting up communication channels before actual troubleshooting begins.

Key requirements for microservices incident management

Managing incidents in ephemeral infrastructure requires capabilities that traditional tools don't provide. Your platform must parse alert metadata intelligently, correlate runtime failures with deployment events, and capture evidence before containers disappear. Here are the three non-negotiable requirements.

Deep integration with Prometheus and Datadog

Generic webhook forwarding is not enough for Kubernetes environments. Your incident management platform must parse Prometheus Alertmanager payloads to extract labels like service:payment-gateway, severity:critical, or cluster:us-east-1 and use that metadata for intelligent routing.
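To make "parse, don't just forward" concrete, here is a minimal sketch of the receiving side. It assumes the JSON body sent by Alertmanager's generic webhook receiver (a top-level `alerts` array whose entries carry `labels`, `annotations`, `status`, `startsAt`, and `fingerprint`) and extracts the `service`, `severity`, and `cluster` labels used in the examples above. Those label names, the `warning` default for alerts without a severity, and the sample payload are assumptions about your schema.

```python
import json
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ParsedAlert:
    fingerprint: str
    status: str  # "firing" or "resolved"
    alertname: str
    service: Optional[str]
    severity: str
    cluster: Optional[str]
    summary: Optional[str]
    starts_at: str


def parse_alertmanager_webhook(body: str) -> List[ParsedAlert]:
    """Turn an Alertmanager webhook body into structured alerts for routing."""
    payload = json.loads(body)
    parsed = []
    for alert in payload.get("alerts", []):
        labels = alert.get("labels", {})
        annotations = alert.get("annotations", {})
        parsed.append(
            ParsedAlert(
                fingerprint=alert.get("fingerprint", ""),
                status=alert.get("status", payload.get("status", "firing")),
                alertname=labels.get("alertname", "unknown"),
                service=labels.get("service"),
                # Assumption: treat alerts without a severity label as warnings.
                severity=labels.get("severity", "warning"),
                cluster=labels.get("cluster"),
                summary=annotations.get("summary"),
                starts_at=alert.get("startsAt", ""),
            )
        )
    return parsed


# Trimmed, hypothetical example body.
SAMPLE_BODY = json.dumps({
    "version": "4",
    "status": "firing",
    "receiver": "incident-platform",
    "alerts": [
        {
            "status": "firing",
            "labels": {"alertname": "HighLatency", "service": "payment-gateway",
                       "severity": "critical", "cluster": "us-east-1"},
            "annotations": {"summary": "p99 latency above 5s"},
            "startsAt": "2026-01-19T10:04:00Z",
            "fingerprint": "a1b2c3",
        },
        {
            "status": "resolved",
            "labels": {"alertname": "PodRestarting", "service": "checkout",
                       "cluster": "us-east-1"},
            "annotations": {},
            "startsAt": "2026-01-19T09:58:00Z",
            "fingerprint": "d4e5f6",
        },
    ],
})

if __name__ == "__main__":
    for alert in parse_alertmanager_webhook(SAMPLE_BODY):
        print(alert)
```

Once alerts are structured objects rather than opaque JSON, routing decisions can key on `service`, `severity`, and `cluster` directly.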

When Alertmanager receives an alert from Prometheus, it groups, deduplicates, and routes the alert to the appropriate receivers according to its configuration. A deep integration means your incident tool understands this structure natively instead of just forwarding raw JSON.
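The grouping and deduplication step can be illustrated in a few lines. This is a simplified, in-memory sketch of the idea behind Alertmanager's `group_by` setting, not its actual implementation: alerts that share the `group_by` label values land in one group (one notification), and alerts with an identical label set collapse into one. Real Alertmanager also applies timing (`group_wait`, `group_interval`), silences, and inhibition, all omitted here.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

GroupKey = Tuple[Tuple[str, str], ...]


def group_alerts(alerts: List[Dict], group_by: List[str]) -> Dict[GroupKey, List[Dict]]:
    """Group alerts by the given label names and drop exact label-set duplicates."""
    groups: Dict[GroupKey, Dict[Tuple, Dict]] = defaultdict(dict)
    for alert in alerts:
        labels = alert["labels"]
        key = tuple((name, labels.get(name, "")) for name in group_by)
        # Alerts with the same full label set are the same alert; keep the latest copy.
        identity = tuple(sorted(labels.items()))
        groups[key][identity] = alert
    return {key: list(unique.values()) for key, unique in groups.items()}


if __name__ == "__main__":
    incoming = [
        {"labels": {"alertname": "HighLatency", "service": "payment-gateway", "cluster": "us-east-1"}},
        {"labels": {"alertname": "HighLatency", "service": "payment-gateway", "cluster": "us-east-1"}},
        {"labels": {"alertname": "HighErrorRate", "service": "payment-gateway", "cluster": "us-east-1"}},
        {"labels": {"alertname": "HighLatency", "service": "checkout", "cluster": "us-east-1"}},
    ]
    for key, members in group_alerts(incoming, ["cluster", "service"]).items():
        print(dict(key), [m["labels"]["alertname"] for m in members])
```

Grouping by `cluster` and `service`, the four incoming alerts collapse into two groups: one for `payment-gateway` holding two distinct alerts, and one for `checkout`.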

The default configuration for Prometheus Alertmanager integration is designed for Kubernetes environments using standard labels, but customization is required to match your specific label schema. Your platform must parse annotations and labels to auto-assign incidents to the correct service owner from your service catalog.
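Customizing for your label schema often comes down to deciding which label identifies the service, then looking that value up in the catalog. The sketch below assumes a hypothetical schema in which the service name may arrive as `service`, `app`, or `job`, plus a hypothetical in-memory catalog and fallback rotation name. Alerts that match nothing fall back to a triage rotation instead of being dropped.

```python
from typing import Dict, Mapping, Optional, Sequence, Tuple

# Hypothetical catalog: service name -> ownership metadata.
CATALOG: Dict[str, Dict[str, str]] = {
    "payment-api": {"team": "payments", "tier": "critical"},
    "checkout": {"team": "checkout", "tier": "critical"},
}

# Hypothetical label schema: labels that may carry the service name, in priority order.
SERVICE_LABEL_ALIASES: Sequence[str] = ("service", "app", "job")


def resolve_owner(
    labels: Mapping[str, str],
    catalog: Mapping[str, Mapping[str, str]] = CATALOG,
    aliases: Sequence[str] = SERVICE_LABEL_ALIASES,
    fallback_team: str = "platform-triage",
) -> Tuple[Optional[str], str]:
    """Return (service, owning team) for an alert's labels."""
    for label in aliases:
        service = labels.get(label)
        if service is not None and service in catalog:
            return service, catalog[service]["team"]
    # Nothing matched the catalog: route to a catch-all rotation rather than drop the alert.
    return None, fallback_team


if __name__ == "__main__":
    print(resolve_owner({"app": "payment-api", "severity": "critical"}))
```

Checking several candidate labels in a fixed priority order keeps routing working while teams migrate between labeling conventions.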

Watch this hands-on introduction to incident.io Catalog to see how service mapping enables automatic routing based on Prometheus labels.

Kubernetes deployment correlation and root cause analysis

Nearly 80% of production incidents trace back to changes. Your incident platform must link alerts to recent deployment events. Komodor gathers all events regardless of source and presents them in a unified timeline: the deployment image upgraded via CI/CD, the HorizontalPodAutoscaler changing replica counts, and the ConfigMap modification that actually broke the service.
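At its core, a unified change timeline is a merge of timestamped events from independent sources. The sketch below shows that merge for three hypothetical sources (CI/CD, the Kubernetes API, and alerting). Fetching events from each real system is left out, and all names and times are illustrative.

```python
from datetime import datetime, timezone
from itertools import chain
from typing import Iterable, List, NamedTuple


class Event(NamedTuple):
    at: datetime
    source: str
    description: str


def merge_timelines(*sources: Iterable[Event]) -> List[Event]:
    """Combine events from several sources into one chronological list."""
    # sorted() is stable, so events with identical timestamps keep their input order.
    return sorted(chain.from_iterable(sources), key=lambda event: event.at)


def at_minute(minute: int) -> datetime:
    """Hypothetical helper: 10:MM UTC on the example day."""
    return datetime(2026, 1, 19, 10, minute, tzinfo=timezone.utc)


CI_EVENTS = [Event(at_minute(0), "ci", "payment-api image upgraded to v2.3.1")]
K8S_EVENTS = [
    Event(at_minute(1), "k8s", "HorizontalPodAutoscaler scaled payment-api from 3 to 6 replicas"),
    Event(at_minute(2), "k8s", "ConfigMap payment-config modified"),
]
ALERT_EVENTS = [Event(at_minute(4), "alert", "HighErrorRate firing for payment-api")]

if __name__ == "__main__":
    for event in merge_timelines(ALERT_EVENTS, K8S_EVENTS, CI_EVENTS):
        print(f"{event.at:%H:%M} [{event.source}] {event.description}")
```

Read in order, the merged timeline puts the image upgrade, the scaling event, and the ConfigMap change on one screen, ahead of the first alert.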

Deployment correlation works by tracking events from multiple sources. incident.io's GitHub integration enables automatic syncing, so when a deployment triggers an incident, the timeline shows exactly which commit, pull request, and deployment caused the issue.

Some platforms go further with code-to-cloud correlation, tracing runtime incidents back to vulnerable source code and Infrastructure as Code templates so you can remediate the root cause rather than the symptoms.

Automated timeline capture for ephemeral infrastructure

Since containers and pods can disappear within seconds, the timeline becomes your only source of truth. Manual note-taking during incidents is unreliable. You need automatic capture of alert trigger times, autoscaling events, role assignments, and all human actions.
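The key property is that each entry is stamped by the system at the moment it happens, not reconstructed afterward from memory. Below is a minimal sketch of such a recorder, with an injectable clock so it can be tested deterministically. The entry kinds and actors are illustrative, and a real platform would persist entries instead of holding them in memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List


@dataclass(frozen=True)
class TimelineEntry:
    at: datetime
    kind: str    # e.g. "alert", "scaling", "role", "action"
    actor: str
    detail: str


def utc_now() -> datetime:
    return datetime.now(timezone.utc)


@dataclass
class IncidentTimeline:
    clock: Callable[[], datetime] = utc_now
    entries: List[TimelineEntry] = field(default_factory=list)

    def record(self, kind: str, actor: str, detail: str) -> TimelineEntry:
        """Append an entry stamped with the current time; entries are never edited."""
        entry = TimelineEntry(self.clock(), kind, actor, detail)
        self.entries.append(entry)
        return entry

    def to_markdown(self) -> str:
        """Render the timeline as a bullet list for a post-mortem draft."""
        return "\n".join(
            f"- {e.at:%H:%M:%S} [{e.kind}] {e.actor}: {e.detail}" for e in self.entries
        )


if __name__ == "__main__":
    timeline = IncidentTimeline()
    timeline.record("alert", "alertmanager", "HighErrorRate firing for payment-api")
    timeline.record("scaling", "kubernetes", "payment-api scaled from 3 to 6 replicas")
    timeline.record("role", "alice", "assigned as incident lead")
    print(timeline.to_markdown())
```

Because entries are append-only and time-stamped on arrival, the post-mortem draft is a rendering of data already collected, not a reconstruction.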

incident.io captures everything automatically: every Slack message in the incident channel, every slash command execution, every role assignment, and every status update. When you resolve an incident, the platform has already built your post-mortem timeline using captured data.

Watch Scribe's automatic incident call transcription to see how real-time transcription captures decisions without requiring a dedicated note-taker during incidents.

"incident.io saves us hours per incident when considering the need for us to write up the incident, root cause and actions, communicate it to wider stakeholders and overall reporting." - Verified User Review on G2

Top incident management tools for DevOps teams

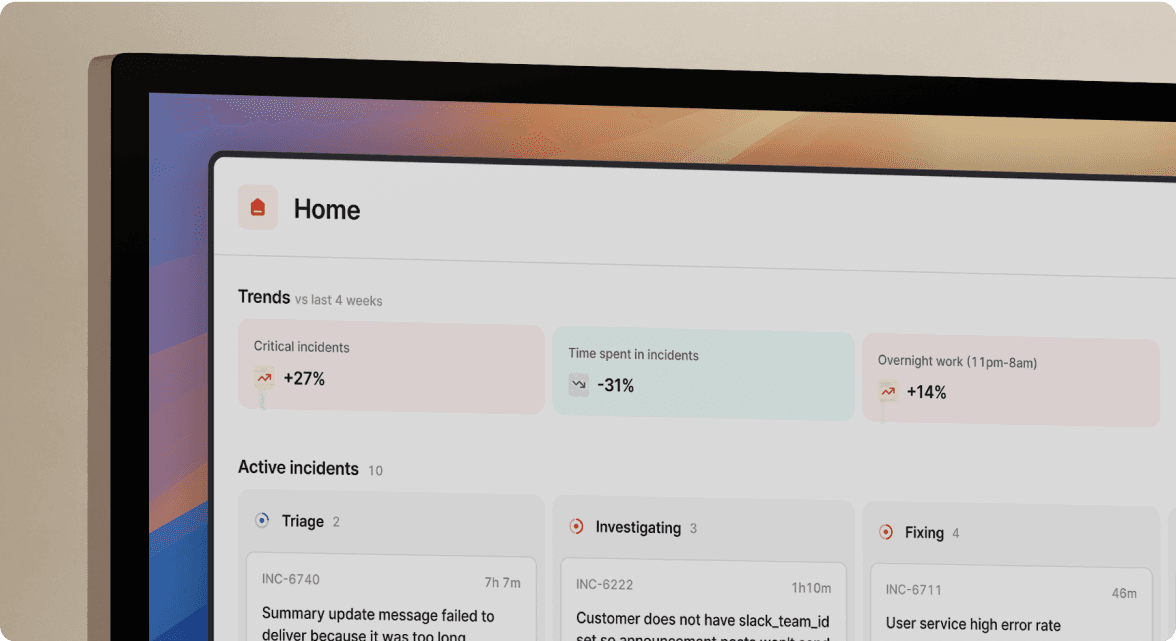

incident.io: The Slack-native command center

incident.io is purpose-built for coordination in distributed systems. Rather than being a web-first tool with Slack notifications bolted on, it runs the entire incident lifecycle inside Slack through slash commands and channel interactions.

The Service Catalog connects your systems, features, and team owners so alerts route correctly. For teams using Backstage, catalog-importer supports catalog-info.yaml files natively. When a Prometheus alert fires for service:payment-api, incident.io pages the payments team, pulls in the database SRE, and surfaces recent deployment history automatically.

Workflow automation handles channel creation, on-call paging, and context population. AI SRE autonomously investigates incidents, automating up to 80% of incident response. Watch how to automate incident resolution with AI SRE from the SEV0 London 2025 conference.

For configuration details, review migrating Datadog monitors to incident.io and creating incidents automatically via alerts.

PagerDuty: The enterprise alerting standard

PagerDuty remains the established incumbent for alerting and on-call management. It integrates with over 700 tools, including Datadog and New Relic, to consolidate alerts and reduce noise. Teams can set up escalation policies that automatically notify the right people via SMS, email, or phone if incidents are not acknowledged promptly.
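An escalation policy is essentially a schedule keyed on how long an alert has gone unacknowledged. The sketch below models a generic, hypothetical three-level policy to show the mechanism. It is not PagerDuty's configuration format or API, and the delays, targets, and channels are placeholders.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class EscalationLevel:
    after_minutes: int           # minutes unacknowledged before this level is notified
    targets: Tuple[str, ...]
    channels: Tuple[str, ...]


# Hypothetical policy: push first, then SMS and phone, then widen the circle.
POLICY: List[EscalationLevel] = [
    EscalationLevel(0, ("primary-oncall",), ("push",)),
    EscalationLevel(5, ("primary-oncall",), ("sms", "phone")),
    EscalationLevel(15, ("secondary-oncall", "engineering-manager"), ("phone", "email")),
]


def levels_due(policy: List[EscalationLevel], minutes_unacknowledged: float) -> List[EscalationLevel]:
    """Return every level that should have been notified by now."""
    return [level for level in policy if minutes_unacknowledged >= level.after_minutes]


def next_escalation_in(policy: List[EscalationLevel], minutes_unacknowledged: float) -> Optional[float]:
    """Minutes until the next level fires, or None if the policy is exhausted."""
    upcoming = [level.after_minutes for level in policy if level.after_minutes > minutes_unacknowledged]
    return min(upcoming) - minutes_unacknowledged if upcoming else None


if __name__ == "__main__":
    for minutes in (0, 7, 20):
        notified = [(lvl.targets, lvl.channels) for lvl in levels_due(POLICY, minutes)]
        print(minutes, notified, next_escalation_in(POLICY, minutes))
```

In this model, acknowledging the alert simply means the caller stops evaluating the policy, so no further levels are notified.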

The mobile-first approach via iOS and Android apps allows engineers to acknowledge alerts, join conference bridges, and update incident status while away from their desks. For teams running global on-call rotations across time zones, PagerDuty's reliability is battle-tested.

The fundamental limitation is fragmentation: the alert fires in PagerDuty, coordination happens in Slack, tickets are created in Jira, and post-mortems are written in Confluence. Every context switch adds cognitive load during Kubernetes incidents, when you're already debugging ephemeral pods and cascading failures.

If you're considering migration, check out these tools to make migrating from PagerDuty easier.

Grafana OnCall: The open-source Prometheus companion

Grafana OnCall is the natural choice for teams already invested in Grafana dashboards and alerting. Its data-native approach links alert details, visualization, and on-call handoff so responders can move from signal to action quickly.

OnCall, part of the Grafana Cloud IRM app, delivers context-rich notifications with metrics, logs, and related information for informed decisions. For SRE and DevOps workflows, this tight coupling reduces context switching when your observability stack is Grafana-centric.

The trade-off: if your stack isn't Grafana-focused, you'll have less flexibility pulling in equivalent context from other observability tools. Grafana IRM's strengths are simplicity and visualization, which make it a good fit for smaller teams; a common limitation is less incident response automation and fewer AI-based features.

Important note: Grafana discontinued active development of OnCall OSS as of March 11, 2025, with archival scheduled for March 24, 2026. While you can continue using OnCall OSS in its current state, no further updates or new features will be introduced.

Komodor: The Kubernetes-native troubleshooting companion

Komodor is a Kubernetes troubleshooting tool specifically designed for developers, combining a live view of your cluster with integrations for metric providers you already have installed. Its purpose is incident investigation and diagnosis, not end-to-end incident management.

Komodor automatically detects, investigates, troubleshoots, and fixes issues, providing instant visibility into your clusters. The platform visualizes change timelines, dependency graphs, and correlated events that help answer "what changed?" during K8s incidents.

Komodor understands all events that happened in your application regardless of source. If a deployment image was upgraded by CI/CD and a HorizontalPodAutoscaler changed replica counts simultaneously, Komodor gathers all these events and presents them in chronological order.

Komodor does not offer end-to-end incident management features such as on-call scheduling, status pages, or post-mortem management. In a combined setup, you would declare and coordinate the incident in incident.io (mobilizing the team and creating communication channels), then use Komodor from within that incident channel to diagnose the root cause quickly.

Watch this DevOps Panel on the State of Modern Incident Management to understand how different tools serve different phases of the incident lifecycle.

Comparison: Feature matrix for Kubernetes-centric teams

| Feature | incident.io | PagerDuty | Grafana OnCall | Komodor |
|---|---|---|---|---|
| Prometheus/Alertmanager integration | Native label parsing, intelligent routing | Webhook forwarding, basic parsing | Native Grafana integration | Metric visualization |
| Deployment correlation | GitHub/GitLab timeline integration | Manual or via extensions | Limited | Deep K8s change tracking |
| Slack-native actions | Full incident lifecycle via slash commands | Notifications only, web UI required | Notifications only | No Slack integration |
| Automated timeline capture | Real-time capture + AI transcription | Manual or third-party tools | Basic event capture | K8s event capture only |
| Service catalog / dependency mapping | Built-in with Backstage import | Via ServiceNow integration (Enterprise) | Via Grafana Cloud services | Visualizes K8s dependencies |
| AI-powered root cause analysis | Autonomous investigation and fix generation | AIOps add-on (extra cost) | Not available | Pattern detection |

How to build a Kubernetes-ready incident workflow

Step 1: Tag everything with labels and annotations

Kubernetes is configured through declarative manifests, usually YAML files, that describe the state you want the cluster to reach. Maintain good YAML hygiene by adding metadata such as labels, annotations, environment variables, version tags, and business-specific data. Your incident tool can then use these labels as routing intelligence.

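For example, labeling services with team:payments, tier:critical, and environment:production lets Prometheus alerts route automatically to the correct on-call rotation and pull in the appropriate service owners, provided those labels are carried onto the alerts (for example, through relabeling in your Prometheus configuration).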
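A lightweight way to enforce that hygiene is a CI check that rejects manifests missing the routing labels. The sketch below works on manifests already parsed into dictionaries (for example by a YAML loader). The required label set mirrors the example above, while the allowed values (`standard`, `best-effort`, `staging`, `development`) are hypothetical and should be adapted to your own schema. It checks only `metadata.labels`; you may also want to check `spec.template.metadata.labels`, since those are the labels the Pods themselves carry.

```python
from typing import Any, Dict, List, Set

REQUIRED_LABELS: Set[str] = {"team", "tier", "environment"}
# Hypothetical allowed values; adjust to your own conventions.
ALLOWED_VALUES: Dict[str, Set[str]] = {
    "tier": {"critical", "standard", "best-effort"},
    "environment": {"production", "staging", "development"},
}


def label_problems(manifest: Dict[str, Any]) -> List[str]:
    """Return human-readable problems with a manifest's routing labels."""
    meta = manifest.get("metadata", {})
    labels = meta.get("labels") or {}
    name = f"{manifest.get('kind', '?')}/{meta.get('name', '?')}"
    problems = [f"{name}: missing label '{key}'" for key in sorted(REQUIRED_LABELS - set(labels))]
    for key, allowed in ALLOWED_VALUES.items():
        value = labels.get(key)
        if value is not None and value not in allowed:
            problems.append(f"{name}: label '{key}' has unexpected value '{value}'")
    return problems


if __name__ == "__main__":
    manifests = [
        {"kind": "Deployment", "metadata": {"name": "payment-api", "labels": {
            "team": "payments", "tier": "critical", "environment": "production"}}},
        {"kind": "Deployment", "metadata": {"name": "legacy-worker", "labels": {
            "tier": "gold", "environment": "production"}}},
    ]
    for manifest in manifests:
        for problem in label_problems(manifest):
            print(problem)
```

Failing the build on any reported problem keeps unroutable services from reaching production in the first place.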

Step 2: Map services to owners in your incident tool

Rapid situational awareness requires a service catalog that provides a single view of all services across clusters. It structures your inventory of services, owners, dependencies, and metadata, enabling automated alert routing and context delivery during incidents.

If you use incident.io, you can import your existing service catalog data. See the documentation on migrating Datadog monitors to incident.io for step-by-step instructions.

Step 3: Automate the "Assemble" phase

When alerts fire, automatically create dedicated Slack channels, page on-call engineers via push, SMS, and phone, and pre-populate channels with triggering alerts, service catalog context, and auto-assigned incident leads.
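The assemble step can be expressed as a function from a firing alert and its catalog entry to a plan that automation then executes. The sketch below only builds that plan. It does not call Slack or any paging API, and the catalog fields (`team`, `also_page`, `lead_rotation`, `runbook`), the fallback rotation, and the channel naming scheme are hypothetical. Channel names are lowercased, limited to letters, digits, hyphens, and underscores, and capped at 80 characters to fit Slack's channel-name rules.

```python
import re
from datetime import datetime, timezone
from typing import Any, Dict, Mapping

# Hypothetical catalog entry shape.
CATALOG: Dict[str, Dict[str, Any]] = {
    "payment-api": {
        "team": "payments",
        "also_page": ["database-sre"],
        "lead_rotation": "payments-primary",
        "runbook": "https://example.com/runbooks/payment-api",
    }
}


def slack_channel_name(raw: str) -> str:
    """Lowercase, replace disallowed characters with hyphens, cap at 80 characters."""
    return re.sub(r"[^a-z0-9_-]+", "-", raw.lower())[:80]


def assemble_plan(labels: Mapping[str, str], opened_at: datetime,
                  catalog: Mapping[str, Mapping[str, Any]] = CATALOG) -> Dict[str, Any]:
    """Describe the channel, pages, lead, and pinned context for a new incident."""
    service = labels.get("service", "unknown")
    entry = catalog.get(service, {})
    return {
        "channel": slack_channel_name(f"inc-{opened_at:%Y%m%d-%H%M}-{service}"),
        "page": [entry.get("team", "platform-triage"), *entry.get("also_page", [])],
        "page_via": ["push", "sms", "phone"],
        "incident_lead_from": entry.get("lead_rotation", "platform-triage"),
        "pinned_context": [
            f"Alert: {labels.get('alertname', 'unknown')} ({labels.get('severity', 'unknown')})",
            f"Runbook: {entry.get('runbook', 'none registered')}",
        ],
    }


if __name__ == "__main__":
    alert_labels = {"alertname": "HighErrorRate", "service": "payment-api", "severity": "critical"}
    print(assemble_plan(alert_labels, datetime.now(timezone.utc)))
```

Keeping plan construction separate from execution makes the assemble logic easy to test and to review before it ever pages a human.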

This automation eliminates the typical coordination tax of 10-15 minutes per incident spent just assembling the team and finding context before actual troubleshooting begins.

For Prometheus users experiencing alert delivery issues, troubleshoot using this guide: Why are my Prometheus Alertmanager alerts not appearing in incident.io?

Step 4: Correlate changes with deployment events

Pipe GitHub, GitLab, or CI/CD events into your incident timeline. Komodor gathers all events and presents them in chronological order, showing deployment image upgrades, autoscaler replica changes, and ConfigMap modifications on one screen.

This correlation is critical because nearly 80% of production outages trace back to system changes. When responders can immediately see "Payment API v2.3.1 deployed 4 minutes before first alert," they skip hours of investigation.
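A view like "deployed 4 minutes before first alert" is a windowed query over recent changes. The sketch below assumes a hypothetical list of change events and a 30-minute look-back window (a tunable assumption, not a standard). It drops changes made after the alert and ranks changes to the alerting service ahead of others, closest first.

```python
from datetime import datetime, timedelta, timezone
from typing import List, NamedTuple


class Change(NamedTuple):
    at: datetime
    service: str
    description: str


def suspect_changes(alert_at: datetime, alert_service: str, changes: List[Change],
                    window: timedelta = timedelta(minutes=30)) -> List[Change]:
    """Changes in the window before the alert: alerting service first, then most recent."""
    in_window = [c for c in changes if timedelta(0) <= alert_at - c.at <= window]
    return sorted(in_window, key=lambda c: (c.service != alert_service, alert_at - c.at))


def utc(hour: int, minute: int) -> datetime:
    """Hypothetical helper: a time on the example day, in UTC."""
    return datetime(2026, 1, 19, hour, minute, tzinfo=timezone.utc)


CHANGES = [
    Change(utc(8, 0), "payment-api", "v2.3.0 deployed"),    # outside the window
    Change(utc(9, 50), "checkout", "v1.8.2 deployed"),
    Change(utc(10, 0), "payment-api", "v2.3.1 deployed"),
    Change(utc(10, 6), "search", "v4.0.0 deployed"),        # after the alert
]

if __name__ == "__main__":
    alert_time = utc(10, 4)
    for change in suspect_changes(alert_time, "payment-api", CHANGES):
        minutes = int((alert_time - change.at).total_seconds() // 60)
        print(f"{change.service} {change.description} {minutes} minutes before first alert")
```

For an alert on `payment-api` at 10:04, the payment-api v2.3.1 deployment (4 minutes earlier) is listed first, followed by the checkout deployment 14 minutes earlier.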

Watch this video on DevOps with AI: Microtica's AI Agent for Incident Response to see how modern platforms surface deployment correlation automatically.

Reducing MTTR in distributed systems

You need specialized tooling for Kubernetes incidents because the infrastructure you're debugging constantly recreates itself. Generic incident management platforms assume stable servers and persistent logs. In container orchestration, those assumptions break.

The incident management layer you choose must parse ephemeral events before they disappear, correlate alerts across distributed services to identify root causes, and capture timelines automatically because manual reconstruction is impossible when pods live for 90 seconds.

incident.io reduces coordination overhead through Slack-native workflows, deep Prometheus and Datadog integrations that parse labels for intelligent routing, and AI-powered investigation. PagerDuty provides enterprise-grade alerting but forces context-switching. Grafana OnCall serves Grafana-centric stacks. Komodor excels at K8s troubleshooting but complements rather than replaces end-to-end platforms.

For teams running modern microservices architectures, the real question is which combination of coordination, alerting, and troubleshooting platforms matches your stack.

Try incident.io in a free demo and run your first Kubernetes incident in Slack to see how Slack-native workflows compare to web-first platforms.

Key terminology

Ephemeral workloads: Kubernetes containers that are short-lived and constantly created, altered, and removed, making traditional debugging approaches ineffective because evidence disappears when pods restart.

CrashLoopBackOff: A Kubernetes status indicating that a container repeatedly crashes after starting and the kubelet is waiting, with an increasing back-off delay, before restarting it again. Common causes include application bugs, missing dependencies, and resource constraints.

Deployment correlation: Linking production alerts to recent code deployments or configuration changes by tracking events from CI/CD pipelines and version control systems on incident timelines.

Service catalog: A structured inventory of microservices, owners, dependencies, and metadata used by incident management platforms to route alerts correctly and surface relevant context during incidents.
