Best incident management tools for platform engineering teams: Reducing toil and improving MTTR

Updated January 30, 2026

TL;DR: Platform teams waste significant time manually routing incidents before troubleshooting starts. The fix: treat incident management as a self-service IDP capability. Map services to owners in a Service Catalog, automate channel creation through Slack-native workflows, and use AI to reduce cognitive load. This approach cuts coordination overhead and improves MTTR, eliminating the toil that burns out on-call engineers.

You built a Kubernetes platform to automate deployments. Your infrastructure-as-code pipeline provisions resources in minutes. Your CI/CD system ships code without manual intervention. Yet when a production alert fires at 3 AM, you're still playing human switchboard operator, manually figuring out which team owns the failing service and hunting through outdated runbooks to find the right person to page.

This is the platform engineering paradox. We automate infrastructure but leave incident response manual, fragmented, and toil-heavy. Platform teams accidentally become incident help desks, fielding every alert because service ownership is unclear and routing logic lives in someone's head instead of in code.

The way out is applying the same platform engineering principles that transformed your deployment pipeline to incident management itself. Shift from centralized "gatekeeper" to automated "guardrails." Build self-service incident response as a capability of your IDP. Let service owners declare and manage their own incidents using the tooling you provide, while you focus on maintaining the platform infrastructure and automation that makes self-service response possible.

Why platform teams become incident bottlenecks

Problem: When service ownership is unclear or undocumented, platform teams become the default catch-all for every production alert. An alert fires for a service you don't own. You get paged because you're "infrastructure." You dig through Slack history, Git logs, and tribal knowledge to figure out which team built the service, then hunt for their on-call contact. By the time the actual service owner joins, critical minutes have evaporated.

Impact: This coordination tax compounds quickly. Research shows operations teams spend 15 to 30 minutes manually checking dependent services, alert dashboards, and logs in typical incident scenarios. The typical breakdown shows 12 minutes assembling the team before troubleshooting starts, with total median MTTR for P1 incidents reaching 45 to 60 minutes.

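The Google SRE book defines toil as "the kind of work tied to running a production service that tends to be manual, repetitive, automatable, tactical, devoid of enduring value, and that scales linearly as a service grows." Manual incident routing checks every box. In Google's own survey, SREs reported spending about 33% of their time on toil on average.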

Context switching hell: The coordination tax is five tools and twelve minutes of logistics before troubleshooting starts. You toggle between PagerDuty for the alert, Datadog for metrics, Slack for communication, Jira for tickets, and Confluence for runbooks. Each context switch adds cognitive load at the exact moment you need focus.

"Easy of use and fast feature releases with excellent support that helps us almost immediately when we have a problem. I always thought that Incident Management is a hard thing to do. Incident.io change my opinion 🚀" - Felipe S. on G2

The platform approach: Shifting from gatekeeper to guardrails

The fix is reconceptualizing incident management not as a separate operational process but as a product capability within your Internal Developer Platform (IDP).

Incident management as IDP capability: Modern IDPs exist to enable development team velocity through self-service. IDPs integrate Site Reliability Engineering practices to streamline service reliability management, especially in incident detection and response. Connecting to tools like Jira Service Management enables automated alerts, root cause analysis, and incident tracking to populate the IDP, reducing time spent searching during incidents.

Golden paths for incident response: Just as you provide golden paths for deployment, provide golden paths for incident response. Pre-defined workflows differentiate SEV1 critical incidents (auto-page VP Engineering, create war room, start bridge call) from SEV3 moderate incidents (notify team Slack channel, create ticket, standard post-mortem). Service teams follow the path appropriate to severity without custom scripting.
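The severity-based paths above can be sketched as a simple lookup table. This is a minimal illustration, not incident.io's configuration format: the `Severity` enum, the `GOLDEN_PATHS` table, and the step names are hypothetical, and only the SEV1 and SEV3 paths described above are filled in.

```python
from enum import Enum


class Severity(Enum):
    SEV1 = 1
    SEV2 = 2
    SEV3 = 3


# Hypothetical step names mirroring the SEV1 and SEV3 examples in the text.
GOLDEN_PATHS = {
    Severity.SEV1: (
        "page_vp_engineering",
        "create_war_room",
        "start_bridge_call",
    ),
    Severity.SEV3: (
        "notify_team_channel",
        "create_ticket",
        "standard_postmortem",
    ),
}


def plan_response(severity: Severity) -> tuple[str, ...]:
    """Return the ordered workflow steps for a severity level."""
    try:
        return GOLDEN_PATHS[severity]
    except KeyError:
        # Fail loudly so a missing path is noticed before an incident needs it.
        raise ValueError(f"No golden path defined for {severity.name}") from None


if __name__ == "__main__":
    for step in plan_response(Severity.SEV1):
        print(step)
```

Keeping the paths in data rather than in per-team scripts is what lets service teams follow them without custom scripting.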

Self-service declaration: Application teams declare and manage their own incidents using centralized tooling you provide. A developer types /inc in Slack, selects "declare incident," describes the issue, and sets a severity. The platform handles the rest. No ticket to the platform team. No waiting for someone to create a channel manually.

Automated guardrails: Policy enforcement happens automatically. Every incident must have a severity assigned within a defined timeframe, or it auto-escalates. SEV1 incidents require regular status updates. Post-mortems are mandatory for SEV1 and SEV2 incidents. The platform enforces process without platform team intervention.
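As a rough sketch of what such guardrails look like in code, the function below checks one incident against the three policies just described. The `Incident` fields, the violation labels, and both time thresholds are assumptions made for illustration; the article does not specify concrete timeframes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Assumed thresholds, chosen only for the example.
SEVERITY_DEADLINE = timedelta(minutes=10)
SEV1_UPDATE_INTERVAL = timedelta(minutes=30)


@dataclass
class Incident:
    declared_at: datetime
    severity: Optional[str] = None  # "SEV1", "SEV2", "SEV3", or None if unset
    last_status_update: Optional[datetime] = None
    resolved: bool = False
    postmortem_published: bool = False


def guardrail_violations(incident: Incident, now: datetime) -> list[str]:
    """Return the policy actions this incident currently triggers."""
    violations = []

    # Severity must be assigned within the deadline, otherwise escalate.
    if incident.severity is None and now - incident.declared_at > SEVERITY_DEADLINE:
        violations.append("auto_escalate:severity_unassigned")

    # Open SEV1 incidents need a status update at a regular interval.
    if incident.severity == "SEV1" and not incident.resolved:
        last = incident.last_status_update or incident.declared_at
        if now - last > SEV1_UPDATE_INTERVAL:
            violations.append("remind:status_update_overdue")

    # Resolved SEV1 and SEV2 incidents require a published post-mortem.
    if (
        incident.resolved
        and incident.severity in ("SEV1", "SEV2")
        and not incident.postmortem_published
    ):
        violations.append("block_close:postmortem_required")

    return violations
```

Running a check like this on a timer is one way to enforce process without a human from the platform team chasing each incident.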

"Incident.io is a fantastic platform for managing incidents, customer status pages, and infrastructure catalogs. We love that it is slack-centric, but also web-native." - Verified user on G2

Structuring incident response for Internal Developer Platforms

The Service Catalog is the brain of automated incident response. You cannot route alerts correctly without a source of truth mapping services to owners, dependencies, and escalation policies.

Service Catalog as routing foundation: We map alerts to services, services to teams, and automatically page the right people based on ownership, every time. When alerts connect to our platform, Catalog translates metadata into action by identifying the owning team and automatically routing the alert to the right Slack channel, on-call engineer, or escalation policy.
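To make the alert-to-service-to-team chain concrete, here is a minimal sketch of catalog-based routing. It is not the incident.io API: the `Team` type, the `SERVICE_OWNERS` and `TEAMS` tables, and the alert's `service` key are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Team:
    name: str
    slack_channel: str
    escalation_policy: str


# Hypothetical catalog data: services map to teams, teams map to routing targets.
TEAMS = {
    "payments": Team("payments", "#team-payments", "payments-primary"),
    "platform": Team("platform", "#team-platform", "platform-primary"),
}
SERVICE_OWNERS = {
    "checkout-api": "payments",
    "k8s-ingress": "platform",
}


def route_alert(alert: dict) -> Team:
    """Resolve an alert to its owning team using catalog metadata."""
    service = alert.get("service")
    owner = SERVICE_OWNERS.get(service)
    if owner is None:
        # Surface catalog gaps as errors instead of silently paging platform.
        raise LookupError(f"No owner recorded for service {service!r}")
    return TEAMS[owner]


if __name__ == "__main__":
    team = route_alert({"service": "checkout-api", "severity": "SEV2"})
    print(team.slack_channel, team.escalation_policy)
```

Raising on an unknown service is a deliberate choice in this sketch: a missing owner becomes a visible catalog defect rather than another page for the platform team.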

Dependency mapping: The dependency graph ensures that when shared infrastructure fails, we automatically notify all affected application teams, not just the infrastructure owner. For example, if six application teams run services that depend on a shared PostgreSQL cluster, all six are notified automatically when it fails.
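Finding every affected team is a graph traversal: start at the failed service and walk to everything that depends on it, directly or transitively. The sketch below assumes a hypothetical `DEPENDS_ON` map and `OWNERS` table; the service and team names are invented for the example.

```python
from collections import deque

# Hypothetical catalog: each service lists the services it depends on.
DEPENDS_ON = {
    "checkout-api": ["postgres-main"],
    "orders-api": ["postgres-main", "checkout-api"],
    "search-api": ["elasticsearch"],
}
OWNERS = {
    "checkout-api": "payments",
    "orders-api": "fulfilment",
    "search-api": "discovery",
    "postgres-main": "platform",
    "elasticsearch": "platform",
}


def affected_teams(failed_service: str) -> set[str]:
    """Return owners of the failed service and of everything downstream of it."""
    # Invert the graph so we can walk from a dependency to its dependents.
    dependents: dict[str, set[str]] = {}
    for service, deps in DEPENDS_ON.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(service)

    # Breadth-first search over dependents, including transitive ones.
    seen = {failed_service}
    queue = deque([failed_service])
    while queue:
        current = queue.popleft()
        for service in dependents.get(current, ()):
            if service not in seen:
                seen.add(service)
                queue.append(service)

    return {OWNERS[service] for service in seen}


if __name__ == "__main__":
    print(sorted(affected_teams("postgres-main")))
```

With this data, a failure in `postgres-main` reaches the platform, payments, and fulfilment teams, while the discovery team is left alone because `search-api` does not depend on it.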

We integrate with existing service catalogs like Backstage or can import from YAML/JSON if you maintain service definitions in code. The Getting started with teams documentation walks through initial setup. The Hands-on introduction to incident.io Catalog demonstrates dependency modeling.

Best incident management features for shared infrastructure

Slack-native workflows eliminate context switching: The architectural difference matters. Slack-native means the incident workflow happens in slash commands and channel interactions, not in a web UI that sends notifications to Slack. Engineers declare, manage, and resolve incidents without leaving Slack. Our web dashboard exists for reporting and configuration, but during the actual incident, responders stay in Slack.

Our workflow: Engineers declare incidents, assign roles with /inc role, and update status directly in Slack. When Datadog fires an alert, we auto-create #inc-2847-api-latency, page the on-call engineer, pull in service owners, and start timeline capture. Watch the Slack for Incident Management: ChatOps Guide for workflow demonstrations.
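A channel name like #inc-2847-api-latency can be derived from the incident ID and title. The helper below is a hypothetical sketch of that derivation, not the product's actual naming logic; it assumes Slack's channel naming rules of lowercase names up to 80 characters.

```python
import re

# Assumed limit based on Slack's channel naming rules.
MAX_CHANNEL_LENGTH = 80


def incident_channel_name(incident_id: int, title: str) -> str:
    """Build a Slack-safe channel name such as 'inc-2847-api-latency'."""
    # Collapse every run of non-alphanumeric characters into a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    name = f"inc-{incident_id}-{slug}" if slug else f"inc-{incident_id}"
    # Truncate to the limit and avoid ending on a dangling hyphen.
    return name[:MAX_CHANNEL_LENGTH].rstrip("-")


if __name__ == "__main__":
    print(incident_channel_name(2847, "API latency"))
```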

"Incident.io stands out as a valuable tool for automating incident management and communication, with its effective Slack bot integration leading the way." - Vadym C. on G2

Automated status pages: Internal status pages update automatically when incidents are declared or resolved. Platform teams publish infrastructure status (Kubernetes clusters, shared databases, CI/CD pipeline health) to an internal page that development teams monitor. Severity mapping ensures appropriate visibility.

Integrations with platform tools: Connect the monitoring, infrastructure, and ticketing tools platform teams already use. Datadog and Prometheus for alerts, Grafana for dashboards, Terraform for infrastructure context, and Jira for follow-up tasks. Our API and webhooks enable custom integrations for specialized platform tooling.

Priorities in Alerts and On-call let platform teams define escalation paths matching infrastructure criticality. Building schedules creates rotation calendars that respect team capacity and time zones.

Using AI to automate root cause analysis and reduce cognitive load

Our AI SRE assistant automates up to 80% of incident coordination tasks, freeing platform teams to focus on resolution rather than data gathering.

Capabilities: AI SRE triages and investigates alerts, analyzes root cause, then recommends whether you should act now or defer until later. It connects telemetry, code changes, and past incidents to fix issues faster. The AI SRE demo video shows real-world usage.

During incidents, AI SRE pulls metrics from Grafana, Datadog, or the alerts themselves, explains them, and suggests what they mean, right in the incident Slack thread. It also summarizes recent GitHub changes, which saves time by surfacing deployments that correlate with the incident.

Automated summaries: Get AI-powered summaries and timelines that keep everyone aligned during fast-moving incidents. When leadership asks for updates, AI provides current status without interrupting responders.

Implementation: AI capabilities are available starting with the Team plan, which also includes Slack-native incident response and status pages. The Resolve incidents while you sleep video demonstrates automated incident handling.

"The primary advantage we've seen since adopting incident.io is having a consistent interface to dealing with incidents... Ability to focus entirely on resolving the issue while incident.io makes sure every box is checked has been keenly felt within the engineering teams." - Jack S. on G2

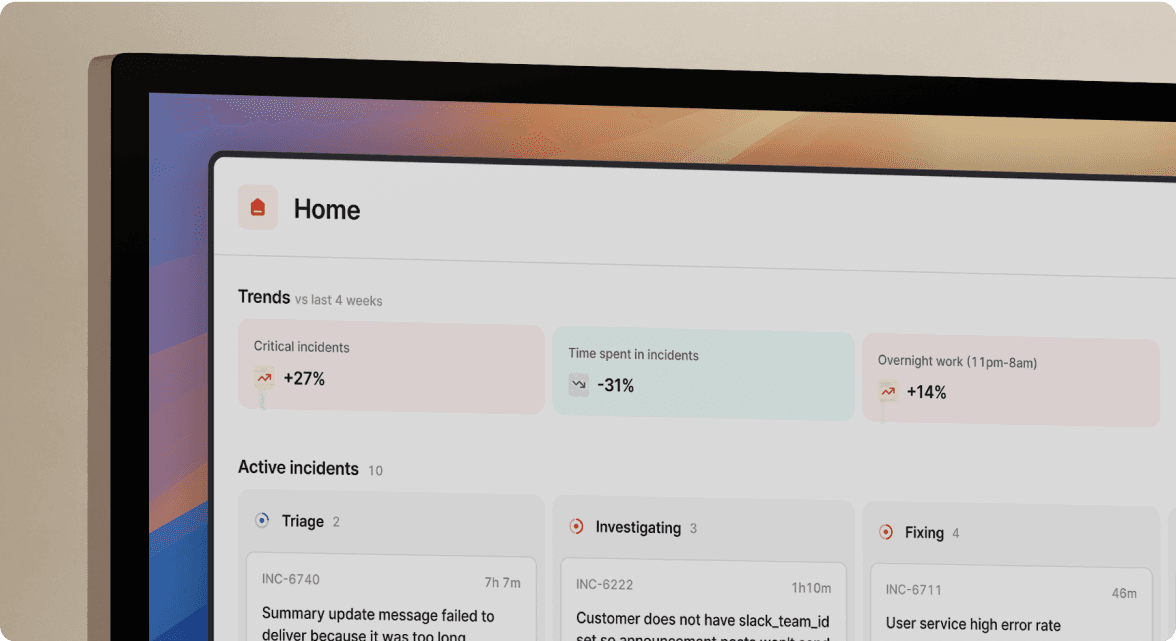

Measuring impact: MTTR, toil reduction, and reliability trends

MTTR reduction: Track Mean Time To Resolution as your primary metric. Favor achieved a 37% MTTR reduction after implementing Slack-native workflows, with a 214% increase in incident detection showing improved visibility.

Coordination overhead breakdown: Measure time from "Alert fires" to "Right people in channel troubleshooting." This metric isolates pure coordination tax from technical resolution time. Research shows teams spend about 12 minutes assembling responders before troubleshooting starts when they coordinate manually across Slack and Jira. Calculate monthly impact: if you handle 15 incidents monthly and save 10 minutes per incident on coordination, that reclaims 150 minutes (2.5 hours). At $150 loaded hourly cost per engineer, you save $375 monthly or $4,500 annually per on-call engineer.
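The monthly impact calculation is easy to rerun with your own numbers. The function below is a small sketch that reproduces the arithmetic above; the function name and the returned keys are illustrative.

```python
def coordination_savings(
    incidents_per_month: int,
    minutes_saved_per_incident: float,
    loaded_hourly_cost: float,
) -> dict[str, float]:
    """Estimate reclaimed hours and cost per on-call engineer."""
    hours = incidents_per_month * minutes_saved_per_incident / 60
    monthly = hours * loaded_hourly_cost
    return {
        "hours_per_month": hours,
        "monthly_savings": monthly,
        "annual_savings": monthly * 12,
    }


if __name__ == "__main__":
    # The article's example: 15 incidents, 10 minutes saved each, $150 per hour.
    print(coordination_savings(15, 10, 150))
    # {'hours_per_month': 2.5, 'monthly_savings': 375.0, 'annual_savings': 4500.0}
```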

Post-mortem completion rate: Every Slack message in the incident channel, role assignment, status update, and decision made on a call is captured automatically. AI drafts the post-mortem using this captured data. You spend 10 to 15 minutes refining, not 90 minutes writing from scratch. Track completion rate and time-to-publish for SEV1 and SEV2 incidents.
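Both numbers can be computed from an export of incident records. This sketch assumes each record is a dictionary with hypothetical `severity`, `resolved_at`, and `postmortem_published_at` fields; your export format will differ.

```python
from datetime import datetime
from statistics import median
from typing import Optional


def postmortem_metrics(incidents: list[dict]) -> tuple[Optional[float], Optional[float]]:
    """Return (completion rate, median days to publish) for SEV1 and SEV2 incidents."""
    required = [i for i in incidents if i["severity"] in ("SEV1", "SEV2")]
    published = [i for i in required if i.get("postmortem_published_at")]

    # Completion rate is undefined when no incident required a post-mortem.
    rate = len(published) / len(required) if required else None

    # Time-to-publish is measured from resolution to publication, in days.
    days = [
        (i["postmortem_published_at"] - i["resolved_at"]).total_seconds() / 86400
        for i in published
    ]
    return rate, (median(days) if days else None)


if __name__ == "__main__":
    sample = [
        {
            "severity": "SEV1",
            "resolved_at": datetime(2026, 1, 5),
            "postmortem_published_at": datetime(2026, 1, 7),
        },
        {"severity": "SEV2", "resolved_at": datetime(2026, 1, 9)},
    ]
    print(postmortem_metrics(sample))
```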

Dashboard visibility: The Insights dashboard shows MTTR trends over time, incident volume by service (identifying reliability investment priorities), and on-call load distribution. Export these charts when presenting quarterly engineering reviews to leadership. How incident.io thinks about learning from incidents covers the measurement philosophy.
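If you want to sanity-check a trend outside the dashboard, MTTR by month is a short aggregation over incident records. The sketch below assumes hypothetical `detected_at` and `resolved_at` timestamps on each record and groups by the month of detection.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def mttr_minutes_by_month(incidents: list[dict]) -> dict[str, float]:
    """Return mean time to resolution in minutes, keyed by 'YYYY-MM'."""
    durations = defaultdict(list)
    for incident in incidents:
        elapsed = incident["resolved_at"] - incident["detected_at"]
        month = incident["detected_at"].strftime("%Y-%m")
        durations[month].append(elapsed.total_seconds() / 60)
    # Sort by month so the result reads as a trend.
    return {month: mean(values) for month, values in sorted(durations.items())}


if __name__ == "__main__":
    sample = [
        {"detected_at": datetime(2026, 1, 3, 3, 0), "resolved_at": datetime(2026, 1, 3, 3, 50)},
        {"detected_at": datetime(2026, 2, 8, 14, 0), "resolved_at": datetime(2026, 2, 8, 14, 30)},
    ]
    print(mttr_minutes_by_month(sample))
```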

How to implement self-service incident response

1. Define ownership in your Service Catalog. Document which team owns which services using your existing catalog (Backstage, Cortex) or import from YAML. Map each service to a Slack channel and on-call schedule. Define dependencies so platform services automatically notify affected application teams during incidents. If you're migrating from PagerDuty or Opsgenie, use migration tools to import existing escalation policies.

2. Automate the "hello" phase. Connect your monitoring tools to automatically create incidents when alerts fire. Map alert metadata (service name, severity, affected region) to Catalog entries, automatically paging the right team and creating a Slack channel with relevant context. For platform incidents affecting multiple teams, set up workflows that auto-invite stakeholders based on dependency graphs.

3. Onboard the organization. Install incident.io, then run fake incidents in a sandbox to test workflows and familiarize teams. Train development teams on /inc commands through hands-on practice. Watch How incident.io helped WorkOS transform its incident response and How Netflix uses incident.io to learn from successful rollouts.

4. Review and refine. After 30 days, review the data. Which services generate the most incidents? Are the right people being paged? Is MTTR improving? Use post-incident reviews to tune workflows. Managing team memberships outside of incident.io keeps Catalog data synchronized with your org chart as teams evolve.
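Before wiring alerts to the catalog in step 2, it helps to confirm that step 1 is actually complete. The check below is a minimal sketch that scans a JSON service definition for missing ownership data and dangling dependencies. The JSON layout and field names (`owner`, `slack_channel`, `oncall_schedule`, `depends_on`) are assumptions for illustration, not a documented import format.

```python
import json

# Fields every service needs before alerts can be routed automatically.
REQUIRED_FIELDS = ("owner", "slack_channel", "oncall_schedule")

# Hypothetical service definitions, as they might be kept in a repository.
SAMPLE_CATALOG = """
{
  "checkout-api": {
    "owner": "payments",
    "slack_channel": "#team-payments",
    "oncall_schedule": "payments-primary",
    "depends_on": ["postgres-main"]
  },
  "postgres-main": {
    "owner": "platform",
    "slack_channel": "#team-platform"
  },
  "legacy-cron": {
    "depends_on": ["mainframe"]
  }
}
"""


def catalog_gaps(catalog_json: str) -> dict[str, list[str]]:
    """Return, per service, the problems that would break automated routing."""
    services = json.loads(catalog_json)
    gaps = {}
    for name, entry in services.items():
        problems = [f"missing {field}" for field in REQUIRED_FIELDS if not entry.get(field)]
        # A dependency that is not itself in the catalog cannot notify anyone.
        problems += [
            f"unknown dependency {dep}"
            for dep in entry.get("depends_on", [])
            if dep not in services
        ]
        if problems:
            gaps[name] = problems
    return gaps


if __name__ == "__main__":
    for service, problems in catalog_gaps(SAMPLE_CATALOG).items():
        print(service, problems)
```

Running a check like this in CI keeps ownership gaps from surfacing for the first time during a 3 AM page.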

"We've been using Incident.io for some time now, and it's been a game-changer for our incident management processes. The tool is intuitive, well-designed, and has made our Major Incident workflow much smoother." - Pratik A. on G2

Transform platform toil into self-service reliability

Platform engineering exists to enable development velocity through automation and self-service. Incident management should be no different. When you shift from manual routing to automated guardrails, coordination overhead drops significantly. MTTR improves not because problems are simpler but because you eliminate the coordination tax that inflates resolution time.

Service owners take responsibility for their own availability using the self-service workflows you provide. Platform teams focus on maintaining the underlying automation. On-call engineers solve problems instead of playing human switchboard operator, reducing burnout and improving retention.

The tools that got you here won't scale. If your incident response still relies on tribal knowledge, manual channel creation, and Slack archaeology to figure out who owns what, you're building technical debt that compounds with every new service. Treat incident management as a platform capability, automate it with the same rigor you apply to deployment pipelines, and measure the time you reclaim.

Ready to eliminate coordination toil? Try incident.io for free, import your service catalog, and run your first automated incident, typically in under 30 minutes. Or book a free demo to see Service Catalog integration, dependency mapping, AI assistance, and cross-team escalation in action.

Key terms glossary

Internal Developer Platform (IDP): A centralized system providing self-service capabilities for developers to build, deploy, and manage infrastructure and processes without requiring specialized platform team expertise for routine operations.

Service Catalog: A source of truth mapping services to owners, dependencies, escalation policies, and operational metadata, enabling automated incident routing and context enrichment during response.

Toil: Manual, repetitive, automatable work that scales linearly with service growth and provides no enduring value; in incident management, this includes manual routing, channel creation, and timeline reconstruction.

MTTR (Mean Time To Resolution): The average time from incident detection to full resolution, including coordination overhead, investigation, fix implementation, and verification.

Coordination overhead: Time spent assembling the incident response team, creating communication channels, gathering context, and assigning roles before technical troubleshooting begins.

Slack-native: An architectural approach where the entire incident workflow happens via chat commands and channel interactions rather than a web UI that sends notifications to chat platforms.
