Implementation guide: Switching to a Slack-native incident management platform

Updated December 11, 2025

TL;DR: You can migrate to Slack-native incident response in 30 days, not six months. This guide walks engineering teams through a 4-week migration plan.

Engineering teams waste significant time just assembling people across multiple tools before troubleshooting starts. That coordination tax compounds across every incident, with teams burning valuable engineering hours on logistics rather than problem-solving. The technical fix is rarely the bottleneck; juggling too many tools is.

You can migrate to Slack-native incident response in 30 days, not six months. Because the entire workflow lives where your engineers already work, you eliminate that assembly tax in four weeks. One engineering team of roughly 15 people reports integrating its existing tools and rolling out in less than 20 days.

"The onboarding experience was outstanding — we have a small engineering team (~15 people) and the integration with our existing tools (Linear, Google, New Relic, Notion) was seamless and fast less than 20 days to rollout." - Verified user review of incident.io

This guide provides your week-by-week execution plan.

Why consolidate incident response in Slack

The old way forces you to toggle between PagerDuty for alerts, Slack for ad-hoc coordination, Datadog for metrics, Jira for tickets, and Confluence for post-mortems. Engineers waste cognitive load remembering which tool does what at 2 AM. The new way consolidates the incident lifecycle into Slack: alert fires, channel auto-creates, on-call joins, timeline captures, post-mortem auto-drafts. One interface, zero tab-switching.

Favor achieved this result, reducing MTTR by 37% after implementing incident.io's Slack-native workflow. Etsy went from having "very little data" on lower-severity incidents to tracking who was involved, time spent, and future remediation work shown monthly to VPs and the CTO. The common thread: eliminating coordination overhead that precedes actual troubleshooting.

What Slack-native really means

"Slack-native" means the incident workflow happens in slash commands and channel interactions, not in a web UI that sends notifications to Slack. You declare incidents with /inc declare, assign roles with /inc assign, escalate with /inc escalate, and resolve with /inc resolve. Engineers immediately prefer this approach over web-first tools because there's no new interface to learn.

The web dashboard exists for reporting and configuration, but during the actual incident, responders stay in Slack. This architectural choice is why teams find the tool easy to install and configure.

"Incident.io was easy to install and configure. It's already helped us do a better job of responding to minor incidents that previously were handled informally and not as well documented." - Verified user review of incident.io

What you need before starting

Before you start Week 1, confirm you have the necessary access and stakeholder alignment. This 30-minute checklist prevents blockers during implementation.

Slack workspace requirements

You need Slack workspace administrator permissions to install the incident.io application. The app works on all Slack plans including Free.

API keys and credentials

Gather these credentials before Day 1:

For PagerDuty integration: Create an API key with permissions to read services, schedules, users, and create webhooks. If you plan to import escalation policies from PagerDuty, ensure read access to those configurations.

For Datadog integration: Generate a Datadog API key and Application key.

For service catalog import: If you use Backstage, OpsLevel, or Cortex, you can sync your service catalog directly. For custom sources, the catalog-importer command-line tool accepts JSON and YAML files.

Stakeholder alignment

Secure buy-in from your CISO for the security review. incident.io holds SOC 2 Type II certification and is GDPR compliant. For a 120-person team on the Pro plan with on-call, expect $45 per user monthly, or $64,800 annually.
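The budget figure above is plain per-seat arithmetic, and it helps to have it in a form you can rerun for your own headcount. The sketch below is illustrative only: the function name is hypothetical, and the $25 base plus $20 on-call split is taken from the pricing row in the comparison table later in this guide. Confirm current pricing before using it in a budget request.

```python
def annual_cost(users: int, base_per_user: float, on_call_per_user: float) -> float:
    """Annual cost for per-user, per-month pricing billed over 12 months."""
    monthly_per_user = base_per_user + on_call_per_user
    return users * monthly_per_user * 12


if __name__ == "__main__":
    # Figures from this guide: 120 users, $25 base + $20 on-call per user per month.
    print(annual_cost(users=120, base_per_user=25, on_call_per_user=20))
```

Swapping in your own seat count and plan prices gives a quick estimate to bring to the stakeholder conversation.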

Identify your pilot team now: 5-15 engineers from a team that handles moderate incident volume and can evangelize success to others.

Week 1: Technical setup and integrations

Week 1 focuses on technical setup without disrupting current incident response.

Install and configure the Slack app

Day 1 (30 minutes): Visit the Slack App Directory, search for "incident.io," and click "Add to Slack." Installation typically takes 5-10 minutes from clicking "Add to Slack" to confirming that the app responds. Test by typing /inc help in any channel; you should see the available commands.

Day 2 (1 hour): Configure your first incident type. Navigate to Settings, then Incident Types. Create a "Production Incident" type with default severity levels (SEV1 critical, SEV2 major, SEV3 minor). Define required roles: Incident Commander, Comms Lead, and Ops Lead. You can customize these later, but start with defaults.

Map your service catalog

Day 3-4 (3 hours): Your service catalog provides critical context during incidents. For Backstage users, the catalog-importer supports catalog-info.yaml files natively. For manual CSV import: Create a spreadsheet with service name, owner email, description, and on-call schedule. Upload via Catalog settings.
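If your service list currently lives in a spreadsheet, it can help to normalize it into structured records before importing. The following is a minimal sketch, not the catalog-importer's actual input schema: the column names (name, owner_email, description, on_call_schedule) and the output shape are assumptions chosen to mirror the fields listed above. Check the importer's documentation for the real format.

```python
import csv
import io
import json


def services_from_csv(csv_text: str) -> list[dict]:
    """Parse a service spreadsheet exported as CSV into a list of entries.

    Column names are assumptions for this sketch, not a documented schema.
    """
    entries = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        name = (row.get("name") or "").strip()
        if not name:
            continue  # skip rows that have no service name
        entries.append({
            "name": name,
            "owner_email": (row.get("owner_email") or "").strip(),
            "description": (row.get("description") or "").strip(),
            "on_call_schedule": (row.get("on_call_schedule") or "").strip(),
        })
    return entries


# Hypothetical sample data for illustration only.
SAMPLE_CSV = """name,owner_email,description,on_call_schedule
checkout,payments@example.com,Handles card payments,payments-primary
,nobody@example.com,Row without a service name,unused
search,discovery@example.com,Product search API,discovery-primary
"""

if __name__ == "__main__":
    print(json.dumps(services_from_csv(SAMPLE_CSV), indent=2))
```

Dropping rows without a service name up front keeps obviously incomplete entries out of the catalog, where they would otherwise surface as missing owners during an incident.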

Connect observability tools

Day 5-6 (2 hours): For Datadog:

- Generate a webhook URL in incident.io (Settings > Alerts > HTTP source) and copy it.

- Add the webhook in Datadog (Integrations > Webhooks) and reference it in monitor messages using @webhook-incident-io.

- Configure Alert Routes to map Datadog severity to incident.io severity (e.g., status: error → SEV1).

incident.io automatically captures Datadog monitor metadata including graph snapshots when creating incidents from alerts.
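To reason about the severity mapping before configuring it, you can picture an alert route as a lookup from a payload field to a severity. The sketch below is a conceptual model only, not how incident.io implements Alert Routes. Only the error → SEV1 row comes from this guide; the other rows, the payload shape, and the default are assumptions.

```python
from typing import Mapping

# Only "error" -> "SEV1" comes from the guide; the other rows are illustrative assumptions.
SEVERITY_BY_STATUS = {
    "error": "SEV1",
    "warning": "SEV2",
    "info": "SEV3",
}


def map_severity(alert: Mapping[str, str], default: str = "SEV3") -> str:
    """Return the severity for an alert payload, falling back to a default."""
    # Normalize the status so "Error" and " error " route the same way.
    status = str(alert.get("status", "")).strip().lower()
    return SEVERITY_BY_STATUS.get(status, default)


if __name__ == "__main__":
    print(map_severity({"status": "error", "monitor": "checkout latency"}))
```

Writing the table down this way makes the Day 7 validation easier: each row becomes one test alert to fire and check.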

Day 7 (validation): Run a test alert from Datadog. Verify it creates an incident channel, pulls in the correct service owner, and posts monitor details.

Week 2: Pilot team validation

Week 2 validates the setup with real incidents from your pilot team.

Select and brief your pilot team

Day 8 (1 hour): Announce the pilot to your 5-15 engineers. In a 30-minute kickoff, explain why you're piloting (eliminate 15-minute coordination tax), what they'll do differently (use /inc commands), and what success looks like (10+ incidents managed in two weeks).

Share a one-page quick reference card with essential commands: /inc declare, /inc update, /inc role, /inc severity, /inc resolve.

Run game day drills

Day 9-10 (2 hours): Walk the pilot team through the workflow using /inc test. The test flag marks the incident as a drill (you can also start a Test Incident from the dashboard). Practice key commands: /inc role lead @sarah for roles, /inc severity high to escalate, /inc update for stakeholder communication.

Notice how the timeline auto-captures every command and message. When you run /inc resolve, the post-mortem auto-generates from timeline data in minutes. Run 2-3 drills covering different severities before switching to live incidents.

Refine based on real incidents

Day 11-14 (ongoing): Switch the pilot team to managing real incidents through incident.io. Monitor the first 5-10 live incidents closely. Common refinements: severity calibration, role clarity, and alert routing adjustments. Track key metrics: command usage percentage, post-mortem completion within 24 hours, and qualitative feedback.

By Day 14, your pilot team should have managed 10+ incidents with 80%+ command usage versus web UI.
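The Day 14 targets are easier to check if you compute them the same way each time. Here is a minimal sketch, assuming you record per-incident counts of Slack command actions and web UI actions yourself. The PilotIncident record and its field names are hypothetical and do not correspond to an incident.io export format.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class PilotIncident:
    slack_actions: int                 # actions taken via /inc commands (assumed field)
    web_actions: int                   # actions taken in the web UI (assumed field)
    resolved_at: datetime
    postmortem_at: Optional[datetime]  # None if no post-mortem was completed


def command_usage_pct(incidents: list[PilotIncident]) -> float:
    """Share of all recorded actions that were Slack commands, in percent."""
    slack = sum(i.slack_actions for i in incidents)
    total = slack + sum(i.web_actions for i in incidents)
    return 100.0 * slack / total if total else 0.0


def postmortem_on_time_pct(incidents: list[PilotIncident],
                           window: timedelta = timedelta(hours=24)) -> float:
    """Share of incidents whose post-mortem landed within the window, in percent."""
    if not incidents:
        return 0.0
    on_time = sum(
        1 for i in incidents
        if i.postmortem_at is not None and i.postmortem_at - i.resolved_at <= window
    )
    return 100.0 * on_time / len(incidents)


def pilot_targets_met(incidents: list[PilotIncident],
                      min_incidents: int = 10,
                      min_command_pct: float = 80.0) -> bool:
    """Check the guide's Day 14 targets: 10+ incidents and 80%+ command usage."""
    return (len(incidents) >= min_incidents
            and command_usage_pct(incidents) >= min_command_pct)
```

The thresholds are parameters rather than constants so a smaller pilot team can lower the incident count without editing the logic.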

Week 3: Training, on-call setup, and AI SRE

Week 3 scales knowledge beyond the pilot team through lightweight training and on-call configuration.

Scaling knowledge through enablement

Day 15-16 (3 hours): Create a 5-minute Loom video showing a pilot team member managing a real incident from declaration to post-mortem generation. Host two 30-minute "office hours" Q&A sessions for other teams. Invite pilot team champions to share their experience. Distribute the quick reference command card to all engineering teams.

Setting up on-call schedules

Day 17-19 (4 hours): You have two paths: sync schedules from PagerDuty via the native integration, or create schedules directly in incident.io (Settings > On-call). For teams migrating from other platforms, you can bulk import schedules using the automated tools provided, though escalation policies may require manual recreation.

Link on-call schedules to services in your catalog so alerts automatically page the correct team.

Configuring AI SRE features

Day 20-21 (2 hours): Enable AI features that reduce cognitive load. Configure Scribe for incident call transcription: when someone starts a Zoom or Google Meet call, Scribe joins, transcribes in real time, and captures key decisions.

Set up AI-powered summaries. When a new responder joins 30 minutes into an incident, they can run the summary command to get an AI-generated recap of what happened, who's involved, and current status.

Enable automated fix suggestions. AI SRE identifies patterns from similar past incidents and suggests likely root causes based on recent deployments or configuration changes.

Week 4: Full rollout and automation

Week 4 expands to all engineering teams and establishes ongoing optimization practices.

The go-live announcement

Day 22 (communication plan): Announce the full rollout to all engineering teams. Share the 5-minute demo video, quick reference card, and Insights dashboard showing pilot team MTTR improvements. Make clear to all teams: ad-hoc Slack threads and Google Docs are replaced. All new incidents go through incident.io.

Provide a clear point of contact. If you have an incident.io Customer Success Manager, they can support rollout. Many customers praise the support experience.

"It's so flexible we haven't found a process that doesn't fit. The Customer Support is fantastic, they were there to support us through every step of the journey." - Verified user review of incident.io

Day 23-25 (rapid response): Monitor adoption closely. Jump into incident channels to coach teams through their first incidents. Fix configuration issues immediately.

Automating post-mortems

Day 26-27 (configuration): Configure post-mortem templates in Settings. Include sections for incident summary (AI auto-generates), timeline of key events (auto-populated), root cause analysis, and action items.

When an engineer runs /inc resolve, the platform prompts them to generate the post-mortem. The document includes a complete timeline captured during the incident. Engineers spend 10 minutes refining root cause and action items, not 90 minutes reconstructing from memory.

Export post-mortems to Confluence or Google Docs with one click. Action items automatically create Jira or Linear tickets.

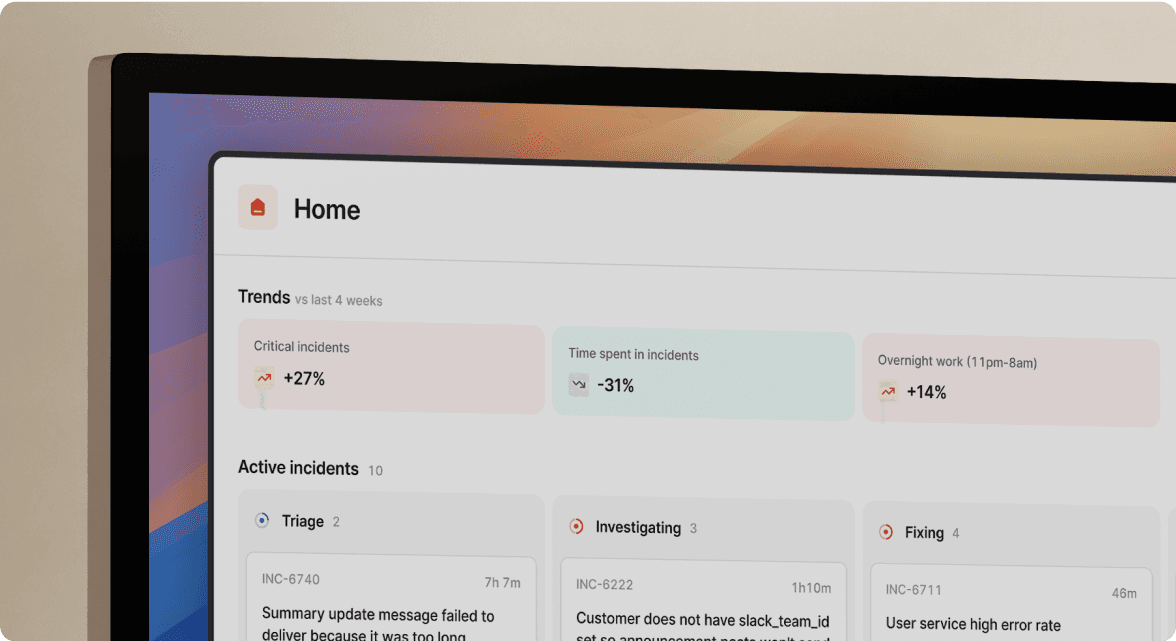

Setting up the insights dashboard

Day 28-30 (leadership visibility): Configure dashboards to track MTTR trend, incident volume by service, post-mortem completion rate, and on-call load. Engineering teams show this data to VPs, directors, and the CTO monthly to demonstrate operational improvements.
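If you want to sanity-check the dashboard numbers, or build a pre-migration baseline from exported data, the core metrics are simple to compute by hand. This is a rough sketch under stated assumptions: the Incident record and its fields are hypothetical, and MTTR is taken as the mean of resolution time minus detection time, matching the definition in the terminology section.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class Incident:
    service: str            # owning service name (assumed field)
    detected_at: datetime
    resolved_at: datetime


def mttr_minutes(incidents: list[Incident]) -> float:
    """Mean time from detection to resolution, in minutes."""
    if not incidents:
        raise ValueError("MTTR is undefined for an empty incident list")
    return mean(
        [(i.resolved_at - i.detected_at).total_seconds() / 60 for i in incidents]
    )


def volume_by_service(incidents: list[Incident]) -> Counter:
    """Number of incidents per service."""
    return Counter(i.service for i in incidents)


def pct_reduction(before: float, after: float) -> float:
    """Percentage reduction from a baseline value to a new value."""
    return 100.0 * (before - after) / before
```

Computing the baseline MTTR from your pre-migration incidents with the same function gives the first 30-day report a like-for-like comparison.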

Share the first 30-day report with executive leadership showing coordination time reduced, post-mortem completion increased, and engineer feedback quotes.

Platform comparison: Key differences

| Feature | incident.io | PagerDuty | Opsgenie | FireHydrant |
|---|---|---|---|---|
| Slack Integration | Slack-native. Entire lifecycle in slash commands. | Slack-integrated. Notifications in Slack, management in web UI. | Slack-integrated. Basic notifications and actions. | Strong Slack integration but some actions require web UI. |
| On-Call Management | Native scheduling with PagerDuty sync option. | Core strength. Sophisticated alerting and routing. | Core strength, but being sunset April 2027. | Available but not as robust. |
| Post-Mortem Automation | AI-generated from captured timeline data. | Basic templates requiring manual entry. | Basic functionality. | Offers post-incident workflow automation. |
| AI Capabilities | AI SRE automates incident response tasks. Identifies root causes, generates fix PRs. | AIOps add-on for event intelligence. | Limited AI features. | Runbook automation. |
| Implementation Time | Less than 20 days for small teams per customer reviews. | 2-8 weeks for basic setup, months for full customization. | 3-7 days for basic alerting. Being sunset April 2027. | 1-2 weeks for standard configuration. |
| Support Quality | 9.8/10 on G2. Bugs fixed in hours to days. | Email-only support. Week-long response times. | Good support but sunsetting. | Good support per reviews. |
| Pricing (Pro + On-Call) | $45/user/month ($25 base + $20 on-call). Team plan available at $25/user/month for smaller teams. | Complex per-seat with add-ons. Often 2x cost. | No longer relevant. | Per-user pricing with tiers. |

incident.io strengths and weaknesses

Strengths

- Slack-native workflow reduces cognitive load: The entire incident management process happens in Slack with slash commands, eliminating context switching between tools.

- Support velocity creates competitive moat: Customer support rated 9.8/10. Engineering teams report rapid feature delivery and responsive bug fixes.

- AI automates incident response: The AI SRE feature automates incident response tasks, identifying root causes and suggesting fixes.

- Rapid time-to-value: Teams report being operational in 3 days without customization, using opinionated defaults that work immediately.

"To me incident.io strikes just the right balance between not getting in the way while still providing structure, process and data gathering to incident management." - Verified user review of incident.io

Weaknesses

- Not built for microservice SLO tracking: incident.io handles incident visibility well but is not a dedicated SLO platform like Blameless.

- Dependency on Slack availability: The web dashboard provides backup access, and Microsoft Teams support is available on Pro+, but core value relies on chat platform uptime.

Ready to eliminate your coordination tax?

See how incident.io can reduce your MTTR by up to 80%. Our team will show you:

- Live demonstration of Slack-native incident response workflow

- Your specific migration path from PagerDuty, Opsgenie, or legacy tools

- Custom implementation timeline for your organization

Schedule a demo with our team to see the platform in action and get your questions answered by incident management experts.

Key terminology

Slack-native: The incident workflow happens in slash commands and channel interactions, not in a web UI that sends notifications to Slack. Engineers declare, manage, and resolve incidents without leaving Slack.

MTTR (Mean Time To Resolution): Average time from incident detection to full resolution. Favor achieved 37% MTTR reduction by eliminating coordination overhead.

Coordination tax: Time spent on administrative tasks before troubleshooting starts: creating channels, finding on-call engineers, setting up calls. This typically costs 10-15 minutes per incident, which Slack-native platforms aim to eliminate.

AI SRE: AI assistant that automates incident response tasks including root cause identification, fix pull request generation, and call transcription. Can automate significant portions of incident response work.

Service catalog: Directory of services, owners, dependencies, and runbooks that provides context during incidents. Catalog data automatically surfaces in incident channels to accelerate response.

Alert routes: Configuration that maps incoming alerts from monitoring tools to incident properties based on payload data (severity, service, tags).
