Building with AI

April 1, 2025

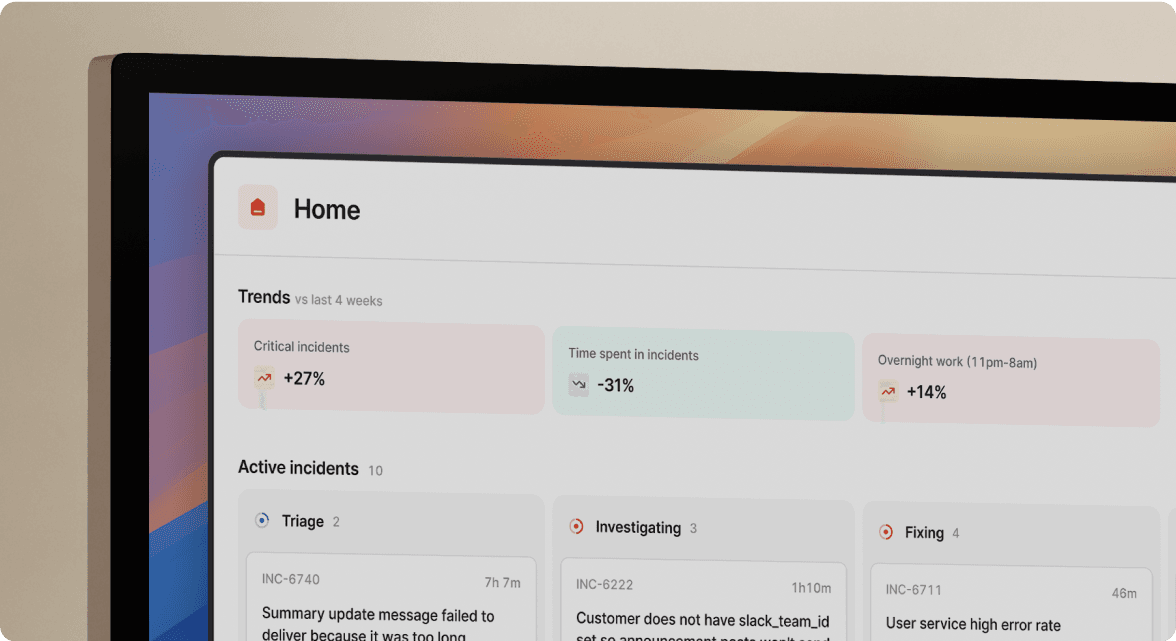

Over the past year, we’ve been hard at work building a new agent that will investigate incidents with you and show you what’s wrong and why. Eventually, it’ll show you how to fix incidents or offer to fix them on your behalf. It will feel like we’re right there with you, helping you resolve the incident just like your best and most experienced colleague would.

To get there, we’re working on the sharp edge of what’s possible with AI. It’s pushed us to become ‘AI Engineers,’ build internal tools to tame the chaos of non-deterministic systems, and rethink our product process for AI systems, which are much harder to evaluate. We’ve built resilience, learned to navigate the uncertainty, and stayed motivated through tough, ambitious R&D.

Last week, we launched a new page: incident.io/building-with-ai. The page is full of technical blog posts the team has written about the lessons we’ve learned on that journey.

Here are some examples of what you can expect to see:

- You can’t vibe code a prompt: Why blindly trusting AI to optimize your prompts can backfire, and why human intuition is still essential when building intelligent agents.

- Controlling costs when building with AI: Building with AI is one of the easiest ways to create a huge infrastructure bill. Teams need visibility and awareness of what they're spending, along with guardrails to catch mistakes.

- Optimizing LLM prompts for low latency: When the time taken to execute a prompt becomes an issue, these strategies can optimize response latency without impacting prompt behavior.

- Break chatbot speed limits with speculative tool calls: Learn how we engineered a 50% reduction in chatbot response times by speculatively executing tool calls, making us one of the fastest chatbots around.

- Why we built our own AI tooling suite: A technical deep-dive into why we built our own AI tooling suite, from evals to model leaderboards, and the key benefits we gained as a result.

- Tricks to fix stubborn prompts: If your prompt isn't doing what you expect and you've tried all the classic suggestions, here are tricks you can use to make even stubborn prompts listen.

We’re planning to continue building in public and sharing the lessons we’re learning on this page along the way. If you’re building AI products too, we’d love to hear your stories and the lessons you’ve learned.

What else we've shipped

New

- You can pull through custom metadata for Cortex Domains to Catalog

- Workflow steps that send messages can now make use of specific time zones

- You can now search for the fields you want to use when customizing your incidents list

- Bulk actions on the incident list page have been moved into a floating toolbox

Improvements

- Polished the cover request accepted screen on mobile for users who do not have avatars

- Display create and import flows the same way in schedules and escalation paths

- We now respect "Include declined/merged incidents" filtering when exporting incidents

- You can now pick a new destination if a postmortem fails to export

- Fixed some misalignments in the incident list page

- Filters across the dashboard have been given a polish with a new icon and styling

Bug fixes

- Fixed an issue where the number of subscribers being emailed was previewed incorrectly for scheduled maintenance status page incidents

- Fixed an issue where generating a summary was sometimes removing custom fields in Slack forms

- Fixed an issue where we always showed your status as on call when you were on an escalation path that pages all members of a schedule

- Fixed a UI bug when deleting an alert route

- Fixed an issue in the on-call calendar view where showing a large time format meant the responder's name could not be read

- Scheduled maintenances on status pages now only update the specified subpages when scheduling

- Fixed an issue where "between" date ranges sometimes calculated the wrong end time
