Engineering

Bloom filters: the niche trick behind a 16× faster API

Mike Fisher, November 14, 2025

Engineering

My first three months at incident.io

Edd Sowden, September 1, 2025

Engineering

Impact review: Scribe under the microscope

Kelsey Mills, August 20, 2025

Engineering

Ready, steady, goa: our API setup

Shlok Shah, August 11, 2025

Engineering

Breaking through the Senior Engineer ceiling

Norberto Lopes, August 5, 2025

Engineering

Why I decided to join incident.io and my impressions so far

James Jarvis, July 31, 2025

Engineering

The quest for the five minute deploy

Matthew Barrington, July 22, 2025

Engineering

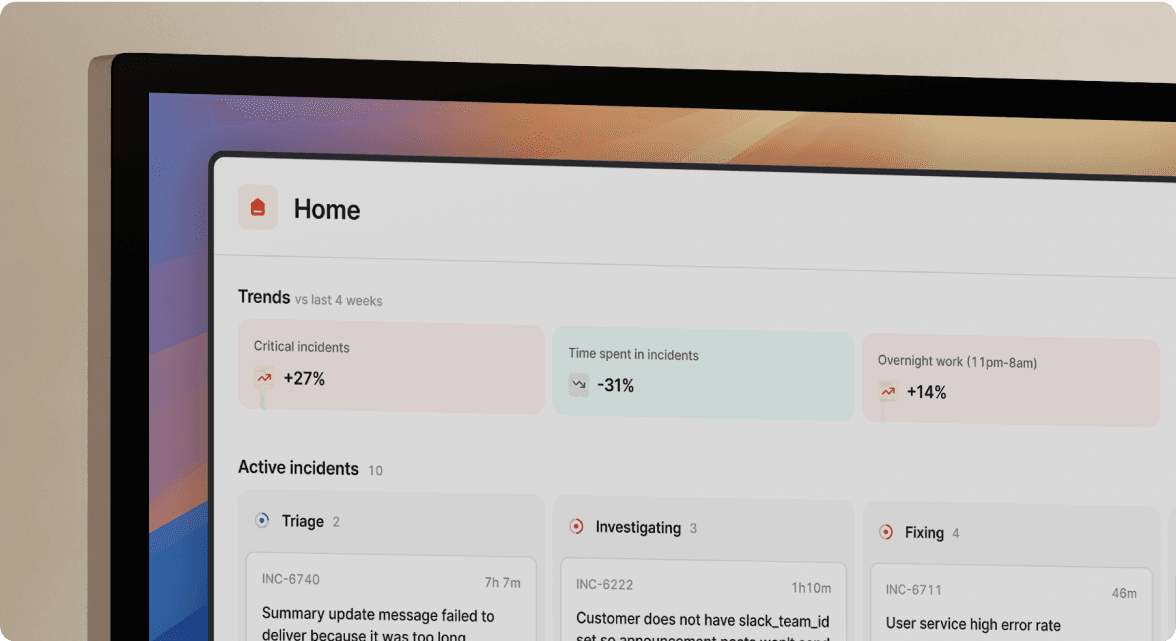

Being on-call at incident.io

Alicia Collymore, July 18, 2025

Engineering

How we're shipping faster with Claude Code and Git Worktrees

Rory Bain, June 27, 2025

