The quest for the five minute deploy


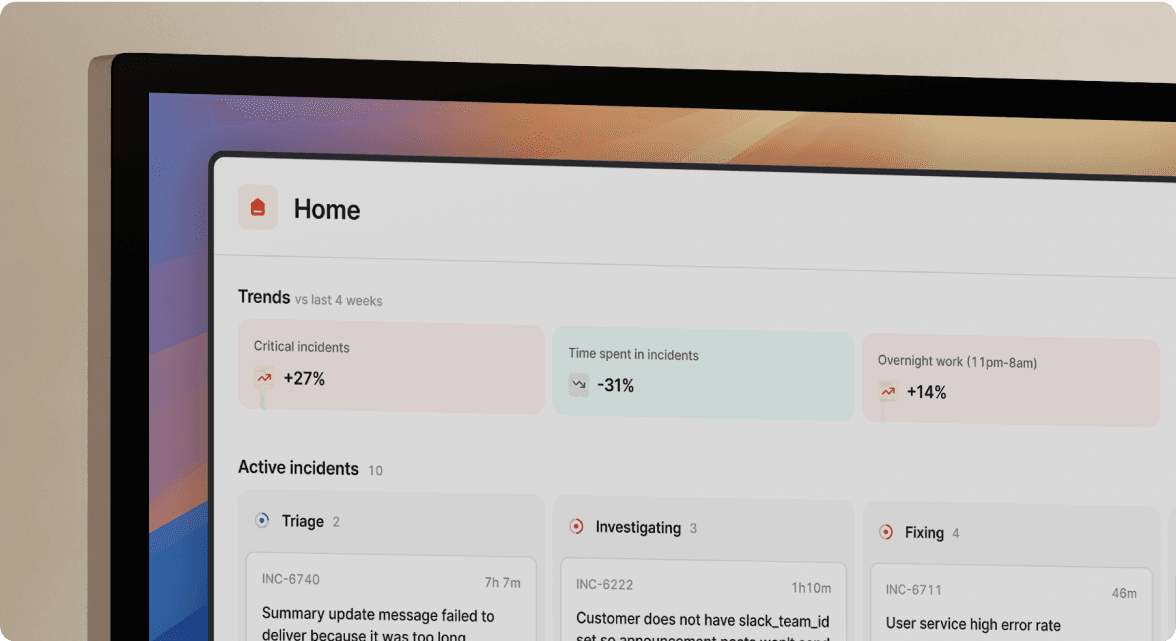

Speed is everything at incident.io. The faster we can test and ship code, the faster we can get new products and features out to customers.

Over the last three years, as our codebase grew and our test suite expanded, we drifted away from one of our own goals: "We aim for less than 5 minutes between merging a PR and getting it into production."

This is the story of how we got back on track.

Our pipeline

Our tech stack is straightforward but substantial:

- A Go modular monolith deployed to Kubernetes

- A React frontend application

- Over 15,000 Go tests

Every time an engineer pushes a commit, our CI/CD pipeline triggers a new build, which involves:

- Building our application into a Docker container

- Uploading static assets to our CDN

- Testing and linting both Go and React code

- Running end-to-end tests

When we merge to master, we do all of the above, plus:

- Publishing build information

- Deploying the changes to staging and production

- Running database migrations

When we need to make an emergency fix, we can initiate a “hotfix” deploy.

Our “hotfix” deploys work by changing the dependencies of our deploy flow, allowing us to get changes out in less than half the time of a normal deploy.

A slow start

As our codebase grew, so did the time to ship changes to production. Building, testing and deploying a change took over 11 minutes, with a p90 nearing 14 minutes.

While building the Go application itself was slow, the biggest bottleneck we experienced was running our Go test suites.

Compiling the test binaries for our 350+ Go packages takes a large amount of resources. Even with the largest instance type our CI/CD provider offered, combined with 10x parallelism, we could only get the test step alone down to 6.5 minutes.

Go relies heavily on its build cache to speed up subsequent builds, but our cache had grown too large for us to keep it on our CI/CD provider.

Each change to our codebase would add more objects to the cache, and changes to core packages would add far more.

Eventually the time taken to save and restore the cache exceeded the time saved by having a cache. This meant that each new CI/CD run started from a cold cache, which increased the time taken to compile the test suites.
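In illustrative notation, a CI cache only pays for itself when moving it costs less than the compilation it saves:

$$
t_{\text{restore}} + t_{\text{save}} < t_{\text{cold}} - t_{\text{warm}}
$$

Here $t_{\text{cold}}$ and $t_{\text{warm}}$ are the compile times without and with the cache. As the cache grew, the left-hand side grew with it, until the inequality stopped holding.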

We spent time trying to improve our build times on our existing CI/CD provider. While we made some small gains, we would not have been able to make sizeable gains on this provider without rearchitecting how our application is built and tested, which was not an option.

Enter Buildkite

We realised that to achieve our speed goals, we needed to rethink how we ran our CI/CD pipeline.

We started looking at different providers that would let us run our CI/CD in a completely different way, taking into account price, reliability, extensibility, and familiarity.

We settled on Buildkite, a CI/CD platform that lets us run our own servers while using their control plane for orchestration.

We performed some experiments to determine what resources we would need to run a full CI/CD workflow on a single server within a time we were happy with.

As a result, we chose servers with a large amount of processing power:

- 3 build servers, each with 48 vCPUs and 96GB RAM

- 1TB attached storage with 100,000 IOPS and 2,400 MB/s throughput

With this setup, we comfortably support our 20+ engineers with minimal contention.

(Go) Testing my patience

Now that we had our own servers, we were able to change how we ran our tests.

In the old world, we split our tests by package name across 10 parallel containers. Each container would then build the 10% of packages assigned to it.

Even with 10 buckets, the tests were not split evenly, leading to up to a minute of variance between the fastest and slowest bucket.

In Buildkite, we split our Go tests into a two-step process. First we build the Ginkgo test suites into binaries on disk, which we can cache; then we run these test binaries in batches.

The biggest difference is that Buildkite can run all of these tests in a single container, on a single server. This means we no longer need to figure out how to split our tests evenly across multiple containers or servers.

With the resources of our CI/CD servers, our test suite now runs in 2 to 4 minutes, a best-case saving of 70% compared with our old provider.

Cache Rules Everything Around Me

As we moved our CI/CD jobs over to Buildkite, we began implementing different forms of caching.

The biggest gain for us was having a Go build cache on each server. This means that each time we run go build or similar commands, we can reuse previous build objects.

Our CI/CD jobs access this cache through volumes mounted into their Docker containers:

```yaml
volumes:
  - ../../:/project:cached
  - /var/lib/buildkite-agent/cache/gobuild:/gocache
  - /var/lib/buildkite-agent/cache/gomod:/gomodcache
  - /var/lib/buildkite-agent/cache/binaries:/bincache
```

While we are happy with this approach, there are still some issues:

- The cache is per server, so depending on which server a job is assigned to, it may not benefit from build objects that only exist on a different server.

- The cache grows quite fast (~30GB/hour on average), so we have to clear it when it hits a threshold. If you know a way to selectively clear the cache, please email me!

I’ve talked a lot about the Go backend, as it was the biggest bottleneck, but we also use TypeScript for our frontend.

While our frontend build does not have a cache in the same way, we have a large number of modules for the different TypeScript UIs that we deploy. Caching these modules avoids downloading them on every CI/CD job and has resulted in noticeable speed improvements.

Unlike the Go build cache, Node's module cache is sensitive to multiple processes writing to it concurrently. To reduce the risk, we cache the modules separately for each version of our yarn.lock. We also have a pipeline-level cache that is updated on each deploy. A job first tries the file-level cache and falls back to the pipeline-level cache, so it will have either an exact cache or a recently updated one.
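The lookup order described above can be sketched in a few lines. This is a hypothetical illustration, not the Buildkite cache plugin's internal key format: here the file-level key is a SHA-256 of yarn.lock plus a key-extra value, the pipeline-level key is a single rolling entry, the pipeline name "app" is made up, and the storage bucket is faked with a map.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// fileKey derives a cache key from the exact contents of yarn.lock, so
// every lockfile version gets its own entry. Bumping keyExtra (e.g. "v2"
// to "v3") invalidates every key at once.
func fileKey(pipeline, keyExtra string, lockfile []byte) string {
	sum := sha256.Sum256(lockfile)
	return fmt.Sprintf("%s/%s/file/%s", pipeline, keyExtra, hex.EncodeToString(sum[:]))
}

// pipelineKey is a single rolling entry per pipeline, overwritten on each deploy.
func pipelineKey(pipeline, keyExtra string) string {
	return fmt.Sprintf("%s/%s/pipeline", pipeline, keyExtra)
}

// restoreKey returns the first key present in the store: the exact
// lockfile match if there is one, otherwise the pipeline-level fallback.
// ok is false on a completely cold cache.
func restoreKey(exists func(string) bool, pipeline, keyExtra string, lockfile []byte) (key string, ok bool) {
	for _, k := range []string{fileKey(pipeline, keyExtra, lockfile), pipelineKey(pipeline, keyExtra)} {
		if exists(k) {
			return k, true
		}
	}
	return "", false
}

func main() {
	// A fake bucket holding one file-level entry and the pipeline entry.
	store := map[string]bool{
		fileKey("app", "v2", []byte("lock v1")): true,
		pipelineKey("app", "v2"):                true,
	}
	exists := func(k string) bool { return store[k] }

	k, _ := restoreKey(exists, "app", "v2", []byte("lock v1"))
	fmt.Println("unchanged lockfile ->", k)
	k, _ = restoreKey(exists, "app", "v2", []byte("lock v2"))
	fmt.Println("changed lockfile   ->", k)
}
```

A job with an unchanged lockfile gets an exact hit; a job with a modified lockfile falls back to the rolling pipeline entry, and yarn then only needs to fetch whatever is missing.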

We implemented this caching behaviour using a pre-existing Buildkite plugin (we make heavy use of various Buildkite plugins and find them very useful). All we needed to do was create a bucket in Google Cloud Storage (GCS), set an environment variable so the cache knows which bucket to use, and add one of the following blocks to a job, depending on whether we wanted to save or restore the cache.

```yaml
# Save Cache
plugins:
  - cache#v1.7.0:
      key-extra: v2
      backend: s3
      manifest:
        - yarn.lock
      path: .yarn/
      save:
        - file
      restore: pipeline
      compression: zstd
```

```yaml
# Restore Cache
plugins:
  - cache#v1.7.0:
      key-extra: v2
      backend: s3
      manifest:
        - yarn.lock
      path: .yarn/
      restore: pipeline
      compression: zstd
```

We make heavy use of Docker images as part of our CI/CD flow. These are either pre-existing images or ones we have built specifically for our workflows. As the jobs run on our own servers, each machine keeps its own local image cache. Not needing to pull down Docker images on every job saves seconds off every build.

Gotta go fast

When making changes like this, you need to build up confidence that the new system works before making the big cutover.

We started running our branch builds on Buildkite without disabling our old provider. This allowed us to build up confidence that our Buildkite jobs were faster and just as reliable.

Once we were confident with our new system, it became the sole CI/CD provider we used for our branch builds.

We then worked on building out the jobs that only ran on deploys. Once we were confident that each step worked, we did the big cutover, and completely removed our old provider from deploying our application.

Here's what all this work achieved:

- Production deploys: Down 27% (11.5 → 8.5 minutes)

- Branch builds: Down 45% (10 → 5.5 minutes)

- Hotfix deploys: Down 30% (5 → 3.5 minutes)

Not all sunshine and roses

The migration wasn't without its challenges:

Bin packing: We run Docker containers with varying limits depending on the resources needed. Ensuring that these are properly sized and maximising utilisation of our CI/CD servers remains an ongoing effort.

Disk I/O: The initial migration of our jobs to our CI/CD servers did not provide the speed improvements we were hoping for. After much investigation, we discovered that certain jobs had much higher IOPS requirements than previously thought, and the disks attached to our servers defaulted to a lower IOPS limit than those jobs required.

Flaky tests: Changing the parallelism of how we ran tests exposed previously hidden flaky tests that needed fixing.

Cache poisoning: A TypeScript dependency caching mistake led to extended build failures and a painful recovery process.

Zombie containers: A misconfiguration led to Docker containers running after their job had completed, eating up resources, and eventually making the host unusable.

Mission accomplished?

Have we achieved the mythical five-minute deploy? For hotfixes, yes—we're consistently under 5 minutes. For regular deploys, we're not quite there yet, but we've made massive progress.

"Raise the pace" is one of our core values at incident.io. Improving our CI/CD speed directly supports this value, helping us deliver value to customers faster. The quest continues, but we're a lot closer to that five-minute target than we were.
