Keep the monolith, but split the workloads

I’m a big fan of monolithic architectures. Writing code is hard enough without each function call requiring a network request, and that’s before considering the investment in observability, RPC frameworks, and dev environments you need to be productive in a microservice environment.

But having spent half a decade stewarding a Ruby monolith from 20 to 200 engineers and watched its modest 10GB Postgres database grow beyond 5TB, there’s a point where the pain outweighs the benefits.

This post is about splitting your workloads, a technique that can significantly reduce that pain, costs little and can be applied early as you scale. Something that, if executed well, can let you enjoy that sweet monolithic goodness for that much longer.

Let’s dive in!

A wild outage appears!

Back in November 2022, we had an outage we affectionately called “Intermittent downtime from repeated crashes”.

Probably the first genuinely major outage we’ve faced, it resulted in our app repeatedly crashing over a period of 32 minutes. Pretty stressful stuff, even for responders who spend their entire day jobs building incident tooling.

While the post-mortem goes into detail, the gist of the issue was:

- We run our app as a Go monolith in Heroku, using Heroku Postgres as a database, and GCP Pub/Sub as an async message queue.

- Our application runs several replicas of a single binary running web, worker, and cron threads.

- When a bad Pub/Sub message was pulled into the binary, an unhandled panic would crash the entire app, meaning web, workers, and crons all died.

Well, that sucks and seems easily avoidable. If only we’d built everything as microservices, we’d only have crashed the service responsible for that message, right?

Understanding reliability in monolithic systems vs. microservices

The most common reason teams opt for a microservice architecture tends to be for reliability or scalability, often used interchangeably.

This means that:

- The blast radius of problems – such as the bad Pub/Sub message we saw above – is limited to the service it runs in, often allowing the service to degrade gracefully (continue serving most requests, failing only for certain features).

- Each microservice can manage its own resources such as setting limits for CPU or memory that can be scaled to whatever that service needs at the time. This prevents a bad code path from consuming all of a limited resource and impacting other code, as it might in a monolithic app.

Microservices certainly solve these problems but come with a huge amount of associated baggage (distributed system problems, RPC frameworks, etc). If we want the benefits of microservices without the baggage, we’ll need some alternative solutions.

Rule 1: Never mix workloads

First, we should apply the cardinal rule of running monoliths, which is: never mix your workloads.

For our incident.io app, we have three key workloads:

- Web servers that handle incoming requests.

- Pub/Sub subscribers that process async work.

- Cron jobs that fire on a schedule.

We were breaking this rule by running all of this code inside of the same process (as in, literally the same linux process). By mixing workloads we left ourselves open to:

- Bad code in a specific part of the codebase bringing down the whole app, as in our November incident.

- If we deployed a Pub/Sub subscriber that was CPU heavy (maybe compressing Slack images, or a badly written loop that spun indefinitely) we’d impact the entire app, causing all web/worker/cron activity to slow to a halt. CPU in that process is a limited resource and by consuming 90% of it, we’d leave only 10% left for the other work.

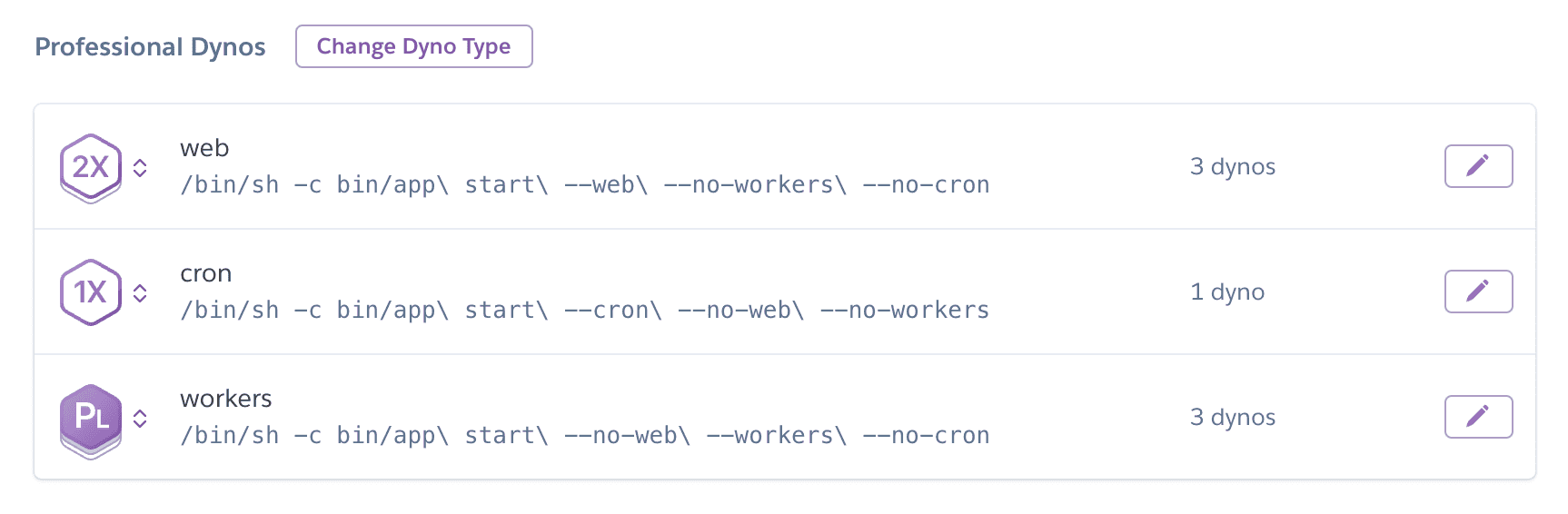

The same day as our incident occurred, we split our app into separate tiers of deployment for each workload type. This meant creating three separate dyno tiers in Heroku, which for those unfamiliar with Heroku just means three independent deployments of the app processing only its own type of workload.

You might ask if we’re doing this, then why not go the whole way and have separate microservices?

The answer is that this split preserves all the benefits of the monolith while solving the problems we presented above. Every deployment is running the same code, using the same Docker image and environment variables, the only thing that differs is the command we run to start the code. No complex dev setup required, or RPC framework needed, it’s the same old monolith, just operated differently.

Our application entry point code looks a bit like this:

package main

var (

app = kingpin.New("app", "incident.io")

web = app.Flag("web", "Run web server").Bool()

workers = app.Flag("workers", "Run async workers").Bool()

cron = app.Flag("cron", "Run cron jobs").Bool()

)

func main() {

if *web {

// run web

}

if *workers {

// run workers

}

if *cron {

// run cron

}

wait()

}

You can easily add this to any application, with the neat benefit that for your local development environment, you can have all components running in a single hot-reloaded process (a pipe dream for many microservice shops!).

As a small catch to switching over blindly, be aware that code assuming everything runs within the same process is both hard to recognize, subtly buggy, and difficult to fix. If, for example, your web server code stashes data in a process-local cache that the worker attempts to use, you’re going to have a sad time.

The good news is those dependencies are generally code smells are easily solved by pushing coordination into an external store such as Postgres or Redis, and won’t reappear after you’ve made the initial change. Worth doing even if you aren’t splitting your code, in my opinion.

Note there’s no limit to how granular you split these workloads. I’ve seen a deployment per queue or even job class before, going up to ~20 deployments for a single application environment.

Rule 2: Apply guardrails to your monolith for database efficiency

Ok, so our monolith is no longer a big bundle of code running all the things: it’s three separate, isolated deployments that can succeed or fail independently. Great.

Most people’s applications aren’t just about the code running in the process, though. One of the most significant reliability risks is rogue or even well-behaved but unfortunately timed code consuming the most precious of a monoliths limited resources, which is usually…

Database capacity. In our case, Postgres.

Even having split your workloads, you’ll always have the underlying data store that needs some form of protection. And this is where microservices – which often try to share nothing – can help, with each service deployment only able to indirectly consume database time via another services API.

This is solvable in our monolith though, we just need to create guardrails and limits around resource consumption. Limits that can be arbitrarily granular.

In our code, the guardrails around our Postgres database look like this:

package main

var (

// ...

workers = app.Flag("workers", "Run async workers").Bool()

workersDatabase = new(database.ConnectOptions).Bind(

app, "workers.database.", 20, 5, "30s")

)

func main() {

// ...

if *workers {

db, err := createDatabasePool(ctx, "worker", workersDatabase)

if err != nil {

return errors.Wrap(err, "connecting to Postgres pool for workers")

}

runWorkers(db) // start running workers

}

}

This code sets and allows customization of the database pool used specifically for workers. The defaults mean “maximum of 20 active connections, allowing up to 5 idle connections, with a 30s statement timeout”.

Perhaps easier to see from the app --help output:

--workers.database.max-open-connections=20

Max database connections to open against the Postgres server

--workers.database.max-idle-connections=5

Max database connections to keep open while idle

--workers.database.max-connection-idle-time=10m

Max time to wait before closing idle Postgres server connections

--workers.database.max-connection-lifetime=60m

Max time to reuse a connection before recycling it

--workers.database.statement-timeout="30s"

What to set as a statement timeout

Most applications will specify values for their connection pool, but the key ah-ha moment is that we have separate pools for any type of work we want to throttle or restrict, anticipating that it might – in an incident situation – consume too much database capacity and impact other components of the service.

Just some examples are:

eventsDatabasewhich is a pool of 2 connections used by a worker that consumes a copy of every Pub/Sub event and pushes it to BigQuery for later analysis. We don’t care about this queue falling behind but it would be very bad if it rinsed the database, especially if that happens – and it naturally would – at time when our service was most busy.triggersDatabasewith 5 connections used by a cron job that scans all incidents for recent activity, helping drive nudges like “it’s been a while, would you like to send another incident update?”. These queries are expensive and nudges are best effort, so we’d rather fall behind than hurt the database trying to keep up.

Using limits like this can help you protect a shared resource like database capacity from being overly consumed by any one part of your monolith. If you make them extremely easy to configure – as we have via a shared database.ConnectOptions helper – then it’s minimal effort to specify up-front “I expect to consume only up-to X of this resource, and beyond that I’d like to know”.

Useful for any moderately sized monolith, but even more powerful when multiple teams work in different parts of the codebase and protecting everyone from one another becomes a priority.

Keeping a monolithic architecture can still be a smart choice for scaling

Obviously you hit issues when scaling a monolith, but here’s the secret: microservices aren’t all rainbows and butterflies, and distributed system problems can be really nasty.

So let’s not throw the baby out with the bathwater. When you hit monolith scaling issues, try asking yourself “what is really the issue here?”. Most of the time you can add guardrails or build limits into your code that emulate some of the benefits of microservices while keeping yourself single codebase and avoiding the RPC complexity.

See related articles

What it meant for me to give away my Lego

If you join an early-stage startup, you'll probably have to give away your Lego one day. Here's what I learned when I had to part with mine.

Lisa Karlin Curtis

Lisa Karlin Curtis

Battling database performance

Earlier this year, we experienced intermittent timeouts in our application — here’s how we tried to address the underlying issue over the next two weeks.

Rory Bain

Rory Bain

Building Workflows, Part 1 — Core concepts and the Workflow Builder

Part one of a deep-dive into building our workflow engine, covering core workflow concepts and how they are used to power the Workflow Builder.

Lawrence Jones

Lawrence JonesSo good, you’ll break things on purpose

Ready for modern incident management? Book a call with one of our experts today.

We’d love to talk to you about

- All-in-one incident management

- Our unmatched speed of deployment

- Why we’re loved by users and easily adopted

- How we work for the whole organization