Driving successful change: Understanding DORA's Change Failure Rate metric

Change is a meaningful part of growth.

While I’m not here to get into a philosophical conversation, generally speaking, change is both necessary and good – it’s how we advance as organizations and continue to delight our customers.

Shipping iterations of features. Going live with a brand-new product. These are all cause for celebration!

But sometimes, despite our best efforts, changes don’t go as planned, and you’re left scrambling for a resolution while strapped for resources. If you work in SRE or DevOps, this probably hits close to home.

You ship a bug fix, but that deployment triggers an incident that turns into a five-alarm fire. Change in this case? Not so good.

This is, unfortunately, where Change Failure Rate comes in. And in the end, understanding the success rate of changes is crucial for optimizing your development, testing, and deployment processes.

So in this article, I’ll dive into this vital DORA metric, detail its benchmarks, and provide practical insights to help you drive more frequent successful changes. I’ll also explain how incident.io can help you meaningfully improve your response processes when, inevitably, a change results in an incident.

💭 This article is part of our series on DORA metrics.

What is Change Failure Rate?

Imagine you’ve just deployed a shiny new feature, say a Catalog. But a few hours after it goes live, you get a Datadog alert that triggers an incident.

It’s an issue with the Catalog you just shipped!

This is Change Failure Rate in practice. By definition, it measures the percentage of changes that result in incidents or service disruptions.

By keeping tabs on this metric, teams can gain valuable insights into the efficacy of their entire software development lifecycle, from development through testing and on to deployment.

OK, how do I calculate it?

Change Failure Rate is calculated as the number of failed changes as a proportion of the total changes implemented over a specific period. It’s often expressed as a percentage to keep things simple.

Here’s what that formula looks like:

Change Failure Rate (%) = (number of failed changes ÷ total number of changes) × 100
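In code, the calculation is a single division. The sketch below is a minimal illustration; the function name and the sample numbers are assumptions made for the example, not part of DORA's definition.

```python
def change_failure_rate(failed_changes: int, total_changes: int) -> float:
    """Return Change Failure Rate as a percentage of all changes in a period."""
    if total_changes <= 0:
        raise ValueError("total_changes must be positive")
    if not 0 <= failed_changes <= total_changes:
        raise ValueError("failed_changes must be between 0 and total_changes")
    return failed_changes / total_changes * 100


# Hypothetical month: 50 deployments, 4 of which led to an incident.
print(f"{change_failure_rate(4, 50):.1f}%")  # prints "8.0%"
```

Whatever period you pick, count failed and total changes over the same window so the ratio stays meaningful.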

Why should I bother keeping tabs on this?

Generally speaking, having a low Change Failure Rate shows that your organization is effectively managing and implementing changes with minimal disruptions and issues.

On the other hand, a high Change Failure Rate might suggest that your process for introducing changes needs improvement, such as better testing, documentation, or communication. I’ll get into this in a bit.

How to benchmark your Change Failure Rate

DORA helpfully provides benchmarks for Change Failure Rate, letting teams gauge their performance and pinpoint gaps and areas for improvement.

Here are the benchmarks based on the calculations I shared above:

- Elite performers: Less than 5%

- High performers: Between 5% and 15%

- Medium performers: Between 15% and 45%

- Low performers: More than 45%

As is the case with all DORA metrics, it's important to note that these benchmarks can vary based on factors such as the complexity of your systems, the maturity of your organization, and the nature of the changes being implemented.
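If you track the metric programmatically, the tiers listed above can be expressed as a small lookup. This is only a sketch: the function name is hypothetical, and because the ranges share their endpoints, assigning exactly 15% to "High" and exactly 45% to "Medium" is an assumption.

```python
def performance_tier(cfr_percent: float) -> str:
    """Map a Change Failure Rate percentage to the tiers listed in this article."""
    if not 0 <= cfr_percent <= 100:
        raise ValueError("cfr_percent must be between 0 and 100")
    if cfr_percent < 5:
        return "Elite"
    if cfr_percent <= 15:   # assumption: the shared 15% boundary counts as High
        return "High"
    if cfr_percent <= 45:   # assumption: the shared 45% boundary counts as Medium
        return "Medium"
    return "Low"


print(performance_tier(8.0))  # prints "High"
```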

How you can optimize your processes to improve your Change Failure Rate

At this point, you may be wondering, “How can I reduce the number of incidents caused by new changes?”

Well, reducing your Change Failure Rate requires a few things, namely a proactive approach to change management and a cultural shift toward focusing on continuous improvement.

With that said, here are five practical strategies to optimize your change management processes and examples of how to implement each.

1. Focus on robust testing and quality assurance

First things first, if you want to ensure a successful change implementation, it’s important to invest in comprehensive testing and quality assurance processes upfront. This means using various testing techniques and tools to identify and address potential issues before deploying changes to the production environment. Here are some examples of testing approaches:

Automated Testing: Consider using frameworks like Selenium or Jest to run tests on software applications automatically before shipping them out. For instance, when a developer makes changes to a feature, automated tests can verify if the core functionalities of the application still work as intended, which can save you from the headache of responding to related incidents after the fact.

Regression Testing: Whenever a new change is introduced, conduct regression testing to ensure that previously functional aspects of the application have not been adversely affected. This way, you can catch unintended side effects that might have been introduced during the development process.
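To make the idea concrete, here is a minimal sketch of automated regression tests written as plain Python functions, which a runner such as pytest could collect. The `cart_total` function and its behavior are hypothetical stand-ins for whatever feature you are changing.

```python
def cart_total(prices: list[float], discount_percent: float = 0.0) -> float:
    """Hypothetical function under test: sum prices, then apply a discount."""
    if not 0 <= discount_percent <= 100:
        raise ValueError("discount_percent must be between 0 and 100")
    subtotal = sum(prices)
    return round(subtotal * (1 - discount_percent / 100), 2)


def test_total_without_discount():
    # Existing behavior that must keep working after any change.
    assert cart_total([10.0, 5.5]) == 15.5


def test_total_with_discount():
    assert cart_total([100.0], discount_percent=20) == 80.0


def test_empty_cart_is_zero():
    assert cart_total([]) == 0.0


def test_rejects_invalid_discount():
    try:
        cart_total([10.0], discount_percent=150)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a discount above 100%")
```

Running a suite like this on every change, for example in CI, is what turns these individual checks into a regression safety net.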

2. Implement incremental and controlled deployments

Instead of deploying all changes at once, it’s a good idea to implement an incremental and controlled deployment strategy. This approach involves releasing changes gradually in smaller batches to specific segments of users or systems. Two common techniques for controlled deployments are:

Feature Flags: Also known as feature toggles, feature flags allow you to enable or disable specific features in real time without redeploying the entire application. By controlling the rollout of features to specific user groups, you can minimize the impact of potential failures and incidents.

Canary Deployments: In a canary deployment, a small percentage of users receive the new changes first, while the rest continue using the existing version. This allows you to monitor the performance and behavior of the new version in a real-world scenario before fully deploying it. With canary deployments, the change might still fail, but the blast radius is drastically reduced.
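Both techniques rely on exposing a change to a stable subset of users. One common way to do that is to hash each user into a bucket, sketched below. The flag name, user IDs, and function names are all hypothetical, and a dedicated feature-flag service would normally handle this for you.

```python
import hashlib


def rollout_bucket(flag_name: str, user_id: str) -> int:
    """Map a user to a stable bucket from 0 to 99 for a given flag."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(flag_name: str, user_id: str, rollout_percent: int) -> bool:
    """Return True if the user falls inside the current rollout percentage."""
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    return rollout_bucket(flag_name, user_id) < rollout_percent


# Hypothetical 10% rollout across 1,000 users.
users = [f"user-{n}" for n in range(1000)]
enabled = sum(is_enabled("new-catalog", user, 10) for user in users)
print(f"{enabled} of {len(users)} users see the new catalog")
```

Because the bucket depends only on the flag and the user, a user who sees the change at 10% keeps seeing it as the rollout widens, and setting the percentage to 0 switches the feature off without a redeploy.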

3. Use monitoring and observability tools

To detect and respond to incidents quickly, you should lean on dedicated monitoring and observability software like Datadog or Sentry. These tools provide real-time insights into the health and performance of your systems during and after deployments. Some of the capabilities they offer include:

Application Performance Monitoring (APM): Helps collect data on application performance metrics, such as response times, error rates, and resource utilization. This data helps you pinpoint performance bottlenecks and potential issues.

Log Analysis: Lets you analyze log data generated by your applications and infrastructure to identify anomalies and errors. Logging can help you understand the sequence of events leading up to a failure and assist in diagnosing the root cause.
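As a rough illustration of the kind of signal these tools surface, the sketch below compares the error rate in application logs before and after a deployment. The log format (`<ISO timestamp> <LEVEL> <message>`), the sample lines, and the deployment time are all made up for the example; a real setup would query your observability tool instead.

```python
from datetime import datetime, timedelta


def error_rate(lines: list[str], start: datetime, end: datetime) -> float:
    """Return the share of log lines at ERROR level within [start, end)."""
    total = errors = 0
    for line in lines:
        timestamp_text, level, _message = line.split(" ", 2)
        timestamp = datetime.fromisoformat(timestamp_text)
        if start <= timestamp < end:
            total += 1
            if level == "ERROR":
                errors += 1
    return errors / total if total else 0.0


# Hypothetical log lines around a deployment at 12:00.
LOGS = [
    "2024-05-01T11:58:00 INFO request served",
    "2024-05-01T11:59:00 INFO request served",
    "2024-05-01T11:59:30 ERROR upstream timeout",
    "2024-05-01T11:59:45 INFO request served",
    "2024-05-01T12:01:00 ERROR catalog lookup failed",
    "2024-05-01T12:02:00 ERROR catalog lookup failed",
    "2024-05-01T12:03:00 INFO request served",
    "2024-05-01T12:04:00 ERROR catalog lookup failed",
]

deployed_at = datetime(2024, 5, 1, 12, 0)
window = timedelta(minutes=5)
before = error_rate(LOGS, deployed_at - window, deployed_at)
after = error_rate(LOGS, deployed_at, deployed_at + window)
print(f"before: {before:.0%}, after: {after:.0%}")  # prints "before: 25%, after: 75%"
```

A jump like this right after a deployment is a strong hint that the change is the cause, and the error messages point to where to look first.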

4. Perform post-deployment validation

Improving your change management isn’t exclusively a pre-deployment exercise. After deploying changes, it's crucial to validate their success and functionality. Post-deployment validation involves monitoring service levels (potentially leaning on SLOs) and collecting user feedback to ensure changes are working as intended. One such validation practice is:

Monitoring KPIs: Continuously monitor essential performance metrics, such as response times, conversion rates, and error rates, to ensure that the changes have not negatively impacted critical aspects of your application or service.
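A simple way to automate this check is to compare each KPI against its pre-deployment baseline and flag anything that worsened beyond a tolerance. The sketch below assumes hypothetical KPI names, values, and a 10% tolerance; appropriate thresholds depend on your service and its SLOs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Kpi:
    name: str
    baseline: float         # value before the deployment
    current: float          # value after the deployment
    higher_is_better: bool  # e.g. True for conversion rate, False for error rate


def regressed_kpis(kpis: list[Kpi], tolerance_percent: float) -> list[str]:
    """Return names of KPIs that worsened by more than tolerance_percent."""
    regressed = []
    for kpi in kpis:
        if kpi.baseline == 0:
            continue  # relative change is undefined; use an absolute threshold instead
        change_percent = (kpi.current - kpi.baseline) / kpi.baseline * 100
        worsened_by = -change_percent if kpi.higher_is_better else change_percent
        if worsened_by > tolerance_percent:
            regressed.append(kpi.name)
    return regressed


# Hypothetical readings taken before and after a deployment.
readings = [
    Kpi("response_time_ms", baseline=200.0, current=210.0, higher_is_better=False),
    Kpi("error_rate_percent", baseline=1.0, current=1.8, higher_is_better=False),
    Kpi("conversion_rate_percent", baseline=4.0, current=3.9, higher_is_better=True),
]
print(regressed_kpis(readings, tolerance_percent=10))  # prints "['error_rate_percent']"
```

An empty list means the change stayed within tolerance; anything else is a candidate for a rollback or a closer look.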

5. Foster a culture of continuous learning and improvement

To truly reduce the Change Failure Rate, it's crucial to promote a culture of continuous learning and improvement within your organization. If you accept that things will fail some of the time, then investing in robust feedback and learning loops is a great way to get value for money! Encouraging collaboration, openness, and learning from past incidents is a fantastic way to prevent similar issues from repeating in the future. Some examples of fostering such a culture include:

Being transparent with incidents: Incidents are a powerful learning tool, so declaring and managing them in public can help spread knowledge and expertise across an organization, which ultimately helps make future changes more successful.

Post-Incident Reviews: Conduct thorough post-incident reviews after any major failure or outage. It’s also worth considering holding these reviews for any small bugs that come up repeatedly.

When you host these sessions, you should involve all relevant stakeholders in a blameless environment to understand what happened, why it happened, and what steps can be taken to prevent it from recurring.

By implementing these strategies and fostering a culture of continuous improvement, you can optimize your change management processes and significantly reduce your Change Failure Rate, leading to more reliable and efficient operations.

Incidents will happen: incident.io can help you navigate them

While I’ve just outlined a bunch of different ways to help reduce the likelihood of incidents happening as a result of changes, the fact is that incidents can and will still happen. For moments like these, it’s important to have an incident response process in place that helps you resolve incidents faster and cut back on any downtime.

That’s where incident.io comes in.

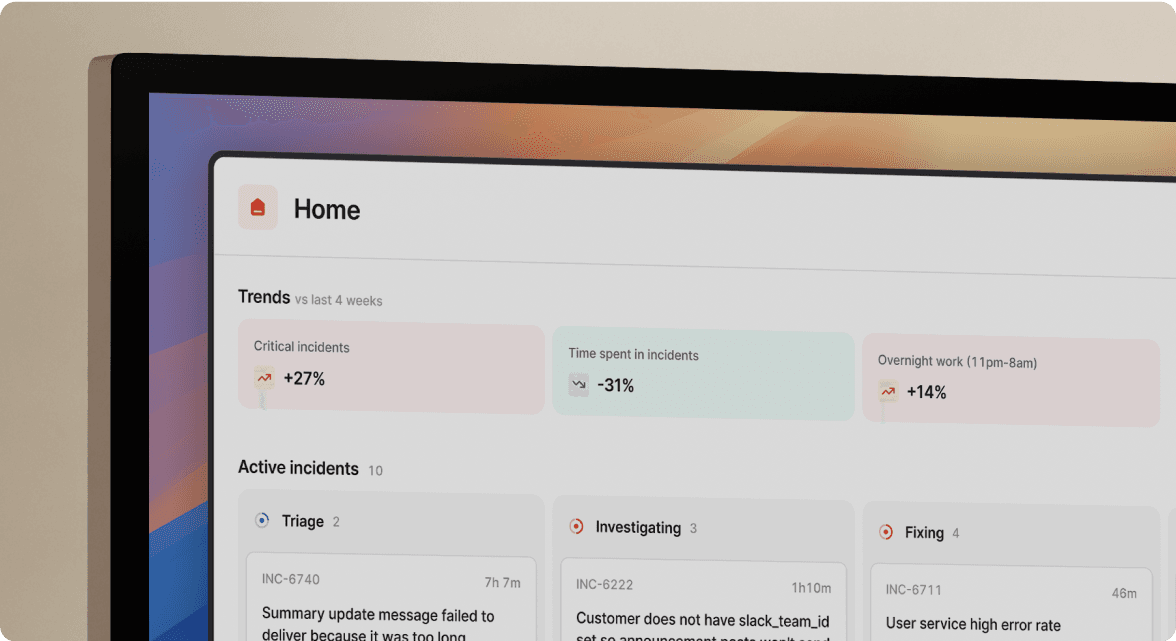

incident.io is the easiest way to manage incidents from declaration to post-mortem. Intuitively designed and with powerful built-in workflow automation, it can turn incident response into a superpower for your organization.

With features that streamline communication, such as dedicated incident Slack channels, and an Insights dashboard that highlights any areas of improvement in your incident response, incident.io is an end-to-end solution that helps businesses build more resilient products. Interested in learning more? Be sure to contact us to schedule a custom demo.

More from the DevOps Research and Assessment (DORA) guide

What are DORA metrics and why should you care about them?

Google's DORA metrics can help organizations create better products, build stronger teams, and improve resilience long-term.

Luis Gonzalez

Development efficiency: Understanding DORA's Mean Lead Time for Changes

By using DORA's Mean Lead Time for Changes metric, organizations can increase their speed of iteration.

Luis Gonzalez

Shipping at speed: Using DORA's Deployment Frequency to measure your ability to deliver customer value

By using DORA's Deployment Frequency metric, organizations can improve customer impact and product reliability.

Luis Gonzalez
