Incident management tools for SRE teams: What SREs actually need

Updated January 19, 2026

TL;DR: The bottleneck in modern incident response isn't alerting. It's coordination overhead. SRE teams spend 10-15 minutes per incident juggling PagerDuty, Slack, Datadog, Jira, and Google Docs before troubleshooting starts. Slack-native platforms like incident.io eliminate this "coordination tax" through automated channel creation, timeline capture, and AI-powered post-mortems. PagerDuty remains the enterprise standard for complex alerting. But if your MTTR stays high because your team manages process instead of solving problems, you need a coordination platform.

The difference between a 15-minute fix and a 4-hour outage often comes down to how effectively teams coordinate under pressure, not individual technical skills. Your monitoring stack already detects issues instantly. The problem is what happens next: someone creates a Slack channel manually, hunts through PagerDuty to find who's on-call, opens Datadog in three browser tabs, starts a Google Doc for notes, creates a Jira ticket, and remembers to update the status page. Twelve minutes gone before troubleshooting starts.

This isn't incident management. It's incident administration.

The "coordination tax": Why SRE teams are burning out

Coordination tax is the time your team spends on logistics instead of engineering during incidents. incident.io's research on incident communication best practices shows manual status updates and stakeholder notifications extend MTTR by 10-15 minutes per incident. At 15 incidents per month, that's 150-225 minutes of administrative overhead every month that your tools should handle automatically.
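To put that arithmetic in one place, here is a minimal sketch that turns the 10-15 minute per-incident figure into a monthly range. The function name and the default range are illustrative assumptions, not part of any incident.io tooling; swap in your own incident count and measured overhead.

```python
def monthly_coordination_minutes(
    incidents_per_month: int,
    overhead_minutes: tuple[int, int] = (10, 15),  # assumed per-incident range from the text
) -> tuple[int, int]:
    """Return the (low, high) minutes of coordination overhead per month."""
    low, high = overhead_minutes
    return incidents_per_month * low, incidents_per_month * high


low, high = monthly_coordination_minutes(15)
print(f"{low}-{high} minutes/month ({low / 60:.2f}-{high / 60:.2f} hours)")
```

At 15 incidents per month this gives 150-225 minutes, or roughly 2.5 to 3.75 hours of logistics.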

The typical incident response creates massive coordination overhead. Your team checks PagerDuty to find who's on-call, opens Datadog for metrics, coordinates in Slack, takes notes in Google Docs, creates Jira tickets, and updates Statuspage. That's six tools and roughly twelve minutes of logistics before troubleshooting starts. Google's SRE Workbook on incident response makes a related point: large-scale incidents become confusing without an agreed-upon structure.

One G2 reviewer captured this perfectly:

"incident.io consolidates workflows that were spread across multiple tools into one centralized hub within Slack, which is really helpful because everyone's already there." - Alex N. on G2

The cognitive load compounds when you're on-call at 2 AM. Context-switching between alerting (PagerDuty), observability (Datadog), communication (Slack), documentation (Google Docs), and ticketing (Jira) means critical details slip through the cracks. Post-mortems become archaeology expeditions three days later because nobody captured the timeline in real-time.

What SREs actually need in incident response software

Slack-native workflows vs. web-first integrations

"Slack-native" isn't marketing speak. It's an architectural distinction that determines whether your tool eliminates coordination tax or adds to it.

Slack-native incident management makes chat the primary interface. Responders declare incidents, coordinate response, and capture timelines inside the channel through slash commands, automated channel management, and real-time context capture. The entire incident workflow happens in Slack, from /inc declare through /inc resolve.

Web-first tools with Slack integrations send notifications to Slack with links that open external portals. The problem with manual status pages is context-switching. You're coordinating in Slack, but updating your status page means logging into a separate web portal, which gets forgotten during a fire.

When you type /inc declare in Slack with a truly native platform, you can configure workflows to automatically update status pages when incidents are declared or resolved. That's the difference between integration and native architecture.

"I appreciate how incident.io consolidates workflows that were spread across multiple tools into one centralized hub within Slack... It really helps teams manage and tackle incidences in a more uniform way." - Alex N. on G2

Automated timeline capture and post-mortem generation

The post-mortem problem isn't writing. It's remembering. Ninety minutes of Slack scroll-back archaeology trying to reconstruct who did what when, which alerts correlated, and what decisions were made in the Zoom call nobody recorded.

incident.io's automated timeline capture solves this. The platform scrapes every Slack message, slash command execution, role assignment, and call transcription as events happen. incident.io's Scribe feature provides AI-powered transcription and summarization for incident calls, extracting key decisions without requiring a dedicated note-taker.

When you resolve the incident, your post-mortem is 80% complete because the platform captured the timeline automatically. You spend 10 minutes refining, not 90 minutes reconstructing from memory.

"Incident provides a great user experience when it comes to incident management. The app helps the incident lead when it comes to assigning tasks and following them up." - Verified User on G2

Deep integration with observability stacks (Datadog, Prometheus)

Integration depth matters more than integration count. It's not enough to link to a Datadog dashboard. Your incident management platform should pull the specific graph, alert context, and recent deployment history directly into the Slack channel so responders see critical data without opening browser tabs.

Tools for migrating from PagerDuty and migrating from Opsgenie demonstrate how platforms can reduce migration friction by importing existing configurations. Similarly, migrating Datadog monitors to incident.io shows how native integrations preserve your existing observability investment while adding coordination capabilities on top.

AI SRE capabilities: Moving beyond log correlation

Most "AI-powered" incident tools just correlate logs or answer basic questions. Useful AI achieves measurable outcomes: identifying likely root causes, automating remediation suggestions, and timeline summarization that actually saves time.

incident.io's AI SRE handles the first 80% of incident response work. This means the AI filters noise, adds context from past incidents, and handles routine response tasks so engineers only get paged when it matters. The AI analyzes deployment timing, error log patterns, and service dependencies to surface the likely culprit, not just correlated logs.

When a P1 incident fires at 2:47 AM, the AI immediately correlates the spike to a deployment 15 minutes earlier, surfaces the specific commit hash and diff, and identifies the engineer who deployed it. Instead of spending 20 minutes hunting through logs, you start with the right hypothesis in 30 seconds. The AI also suggests deployment-ready remediation steps: not just "here are some related logs" but "this deployment at 02:32 likely caused the issue, here's the diff."
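The deployment-timing part of that correlation can be illustrated with a simple heuristic: pick the most recent deployment to the affected service inside a lookback window before the alert. This is a sketch under stated assumptions, not incident.io's actual algorithm; the `Deployment` record, the 30-minute window, and the sample commits and names are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class Deployment:
    service: str
    commit: str          # hypothetical commit hash
    author: str          # hypothetical engineer name
    deployed_at: datetime


def likely_culprit(
    alert_time: datetime,
    service: str,
    deployments: list[Deployment],
    window: timedelta = timedelta(minutes=30),  # assumed lookback window
) -> Optional[Deployment]:
    """Return the latest deployment to `service` within `window` before the alert."""
    candidates = [
        d for d in deployments
        if d.service == service
        and timedelta(0) <= alert_time - d.deployed_at <= window
    ]
    return max(candidates, key=lambda d: d.deployed_at, default=None)


alert = datetime(2026, 1, 19, 2, 47)
history = [
    Deployment("api", "a1b2c3d", "dana", datetime(2026, 1, 18, 16, 5)),
    Deployment("api", "e4f5a6b", "sam", datetime(2026, 1, 19, 2, 32)),
    Deployment("billing", "0c9d8e7", "lee", datetime(2026, 1, 19, 2, 40)),
]
suspect = likely_culprit(alert, "api", history)
print(suspect)
```

Here the 02:32 deployment to `api` is returned, while the more recent `billing` deployment is ignored because it touched a different service. A real system would also weigh error log patterns and service dependencies, as described above.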

One SRE lead noted:

"AI in general is something that can continue to be improved upon... making smart workflows and having AI with the right context is something that [incident.io does] really well." - Alex N. on G2

Top incident management tools for SRE teams

| Tool | Slack-Native | On-Call Pricing | AI Capabilities | Support Score (G2) |
|---|---|---|---|---|
| incident.io | Yes (primary interface) | +$10/user/month (Team annual) or +$20/user/month (Pro) | Handles 80% of response work, auto-remediation | 9.8 |
| PagerDuty | No (web-first + notifications) | Included in higher tiers | Basic correlation (add-on cost undisclosed) | 8.9 |
| Opsgenie | No (web-first + notifications) | Included | Limited automation | 8.7 ⚠️ EOL April 2027 |
| FireHydrant | Yes (Slack-focused) | Free responder roles | Workflow automation | 9.2 |
| Rootly | Yes (born in Slack) | Included | Custom workflow triggers | Not available |

incident.io: The Slack-native platform for end-to-end coordination

Best for: SRE teams at 50-500 person companies who need to eliminate coordination overhead and reduce MTTR through automation.

Pricing: Team plan at $19/user/month (monthly); on-call scheduling adds $12/user/month. Pro plan at $25/user/month includes Microsoft Teams support and advanced AI features; on-call adds $20/user/month. Real cost with on-call: $31/user/month (Team monthly) or $45/user/month (Pro).

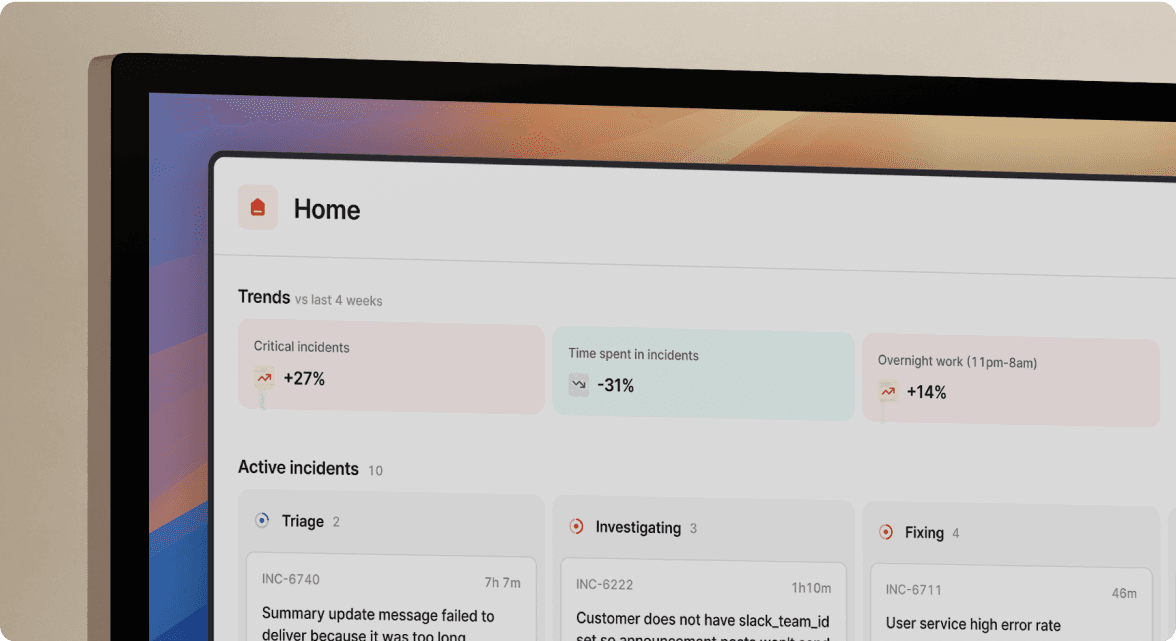

incident.io treats Slack as the primary interface, not a notification endpoint. Users can declare an incident instantly from any message using shortcuts or slash commands. The platform automatically creates dedicated channels, pulls in on-call responders based on affected services, starts timeline capture, and surfaces relevant runbooks.

Your workflow reduces coordination to seconds instead of minutes. Alert fires from Datadog. incident.io creates #inc-2847-api-latency-spike. The on-call engineer gets paged. The service owner from Catalog joins automatically. Timeline recording begins. Engineers use intuitive commands like /inc escalate, /inc assign, and /inc severity critical without leaving Slack.

"Without incident.io our incident response culture would be caustic, and our process would be chaos. It empowers anybody to raise an incident - and helps us quickly coordinate any response across technical, operational and support teams." - Matt B. on G2

Intercom migrated hundreds of engineers off PagerDuty and Atlassian Status Page onto incident.io in just a few weeks, resulting in faster incident resolution and reduced MTTR. The case study emphasizes that teams now have everything they need in one place, enabling quicker decisions, clearer communication, and simpler operational management.

The platform's support velocity sets it apart. incident.io received a Quality of Support score of 9.8 on G2, compared to PagerDuty's 8.9, suggesting more responsive and helpful customer service during critical incidents.

"Incident.io tech support is fantastic. When you have a problem, someone from incident.io immediately opens a chat with you in the slack channel, email, or anywhere else to solve the problem immediately." - Tiago T on G2

Watch out for: Microsoft Teams integration has fewer features than Slack, though the platform does support coordinating incidents directly from Microsoft Teams with automated workflows and AI assistance. Verify the Teams functionality meets your needs during trial if you're not Slack-centric.

PagerDuty: The enterprise standard for alerting

Best for: Large enterprises (500+ engineers) needing sophisticated alert routing at scale with complex escalation policies.

Pricing: Base pricing of $21-$41/user/month excludes critical features, with pricing opacity driving teams away. Add-on costs for AI features, runbooks, and noise reduction aren't publicly disclosed. One SRE lead reported their total cost reaching $65/user/month after add-ons, making total cost of ownership difficult to predict.

PagerDuty excels at one thing: waking you up reliably. According to PagerDuty's official integrations page, they offer over 700 integrations and battle-tested alerting infrastructure. The platform handles complex routing rules, time-based escalations, and multi-vendor monitoring aggregation better than any competitor.

The challenge is what happens after the alert. PagerDuty's Slack integration sends notifications with links to web UI. Clicking opens a browser. You manage the incident in PagerDuty's portal. Then you copy-paste updates back to Slack for team coordination. The timeline lives in PagerDuty. The conversation lives in Slack. Two sources of truth that never sync.

"I hate PagerDuty and really wish incident.io would let us replace PagerDuty. Hopefully soon." - Mike H on G2

That review captures the frustration: PagerDuty solves alerting but creates coordination friction. For organizations where incident response happens in Slack anyway, maintaining a separate incident management portal adds overhead.

PagerDuty's Quality of Support score of 8.9 trails incident.io's 9.8, reflecting the shift from live chat to email-only support that frustrated long-time customers.

Watch out for: Hidden costs in add-ons and per-seat pricing that escalates quickly as teams grow. If your bottleneck is coordination rather than alerting, PagerDuty won't solve your MTTR problem. Compare total cost carefully: PagerDuty's Business plan starts at $41/user/month. Add on-call ($15/user/month), AI features (undisclosed), and status pages (undisclosed). For a 25-person team, the disclosed items alone come to 25 × ($41 + $15) × 12 = $16,800/year, so real TCO likely exceeds $20,000/year once the undisclosed add-ons are included, with less Slack integration depth than incident.io offers.

Opsgenie: The legacy option you should avoid

Best for: No one. Opsgenie reaches end-of-life on April 5, 2027. Atlassian stopped new sales on June 4, 2025.

Critical context: Opsgenie has been unavailable for new purchases since June 4, 2025, and support ends on April 5, 2027, when all Opsgenie data will be deleted. Atlassian requires existing Opsgenie data to be migrated before that date, with alerting and on-call features now available in Jira Service Management and Compass.

This isn't speculation. It's official Atlassian policy. Evaluating Opsgenie in 2026 means planning a mandatory migration in less than 18 months. Any investment in configuration, training, or process documentation becomes technical debt with an expiration date.

Atlassian offers automated migration tools to Jira Service Management, which provides the path of least contract friction if you're already an Atlassian shop. But JSM is service-desk-first design, not purpose-built for real-time incident response like Slack-native platforms.

Opsgenie's Quality of Support score of 8.7 trails incident.io's 9.8, and the sunset announcement creates uncertainty around continued support quality during the migration period.

Watch out for: The sunset deadline creates urgency. Don't wait for Atlassian to force your hand. Evaluate alternatives now while you control the migration timeline.

FireHydrant: Workflow automation for service owners

Best for: Platform teams wanting strong service catalog integration with customizable runbooks.

FireHydrant competes directly in the Slack-native incident management space, offering per-user pricing with free responder roles and comprehensive workflow automation. The platform emphasizes service ownership models where teams define their own incident response patterns.

FireHydrant's Quality of Support score of 9.2 is strong but trails incident.io's 9.8, suggesting solid support without the exceptional responsiveness that differentiates incident.io.

Watch out for: If you prioritize support velocity and AI maturity over maximum customization flexibility, incident.io's opinionated defaults and faster feature shipping may better fit your needs.

Rootly: Configurable automation for platform teams

Best for: Teams needing highly customizable workflows with enterprise security for regulated industries.

Rootly was born from recognizing Slack was already where everything was happening. The founders asked "What if incident response lived inside Slack?" The question emerged after building an internal tool where processes were fragmented, manual, and chaotic. The platform automates critical incident response steps, instantly creating dedicated Slack channels, inviting stakeholders, and launching workflows so responders focus on solving issues rather than managing process.

Rootly provides enterprise security features suited for regulated industries, holding SOC 2 Type II certification with explicit GDPR, CCPA, HIPAA, and DORA compliance support, offering native secrets management with HashiCorp Vault, granular RBAC, and comprehensive audit logs.

Watch out for: High customization comes with configuration overhead. If you want "works out of the box" with strong defaults, incident.io's opinionated approach accelerates time-to-value.

Key considerations for choosing an incident management tool

Pricing models and TCO transparency

Pricing opacity wastes evaluation time and creates sticker shock at contract renewal. Look beyond advertised "starts at" pricing to understand real total cost of ownership.

incident.io uses responder-based pricing. Responder and On-call seats are for users who actively engage with incident.io to be on-call and manage incidents, whereas Viewers (free seats) are users who declare and join incident channels to contribute information but don't actively participate in response. This model scales efficiently: your entire engineering org can have Viewer access for context, while only the engineers in rotation (25 in the example that follows) pay for Responder + On-call seats.

Calculate your real cost: [(on-call engineers × base price) + (on-call engineers × on-call add-on)] × 12 months. For a 25-person rotation on the incident.io Team plan with monthly billing: (25 × $19) + (25 × $12) = $775/month, or $9,300/year. For the Pro plan with advanced features: (25 × $25) + (25 × $20) = $1,125/month, or $13,500/year.
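The same calculation is easy to script so you can compare vendors on equal terms. This is a minimal sketch; the function name is illustrative, and the prices are the ones quoted in this article, so check current list prices before relying on the output.

```python
def annual_cost(seats: int, base_price: float, on_call_addon: float) -> float:
    """Annual cost in USD: (base + on-call add-on) per seat, times 12 months."""
    monthly = seats * (base_price + on_call_addon)
    return monthly * 12


team = annual_cost(25, 19, 12)  # Team plan, monthly billing
pro = annual_cost(25, 25, 20)   # Pro plan
print(f"Team: ${team:,.0f}/year, Pro: ${pro:,.0f}/year")
# prints "Team: $9,300/year, Pro: $13,500/year"
```

Keeping the seat count as a parameter makes it simple to model growth, for example rerunning the numbers for a rotation of 40 before a contract renewal.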

On-call management and cognitive load

Your on-call tool should reduce burden, not add to it. Evaluate how easy it is to swap shifts when someone gets sick, handle shadow rotations for onboarding junior engineers, and visualize coverage gaps across time zones. A tool that handles all of this where your team already communicates eliminates the "shadow spreadsheet" problem, where on-call schedules live in PagerDuty but team communication happens in Slack, requiring constant manual checking.

Syncing on-call schedules with Slack user groups ensures the right people get @-mentioned automatically when incidents fire, eliminating manual lookup across spreadsheets or PagerDuty portals.

"incident.io allows us to focus on resolving the incident, not the admin around it. Being integrated with Slack makes it really easy, quick and comfortable to use for anyone in the company, with no prior training required." - Andrew J on G2

Support velocity and reliability

When your tool breaks during an incident, every minute of delayed support extends customer-facing downtime. Evaluate whether you get a shared Slack channel with engineering or an email ticket queue.

incident.io scores 9.8 versus PagerDuty's 8.9 for Quality of Support on G2, demonstrating exceptional responsiveness that matters during critical incidents.

"Outside of incident response our feature and support requests are acknowledged in record time by a team that obviously cares about the product they're building and the problems they're solving." - Matt B on G2

Support velocity isn't just about fixing bugs. It's about competitive velocity. When one platform ships four requested features while a competitor is still scheduling a discovery call, that tells you which vendor will adapt to your evolving needs.

How to evaluate: A checklist for SRE leads

Run a structured 30-day proof of concept using real incidents, not hypothetical scenarios:

- Test Slack-native workflow: Declare a simulated incident using only Slack commands. Track how many clicks require leaving Slack. Target: zero browser tabs for basic incident management.

- Validate integration speed: Trigger a Datadog alert and measure seconds until incident channel creation and on-call engineer notification. Industry average with manual processes is 8-12 minutes; automated platforms should reduce this to under 2 minutes.

- Assess timeline accuracy: Run one real incident end-to-end, then review the auto-captured timeline for completeness. Check if it captured key decisions, role changes, and linked Datadog graphs automatically. Target: 80%+ complete post-mortem draft requiring only refinement.

- Measure cognitive load reduction: Survey five engineers after using the platform. Ask: "Did this reduce or increase your stress during incident response?" Look for unanimous "reduced" responses.

- Test support responsiveness: File a real bug report or feature request during the trial. Track time to first response and resolution. Look for sub-4-hour response to critical issues, sub-48-hour response to feature feedback.
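To keep the proof of concept honest, record timestamps during each trial run and score them against the targets above. The sketch below covers only the integration-speed check (alert fired to incident channel created, target under 2 minutes); the trial data is hypothetical and the function name is an assumption for illustration.

```python
from datetime import datetime
from statistics import median

TARGET_SECONDS = 120  # "under 2 minutes" target from the checklist


def seconds_to_channel(alert_fired: datetime, channel_created: datetime) -> float:
    """Seconds between the alert firing and the incident channel existing."""
    return (channel_created - alert_fired).total_seconds()


# Hypothetical trial data: (alert fired, incident channel created)
trial_runs = [
    (datetime(2026, 1, 5, 10, 0, 0), datetime(2026, 1, 5, 10, 0, 40)),
    (datetime(2026, 1, 9, 23, 15, 0), datetime(2026, 1, 9, 23, 16, 30)),
    (datetime(2026, 1, 14, 3, 2, 0), datetime(2026, 1, 14, 3, 4, 30)),
]

latencies = [seconds_to_channel(fired, created) for fired, created in trial_runs]
within_target = [s < TARGET_SECONDS for s in latencies]
print(
    f"median: {median(latencies):.0f}s, "
    f"runs within target: {sum(within_target)}/{len(latencies)}"
)
```

With this sample data the median is 90 seconds and two of three runs meet the target. The same pattern extends to the other checklist items, such as time to first support response.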

Watch incident.io's video on improvements to on-call to see how the platform handles on-call engineering workflows. For post-incident capabilities, see how incident.io shines for post-incident workflows.

Moving from coordination chaos to coordinated response

Your decision is simple. If MTTR stays high despite excellent monitoring, your bottleneck is coordination overhead. The 10-15 minutes wasted assembling teams, hunting context, and updating tools before troubleshooting starts adds up to hours of lost time per month. Slack-native platforms eliminate this tax by automating the administrative work so engineers focus on engineering.

Try incident.io free and run your first incident in Slack. See if declaring with /inc declare, escalating with /inc escalate, and resolving with /inc resolve feels more natural than toggling between five tools. Or book a demo to see the AI SRE features identify likely root causes in real incidents.

"Incident has transformed our incident response to be calm and deliberate. It also ensures that we do proper post-mortems and complete our repair items." - Mike H. on G2

Your team already has the technical skills to resolve incidents. Give them tools that eliminate the coordination chaos so those skills can shine.

Key terminology

MTTR (Mean Time To Resolution): The average time from incident detection to full resolution, covering response, troubleshooting, and validation. Coordination overhead directly inflates MTTR without adding engineering value.

Slack-native: Architecture where the entire incident lifecycle happens inside Slack through slash commands and automated channel management, rather than sending notifications from a web-first tool. Native platforms treat chat as the primary interface.

Coordination tax: The time teams spend on incident logistics (creating channels, updating tools, finding context, notifying stakeholders) instead of engineering work (identifying root cause, deploying fixes). Typically adds 10-15 minutes per incident.

Timeline capture: Automated recording of all incident events (Slack messages, role assignments, alerts, decisions) in chronological order without manual note-taking, enabling accurate post-mortems without reconstruction from memory.

AI SRE: Artificial intelligence that automates incident response tasks like identifying likely root causes based on deployment timing and service dependencies, not just generic log correlation. Handles routine response work so engineers focus on complex problem-solving.
