Incident communication best practices: Keep stakeholders informed

Updated January 8, 2026

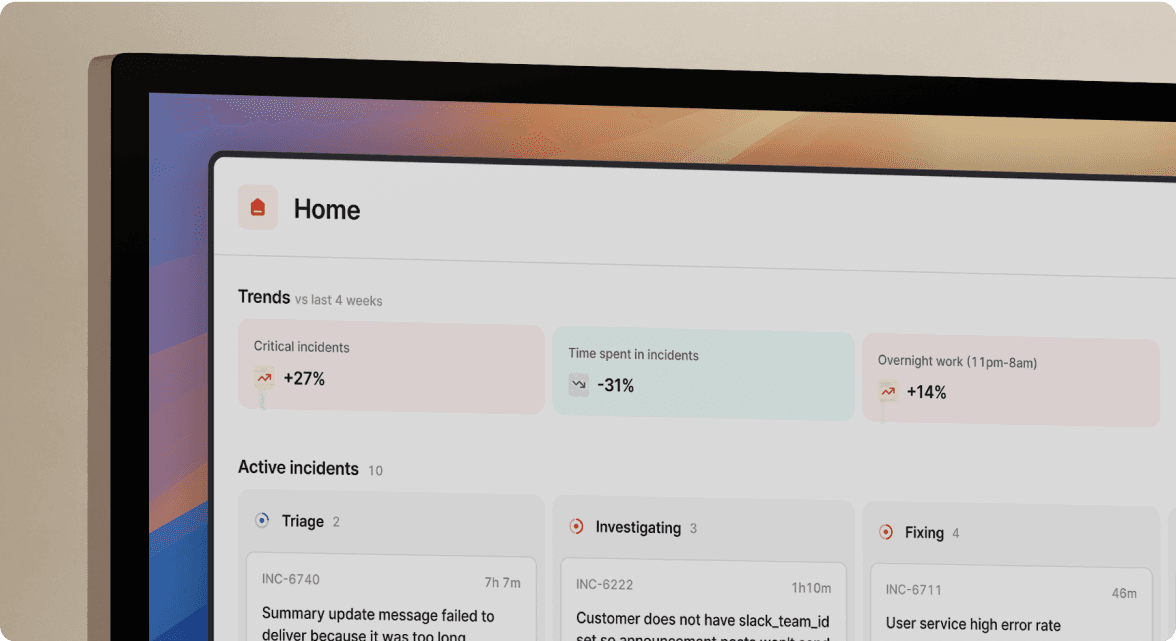

TL;DR: Manual status updates and stakeholder notifications are toil that extends MTTR by 10-15 minutes per incident and burns out your team. The fix: automate your communication workflow so updates happen as a byproduct of incident response, not a separate task. Use severity-based routing to notify the right people (not everyone), maintain a rigid update cadence (even when there's no news), and let AI draft your messages. Teams using automated workflows reclaim hours previously spent on manual processes while keeping stakeholders informed without pulling engineers away from troubleshooting.

In the heat of a P1, every minute spent crafting a polite status update for executives is a minute not spent fixing the root cause. The pattern is familiar: database troubleshooting gets interrupted by Customer Support asking for ETAs, executives demanding status, and customer success managers relaying outdated information because the status page hasn't been updated in 23 minutes. Communication becomes a competing priority that slows down the actual fix.

This is the communication tax that extends incidents and burns out engineers. The solution isn't "better discipline" or "remember to update more often." The solution is treating communication like code: automated, templated, and reliable. This guide covers how to structure, template, and automate incident communication so your team can focus on resolution without leaving stakeholders in the dark.

The hidden cost of communication toil in incident response

The Google SRE Book defines toil as work that is manual, repetitive, automatable, tactical, devoid of enduring value, and scales linearly as a service grows. Manual status updates check every box:

- Manual: An engineer stops troubleshooting to type

- Repetitive: The format is similar each time

- Automatable: Tools can trigger updates automatically

- Tactical: It's reactive work during an incident

- Devoid of enduring value: Once the incident resolves, the update has served its purpose

- Scales linearly: More incidents mean more manual updates

The typical incident response process creates massive coordination overhead. Checking PagerDuty to find who's on call, opening Datadog for metrics, coordinating in Slack, taking notes in Google Docs, creating Jira tickets, and updating Statuspage means juggling six tools and 12 minutes of logistics before troubleshooting even starts.

The real cost isn't just time. When you don't communicate, stakeholders assume the worst. Research shows poor incident communication directly affects customer churn, as customers left in the dark during outages worry about future reliability and evaluate competitors. Proactive incident communication builds trust with customers and can actually generate sales by positioning you as a forthright business partner.

"incident.io brings calm to chaos. We've been running incidents in Slack for a few years, however, our process and tooling were limiting and made incident response a challenge. Incident.io is now the backbone of our response, making communication easier, the delegation of roles and responsibilities extremely clear, and follow-ups accounted for." - Verified user review of incident.io

5 best practices for effective incident communication

1. Automate status page updates to stop the "is it down?" tickets

Your first external communication should go out within minutes of detecting an issue, even without complete information. Customers already know something is wrong. Silence creates a vacuum that fills with speculation, frustration, and social media complaints.

The problem with manual status pages is context-switching. You're in Slack coordinating response, and updating your status page means logging into a separate web portal, remembering your login, crafting a message, and clicking publish. During a fire, this gets forgotten.

The fix is treating your status page as an extension of your incident channel. When you type /inc declare in Slack, incident.io's built-in status pages automatically post an "Investigating" update. When you resolve with /inc resolve, the status page updates to "Resolved" without you touching a web portal. For a walkthrough of how this works in practice, watch our Status Pages for startups video.
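The command-to-status mapping described above can be sketched as a small event handler. This is a hypothetical illustration, not incident.io's implementation or API. The `StatusPage` class, the `handle_command` function, and the in-memory list of posts are assumptions standing in for whatever status page backend you use.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical mapping from incident commands to (public status, message template).
# Commands not listed here (e.g. internal-only updates) publish nothing.
COMMAND_TO_UPDATE = {
    "declare": ("Investigating", "We are investigating issues with {service}."),
    "resolve": ("Resolved", "The issue affecting {service} has been resolved."),
}


@dataclass
class StatusPage:
    """In-memory stand-in for a real status page backend (assumption)."""

    posts: list[tuple[datetime, str, str]] = field(default_factory=list)

    def publish(self, status: str, message: str) -> None:
        # Keep a UTC timestamp with every post so the public history is auditable.
        self.posts.append((datetime.now(timezone.utc), status, message))


def handle_command(page: StatusPage, command: str, service: str) -> str | None:
    """Publish a status page update as a side effect of an incident command.

    Returns the status that was published, or None if the command has no
    public-facing effect.
    """
    update = COMMAND_TO_UPDATE.get(command)
    if update is None:
        return None
    status, template = update
    page.publish(status, template.format(service=service))
    return status
```

The design point is that the public update is a side effect of a command responders already run, so there is no separate portal to forget.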

The Slack integration is what makes this workflow stick. As one user noted:

"The practicality to use it integrated on our communications channel." - Verified user review of incident.io

Your customers can also subscribe to status page updates via email, reducing "is this fixed?" tickets by keeping them informed without your team lifting a finger.

2. Define severity levels to route alerts to the right people

Not every incident needs to wake up the CEO. A P3 bug affecting internal tooling doesn't require the same communication blast as a P1 affecting all customers. Without clear severity definitions, you'll either over-communicate and cause alert fatigue, or under-communicate and blindside stakeholders with customer complaints.

Here's a standard framework based on incident severity best practices:

| Severity | Definition | Who gets notified | Update cadence |
|---|---|---|---|
| SEV1 | Most/all customers affected, revenue impact | On-call + execs + CS + public status page | Every 20-30 minutes |
| SEV2 | Significant impact, workaround available | On-call + stakeholders in Slack | Every 4 hours |
| SEV3 | Minor impact, no immediate action required | Team channel only | Business hours updates |

The key insight: A SEV1 means waking people up at 3 AM. It means the CEO might be getting calls from major customers. SEV2 incidents are serious but may not require waking everyone. SEV3 needs attention soon but can be handled during normal business hours.

We automate this routing with incident.io Workflows. You configure trigger-condition-action logic once: when severity equals SEV1, automatically invite the @exec-team user group to the channel and post a summary to #company-announcements. When severity equals SEV3, keep it contained to the team channel only. Watch how Intercom migrated their incident workflows to see severity-based routing in action.
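The trigger-condition-action logic above comes down to a lookup from severity to audience and cadence. Here's a minimal Python sketch under stated assumptions. It is not incident.io's Workflows configuration format. `RoutingRule`, `route`, and every group or channel name except `@exec-team` and `#company-announcements` are hypothetical. The 30-minute SEV1 cadence picks one end of the table's 20-30 minute range.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class RoutingRule:
    notify: tuple[str, ...]            # groups/channels to pull in
    update_every_minutes: int | None   # None = business-hours updates only
    public_status_page: bool


# Mirrors the severity table; names other than @exec-team and
# #company-announcements are hypothetical placeholders.
ROUTING = {
    "SEV1": RoutingRule(
        notify=("@on-call", "@exec-team", "@customer-success", "#company-announcements"),
        update_every_minutes=30,
        public_status_page=True,
    ),
    "SEV2": RoutingRule(
        notify=("@on-call", "#incident-updates"),
        update_every_minutes=240,
        public_status_page=False,
    ),
    "SEV3": RoutingRule(
        notify=("#team-channel",),
        update_every_minutes=None,
        public_status_page=False,
    ),
}


def route(severity: str) -> RoutingRule:
    """Return the notification rule for a severity, failing loudly on typos."""
    try:
        return ROUTING[severity.strip().upper()]
    except KeyError:
        raise ValueError(f"unknown severity: {severity!r}") from None
```

Raising on an unknown severity is deliberate. Silently defaulting to "notify nobody" (or "notify everyone") is exactly the failure mode severity levels exist to prevent.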

3. Establish a rigid update cadence and stick to it

During high-impact incidents, it's important to provide updates every 20-30 minutes until resolution, especially in early stages. Even when there's no significant progress to report, sending an update that acknowledges the ongoing issue maintains customer trust.

The "heartbeat" rule: Update every 30 minutes for SEV1 incidents, even if the update is "We're still investigating. No new information. Next update in 30 minutes." This sounds counterintuitive, but "no update" is still an update. It tells stakeholders you haven't forgotten about them and reduces the anxiety that triggers "is this fixed?" messages.

You can automate heartbeat reminders with incident.io scheduled nudges. Set a workflow that sends a Slack reminder to the incident commander every 30 minutes for SEV1 incidents: "Time for a status update. Use /inc update to post to the channel and status page simultaneously." This removes the mental burden of tracking time during a crisis.

For Customer Support teams specifically, predictable updates reduce their burden. Instead of fielding dozens of escalation requests ("Can you check on this?"), they can tell customers "Engineering is actively working on it and will provide the next update at 3:30 PM."
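The heartbeat rule is simple arithmetic on a fixed cadence. The sketch below uses hypothetical `heartbeat_times` and `heartbeat_message` helpers, not an incident.io feature. It computes when to nudge the incident commander and shows what a "no news" update might say, including a concrete next-update time that Customer Support can quote.

```python
from __future__ import annotations

from datetime import datetime, timedelta


def heartbeat_times(declared_at: datetime, resolved_at: datetime,
                    every: timedelta = timedelta(minutes=30)) -> list[datetime]:
    """Times at which the incident commander should be nudged for an update."""
    if every <= timedelta(0):
        raise ValueError("cadence must be positive")  # avoids an infinite loop
    times = []
    t = declared_at + every
    while t < resolved_at:  # no nudge needed once the incident is resolved
        times.append(t)
        t += every
    return times


def heartbeat_message(next_update_at: datetime) -> str:
    """A 'no news' update: still worth sending, because silence reads as neglect."""
    return ("We're still investigating. No new information. "
            f"Next update at {next_update_at:%H:%M}.")
```

For an incident declared at 14:00 and resolved at 15:45 with a 30-minute cadence, `heartbeat_times` yields nudges at 14:30, 15:00, and 15:30.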

"The slack integration makes it so easy to manage the incident, it's a breeze to have it and not having to worry about forgetting some step, there are tons of ways to customize the decisions and automate communication." - Verified user review of incident.io

4. Centralize internal updates in Slack to reduce context switching

Split-brain happens when one conversation runs in Zoom while another runs in Slack without synchronization. Critical context gets lost and responders waste time re-establishing what's already been discussed.

The best practice from crisis communication experts is a two-channel structure:

- #inc-[date]-[description]: The war room for technical responders actively troubleshooting

- #incident-updates: A broadcast channel for broader visibility where leadership and stakeholders can follow along without cluttering the technical discussion

Limit war room participation to people actively resolving the incident. Anyone else may ask questions about why the incident happened, which creates distractions and wastes valuable time. The broadcast channel satisfies stakeholder curiosity without interrupting engineers.

We automatically create dedicated incident channels with consistent naming conventions (like #inc-240109-api-latency) so every incident has a searchable, centralized record. Our Response for startups video demonstrates the channel structure in practice.
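The naming convention can be generated instead of typed by hand. Here's a minimal sketch that assumes a `yymmdd` date prefix, which is inferred from the `#inc-240109-api-latency` example. It also caps names at 80 characters, based on Slack's channel-name limit. `incident_channel_name` is a hypothetical helper, not an incident.io API.

```python
from __future__ import annotations

import re
from datetime import date


def incident_channel_name(started: date, description: str, max_len: int = 80) -> str:
    """Build a channel name like #inc-240109-api-latency."""
    # Lowercase, then collapse every run of non-alphanumerics into one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", description.lower()).strip("-")
    name = f"inc-{started:%y%m%d}-{slug}"
    # Truncate to the length cap without leaving a dangling hyphen.
    return "#" + name[:max_len].rstrip("-")
```

`incident_channel_name(date(2024, 1, 9), "API latency")` returns `#inc-240109-api-latency`. Because the date comes right after the shared `inc-` prefix, channels sort chronologically in search.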

5. Use AI to draft communications and post-mortems

In the middle of a P1, your brain is maxed out troubleshooting production. Writing a customer-friendly status update requires a mental gear shift that costs precious minutes and cognitive bandwidth.

AI tools now draft communications by reading your technical timeline and generating human-readable summaries. incident.io's AI automates time-intensive tasks like note-taking, live updates, and post-incident write-ups. The AI SRE assistant delivers 90%+ accuracy in autonomous investigation while suggesting deployment-ready remediation steps.

For post-mortems specifically, this transforms a 90-minute reconstruction exercise into a 15-minute editing task. The AI captures the timeline as the incident unfolds, recording who did what, when, which alerts correlated, and which fixes worked. Watch our Supercharged with AI video to see how this works in practice.
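The capture half of that workflow is ordinary structured logging. This sketch covers only that deterministic part, using hypothetical `TimelineEvent` and `draft_timeline_section` names. Events are recorded as they happen and then rendered in order as a draft timeline. The sketch does not attempt the AI summarization step, which would sit on top of data like this.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class TimelineEvent:
    at: datetime   # when it happened
    actor: str     # who (or which system) did it
    action: str    # what happened


def draft_timeline_section(events: list[TimelineEvent]) -> str:
    """Render captured events, oldest first, as a post-mortem timeline draft."""
    lines = ["Timeline (draft, verify before publishing):"]
    for ev in sorted(events, key=lambda item: item.at):
        lines.append(f"- {ev.at:%H:%M} {ev.actor}: {ev.action}")
    return "\n".join(lines)
```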

"Incident.io is very easy and intuitive to use, which greatly reduces communication time between teams, developers and external customers during an incident." - Verified user review of incident.io

Incident communication templates for SREs

Pre-written templates eliminate wordsmithing during a crisis. Decide on common language ahead of time, get it approved by leadership, and save it in your incident management tool.

Internal stakeholder update templates

Initial investigation:

Status: Investigating

Issue: [Brief description of the issue]

Impact: [Specific services affected and extent of impact]

Started: [Time incident began]

Next update: [Timeframe]

Root cause identified:

Status: In Progress

Issue: [Brief description]

Impact: [Current impact assessment]

Root Cause: [What we found]

Actions Taken: [What we're doing to resolve]

Next update expected by: [time]

Monitoring fix:

Status: Monitoring

Issue: [Brief description]

Resolution: [Actions taken]

Impact Duration: [Total time customers were affected]

Save these templates as custom incident fields in incident.io. When you create an incident, the template populates automatically based on severity. Edit the bracketed sections, then use /inc update to broadcast to your team channel and status page simultaneously.
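Whatever tool holds your templates, it helps to refuse to send any message that still contains a bracketed placeholder. Here's a minimal sketch built on the "Initial investigation" template above. `fill_template` is a hypothetical helper and does not describe how incident.io populates custom fields.

```python
from __future__ import annotations

import re

# The "Initial investigation" template from above, one field per line.
INITIAL_INVESTIGATION = """\
Status: Investigating
Issue: [Brief description of the issue]
Impact: [Specific services affected and extent of impact]
Started: [Time incident began]
Next update: [Timeframe]"""

PLACEHOLDER = re.compile(r"\[([^\[\]]+)\]")


def fill_template(template: str, values: dict[str, str]) -> str:
    """Replace [Placeholder] tokens; raise if any are left unfilled."""
    missing: list[str] = []

    def replace(match: re.Match[str]) -> str:
        key = match.group(1)
        if key not in values:
            missing.append(key)
            return match.group(0)  # keep the token; we raise below anyway
        return values[key]

    filled = PLACEHOLDER.sub(replace, template)
    if missing:
        raise ValueError(f"unfilled placeholders: {missing}")
    return filled
```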

External status page templates

External communications need a different tone. Keep them empathetic but vague enough to avoid liability or premature root cause claims.

Investigating:

We are investigating issues with [product/service] and will provide updates here soon.

Identified:

We have identified the issue affecting [product/service]. Our team is implementing a fix and we expect resolution within [timeframe].

Monitoring:

A fix has been implemented for the [product/service] issue. We are monitoring to ensure full recovery.

Resolved:

The issue affecting [product/service] has been resolved. Service has returned to normal operation. We apologize for any inconvenience caused.

For Vanta's team, using incident.io reduced hours spent on these manual processes by automating template population from incident data.
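The four external states form a one-way progression, so they are easy to encode. This sketch rests on two assumptions. First, the template text is copied from the external templates above, with `{service}` and `{timeframe}` standing in for the brackets. Second, `next_public_update` is a hypothetical helper that refuses to move the public status backwards.

```python
from __future__ import annotations

EXTERNAL_TEMPLATES = {
    "Investigating": "We are investigating issues with {service} and will provide updates here soon.",
    "Identified": ("We have identified the issue affecting {service}. Our team is implementing "
                   "a fix and we expect resolution within {timeframe}."),
    "Monitoring": ("A fix has been implemented for the {service} issue. "
                   "We are monitoring to ensure full recovery."),
    "Resolved": ("The issue affecting {service} has been resolved. Service has returned to "
                 "normal operation. We apologize for any inconvenience caused."),
}
# Dicts preserve insertion order, so this list is the allowed progression.
ORDER = list(EXTERNAL_TEMPLATES)


def next_public_update(current: str | None, new: str, **fields: str) -> str:
    """Render the public message for `new`, refusing to move the status backwards."""
    if new not in EXTERNAL_TEMPLATES:
        raise ValueError(f"unknown status: {new!r}")
    if current is not None and ORDER.index(new) < ORDER.index(current):
        raise ValueError(f"cannot move public status from {current} back to {new}")
    return EXTERNAL_TEMPLATES[new].format(**fields)
```

Real incidents sometimes regress. For example, a fix may not hold, sending you from Monitoring back to Identified. If you want to allow that, make it an explicit, logged exception rather than dropping the check.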

Top tools for automated incident communication: A comparison

Not all incident management platforms handle communication equally. Here's what matters for reducing communication toil: can you update your status page without leaving Slack? Does the tool draft messages for you? Does it generate post-mortems from captured timelines?

Table 1: Core communication features

| Platform | Slack-native workflow | Automated status pages | AI-drafted communications |
|---|---|---|---|
| incident.io | Yes, built-in | Yes, integrated | Yes, 90%+ accuracy |
| PagerDuty | App integration | Available | Event correlation (AIOps) |
| Atlassian Statuspage | No, standalone web UI | Yes, core product | No |

Table 2: Post-incident capabilities

| Platform | Post-mortem generation | Pricing model |
|---|---|---|
| incident.io | AI-drafted from timeline | $19-25/user/month (Team/Pro) |
| PagerDuty | Automated timeline building, manual analysis | Per user + add-ons |
| Atlassian Statuspage | Manual post-mortems | Per page/subscriber |

The architectural difference matters for your workflow. PagerDuty excels at alerting (we integrate with it), but coordination features were added later. Atlassian Statuspage requires you to leave Slack and open a web portal to update. We built incident.io as a Slack-native platform from day one, so status page updates happen automatically when you type /inc resolve in your incident channel.

"Creating a dedicated temporary channel to split the communication was a huge improvement to help us separate concerns instead of sounding the alarms in the wrong places." - Verified user review of incident.io

As one Trustpilot reviewer noted: "A company using Incident.io shows they are serious about using the best incident management tools to ensure the best user experience and maximize participation in incidents."

Pros and cons of using AI in incident communication

AI is transforming how teams handle incident communication, but it's not a silver bullet. Here's an honest assessment:

Pros:

- Speed: AI drafts updates in seconds vs. minutes of human wordsmithing

- Tone consistency: Messages maintain professional, empathetic tone even at 3 AM

- Reduced cognitive load: Engineers stay focused on troubleshooting, not copywriting

- Non-technical translation: AI summarizes complex logs for executives and customers who don't need the technical details

Cons:

- Hallucination risk: Research shows all major LLMs hallucinate, with rates ranging from 3% (GPT-4) to 27% in some systems. Always verify AI-generated content before sending externally.

- Lack of nuance: AI may miss sensitivity requirements for data breaches or compliance-related incidents

- Over-reliance: AI models don't recognize when they're hallucinating, so human review remains essential

The critical distinction: AI should assist (drafting) rather than act autonomously (sending without human review). For external communications, keeping a human in the loop protects your brand reputation and ensures accuracy. incident.io automates internal timeline capture and post-mortem drafts where the stakes of minor errors are lower.
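The assist-versus-act distinction can be enforced in code instead of being left to discipline. Here's a minimal sketch with a hypothetical `Draft` type and `can_send` gate. Internal drafts go straight through, while external drafts need a named human approver before they can be sent.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Draft:
    text: str
    audience: str                   # "internal" or "external"
    approved_by: str | None = None  # who reviewed it, if anyone


def can_send(draft: Draft) -> bool:
    """Internal drafts may go out directly; external ones need a human approver."""
    if draft.audience == "internal":
        return True
    if draft.audience == "external":
        return bool(draft.approved_by)  # None or "" both mean "not reviewed"
    raise ValueError(f"unknown audience: {draft.audience!r}")
```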

"A tool that simplifies documentation and management so that we can focus on solutions" - Verified user review of incident.io

Moving from chaos to clarity

Good incident communication buys your team time to fix the actual problem. When stakeholders know what's happening (even if "what's happening" is "we're still investigating"), they stop interrupting with status requests. When your status page updates automatically, you eliminate the guilt of forgetting. When AI drafts your messages, you stay focused on troubleshooting.

The pattern across all these best practices: Stop treating communication as a separate task that competes with incident resolution. Treat it as an automated output of your response workflow.

To see automated status pages and AI-drafted updates in action, book a demo to walk through severity-based routing with our team.

Key terms glossary

Toil: Manual, repetitive, and automatable work that scales linearly with service growth and provides no lasting value, such as manual status updates.

MTTR: Mean Time To Resolution, the average time from incident detection to full resolution.

AI SRE: An AI system that supports site reliability engineering tasks, including incident investigation, root cause analysis, and drafting internal or external communications.

Post-mortem: A document created after an incident that records the timeline, root cause, impact, and follow-up actions to prevent recurrence.

RCA: Root Cause Analysis, the process of identifying the underlying cause of an incident rather than just addressing symptoms.

Status page: A public or internal webpage used to display current service health and communicate ongoing incidents to stakeholders.

Severity level: A classification system (commonly SEV1–SEV3 or P1–P3) that indicates incident impact and determines response urgency and communication requirements.
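To make the MTTR definition concrete, here's a small worked sketch that averages detection-to-resolution durations. The `mttr` helper and the sample timestamps are illustrative assumptions, not real incident data.

```python
from __future__ import annotations

from datetime import datetime, timedelta


def mttr(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Mean Time To Resolution: average of (resolved - detected) over incidents."""
    if not incidents:
        raise ValueError("MTTR is undefined with zero incidents")
    total = sum((resolved - detected for detected, resolved in incidents), timedelta())
    return total / len(incidents)


incidents = [
    (datetime(2026, 1, 5, 10, 0), datetime(2026, 1, 5, 10, 30)),  # 30 minutes
    (datetime(2026, 1, 6, 9, 0), datetime(2026, 1, 6, 10, 30)),   # 90 minutes
]
print(mttr(incidents))  # prints 1:00:00
```

Two incidents lasting 30 and 90 minutes give an MTTR of 60 minutes.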
