Incident management ROI: How to calculate value and justify investment

Updated January 8, 2026

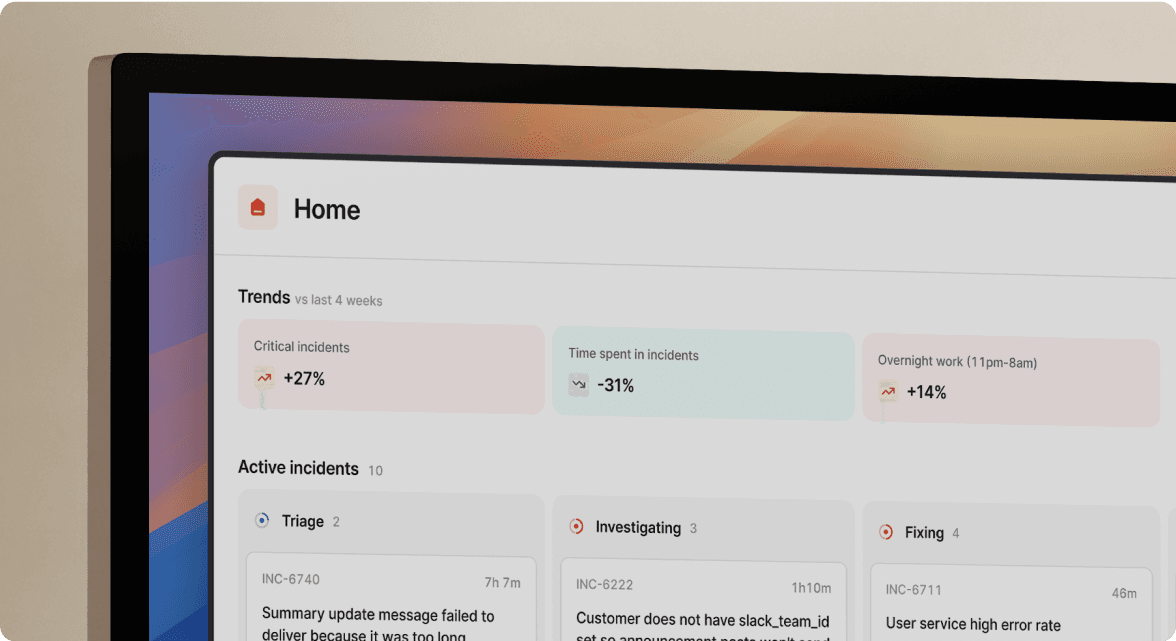

TL;DR: If you need to justify budget for a dedicated incident management platform, stop talking about "culture" and start talking about "efficiency." The ROI of incident.io comes from three sources: 1) Reducing downtime costs (by cutting MTTR 30-50%), 2) Reclaiming engineer hours (by automating the 90-minute post-mortem tax), and 3) Consolidating tool spend (replacing multiple tools with a unified platform). Use the framework and calculator below to build a business case that demonstrates strong ROI within 12 months.

Your CFO doesn't care about "reliability culture." They care about the engineering hours your team burns on coordination overhead while production is down. When you frame incident management as insurance against downtime, you lose the budget battle. When you frame it as an efficiency engine that reclaims thousands of engineering hours and consolidates wasted tool spend, you win.

This guide gives you the exact formulas, industry benchmarks, and an interactive calculator to prove that switching to a unified incident management platform pays for itself through reduced MTTR, reclaimed engineering hours, and tool consolidation. I've included real customer examples and sensitivity analysis so you can build a business case your finance team will actually approve.

Why "better reliability" isn't enough to unlock budget

Engineering teams and finance teams speak different languages. You talk about uptime percentages, MTTR trends, and blameless post-mortems. They talk about headcount costs, software spend, and ROI timelines. When you request budget for incident management tooling with "we need better reliability," you're asking finance to approve an expense they can't quantify.

The disconnect is real. Atlassian's research on downtime costs cites Gartner's widely quoted estimate that IT downtime costs an average of $5,600 per minute. But that number means nothing to your CFO if you can't show how your specific team loses money during incidents, and how a platform investment changes that equation.

The solution is reframing "reliability" as "efficiency." The manual, repetitive work surrounding incidents (what Google's SRE team calls "toil") is a financial liability. Toil is work tied to running a production service that tends to be manual, repetitive, automatable, tactical, devoid of enduring value, and that scales linearly as a service grows. Every minute your senior engineers spend manually creating Slack channels, hunting for who's on-call, or reconstructing post-mortems from memory is a minute they're not shipping features or improving system resilience.

The hidden costs of the status quo: toil, burnout, and tool sprawl

Before calculating ROI on a new platform, you need to understand what the status quo actually costs. Most engineering leaders dramatically underestimate these numbers because the costs hide in engineer time, not software invoices.

The assembly tax

When an alert fires, your team doesn't immediately start troubleshooting. They spend 10-15 minutes on coordination overhead: manually creating Slack channels, hunting through systems to find who's on-call, copying alert links, checking team wikis for service owners, and pinging people individually.

For a team handling 15 incidents per month with 6 responders per incident at a fully loaded hourly rate of $120-$180 (I recommend using $150 as a conservative default), that coordination overhead adds up quickly. Using $150/hour, 15 incidents, 6 responders, and 12 minutes of assembly time per incident, you get: (15 × 6 × 12 ÷ 60) × $150 = $2,700 per month, or $32,400 annually spent just assembling teams.
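If you want to rerun that arithmetic with your own numbers, here is a minimal Python sketch. The function name and parameters are illustrative choices of mine, not part of any incident.io tooling; the inputs are the ones from the example above.

```python
def assembly_tax(incidents_per_month, responders, assembly_minutes, hourly_rate):
    """Return (monthly_cost, annual_cost) of time spent assembling responders."""
    # Total responder-hours lost to coordination each month.
    hours_per_month = incidents_per_month * responders * assembly_minutes / 60
    monthly_cost = hours_per_month * hourly_rate
    return monthly_cost, monthly_cost * 12


monthly, annual = assembly_tax(
    incidents_per_month=15, responders=6, assembly_minutes=12, hourly_rate=150
)
print(f"${monthly:,.0f} per month, ${annual:,.0f} per year")
```

Swap in your own incident volume and responder count to see how quickly the figure moves.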

"Without incident.io our incident response culture would be caustic, and our process would be chaos. It empowers anybody to raise an incident and helps us quickly coordinate any response across technical, operational and support teams." - Verified user review of incident.io

The context-switching tax

During every incident, your team juggles five or more tools: PagerDuty for alerts, Slack for coordination, Datadog for metrics, Jira for tickets, Google Docs for notes, and Statuspage for customer communication. Each context switch costs cognitive load and time.

According to research on AI incident management platforms, traditional incident management relies heavily on manual processes for alert triage, root cause analysis, and reporting, which increase time-to-resolution and error rates. The typical breakdown for a 45-minute incident shows that a significant portion of time goes to coordination and context-gathering rather than actual troubleshooting.

The documentation tax

After resolving an incident, engineers often spend 60-90 minutes reconstructing the post-mortem from memory. They scroll through Slack threads, review Zoom recordings, and piece together timelines from half-remembered conversations. The result is an incomplete, probably inaccurate post-mortem, published 3-5 days after the incident, when nobody remembers the details.

For a team handling 15 incidents per month where 5 require detailed post-mortems, that represents significant senior engineering time dedicated to post-mortem archaeology. At $150/hour for 7.5 hours monthly (5 incidents × 90 minutes each), you spend $13,500 annually on documentation that could be automated.
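The same calculation is easy to run across the 60-90 minute range rather than only the upper bound. This is a rough sketch under the assumptions stated above (5 post-mortems per month, $150/hour); the function name is hypothetical.

```python
def documentation_tax(postmortems_per_month, minutes_each, hourly_rate):
    """Return (hours_per_month, annual_cost) for manually written post-mortems."""
    hours_per_month = postmortems_per_month * minutes_each / 60
    return hours_per_month, hours_per_month * hourly_rate * 12


# Compare the low and high ends of the 60-90 minute range.
for minutes in (60, 90):
    hours, annual = documentation_tax(5, minutes, 150)
    print(f"{minutes} min each: {hours} h/month, ${annual:,.0f}/year")
```

At 60 minutes per post-mortem the annual figure is $9,000; at 90 minutes it is the $13,500 quoted above.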

The ROI framework: three levers to quantify value

The total savings from a unified incident management platform come from three distinct sources:

Total Annual Savings = (Downtime Cost Avoidance) + (Labor Efficiency Gains) + (Tool Consolidation Savings)

Each lever has a specific formula you can plug your own numbers into. Let me break them down with real benchmarks and customer examples.

Lever 1: reducing downtime costs via MTTR reduction

The first and often largest ROI lever is reducing Mean Time To Resolution (MTTR). Faster resolution means less revenue lost, fewer customers impacted, and lower reputational damage.

The formula:

(Downtime Cost per Hour ÷ 60) × MTTR Improvement in Minutes × Annual Incident Volume = Annual Savings

Industry benchmarks for downtime costs:

The numbers vary dramatically by company size and industry:

| Company Size | Downtime Cost Range | Source |
|---|---|---|
| Small businesses | $137-$427 per minute | EnComputers 2024 |
| Mid-sized companies | $1,000-$5,000 per minute | Gatling analysis |
| Large enterprises | $5,600+ per minute | Atlassian/Gartner |

A practical formula for SaaS companies: calculate your downtime cost as (Annual Revenue ÷ 8,760 hours) × percentage of customers affected.

Example: For a $40M ARR SaaS company where checkout downtime affects 80% of transaction volume: ($40M ÷ 8,760 hours) × 0.80 = approximately $3,653 per hour. Because this spreads revenue evenly across the year, treat it as an average; an outage at peak traffic costs more.
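As a quick sketch of that formula in Python (the function name is illustrative, and the even spread of revenue across all 8,760 hours of the year is the simplifying assumption the formula itself makes):

```python
HOURS_PER_YEAR = 8_760  # 365 days x 24 hours


def downtime_cost_per_hour(annual_revenue, share_affected):
    """Average revenue at risk per hour of downtime."""
    return annual_revenue / HOURS_PER_YEAR * share_affected


cost = downtime_cost_per_hour(annual_revenue=40_000_000, share_affected=0.80)
print(f"${cost:,.0f} per hour")  # $3,653 per hour
```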

MTTR reduction benchmarks:

We've seen customers reduce MTTR by 30-50% consistently, with up to 80% reduction in optimal deployments using our Slack-native workflow and AI SRE capabilities. Specific customer results:

- Favor reduced MTTR by 37% largely by eliminating manual coordination overhead

- Buffer saw a 70% reduction in critical incidents

- Multiple deployments using AI-powered platforms report 30-40% drops in MTTR

Example calculation:

Take a $40M ARR SaaS company with $3,653/hour downtime cost. They reduce P1 MTTR from 48 minutes to 30 minutes (37.5% improvement). For 15 monthly incidents:

- MTTR savings: 18 minutes per incident

- Per-minute cost: $3,653 ÷ 60 = $60.88 per minute

- Monthly savings: 18 × $60.88 × 15 = $16,438

- Annual savings: approximately $197,000
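The steps above can be sketched in a few lines of Python so you can substitute your own MTTR and incident volume. The function and parameter names are mine. Note that the code keeps the per-minute cost unrounded, so its monthly figure differs from the hand calculation by about a dollar.

```python
def mttr_savings(downtime_cost_per_hour, mttr_before_min, mttr_after_min,
                 incidents_per_month):
    """Return (monthly_savings, annual_savings) from a lower MTTR."""
    minutes_saved = mttr_before_min - mttr_after_min
    cost_per_minute = downtime_cost_per_hour / 60
    monthly = minutes_saved * cost_per_minute * incidents_per_month
    return monthly, monthly * 12


monthly, annual = mttr_savings(
    downtime_cost_per_hour=3_653,
    mttr_before_min=48,
    mttr_after_min=30,
    incidents_per_month=15,
)
print(f"Monthly: ${monthly:,.2f}, annual: ${annual:,.2f}")
```

The annual result comes out a little above $197,000, matching the rounded figure above.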

Watch our full platform walkthrough to see how Slack-native workflows eliminate the coordination overhead that inflates MTTR. The key is that incident response happens at conversation speed because teams work where they already communicate.

Lever 2: reclaiming engineering hours with AI and automation

The second lever is labor efficiency. Every hour your engineers spend on manual, repetitive incident work is an hour they're not building features or improving reliability.

The formula:

(Hours Saved per Incident × Average Engineer Fully-Loaded Hourly Cost) × Annual Incident Volume = Annual Savings

Where time gets saved:

| Task | Time Before | Time After | Savings |
|---|---|---|---|
| Post-mortem generation | 60-90 minutes | 15-20 minutes review | 45-70 minutes |
| Investigation/correlation | 15+ minutes | Under 1 minute | 14+ minutes |
| Team assembly/coordination | 12-15 minutes | Under 2 minutes | 10-13 minutes |

- Post-mortem generation: Engineers traditionally spend 60-90 minutes after major incidents scrolling through Slack threads, reviewing recordings, and piecing together timelines. AI-drafted post-mortems from captured timeline data reduce this to 15-20 minutes of review and editing.

- Investigation and correlation: Instead of context-switching between Datadog, GitHub, and Slack to correlate a deployment with an error spike, AI surfaces correlations quickly. Our AI SRE connects the dots between code changes, alerts, and past incidents.

- Coordination overhead: Automated channel creation, on-call paging, and role assignment eliminate manual team assembly. See how WorkOS transformed its incident response by automating these workflows.

"I'm new to incident.io since starting on a new job, after many years using Atlassian's Statuspage and PagerDuty. Three things that I believe are done very well in incident.io: integration with other apps and platforms, holistic approach to incident alerting and notifications, and customer/technical support. It's on a very different level (much better) from other vendors." - Verified user review of incident.io

Example calculation:

Let's calculate savings for a team handling 180 annual incidents where 60 require detailed post-mortems:

| Savings Category | Calculation | Hours Saved |
|---|---|---|
| Coordination savings | 12 minutes × 180 incidents | 36 hours |
| Post-mortem savings | 55 minutes × 60 incidents | 55 hours |
| Investigation savings | 12 minutes × 180 incidents | 36 hours |
| Total | | 127 hours |

At $150 fully loaded cost: approximately $19,000 annual savings
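Here is a small Python sketch of the Lever 2 calculation, using the minutes-saved and incident counts from the example. The dictionary layout and variable names are illustrative assumptions; adjust the per-incident minutes to match what your team actually observes.

```python
HOURLY_RATE = 150  # fully loaded cost per engineer-hour

# category -> (minutes saved per incident, incidents per year)
savings_inputs = {
    "coordination": (12, 180),
    "post_mortem": (55, 60),
    "investigation": (12, 180),
}

# Convert each category to hours saved per year.
hours_saved = {
    name: minutes * incidents / 60
    for name, (minutes, incidents) in savings_inputs.items()
}
total_hours = sum(hours_saved.values())
annual_savings = total_hours * HOURLY_RATE

print(hours_saved)
print(f"{total_hours:.0f} hours, ${annual_savings:,.0f} per year")
```

This gives 127 hours and $19,050, which the summary rounds to approximately $19,000.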

See how Vanta reduced hours spent on manual processes and how Bud Financial improved their incident response processes by implementing automated workflows.

Lever 3: hard cost savings through tool consolidation

The third lever is straightforward: replacing multiple tools with a unified platform reduces software spend and integration maintenance. I'm using a 100-person engineering team as the baseline because that's the typical size where tool sprawl costs become impossible to ignore.

The typical "Franken-stack" cost breakdown:

| Tool | Estimated Annual Cost | Purpose |
|---|---|---|
| PagerDuty Professional | $25,200 (100 users × $21/month) | Alerting/On-call |
| Statuspage.io Business | $4,788 ($399/month) | Customer communication |
| Documentation tools (portion) | $2,000-$4,000 | Post-mortem storage |
| Custom integration maintenance | $15,000+ (8-10 engineer-hours/month) | Integration glue |
| Total Range | $47,000-$49,000 | |

Note: PagerDuty pricing based on Professional plan at $21/user/month annual billing. Statuspage pricing from Atlassian's official pricing.

incident.io unified platform cost:

Our Pro plan costs $54,000 annually for 100 users ($45/user/month with on-call) and includes everything: on-call scheduling, incident response, status pages, AI-drafted post-mortems, and unlimited workflows. No add-ons required.

The pricing comparison between incident.io, PagerDuty, and Opsgenie shows the real cost differences:

- Status pages: Included in all paid plans

- AI-drafted post-mortems: Included in Pro plan

- Automatic documentation of all incident actions, Slack messages, and role assignments

- No separate note-taking tool required

Calculating your consolidation savings:

For some teams, incident.io Pro may cost slightly more than their current stack on raw software spend. However, you eliminate integration maintenance costs and gain unified functionality. The real savings come from Levers 1 and 2 (MTTR reduction and engineering hours). Tool consolidation is often about workflow efficiency and reduced cognitive load rather than direct cost reduction.
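To see where your own stack lands, the comparison can be sketched as below. The line items and prices are the estimates from the table above for a 100-seat team; the variable names are mine, and the integration maintenance figure is treated as a floor because the table lists it as "$15,000+".

```python
SEATS = 100

# Annual cost of each line item as (low, high) estimates in dollars.
current_stack = {
    "PagerDuty Professional": (SEATS * 21 * 12, SEATS * 21 * 12),
    "Statuspage Business": (399 * 12, 399 * 12),
    "Documentation tools (portion)": (2_000, 4_000),
    "Integration maintenance": (15_000, 15_000),  # listed as "$15,000+", so a floor
}

stack_low = sum(low for low, _ in current_stack.values())
stack_high = sum(high for _, high in current_stack.values())
incident_io_pro = SEATS * 45 * 12  # $45/user/month with on-call

print(f"Current stack: ${stack_low:,} to ${stack_high:,}")
print(f"incident.io Pro: ${incident_io_pro:,}")
print(f"Difference: ${incident_io_pro - stack_high:,} to ${incident_io_pro - stack_low:,}")
```

With these estimates the Pro plan comes out roughly $5,000 to $7,000 above the current stack per year, which is why the case rests mainly on Levers 1 and 2.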

See the Intercom migration story for how they moved from PagerDuty and Atlassian Status Page to incident.io in a matter of weeks.

"Incident.io stands out as a valuable tool for automating incident management and communication, with its effective Slack bot integration leading the way. The platform's compatibility with multiple external tools, such as Ops Genie, makes it an excellent central hub for managing incidents." - Verified user review of incident.io

Interactive ROI calculator: input your team's data

I've created an Excel template that automates these calculations. Download it, plug in your numbers, and generate a finance-ready ROI summary.

Download the ROI Calculator: Get the Excel template by booking a demo, or contact us for direct access. The template includes pre-built formulas for all three ROI levers, sensitivity analysis, and a finance-ready summary page.

Input variables you'll need:

- Team size: Number of engineers with incident.io seats

- Average fully-loaded hourly rate: $120-$180 is typical for SRE/DevOps. Use $150 as a conservative default, based on a 1.25x-1.4x multiplier on base salary

- Monthly incident volume: Total incidents across all severity levels

- Current MTTR by severity: P1, P2, P3 average resolution times

- Annual revenue: For calculating downtime cost

- Current tool spend: PagerDuty, Statuspage, etc.
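If you don't have a fully-loaded hourly rate to hand, you can approximate one from base salary. The sketch below applies the 1.25x-1.4x multiplier mentioned in the list above. Two things in it are my assumptions rather than figures from this guide: the 2,080 working hours per year (40 hours × 52 weeks) and the $220,000 base salary, which is purely hypothetical.

```python
WORK_HOURS_PER_YEAR = 2_080  # assumption: 40 hours x 52 weeks


def fully_loaded_hourly_rate(base_salary, multiplier):
    """Approximate fully-loaded hourly cost from annual base salary."""
    return base_salary * multiplier / WORK_HOURS_PER_YEAR


base_salary = 220_000  # hypothetical figure, replace with your own
low = fully_loaded_hourly_rate(base_salary, 1.25)
high = fully_loaded_hourly_rate(base_salary, 1.40)
print(f"${low:,.2f} to ${high:,.2f} per hour")
```

Ask finance for the multiplier they actually use; it is the number they will trust.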

Sample calculation for a 100-person engineering org using incident.io Pro

| Input | Value |
|---|---|
| Team size | 100 engineers |
| Fully-loaded hourly rate | $150 |
| Monthly incidents | 15 (180 per year) |
| Current P1 MTTR | 48 minutes |
| Annual revenue | $40M |
| Current tool spend | $47,000/year |

| ROI Lever | Annual Savings (Moderate Scenario) |
|---|---|
| MTTR reduction (37.5% improvement) | $197,000 |
| Engineering hours reclaimed (127 hours) | $19,000 |
| Tool consolidation/efficiency | Varies by current stack |
| Estimated Total Annual Benefit | $216,000+ |
| incident.io Pro cost (all-inclusive) | $54,000 |
| Net Annual Benefit | $162,000+ |
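Finance teams often ask for an ROI percentage and a payback period as well as the net figure. Neither appears in the table above, so treat the following as a derived sketch: it uses the moderate-scenario numbers and assumes benefits accrue evenly through the year. The function name is illustrative.

```python
def roi_summary(annual_benefit, annual_cost):
    """Return (net_benefit, roi_percent, payback_months)."""
    net_benefit = annual_benefit - annual_cost
    roi_percent = net_benefit / annual_cost * 100
    # Assumes benefits arrive evenly across the 12 months.
    payback_months = annual_cost / (annual_benefit / 12)
    return net_benefit, roi_percent, payback_months


net, roi_pct, payback = roi_summary(
    annual_benefit=197_000 + 19_000,  # Lever 1 + Lever 2, moderate scenario
    annual_cost=54_000,               # incident.io Pro for 100 users
)
print(f"Net: ${net:,.0f}, ROI: {roi_pct:.0f}%, payback: {payback:.1f} months")
```

Under these assumptions the net benefit is $162,000, which is a 300% return with payback in about three months.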

Sensitivity analysis

The calculation above uses moderate assumptions. Here's the range based on conservative vs. optimistic scenarios:

| Scenario | MTTR Reduction | Hours Saved | Estimated Net Benefit |
|---|---|---|---|
| Conservative | 25% | 80 hours | $90,000-$110,000 |
| Moderate | 37.5% | 127 hours | $160,000-$180,000 |
| Optimistic | 50% | 175 hours | $230,000-$260,000 |

Even the conservative scenario delivers strong positive ROI, meaning the platform pays for itself within the first year. Your actual results will depend on incident frequency, current MTTR, and downtime costs specific to your business.
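You can reproduce point estimates for these scenarios with a short script. This is a sketch, not the Excel template: it assumes the same inputs used earlier ($3,653/hour downtime cost, a 48-minute baseline P1 MTTR, 180 incidents per year, $150/hour, and $54,000 platform cost), and the constant and function names are mine.

```python
DOWNTIME_COST_PER_HOUR = 3_653  # from the $40M ARR example
BASELINE_P1_MTTR_MIN = 48
ANNUAL_INCIDENTS = 180          # 15 per month
HOURLY_RATE = 150
PLATFORM_COST = 54_000

# scenario -> (fractional MTTR reduction, engineering hours saved per year)
SCENARIOS = {
    "Conservative": (0.25, 80),
    "Moderate": (0.375, 127),
    "Optimistic": (0.50, 175),
}


def net_benefit(mttr_reduction, hours_saved):
    """Downtime savings plus labor savings, minus platform cost."""
    minutes_saved = BASELINE_P1_MTTR_MIN * mttr_reduction
    downtime_savings = DOWNTIME_COST_PER_HOUR / 60 * minutes_saved * ANNUAL_INCIDENTS
    labor_savings = hours_saved * HOURLY_RATE
    return downtime_savings + labor_savings - PLATFORM_COST


for name, (reduction, hours) in SCENARIOS.items():
    print(f"{name}: ${net_benefit(reduction, hours):,.0f}")
```

The point estimates come out at roughly $89,500, $162,300, and $235,300. The moderate and optimistic figures sit inside the table's ranges, and the conservative one lands just under its rounded $90,000 floor.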

Building the business case: a slide-by-slide guide

Once you have your ROI numbers, you need to package them for executive approval. Here's a proven four-slide structure:

Slide 1: The problem (current waste and risk)

Lead with the financial impact of the status quo:

- Coordination overhead: X hours/month × $150 = $Y annually

- Post-mortem tax: X hours/month × $150 = $Y annually

- Tool sprawl: $X annual spend on fragmented stack

- Risk: Current MTTR of X minutes costs $Y per incident in potential downtime

Slide 2: The solution (unified platform + AI)

Present incident.io as an efficiency investment, not an expense:

- Slack-native architecture eliminates context switching

- AI SRE automates investigation and post-mortem generation

- Unified platform consolidates multiple point solutions

- You can install in 30 seconds, making the platform operational in days, not months

Slide 3: The financials (your ROI numbers)

Show the three-lever breakdown from your calculator:

- Lever 1: Downtime cost avoidance = $X

- Lever 2: Engineering hours reclaimed = $X

- Lever 3: Tool consolidation/efficiency = $X

- Net benefit within 12 months

Slide 4: The implementation (time to value)

Address the "how long until we see results" question:

- Weeks 1-2: Pilot with 5-10 engineers, run real incidents

- Weeks 3-4: Expand to full team, measure first MTTR improvements

- Weeks 5-8: Full deployment, integrate with full tool stack

- Month 3: First quarterly review with complete ROI data

"After a lengthy vetting process with multiple incident management tools, we decided to purchase incident.io for managing incidents. One key factor in this decision was the ease of use -- it was so extremely simple to set up, adjust on the fly, add or subtract features, it made a real difference compared to other software." - Verified user review of incident.io

Watch how WorkOS gained confidence to declare more incidents and see the hands-on introduction to incident.io Catalog for automated incident workflows.

Pros and cons of AI in incident management

I want to address the "AI hype" objection directly. Your finance team (and your engineers) are right to be skeptical of vendors throwing "AI-powered" onto everything. Here's an honest assessment of what AI actually delivers and where it falls short.

What AI does well

- Automated timeline capture: AI captures every Slack message, command, and decision during an incident without a dedicated note-taker. This eliminates the 60-90 minutes typically spent reconstructing post-mortems.

- Pattern recognition: AI correlates recent deployments with error spikes much faster than manual investigation. Our AI SRE surfaces these connections automatically.

- Documentation generation: AI drafts post-mortems from captured data that engineers can review and refine in 15-20 minutes rather than writing from scratch.

- Consistent execution: AI doesn't forget steps, get tired, or lose context. It applies the same rigor to incident #200 as incident #1.

What AI cannot do

- Understand unique business context: AI needs training data. For truly novel failure modes or company-specific edge cases, human judgment remains essential.

- Make trade-off decisions: "Should we roll back and lose the last hour of orders, or push forward with a hotfix?" That's a human call.

- Replace engineering expertise: AI assists with toil elimination. It doesn't replace the senior engineer who understands your system architecture.

- Guarantee accuracy: AI suggestions require human review. We recommend a "human-in-the-loop" approach where AI accelerates investigation but engineers validate conclusions.

The bottom line

True AI incident management platforms act as an "AI SRE" teammate that investigates issues, identifies root causes, and suggests fixes. Generic AI features just summarize chat logs. The distinction matters because one saves you minutes of reading while the other saves hours of investigation. Our AI handles the "robot work" so humans can do the "thinking work."

"I would really highlight the customer-centricity of the team working in incident.io. Feedback is always heard and has lead to product improvements - with a very short delivery cycle. The quality on the execution on the idea is usually quite polished and has a developer-friendly orientation." - Verified user review of incident.io

Moving from cost center to competitive advantage

Incident management is not insurance. Insurance protects against unlikely events. Incidents happen every week. The question isn't whether you'll have incidents. The question is whether you'll spend 48 minutes resolving them or 30 minutes. Whether you'll waste 90 minutes reconstructing post-mortems or 15 minutes reviewing AI drafts. Whether you'll pay for multiple tools that don't talk to each other or one platform that handles the full lifecycle.

The math works because you're not buying a tool. You're buying back engineering time, reducing downtime costs, and eliminating the coordination tax that makes incidents worse than they need to be.

I want to help you calculate your specific ROI. Book a demo and we'll walk through the numbers together with your team, and run your first incident in Slack to see how it feels.

Key terminology glossary

AI SRE: Artificial Intelligence features designed to automate Site Reliability Engineering tasks like timeline creation, root cause correlation, and fix suggestions. Unlike generic "AI features" that summarize chat logs, true AI SRE systems investigate issues and generate environment-specific recommendations.

MTTR (Mean Time To Resolution): The average time from when an incident is detected to when it is fully resolved. High-performing teams often target under 30 minutes for P1 incidents.

Toil: Manual, repetitive, automatable, tactical work devoid of enduring value (as defined by Google's SRE team). Examples include manually copying Slack messages to documentation, manually creating incident channels, and manually updating status pages.

RCA (Root Cause Analysis): The process of identifying the fundamental cause of an incident. AI can accelerate RCA by correlating alerts with recent changes, but human validation remains essential for complex failures.

Fully Loaded Cost: The true cost of an employee including salary, benefits, payroll taxes, equipment, and overhead allocation. Typically 1.25x to 1.4x base salary.
