Is your incident communication hurting your MTTR? (Templates to fix it)

Updated December 31, 2025

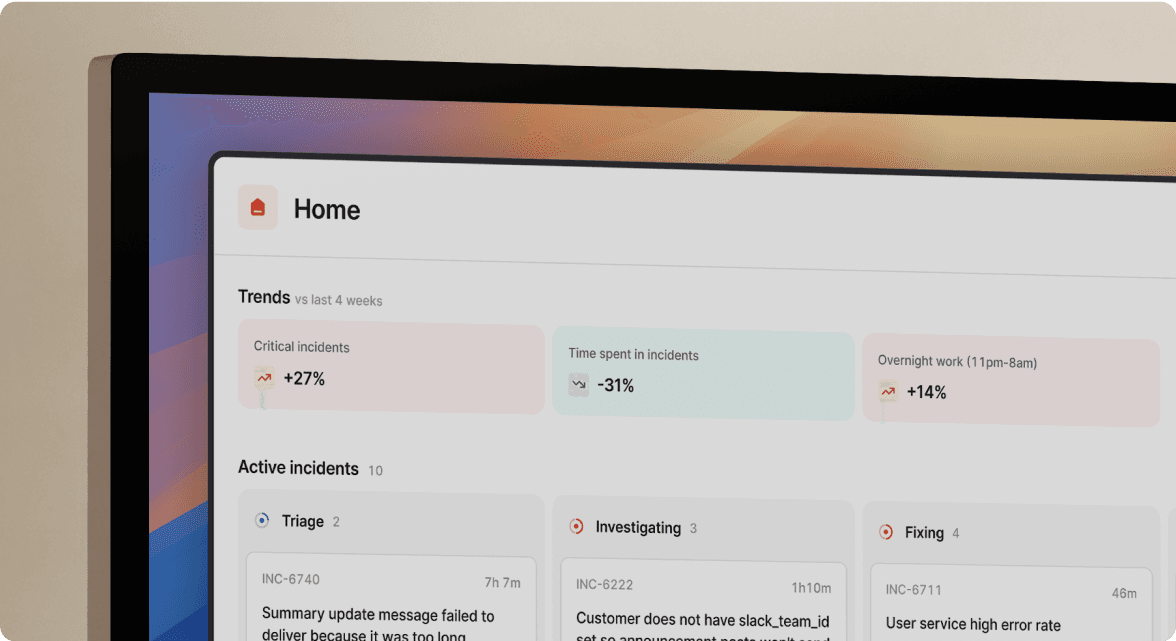

TL;DR: Manual incident communication creates bottlenecks that extend MTTR by forcing engineers to context-switch between fixing problems and updating stakeholders. The solution is not writing better updates manually. Automate the flow of information across internal teams, executives, customers, and status pages so responders focus on resolution. Use the four templates below for immediate improvement, or automate the entire communication layer with incident.io to reduce MTTR by up to 80% without adding headcount.

Incident MTTR templates help engineering teams standardize communication during outages, but manual coordination overhead still adds significant delays even with the best templates in place.

What slows down incident response?

Coordination overhead adds 12-15 minutes before technical troubleshooting even begins. For a 100-person engineering team handling 20 incidents monthly, that's $11,400 in annual waste at $190 loaded hourly cost. When your incident commander tabs out of Slack to update Jira, then switches to their status page tool to post a customer message, then opens email to brief executives, you destroy 15 minutes of focus during the most critical response window.

When your monitoring tool (Datadog) lives separately from your alerting system (PagerDuty), coordination hub (Slack), ticketing system (Jira), and customer communication channel (Statuspage), every update requires manual synchronization across disconnected systems.

Who pays the price for manual coordination?

Engineering teams bear the immediate burden. The incident commander must mentally toggle between "What's broken?" and "Who needs to know what?" during the most critical response window. Support teams field unnecessary tickets when status updates lag behind actual resolution. Other engineers who could contribute to the fix aren't effectively mobilized because communication breakdowns create a fog-of-war effect where coordination overhead eclipses the technical fix.

The root cause isn't poor writing skills on your engineering team. You should not treat communication as a manual task. Treat it as an automated system instead.

Why does communication overhead extend MTTR?

Context switching between communication tools and actual problem-solving carries a cognitive penalty: returning to an interrupted task takes 23 minutes and 15 seconds on average. During incidents, that cognitive tax directly extends your MTTR because responders mentally toggle between fixing problems and updating stakeholders.

The Google SRE Book documents this failure mode clearly: "Mary was far too busy to communicate clearly. Nobody knew what actions their coworkers were taking. Business leaders were angry, customers were frustrated, and other engineers who could have lent a hand weren't used effectively." Communication breakdowns during incidents create a fog-of-war effect where coordination overhead eclipses the technical fix.

4 communication templates every engineering team needs

Here are four templates you can use immediately to standardize your incident communication. Copy and adapt them to your context. Later sections show how to automate these patterns entirely.

1. The internal technical update (Slack/Teams)

Use this format in your incident channel for technical team members actively responding.

Template:

**Status:** [Investigating / Identified / Monitoring / Resolved]

**Current impact:** [Specific performance metrics]

**What we know:** [Technical details: bottlenecks, error rates, affected services]

**What we're doing:** [Current actions with owners]

**Blockers:** [Dependencies or unknowns]

**Next update:** [Timestamp]

Example:

**Status:** Identified

**Current impact:** API response times at 5000ms (baseline: 200ms), 15% error rate

**What we know:** Database connection pool exhausted (maxed at 100 connections), recent deploy #4872 introduced connection leak

**What we're doing:** @sarah rolling back deploy #4872 (ETA 8 minutes), @mike scaling connection pool to 200 as temporary mitigation

**Blockers:** None currently

**Next update:** 3:15 AM UTC

Every internal update should include current status, impact scope, next actions, owners, and timeline. Use technical language appropriate for engineering audiences; this isn't where you sanitize jargon.
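If you post these updates from a bot or script, a small renderer keeps the field order fixed and refuses to publish an update with an empty field. The sketch below is a minimal, hypothetical example: the `InternalUpdate` class and `Status` enum are illustrative names, not part of any product API, and the output uses Slack's single-asterisk `*bold*` markup.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    # The four status values from the internal update template.
    INVESTIGATING = "Investigating"
    IDENTIFIED = "Identified"
    MONITORING = "Monitoring"
    RESOLVED = "Resolved"


@dataclass
class InternalUpdate:
    status: Status
    current_impact: str
    what_we_know: str
    what_were_doing: str
    next_update: str
    blockers: str = "None currently"

    def render(self) -> str:
        """Render the update in the template's field order; reject empty fields."""
        fields = [
            ("Status", self.status.value),
            ("Current impact", self.current_impact),
            ("What we know", self.what_we_know),
            ("What we're doing", self.what_were_doing),
            ("Blockers", self.blockers),
            ("Next update", self.next_update),
        ]
        missing = [label for label, value in fields if not value.strip()]
        if missing:
            raise ValueError(f"missing required fields: {', '.join(missing)}")
        return "\n".join(f"*{label}:* {value}" for label, value in fields)


if __name__ == "__main__":
    update = InternalUpdate(
        status=Status.IDENTIFIED,
        current_impact="API response times at 5000ms (baseline: 200ms), 15% error rate",
        what_we_know="Database connection pool exhausted; deploy #4872 introduced a connection leak",
        what_were_doing="@sarah rolling back deploy #4872 (ETA 8 minutes)",
        next_update="3:15 AM UTC",
    )
    print(update.render())
```

Failing loudly on an empty field is a deliberate choice: a half-filled update posted at 3 AM is usually worse than a short delay.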

2. The executive briefing (Email/Slack)

Executives need business impact, not technical implementation details. Send this within 30 minutes of detecting a severity-1 incident.

Template:

**Incident:** [One-line business description]

**Customer impact:** [Number/percentage affected, revenue implications]

**Business risk:** [Current: Low/Medium/High] [Trending: Stable/Increasing/Decreasing]

**Resolution ETA:** [Timestamp or "Unknown - next update at [time]"]

**What we're doing:** [High-level action without technical jargon]

**Your action needed:** [Usually "None - team has resources" or specific approval requests]

Example:

**Incident:** Checkout unavailable for US customers

**Customer impact:** 100% of US checkout attempts failing since 2:47 AM UTC (~2,000 customers/hour attempting), estimated $15K revenue impact if unresolved for 1 hour

**Business risk:** High, trending: Decreasing (fix identified)

**Resolution ETA:** 3:15 AM UTC (28 minutes from now)

**What we're doing:** Rolling back recent code change that caused database overload, implementing connection pool fix

**Your action needed:** None - engineering team has resources and clear path to resolution

Separate technical details (for engineering) from business impact (for executives). Executives care about customer effect, revenue implications, and whether they need to escalate or provide resources.
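The 30-minute rule for severity-1 briefings is easy to check automatically. A minimal sketch, assuming timezone-aware UTC timestamps and a numeric severity where 1 is the most severe; the function names are hypothetical, and lower severities are left to judgment because the guidance above only covers severity 1.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# From the guidance above: brief executives within 30 minutes of detecting a severity-1 incident.
EXEC_BRIEFING_WINDOW = timedelta(minutes=30)


def executive_briefing_deadline(detected_at: datetime, severity: int) -> Optional[datetime]:
    """Return when the first executive briefing is due, or None if the rule does not apply.

    Assumes severity 1 is the most severe level; other severities return None.
    """
    if detected_at.tzinfo is None:
        raise ValueError("detected_at must be timezone-aware (UTC recommended)")
    if severity != 1:
        return None
    return detected_at + EXEC_BRIEFING_WINDOW


def briefing_overdue(detected_at: datetime, severity: int, now: datetime, briefed: bool) -> bool:
    """True if a required briefing has not been sent and its deadline has passed."""
    deadline = executive_briefing_deadline(detected_at, severity)
    return deadline is not None and not briefed and now > deadline


if __name__ == "__main__":
    detected = datetime(2025, 1, 1, 2, 47, tzinfo=timezone.utc)  # placeholder date
    print(executive_briefing_deadline(detected, severity=1))  # due at 03:17 UTC
```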

3. The customer-facing status update (Status page)

Balance your status page messages carefully: acknowledge the problem, explain impact in user terms, avoid technical jargon.

Investigating template:

We're currently investigating reports of [user-visible symptom] affecting [impacted services]. We apologize for any inconvenience and will post another update within [30 minutes / 1 hour] as we learn more.

Identified template:

We've identified the cause of [user-visible symptom] affecting [impacted services]. Our team is actively working on a fix. Estimated resolution time: [timestamp]. Next update: [timestamp].

Resolved template:

This incident has been resolved as of [timestamp]. [Service name] is now operating normally. We apologize for the disruption and are conducting a full review to prevent recurrence.
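Keeping the three templates as data, rather than retyping them under pressure, makes it hard to publish a message with a leftover placeholder. This sketch uses Python's standard `string.Template`, whose `substitute()` raises `KeyError` when a placeholder is missing; the placeholder names (`$symptom`, `$services`, and so on) are assumptions mapped from the bracketed fields above.

```python
from string import Template

# The three customer-facing templates above; $placeholders replace the bracketed fields.
CUSTOMER_TEMPLATES = {
    "investigating": Template(
        "We're currently investigating reports of $symptom affecting $services. "
        "We apologize for any inconvenience and will post another update within "
        "$update_window as we learn more."
    ),
    "identified": Template(
        "We've identified the cause of $symptom affecting $services. "
        "Our team is actively working on a fix. "
        "Estimated resolution time: $eta. Next update: $next_update."
    ),
    "resolved": Template(
        "This incident has been resolved as of $resolved_at. "
        "$service_name is now operating normally. We apologize for the disruption "
        "and are conducting a full review to prevent recurrence."
    ),
}


def render_customer_update(stage: str, **fields: str) -> str:
    """Fill the template for a stage; substitute() raises KeyError on a missing field."""
    return CUSTOMER_TEMPLATES[stage].substitute(**fields)


if __name__ == "__main__":
    print(render_customer_update(
        "investigating",
        symptom="checkout errors",
        services="US customers",
        update_window="30 minutes",
    ))
```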

Example sequence:

2:55 AM (8 minutes after detection):

We're currently investigating reports of checkout errors affecting US customers. We apologize for any inconvenience and will post another update within 30 minutes as we learn more.

3:05 AM (18 minutes after detection):

We've identified the cause of checkout errors affecting US customers. Our team is actively implementing a fix. Estimated resolution time: 3:15 AM UTC. Next update: 3:20 AM UTC.

3:18 AM (31 minutes after detection):

This incident has been resolved as of 3:15 AM UTC. Checkout is now operating normally for all customers. We apologize for the disruption and are conducting a full review to prevent recurrence.

Update status pages every 15-20 minutes for active customer-impacting incidents, and never exceed one hour without an update. The cardinal rule: tell customers what they can do (workarounds, alternatives) and when to expect resolution, not the technical root cause.
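The cadence rule translates directly into a staleness check you could run on a timer against the latest public update. A minimal sketch, assuming timezone-aware timestamps; it treats the 20-minute upper end of the 15-20 minute range as the target and anything over one hour as a breach. The function name and the three return labels are illustrative.

```python
from datetime import datetime, timedelta, timezone

TARGET_CADENCE = timedelta(minutes=20)  # upper end of the 15-20 minute guidance
HARD_LIMIT = timedelta(hours=1)         # never go more than an hour without an update


def status_page_update_state(last_update: datetime, now: datetime) -> str:
    """Classify the latest public update for an active, customer-impacting incident.

    Returns "ok" (within cadence), "due" (post now), or "breach" (over one hour of silence).
    """
    elapsed = now - last_update
    if elapsed < timedelta(0):
        raise ValueError("now is earlier than last_update")
    if elapsed > HARD_LIMIT:
        return "breach"
    if elapsed >= TARGET_CADENCE:
        return "due"
    return "ok"


if __name__ == "__main__":
    posted = datetime(2025, 1, 1, 2, 55, tzinfo=timezone.utc)  # placeholder date
    print(status_page_update_state(posted, posted + timedelta(minutes=10)))  # ok
```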

4. The post-mortem document

Post-mortems capture learnings after resolution. Our incident post-mortem template uses this structure:

Template sections:

- Summary: [2-3 sentence overview: what happened, why, severity, duration]

- Timeline: [Chronological sequence using UTC timestamps], for example:
  - 2:47 AM: First customer report received
  - 2:49 AM: Alert fired, on-call paged
  - 2:52 AM: Incident channel created, incident commander assigned
  - 2:58 AM: Root cause identified (deploy #4872 connection leak)
  - 3:10 AM: Rollback initiated
  - 3:15 AM: Service restored, monitoring
  - 3:25 AM: Incident resolved

- Root cause: [Final identified cause with contributing factors]

- Impact: [Customers affected, duration, business metrics]

- Resolution: [What fixed it, why it worked]

- What went well: [Effective practices during response]

- What went wrong: [Gaps or failures during response]

- Action items: [Specific tasks with owners and deadlines]
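Because every timeline entry carries a timestamp, the key response intervals, and MTTR itself, fall out of simple subtraction. The sketch below computes minutes elapsed since detection for the example timeline and averages time to resolution across incidents. It assumes the first entry is detection and the last is resolution, attaches a placeholder calendar date because the example lists only clock times, and does not handle incidents that cross midnight.

```python
from datetime import datetime
from statistics import mean
from typing import Dict, List, Tuple

Timeline = List[Tuple[str, str]]  # (clock time in UTC, event description)

# The example timeline; the first entry is treated as detection, the last as resolution.
EXAMPLE_TIMELINE: Timeline = [
    ("2:47 AM", "First customer report received"),
    ("2:49 AM", "Alert fired, on-call paged"),
    ("2:52 AM", "Incident channel created, incident commander assigned"),
    ("2:58 AM", "Root cause identified (deploy #4872 connection leak)"),
    ("3:10 AM", "Rollback initiated"),
    ("3:15 AM", "Service restored, monitoring"),
    ("3:25 AM", "Incident resolved"),
]


def parse_clock(clock: str, date: str = "2025-01-01") -> datetime:
    """Parse '2:47 AM' on a placeholder date (naive datetime; all times assumed UTC)."""
    return datetime.strptime(f"{date} {clock}", "%Y-%m-%d %I:%M %p")


def minutes_since_detection(timeline: Timeline) -> Dict[str, float]:
    """Map each event to minutes elapsed since the first (detection) entry."""
    start = parse_clock(timeline[0][0])
    return {event: (parse_clock(clock) - start).total_seconds() / 60 for clock, event in timeline}


def time_to_resolution(timeline: Timeline) -> float:
    """Minutes from detection (first entry) to resolution (last entry)."""
    return (parse_clock(timeline[-1][0]) - parse_clock(timeline[0][0])).total_seconds() / 60


def mttr(timelines: List[Timeline]) -> float:
    """Mean time to resolution, in minutes, across several incidents."""
    return mean(time_to_resolution(t) for t in timelines)


if __name__ == "__main__":
    for event, minutes in minutes_since_detection(EXAMPLE_TIMELINE).items():
        print(f"+{minutes:>4.0f} min  {event}")
```

For the example, the alert fires 2 minutes after detection, the root cause is identified at 11 minutes, service is restored at 28 minutes, and the incident is resolved at 38 minutes.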

Keep post-mortems blameless: focus on system improvements and process refinements, not individual fault-finding. The goal is organizational learning.

How to automate communication to reduce MTTR

Templates help standardize communication, but automation eliminates the manual work entirely. Here's how we handle multi-channel updates from a single source.

Auto-create channels with context pre-loaded

When alerts fire from monitoring tools like Datadog or Sentry, we automatically create dedicated Slack channels following your naming convention. We pull in on-call responders based on affected services and surface relevant context from your service catalog without manual lookup: owners, dependencies, recent deployments, and runbook links.
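A naming convention is easiest to enforce in code. The convention below (`inc-<id>-<slug>`) is hypothetical, not incident.io's default, and `incident_channel_name` is an illustrative helper. It lowercases the title, collapses anything outside `a-z0-9` into hyphens, and truncates to 80 characters, which should keep names within Slack's channel-name rules (lowercase letters, numbers, hyphens, and underscores; at most 80 characters).

```python
import re

SLACK_CHANNEL_NAME_MAX = 80  # Slack caps channel names at 80 characters


def incident_channel_name(incident_id: int, title: str, prefix: str = "inc") -> str:
    """Build a channel name such as 'inc-2481-checkout-errors-us-customers'.

    Hypothetical convention: <prefix>-<incident id>-<slugified title>.
    """
    # Lowercase, then collapse every run of characters outside a-z/0-9 into one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    name = f"{prefix}-{incident_id}-{slug}" if slug else f"{prefix}-{incident_id}"
    # Truncate to the length limit without leaving a dangling hyphen.
    return name[:SLACK_CHANNEL_NAME_MAX].rstrip("-")


if __name__ == "__main__":
    print(incident_channel_name(2481, "Checkout errors: US customers!"))
```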

The /inc command runs entire incident workflows inside Slack. Type /inc escalate @database-team and we page the right people via phone, SMS, or push notification based on urgency. We capture every action automatically on the incident timeline: role assignments, severity changes, pinned messages. This builds your post-mortem as the incident unfolds rather than requiring a 90-minute reconstruction from memory.
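The idea behind automatic timeline capture is that each command or action appends a timestamped event, so the post-mortem timeline is just a sorted rendering of what was recorded. A minimal in-memory sketch; `IncidentTimeline`, the event kinds, and the output format are hypothetical, and a real system would persist events rather than keep them in a list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class TimelineEvent:
    at: datetime
    kind: str     # illustrative kinds: "role_assigned", "severity_changed", "message_pinned"
    summary: str


@dataclass
class IncidentTimeline:
    events: List[TimelineEvent] = field(default_factory=list)

    def record(self, kind: str, summary: str, at: Optional[datetime] = None) -> TimelineEvent:
        """Append an event; defaults to the current UTC time."""
        event = TimelineEvent(at or datetime.now(timezone.utc), kind, summary)
        self.events.append(event)
        return event

    def render(self) -> str:
        """Render events in chronological order as a post-mortem timeline list."""
        ordered = sorted(self.events, key=lambda e: e.at)
        return "\n".join(f"- {e.at:%H:%M} UTC: {e.summary}" for e in ordered)


if __name__ == "__main__":
    timeline = IncidentTimeline()
    t = datetime(2025, 1, 1, 2, 52, tzinfo=timezone.utc)  # placeholder date
    timeline.record("role_assigned", "Incident commander assigned", at=t)
    timeline.record("message_pinned", "Root cause: deploy #4872 connection leak", at=t.replace(minute=58))
    print(timeline.render())
```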

"One of the improvements that incident.io has brought to our incident response processes is the reduction of that cognitive overload. It's one tool … It's in the same context." - Verified user review of incident.io

Update status pages from Slack

Manually updating status pages requires logging into separate tools, finding the right incident, copying text from Slack, and publishing. This context switch takes 3-5 minutes and often gets forgotten during high-pressure response.

Our status page integration lets you publish updates directly from the incident channel using /inc update. We automatically create or update the corresponding status page incident, sync component status, and push messages to subscribers. When you type /inc resolve, the status page updates immediately. No separate login, no forgotten notifications, no stale "investigating" messages haunting your customers six hours after resolution.
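One way to picture the Slack-to-status-page sync is as a pure function from the incident's new status to the public update it should produce. This sketch is hypothetical: the component states are illustrative labels rather than any status page product's API values, and it skips a publish only when nothing changed, so a resolve always produces an update and cannot leave a stale "investigating" message behind.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping from incident status to the state shown for affected components.
# "monitoring" means a fix is live and being watched, so components show as operational.
COMPONENT_STATE = {
    "investigating": "degraded",
    "identified": "degraded",
    "monitoring": "operational",
    "resolved": "operational",
}


@dataclass(frozen=True)
class StatusPageUpdate:
    incident_status: str
    component_state: str
    message: str
    notify_subscribers: bool


def build_status_page_update(
    new_status: str, message: str, last_published: Optional[str]
) -> Optional[StatusPageUpdate]:
    """Return the update to publish, or None if it would duplicate the last one."""
    if new_status not in COMPONENT_STATE:
        raise ValueError(f"unknown incident status: {new_status!r}")
    if new_status == last_published and not message.strip():
        return None  # same status and nothing new to say
    return StatusPageUpdate(
        incident_status=new_status,
        component_state=COMPONENT_STATE[new_status],
        message=message,
        notify_subscribers=True,
    )
```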

Favor's engineering team reported their previous setup required manual status page updates through separate logins: "There was no way for engineers to post an update to Status Page without logging in and having a Status Page license." Automating this removed friction that delayed customer communication.

AI-drafted summaries

Our AI SRE reads your incident timeline (Slack messages, /inc commands, role changes, pinned context) and generates summaries automatically. When executives ask "What's happening?" mid-incident, AI SRE drafts business-impact summaries. When incidents resolve, we produce 80%-complete post-mortems using captured timeline data, decisions, and call transcriptions from Scribe, which achieved a 63% direct acceptance rate, with an additional 26% edited before use.

"The AI features really reduce the friction of incident management." - Verified user review of incident.io

The AI system can automate up to 80% of incident response by connecting telemetry, code changes, and past incidents to identify root causes, then drafting recommended fixes. Engineers spend 10 minutes refining AI-generated post-mortems instead of 90 minutes writing from scratch.

Follow-up automation

After resolution, we automatically create follow-up tasks in Jira or Linear, populating fields based on incident attributes. Configure templates to route tasks to different projects depending on severity or affected services. The platform syncs incident status changes bidirectionally with Jira. When you enter follow-ups in the incident channel, we create the corresponding tickets automatically.
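Routing rules such as "severity-1 follow-ups go to a central reliability project, everything else goes to the owning team" reduce to a small lookup. A sketch of that rule with hypothetical project keys and priorities; it builds a ticket draft only, and the actual Jira or Linear call is left out because field names depend on your tracker configuration.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class FollowUp:
    title: str
    severity: int  # 1 = most severe
    service: str


@dataclass(frozen=True)
class TicketDraft:
    project: str
    summary: str
    priority: str
    labels: Tuple[str, ...]


# Hypothetical routing table: project keys are placeholders for your own projects.
SERVICE_PROJECTS: Dict[str, str] = {"payments": "PAY", "database": "DBA"}
DEFAULT_PROJECT = "ENG"
SEV1_PROJECT = "REL"  # severity-1 follow-ups go to a central reliability project


def route_follow_up(item: FollowUp) -> TicketDraft:
    """Choose project and priority from incident attributes; no tracker API is called."""
    if item.severity == 1:
        project = SEV1_PROJECT
    else:
        project = SERVICE_PROJECTS.get(item.service, DEFAULT_PROJECT)
    priority = "High" if item.severity <= 2 else "Medium"
    return TicketDraft(
        project=project,
        summary=item.title,
        priority=priority,
        labels=("incident-follow-up", f"service-{item.service}"),
    )
```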

Comparing communication workflows: incident.io vs. PagerDuty vs. Opsgenie

Compare communication capabilities across platforms:

| Feature | incident.io | PagerDuty | Opsgenie |
|---|---|---|---|
| Status page updates from chat | ✅ Native /inc update command in Slack | ❌ Requires separate Statuspage login or API | ❌ Requires separate Statuspage login |
| AI-drafted stakeholder summaries | ✅ AI SRE generates executive briefs and post-mortems | ⚠️ AI add-on available, not core platform | ❌ Basic automation only |
| Slack-native timeline capture | ✅ Auto-captures all activity, pinned messages, role changes | ⚠️ Slack integration notifies but doesn't capture timelines | ⚠️ Slack integration for alerting, manual docs |
| Automated post-mortem generation | ✅ 80% complete from timeline data | ❌ Manual template completion | ❌ Manual template completion |
| Multi-channel external comms | ✅ Status pages, webhooks, email subscribers automatic | ⚠️ Requires separate Statuspage subscription | ⚠️ Requires separate Statuspage (Atlassian) |
| Follow-up task automation | ✅ Auto-creates Jira/Linear tickets with field mapping | ⚠️ Manual ticket creation or API scripting | ✅ Good Jira integration (same vendor) |

PagerDuty excels at alerting and on-call but treats communication as a separate concern requiring add-ons or external tools. Opsgenie is sunsetting in April 2027, creating migration urgency. We built incident.io specifically for Slack-native coordination where communication automation is core platform functionality, not an add-on.

ROI: The cost of silence vs. the value of automation

Manual communication creates three measurable cost categories:

1. Coordination time waste: 12-15 minutes lost per incident before troubleshooting starts. For a 100-person team handling 20 incidents monthly, that's $11,400 a year burned on coordination overhead.

2. Extended MTTR from context switching: Context switching drains up to 40% of productivity daily, with recovery taking 23+ minutes per interruption. During incidents, every tool switch adds cognitive load that delays resolution.

3. Customer trust erosion from stale updates: When status pages show "investigating" for six hours after resolution, support teams field dozens of "is this actually fixed?" inquiries. The reputation damage compounds. Customers remember poor communication longer than they remember the technical outage.

We deliver returns across all three categories:

- Coordination savings: Favor reduced MTTR by 37% by automating incident coordination in Slack, eliminating manual channel setup, role assignment, and status updates

- Time reclamation: Intercom's SRE team cut median response crew assembly time by roughly 40% through automated workflows

- Engineer productivity: Teams save time per incident via Slack-native workflows that eliminate context switching between tools

The math for a 100-person engineering team handling 20 incidents monthly:

- Manual coordination cost: 15 minutes × 20 incidents × 12 months = 3,600 minutes annually

- At $190 loaded hourly cost: 60 hours × $190 = $11,400/year baseline waste

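- Post-incident documentation: 90 minutes manual vs. 10 minutes with AI assistance = 80 minutes saved per post-mortem. Across 20 post-mortems, that's 1,600 minutes (~26.7 hours), or ~$5,100; with a post-mortem for every incident (240 a year), it grows to 19,200 minutes, or $60,800/year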

- You reclaim $16,500+ in value annually before factoring MTTR improvement and customer satisfaction gains
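The arithmetic above is easy to rerun with your own numbers. A minimal sketch: the inputs are the article's example values (15 minutes per incident, 20 incidents a month, $190 loaded hourly cost, 90 vs. 10 minutes per post-mortem), and the number of post-mortems is a parameter because the documentation line multiplies by 20 while the coordination line covers 12 months. The function names are illustrative.

```python
def annual_coordination_cost(minutes_per_incident: float, incidents_per_month: int, hourly_cost: float) -> float:
    """Cost of manual coordination over 12 months."""
    total_minutes = minutes_per_incident * incidents_per_month * 12
    return total_minutes / 60 * hourly_cost


def documentation_savings(manual_minutes: float, assisted_minutes: float, postmortems: int, hourly_cost: float) -> float:
    """Value of the time saved writing the given number of post-mortems."""
    saved_minutes = (manual_minutes - assisted_minutes) * postmortems
    return saved_minutes / 60 * hourly_cost


if __name__ == "__main__":
    coordination = annual_coordination_cost(15, 20, 190)
    docs_20 = documentation_savings(90, 10, 20, 190)
    docs_240 = documentation_savings(90, 10, 20 * 12, 190)
    print(f"coordination: ${coordination:,.0f}/year")
    print(f"documentation, 20 post-mortems: ${docs_20:,.0f}")
    print(f"documentation, 240 post-mortems: ${docs_240:,.0f}")
```

With the example inputs this prints $11,400 for coordination, about $5,067 for 20 post-mortems (rounded to ~$5,100 in the text), and $60,800 if all 240 incidents in a year get a post-mortem.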

Manual incident communication slows your response and burns engineering time. The four templates above provide immediate structure, but automation eliminates the root problem: context switching between fixing issues and updating stakeholders. We unify on-call, response coordination, status pages, and post-mortem generation in Slack. This cuts MTTR through workflow automation rather than asking engineers to write faster during 3 AM outages.

Book a demo to see how teams reduced MTTR by up to 80% by eliminating communication overhead.

Terminology

MTTR (Mean Time To Resolution): Average time from incident detection to full resolution. A lower MTTR means faster recovery and less customer impact. DORA research shows elite performers resolve incidents in under one hour.

Cognitive load: The mental effort required to process information and make decisions. Context switching decreases cognitive function, resulting in lower quality work and slower resolution during incidents.

Slack-native: Tools that function entirely within Slack without requiring users to switch to web UIs or other applications. Our philosophy: "the only time you should ever leave Slack is when you're actually fixing the thing."

Post-mortem: A blameless document that helps you identify root causes and learn from the response. Should include timeline, root cause analysis, impact assessment, and action items with owners.

Timeline capture: Automatically recording updates (severity changes, status changes) and pinned Slack messages as events on your incident timeline, giving an overview of key moments without manual note-taking.

AI SRE: Artificial intelligence that pulls data from alerts, telemetry, code changes, and past incidents to identify root causes, recommend fixes, and automate investigation. Can handle up to 80% of incident response tasks.

On-call rotation: A schedule system that ensures engineers are available to respond to incidents 24/7 by rotating responsibility among team members. Requires clear escalation paths and sustainable alert volumes to prevent burnout.

Coordination overhead: Time spent assembling responders and updating tools during an incident before actual troubleshooting begins. Costs 12-15 minutes per incident in manual workflows.
