Best incident management tools for cloud-native SaaS: Kubernetes, microservices, and distributed systems

Updated January 26, 2026

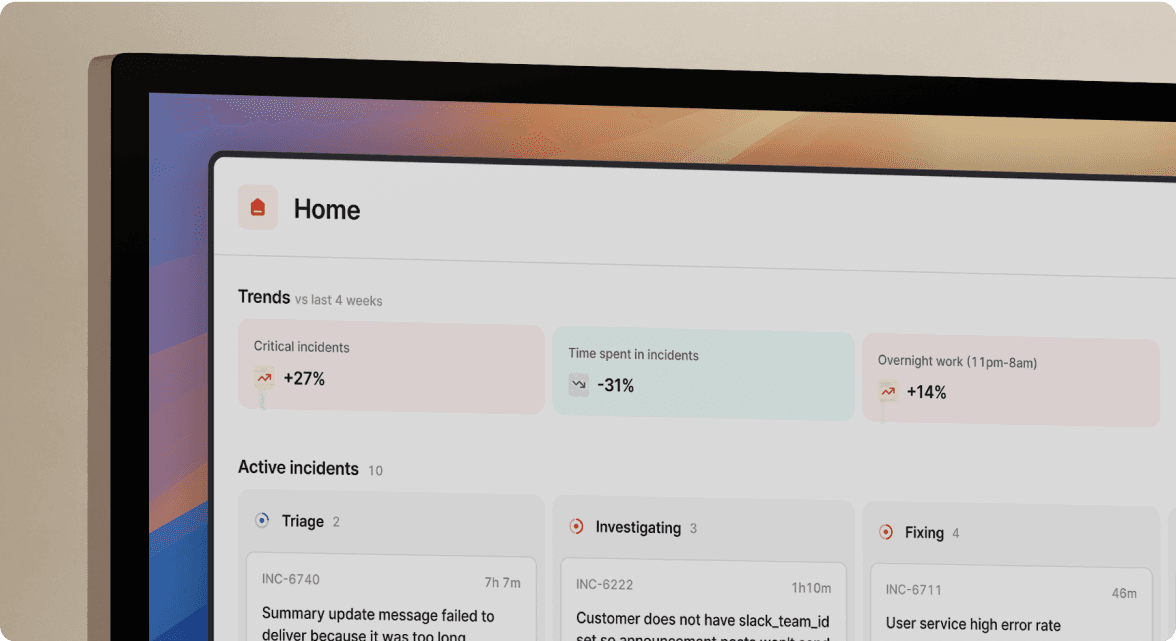

TL;DR: Traditional incident management tools assume static servers, not ephemeral Kubernetes pods and distributed microservices. When incidents hit cloud-native stacks, teams waste 12 minutes on coordination before troubleshooting starts. Context scatters across Datadog, PagerDuty, Slack, Jira, and tribal knowledge while pods restart every few minutes. Slack-native platforms like incident.io eliminate coordination overhead by auto-creating channels, surfacing service dependencies instantly, and capturing timelines automatically. For SRE teams managing Kubernetes and microservices, shifting from alert-routing to coordination-first platforms can reduce MTTR by up to 80%.

When your Kubernetes cluster throws a CrashLoopBackOff at 2 AM and five downstream services start timing out, the technical fix is often straightforward once you find it. The nightmare is the 15 minutes you spend figuring out which team owns the payment service, whether the database connection pool is exhausted, and which recent deployment might have introduced the regression. In distributed systems, containers are ephemeral and may live only seconds or minutes, making traditional incident response workflows fundamentally broken. Your runbooks reference servers that no longer exist. Your monitoring dashboard shows 47 pods across three availability zones, but the failing one already restarted. The incident management tool that worked perfectly for a Rails monolith on three EC2 instances cannot handle 200 microservices orchestrated by Kubernetes.

Why cloud-native incident response breaks traditional workflows

Traditional incident management assumes static, long-lived resources. You SSH into a server, check logs, restart the process. Cloud-native architectures invert these assumptions.

The ephemeral infrastructure problem

Kubernetes designs pods as ephemeral resources to replace, not repair. When a pod crashes, Kubernetes spins up a replacement. The failed pod takes your forensic logs with it unless you centralize logging. Your static runbook says "check server logs at /var/log/app.log," but in Kubernetes, there is no persistent server. Distributed environments with volatile containers make it difficult to conduct investigations because resources are constantly created, destroyed, and moved within seconds.

"I like best about incident.io that it augments your processes with all the steps that just make sense during incident handling... Last but not least, the customer support has been nothing but amazing. Feedback is immediately heard and reacted to, and bugs were fixed in a matter of days max." - Leonard B. on G2

The service dependency web

In a monolithic application, failures follow linear paths. The app server breaks, customers see errors, you fix the app server. In microservices, failures cascade through dependency chains. The checkout service times out because the inventory service responds slowly, which happens because the authentication service exhausts its database connection pool. Manual service dependency mapping fails at scale. When your architecture has 50+ services, remembering which ones depend on the payment gateway is impossible during a 3 AM incident.
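To make the cascade concrete, here is a minimal Python sketch of the checkout, inventory, and authentication chain described above. The dependency map, the `payment-gateway` edge, and the `auth-db` name are illustrative assumptions, not data from any real catalog; the point is only that a machine-readable graph can answer "what does this service depend on?" and "who depends on this service?" without relying on memory.

```python
from collections import deque

# Hypothetical dependency map: each service lists the services it calls.
DEPENDS_ON = {
    "checkout": ["inventory", "payment-gateway"],
    "inventory": ["authentication"],
    "authentication": ["auth-db"],
    "payment-gateway": [],
    "auth-db": [],
}


def transitive_dependencies(service, graph):
    """Return every service that `service` relies on, directly or indirectly.

    Uses a breadth-first walk, so nearer dependencies come first.
    """
    seen = {service}
    queue = deque([service])
    order = []
    while queue:
        current = queue.popleft()
        for dep in graph.get(current, []):
            if dep not in seen:
                seen.add(dep)
                order.append(dep)
                queue.append(dep)
    return order


def dependents_of(target, graph):
    """Return the services that would be affected if `target` degrades."""
    return sorted(
        name
        for name in graph
        if name != target and target in transitive_dependencies(name, graph)
    )


print(transitive_dependencies("checkout", DEPENDS_ON))
print(dependents_of("authentication", DEPENDS_ON))
```

With this toy graph, asking for the dependents of `authentication` returns both `inventory` and `checkout`, which is the lookup nobody wants to do from memory at 3 AM.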

The coordination tax in distributed systems

Coordination and investigation phases consume the majority of incident time, while the actual technical repair finishes quickly once the right team has the right context. The typical incident breakdown: 12 minutes assembling the team and gathering context, 20 minutes troubleshooting, 4 minutes on mitigation, and 12 minutes cleaning up. For mid-market SaaS companies, one hour of downtime costs $25,000-$100,000 in lost revenue and customer trust.
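The arithmetic behind that breakdown can be checked with a short Python sketch. It uses only the figures quoted above and assumes, for illustration, that downtime cost accrues evenly across the hour; real costs are rarely that linear.

```python
# Phase durations from the typical incident breakdown above (minutes).
PHASE_MINUTES = {
    "coordination": 12,
    "troubleshooting": 20,
    "mitigation": 4,
    "cleanup": 12,
}

# Hourly downtime cost range quoted above (USD, low and high estimates).
HOURLY_DOWNTIME_COST = (25_000, 100_000)

total_minutes = sum(PHASE_MINUTES.values())

# Share of the incident spent in each phase, as a percentage.
shares = {
    phase: round(100 * minutes / total_minutes, 1)
    for phase, minutes in PHASE_MINUTES.items()
}


def cost_of(minutes, hourly_cost):
    """Cost of `minutes` of downtime, assuming cost accrues linearly."""
    return minutes * hourly_cost / 60


# Low and high estimates for the coordination phase alone.
coordination_cost = tuple(
    cost_of(PHASE_MINUTES["coordination"], hourly) for hourly in HOURLY_DOWNTIME_COST
)

print(total_minutes, shares, coordination_cost)
```

Under these assumptions the incident lasts 48 minutes, coordination alone accounts for a quarter of it, and those 12 minutes correspond to roughly $5,000 to $20,000 of the hourly downtime figure.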

The coordination tax compounds in cloud-native environments because context scatters. Your Datadog alert fires, creating a notification in Slack. Someone manually creates an incident channel. You hunt through PagerDuty schedules to find who owns the affected service. You paste links to Grafana dashboards. You scroll through recent GitHub deployments. By the time you assemble the context, 15 minutes have elapsed.

Core requirements for Kubernetes and microservices incident management

Cloud-native incident response demands capabilities that traditional alerting platforms were never designed to provide.

Service Catalog integration with automatic context

You need a live service registry that maps every alert to the owning team, dependencies, recent deployments, and relevant runbooks automatically. When a Datadog alert fires, incident.io's Service Catalog populates the channel with alert details, dependencies, and recent deployments. This eliminates the manual archaeology of finding service owners in spreadsheets or outdated wiki pages.

Netflix leverages this capability to automatically map incidents to their internal systems: "It's helped unlock better automation. We've done cool things where an alert fires, and we automatically create an incident with the appropriate field set using Catalog that maps to our systems."
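The idea of mapping an alert to its owning team and context can be sketched in a few lines of Python. This is not incident.io's Catalog API: the `CatalogEntry` fields, the `CATALOG` contents, the example URL, and the `enrich_alert` helper are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """One service in a hypothetical service catalog."""
    owner_team: str
    depends_on: list = field(default_factory=list)
    recent_deploys: list = field(default_factory=list)
    runbook_url: str = ""


# Illustrative catalog contents; every value here is made up.
CATALOG = {
    "payment-service": CatalogEntry(
        owner_team="payments",
        depends_on=["auth-db"],
        recent_deploys=["payment-service release (hypothetical)"],
        runbook_url="https://example.com/runbooks/payment-service",
    ),
}


def enrich_alert(alert, catalog):
    """Attach owner, dependencies, deploys, and runbook to an alert dict.

    Alerts for services missing from the catalog are flagged rather than
    dropped, so a responder can see that the catalog data is incomplete.
    """
    entry = catalog.get(alert.get("service"))
    if entry is None:
        return {**alert, "catalog_match": False, "owner_team": None}
    return {
        **alert,
        "catalog_match": True,
        "owner_team": entry.owner_team,
        "depends_on": list(entry.depends_on),
        "recent_deploys": list(entry.recent_deploys),
        "runbook_url": entry.runbook_url,
    }


alert = {"service": "payment-service", "title": "p99 latency above threshold"}
print(enrich_alert(alert, CATALOG))
```

Flagging unmatched alerts instead of failing silently matters in practice, because an incomplete catalog is one of the more common reasons context fails to appear in the channel.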

Slack-native execution, not just notifications

ChatOps is not enough. Sending Slack notifications about incidents happening elsewhere is still context-switching. We architected incident.io to run entirely inside Slack or Microsoft Teams. Your on-call engineer types /inc assign @sarah to make someone incident commander, types /inc escalate @database-team to pull in specialists, and types /inc resolve when fixed. Every action happens in the same interface where your team already coordinates.

"Incident.io helps promote a positive incident management culture! As an organisation, now that we have become more accustomed to using it for incident management, it's hard to think about how we used to manage without it!" - Verified user review of incident.io

Deep observability integrations

Your incident management platform must pull graphs, traces, and logs from Datadog, Prometheus, Grafana, or New Relic directly into the incident channel. We record Datadog monitors as attachments, making them visible in both the incident timeline and the web dashboard. When you paste a Datadog snapshot link in Slack and pin the message, we automatically unfurl the image and add it to the timeline.

Automated timeline capture

Compliance audits for SOC 2, ISO 27001, or GDPR require complete, timestamped incident documentation. You cannot manually reconstruct timelines in distributed environments because critical decisions happen across Slack threads, Zoom calls, and multiple tool interfaces. We record everything automatically: every Slack message in the incident channel, every /inc command execution, every role assignment, every severity change. Our Scribe AI transcribes incident calls in real time, extracting decision points and flagging root causes mentioned in conversation.
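As a rough illustration of what timestamped capture means structurally, the Python sketch below models a timeline as an append-only list of immutable events. The `TimelineEvent` fields and the `Timeline` class are assumptions made for this example and do not describe incident.io's internal data model.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class TimelineEvent:
    """A single immutable timeline entry (hypothetical shape)."""
    at: datetime
    kind: str      # e.g. "message", "command", "role_assignment"
    actor: str
    detail: str


class Timeline:
    """Append-only event log: entries can be added but never edited."""

    def __init__(self):
        self._events = []

    def record(self, kind, actor, detail, at=None):
        """Store an event, stamping it with the current UTC time by default."""
        event = TimelineEvent(at or datetime.now(timezone.utc), kind, actor, detail)
        self._events.append(event)
        return event

    def ordered(self):
        """Return events in chronological order, whatever the arrival order."""
        return sorted(self._events, key=lambda event: event.at)


timeline = Timeline()
timeline.record("command", "sarah", "/inc severity critical")
timeline.record("role_assignment", "sarah", "incident commander")
for event in timeline.ordered():
    print(event.at.isoformat(), event.kind, event.actor, event.detail)
```

Recording at the moment of the action, rather than reconstructing afterwards, is what lets the resulting record serve as audit evidence.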

"When we were looking for a tool to improve the experience for both our incident response teams as well as for communicating effectively with management, incident.io came through on both counts... Also, their customer success and responsiveness to bug reports and feature requests is superb." - Verified user review of incident.io

Integration with existing alerting infrastructure

You should not have to rip and replace your alerting stack. We integrate with PagerDuty as an alert source, letting you test on-call schedules and escalation paths without migrating your Datadog or Grafana alert providers. When you connect the integration, we automatically pull in PagerDuty services, teams, users, escalation policies, and schedules into the Catalog. You can page any PagerDuty escalation policy or user directly from Slack using /inc escalate.

Comparing incident management tools for distributed systems

Incident management platforms handle cloud-native complexity differently. The gap between alert-routing tools and coordination-first platforms becomes critical when you manage Kubernetes failures.

Table 1: Core capabilities comparison

| Feature | incident.io | PagerDuty | Opsgenie |
|---|---|---|---|
| Slack-native workflow | Full lifecycle via /inc commands | Notifications only, web UI required | Notifications only |
| Service Catalog depth | Automatic dependencies, owners, recent changes | Limited service directory | Basic service mapping |
| AI root cause analysis | Automates up to 80% of incident response | Add-on feature | Basic automation |
| Post-mortem automation | 80% auto-drafted from timeline | Manual reconstruction | Manual reconstruction |

Table 2: Setup and pricing comparison

| Feature | incident.io | PagerDuty | Opsgenie |
|---|---|---|---|
| On-call pricing | $20/user/month add-on | Included in Professional tier | Included |
| Total pricing | $45/user/month (Pro + on-call) | Contact sales | Contact sales |
| Multi-region support | Built-in | Enterprise tier | Yes |
| Pricing transparency | Public pricing | Hidden pricing | Hidden pricing |

The verdict for SRE teams

PagerDuty excels at alerting infrastructure. If your primary need is sophisticated alert routing, deduplication, and waking people up, PagerDuty's years of investment in this domain show. However, while PagerDuty can trigger incidents with in-line commands in Slack, it stops short of managing the full incident lifecycle in chat, leaving teams to toggle between tools during critical moments.

incident.io is purpose-built for coordination in cloud-native environments. We eliminate coordination overhead by handling the entire incident lifecycle inside Slack, from declaration through resolution. For teams where the bottleneck is coordination rather than alerting sophistication, our Slack-native architecture reduces MTTR by eliminating context-switching. Our AI SRE assistant automates investigation tasks that would otherwise require manual correlation of deployment history, alert patterns, and service dependencies.

Opsgenie is being sunset. Atlassian stopped new Opsgenie purchases on June 4, 2025, and access ends April 5, 2027. Any organization still running or evaluating Opsgenie faces a mandatory migration before that date. Atlassian is consolidating Opsgenie capabilities into Jira Service Management, designed for IT service desk workflows rather than real-time SRE incident response.

Homegrown Slack bots break when Slack APIs change, lack capabilities like AI root cause analysis or automated post-mortems, and require ongoing maintenance that competes with product development. One customer noted: "It's tempting to build a Slack-based incident system yourself, don't... They have built a system with sane defaults and building blocks to customize everything".

Reducing cognitive load with Slack-native coordination

The mental overhead of coordinating a distributed systems incident often exceeds the technical challenge of fixing it. Automating the logistics lets SREs focus on problem-solving.

Automated incident channel creation with role assignment

When a Datadog monitor threshold triggers, we automatically create a dedicated Slack channel with a structured naming convention like #inc-2847-api-latency-spike. We page the on-call engineer from the affected service's schedule and auto-invite them. Service owners join based on Service Catalog configuration. The channel pins the triggering alert with full context, recent deployment history, and dependent services immediately visible.

Compare this to the manual process: someone sees an alert in a general #ops-alerts channel, manually creates a Slack channel with an inconsistent name, hunts through PagerDuty or a spreadsheet to find who is on-call, manually invites them, then starts pasting alert links. This manual coordination burns 12 minutes before technical troubleshooting begins.
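A structured naming convention like the #inc-2847-api-latency-spike example above is easy to generate consistently. The Python sketch below is one possible way to do it, not incident.io's implementation; the `incident_channel_name` helper is hypothetical, and the 80-character cap reflects Slack's channel name limit.

```python
import re


def incident_channel_name(incident_id, title, max_length=80):
    """Build a channel name such as 'inc-2847-api-latency-spike'.

    Lowercases the title, collapses anything that is not a letter or digit
    into single hyphens, and trims the result to `max_length` characters.
    """
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    name = f"inc-{incident_id}-{slug}" if slug else f"inc-{incident_id}"
    return name[:max_length].rstrip("-")


print(incident_channel_name(2847, "API latency spike"))
```

Generating the name from the incident ID and title means every channel sorts and searches the same way, which is the consistency the manual process loses.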

"I appreciate how incident.io consolidates workflows that were spread across multiple tools into one centralized hub within Slack, which is really helpful because everyone's already there. It really helps teams manage and tackle incidences in a more uniform way." - Verified user review of incident.io

Command-based workflow that matches operational speed

Slack slash commands execute at conversation speed. /inc severity critical escalates severity in two seconds. /inc assign @database-lead designates incident command instantly. We record every command in the timeline automatically, eliminating the designated note-taker role that pulls one engineer away from troubleshooting. Watch this demonstration of Slack-native incident management showing the full declare-to-resolve workflow without leaving Slack.

Automated status page updates

During a Kubernetes incident affecting customer-facing services, someone needs to update the public status page and notify internal stakeholders. This administrative work interrupts your incident commander's focus. We automate status page updates and stakeholder notifications through workflow triggers. When you escalate an incident to "critical" severity, a workflow can automatically post to the public status page and send a formatted update to the executive team's Slack channel.

For a walkthrough of these automated workflows, see our startup response demo showing incident declaration, escalation, and resolution in real-world scenarios.
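Severity-based triggers of this kind boil down to a small rule table. The Python sketch below shows the shape of that logic under assumed names: the severity levels other than "critical", the `WORKFLOW_RULES` entries, and the action identifiers are hypothetical and do not correspond to incident.io's workflow configuration format.

```python
# Hypothetical severity scale, lowest to highest.
SEVERITY_ORDER = ["minor", "major", "critical"]

# Each rule fires when the incident severity is at or above `min_severity`.
WORKFLOW_RULES = [
    {"min_severity": "critical", "action": "post_public_status_page"},
    {"min_severity": "critical", "action": "notify_exec_channel"},
    {"min_severity": "major", "action": "notify_internal_stakeholders"},
]


def actions_for(severity):
    """Return the actions triggered for a given severity, in rule order."""
    if severity not in SEVERITY_ORDER:
        raise ValueError(f"unknown severity: {severity!r}")
    rank = SEVERITY_ORDER.index(severity)
    return [
        rule["action"]
        for rule in WORKFLOW_RULES
        if rank >= SEVERITY_ORDER.index(rule["min_severity"])
    ]


print(actions_for("critical"))
print(actions_for("major"))
```

Keeping the rules as data rather than code means the incident commander never has to remember who needs notifying at which severity.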

Leveraging AI for root cause analysis in microservices

High-cardinality data in distributed systems makes finding the root cause like searching for a specific log line among millions. AI assistance that correlates code changes, alert patterns, and historical incidents accelerates investigation.

AI-powered code change correlation and automated fixes

Our AI SRE connects telemetry, code changes, and past incidents to fix issues faster. When an incident fires, the AI analyzes recent pull requests merged to production, correlates timestamps with alert onset, and surfaces the likely deployment that introduced the regression. The AI can even generate a fix and open a pull request directly from Slack. If the root cause is a configuration error or simple code bug, AI SRE drafts a remediation PR that your engineer can review and merge. Watch AI SRE in action demonstrating real-time root cause identification and fix generation.
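The timestamp correlation step can be illustrated with a deliberately simple heuristic. The Python sketch below ranks deployments that landed shortly before alert onset, most recent first. It is a toy stand-in for the idea, not a description of how AI SRE works; the function name, the 60-minute window, and the deployment names are assumptions.

```python
from datetime import datetime, timedelta


def rank_suspect_deploys(deploys, alert_onset, window=timedelta(minutes=60)):
    """Rank deployments by how closely they preceded the alert.

    `deploys` is a list of (name, deployed_at) tuples. Only deployments
    that happened before the alert and within `window` are kept; the
    result pairs each name with its lead time, smallest first.
    """
    candidates = []
    for name, deployed_at in deploys:
        lead_time = alert_onset - deployed_at
        if timedelta(0) <= lead_time <= window:
            candidates.append((name, lead_time))
    return sorted(candidates, key=lambda candidate: candidate[1])


onset = datetime(2026, 1, 26, 2, 0)
deploys = [
    ("checkout-release", onset - timedelta(hours=3)),      # too old
    ("inventory-release", onset - timedelta(minutes=25)),
    ("auth-release", onset - timedelta(minutes=4)),
    ("search-release", onset + timedelta(minutes=10)),     # after the alert
]
for name, lead_time in rank_suspect_deploys(deploys, onset):
    print(name, lead_time)
```

Recency alone is a weak signal, which is why the approach described above also weighs alert patterns and past incidents rather than relying on timestamps by themselves.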

Automated investigation reducing MTTR

Our AI SRE automates up to 80% of incident response tasks by handling repetitive investigation work: pulling relevant Datadog metrics into the incident channel, identifying similar past incidents with comparable symptoms, extracting key decisions from call transcriptions, and suggesting next diagnostic steps based on service architecture. One customer reported: "incident.io saves us hours per incident when considering the need for us to write up the incident, root cause and actions".

Our AI understands service dependencies from the Catalog and applies that context to hypothesis generation. For a technical deep-dive, watch this Inside Traversal interview on AI SRE agents discussing architectural approaches to autonomous incident investigation.

Automating the post-incident review process

Post-mortems that take 90 minutes to reconstruct from scattered sources delay learning and frustrate engineers. Automated timeline capture and AI-assisted drafting reduce post-mortem completion time to 10 minutes.

Automatic timeline construction and AI-drafted post-mortems

We capture your timeline as the incident happens: every Slack message in the incident channel, every /inc command execution, every role assignment, every pinned Datadog graph. Scribe AI transcribes Zoom or Google Meet calls in real time, extracting key decisions and flagging root causes mentioned verbally. When you type /inc resolve, we generate a post-mortem draft using this captured data. Engineers spend 10 minutes refining the narrative rather than 90 minutes reconstructing events from memory.

"Incident.io has dramatically improved the experience for calling incidents in our organization, and improved the process we use to handle them... 1-click post-mortem reports - this is a killer feature, time saving, that helps a lot to have relevant conversations around incidents (instead of spending time curating a timeline)" - Verified user review of incident.io

Compliance-ready documentation and follow-up automation

SOC 2 and ISO 27001 auditors require complete incident documentation with timestamps, assigned roles, and resolution evidence. Automated capture eliminates documentation gaps. Our GDPR-compliant data retention allows you to retain and remove data in accordance with regulatory requirements. For organizations handling sensitive security incidents, we support private incidents with restricted access.

Post-mortem action items often languish in Google Docs because teams disconnect them from actual task tracking. We integrate with Jira and Linear to automatically create follow-up tickets with incident context pre-populated. When the post-mortem identifies "migrate payment service connection pooling to use adaptive sizing," that action item becomes a Jira ticket assigned to the payment team automatically.

90-day plan to reduce MTTR in cloud-native environments

Rolling out cloud-native incident management requires a phased approach that balances integration complexity with quick wins.

Days 1-30: Foundation setup and initial integrations

Install incident.io in 30 seconds by connecting your Slack workspace. Configure the Datadog integration by tagging monitors with service identifiers. If you run PagerDuty, connect it directly as an alert source to test on-call schedules without migrating alert providers. Import your service inventory into the Service Catalog, defining owners, dependencies, and Slack channels. Connect the Backstage integration if you already maintain a service catalog there. Run your first 3-5 real incidents through the platform and measure baseline MTTR.

"For our engineers working on incident, the primary interface for incident.io is slack. It's where we collaborate and where we were gathering to handle incident before introducing incident.io... That's where incident.io really shines: it allows to seamlessly nudge or suggest actions." - Verified user review of incident.io

Days 31-60: Workflow optimization and team training

Build workflows for your three most common incident types: database connection exhaustion, Kubernetes pod crashloops, and third-party API failures. Configure severity-based automation where critical incidents automatically page the executive on-call and post to the internal status page. Set up reminders and nudges to prompt post-mortem completion within 24 hours. Run a 30-minute training session focused on five commands: /inc declare, /inc assign, /inc escalate, /inc severity, and /inc resolve. Establish the expectation that post-mortems publish within 24 hours using AI-drafted timelines. Configure Jira integration to auto-create follow-up tickets.

Days 61-90: AI enablement and measurement

Activate AI-powered root cause analysis and investigation assistance. Configure AI SRE to analyze recent deployments and correlate with alert onset. Test fix PR generation on non-critical incidents to build team confidence. Pull MTTR data from the Insights dashboard covering the full 90-day period. Compare the first 30 days against days 61-90 to measure improvement. Identify outlier incidents where MTTR remained high and investigate whether Service Catalog data was incomplete. Present findings to engineering leadership showing MTTR trends, incident volume by service, and time savings from automated post-mortems. Calculate ROI by comparing engineer hours saved against platform cost.
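The before-and-after comparison and the ROI estimate are simple enough to script. The Python sketch below assumes you have exported incident durations in minutes for each period. The sample durations, engineer hourly cost, and seat count are invented for illustration; only the $45/user/month figure comes from the pricing table above.

```python
from statistics import mean


def mttr(durations_minutes):
    """Mean time to resolution for a list of incident durations."""
    if not durations_minutes:
        raise ValueError("need at least one incident")
    return mean(durations_minutes)


def percent_reduction(baseline, current):
    """Percentage drop from `baseline` to `current`, to one decimal place."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return round(100 * (baseline - current) / baseline, 1)


def net_monthly_savings(hours_saved, hourly_cost, seats, price_per_seat):
    """Engineer time saved minus platform cost, per month."""
    return hours_saved * hourly_cost - seats * price_per_seat


# Illustrative sample data only, not real measurements.
first_30_days = [48, 52, 44]
days_61_to_90 = [30, 34, 26]

baseline = mttr(first_30_days)
current = mttr(days_61_to_90)
print(baseline, current, percent_reduction(baseline, current))
print(net_monthly_savings(hours_saved=20, hourly_cost=100, seats=25, price_per_seat=45))
```

With only a handful of incidents per period the mean is noisy, so it is worth reporting the incident count alongside the percentage when presenting to leadership.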

For visual demonstrations, watch incident.io supercharged with AI showing AI-assisted incident response in production scenarios.

Start reducing your MTTR today

Cloud-native architectures demand cloud-native incident response. The coordination overhead that burns 15 minutes per incident comes from inadequate tooling, not from your people or your process. When your Kubernetes cluster fails and 200 microservices are potentially affected, you cannot coordinate effectively using tools designed for three-server monoliths.

The shift from alert-routing to coordination-first platforms eliminates context-switching, automates timeline capture, and applies AI to the investigation work that currently overwhelms your SREs. Book a free demo to test Slack-native incident management with your Datadog or PagerDuty integration. Run your next five production incidents through the platform and measure the coordination time savings yourself. The demo also shows how teams like Netflix and Etsy manage hundreds of incidents monthly without increasing headcount or burning out their on-call rotations.

Key terms glossary

MTTR (Mean Time To Resolution): Average time from incident detection to full resolution, measured in minutes. Industry benchmarks for cloud-native SaaS range from 30 to 60 minutes for P1 incidents.

Service Catalog: Registry of microservices including ownership, dependencies, recent deployments, and runbook links. Essential for auto-populating incident context in distributed systems.

Coordination tax: Time spent assembling teams, gathering context, and managing process during incidents rather than solving the technical problem. Typically 12-15 minutes per incident in cloud-native environments.

Ephemeral infrastructure: Kubernetes pods and containers designed to be replaced rather than repaired, living seconds to hours rather than months. Traditional SSH-based debugging does not work with ephemeral resources.

Slack-native: Architecture where the full incident lifecycle executes via chat commands, rather than chat serving only as a notification channel for actions that happen in a separate web UI. Eliminates context-switching during high-pressure incidents.

AI SRE: Artificial intelligence assistant that triages alerts, correlates code changes with incident onset, suggests root causes, and can generate fix pull requests. Distinct from simple log correlation engines.

Timeline capture: Automatic recording of all incident actions, Slack messages, role assignments, and pinned graphs as events occur, eliminating manual post-mortem reconstruction from memory.

CrashLoopBackOff: Kubernetes status showing a pod repeatedly crashes and restarts, typically due to application errors or missing dependencies. Common incident trigger in containerized environments.

Incident Management for Cloud-Native SaaS: Kubernetes, Microservices, and Distributed Systems

Updated January 23, 2026

TL;DR: Traditional incident management tools assume static servers, not ephemeral Kubernetes pods and distributed microservices. When incidents hit cloud-native stacks, teams waste 12 minutes on coordination before troubleshooting starts. Context scatters across Datadog, PagerDuty, Slack, Jira, and tribal knowledge while pods restart every few minutes. Slack-native platforms like incident.io eliminate coordination overhead by auto-creating channels, surfacing service dependencies instantly, and capturing timelines automatically. For SRE teams managing Kubernetes and microservices, shifting from alert-routing to coordination-first platforms can reduce MTTR by up to 80%.

When your Kubernetes cluster throws a CrashLoopBackOff at 2 AM and five downstream services start timing out, the technical fix is often straightforward once you find it. The nightmare is the 15 minutes you spend figuring out which team owns the payment service, whether the database connection pool is exhausted, and which recent deployment might have introduced the regression. In distributed systems, containers are ephemeral and may live only seconds or minutes, making traditional incident response workflows fundamentally broken. Your runbooks reference servers that no longer exist. Your monitoring dashboard shows 47 pods across three availability zones, but the failing one already restarted. The incident management tool that worked perfectly for a Rails monolith on three EC2 instances cannot handle 200 microservices orchestrated by Kubernetes.

Why cloud-native incident response breaks traditional workflows

Traditional incident management assumes static, long-lived resources. You SSH into a server, check logs, restart the process. Cloud-native architectures invert these assumptions.

The ephemeral infrastructure problem

Kubernetes designs pods as ephemeral resources to replace, not repair. When a pod crashes, Kubernetes spins up a replacement. The failed pod takes your forensic logs with it unless you centralize logging. Your static runbook says "check server logs at /var/log/app.log," but in Kubernetes, there is no persistent server. Distributed environments with volatile containers make it difficult to conduct investigations because resources are constantly created, destroyed, and moved within seconds.

"I like best about incident.io that it augments your processes with all the steps that just make sense during incident handling... Last but not least, the customer support has been nothing but amazing. Feedback is immediatly heard and reacted to, and bugs were fixed in a matter of days max." - Leonard B. on G2

The service dependency web

In a monolithic application, failures follow linear paths. The app server breaks, customers see errors, you fix the app server. In microservices, failures cascade through dependency chains. The checkout service times out because the inventory service responds slowly, which happens because the authentication service exhausts its database connection pool. Manual service dependency mapping fails at scale. When your architecture has 50+ services, remembering which ones depend on the payment gateway is impossible during a 3 AM incident.

The coordination tax in distributed systems

Coordination and investigation phases consume the majority of incident time, while the actual technical repair finishes quickly once the right team has the right context. The typical incident breakdown: 12 minutes assembling the team and gathering context, 20 minutes troubleshooting, 4 minutes on mitigation, and 12 minutes cleaning up. For mid-market SaaS companies, one hour of downtime costs $25,000-$100,000 in lost revenue and customer trust.

The coordination tax compounds in cloud-native environments because context scatters. Your Datadog alert fires, creating a notification in Slack. Someone manually creates an incident channel. You hunt through PagerDuty schedules to find who owns the affected service. You paste links to Grafana dashboards. You scroll through recent GitHub deployments. By the time you assemble the context, 15 minutes have elapsed.

Core requirements for Kubernetes and microservices incident management

Cloud-native incident response demands capabilities that traditional alerting platforms were never designed to provide.

Service Catalog integration with automatic context

You need a live service registry that maps every alert to the owning team, dependencies, recent deployments, and relevant runbooks automatically. When a Datadog alert fires, incident.io's Service Catalog populates the channel with alert details, dependencies, and recent deployments. This eliminates the manual archaeology of finding service owners in spreadsheets or outdated wiki pages.

Netflix leverages this capability to automatically map incidents to their internal systems: "It's helped unlock better automation. We've done cool things where an alert fires, and we automatically create an incident with the appropriate field set using Catalog that maps to our systems".

Slack-native execution, not just notifications

ChatOps is not enough. Sending Slack notifications about incidents happening elsewhere is still context-switching. We architected incident.io to run entirely inside Slack or Microsoft Teams. Your on-call engineer types /inc assign @sarah to make someone incident commander, types /inc escalate @database-team to pull in specialists, and types /inc resolve when fixed. Every action happens in the same interface where your team already coordinates.

"Incident.io helps promore a positive incident management culture! As an organisation, now that we have become more accustomed to using it for incident management, its hard to think about how we used to manage without it!" - Saurav C on G2

Deep observability integrations

Your incident management platform must pull graphs, traces, and logs from Datadog, Prometheus, Grafana, or New Relic directly into the incident channel. We record Datadog monitors as attachments, making them visible in both the incident timeline and the web dashboard. When you paste a Datadog snapshot link in Slack and pin the message, we automatically unfurl the image and add it to the timeline.

Automated timeline capture

Compliance audits for SOC 2, ISO 27001, or GDPR require complete, timestamped incident documentation. You cannot manually reconstruct timelines in distributed environments because critical decisions happen across Slack threads, Zoom calls, and multiple tool interfaces. We record everything automatically: every Slack message in the incident channel, every /inc command execution, every role assignment, every severity change. Our Scribe AI transcribes incident calls in real-time, extracting decision points and flagging root causes mentioned in conversation.

"When we were looking for a tool to improve the experience for both our incident response teams as well as for communicating effectively with management, incident.io came through on both counts... Also, their customer success and responsiveness to bug reports and feature requests is superb." - Verified user on G2

Integration with existing alerting infrastructure

You should not have to rip and replace your alerting stack. We integrate with PagerDuty as an alert source, letting you test on-call schedules and escalation paths without migrating your Datadog or Grafana alert providers. When you connect the integration, we automatically pull in PagerDuty services, teams, users, escalation policies, and schedules into the Catalog. You can page any PagerDuty escalation policy or user directly from Slack using /inc escalate.

Comparing incident management tools for distributed systems

Incident management platforms handle cloud-native complexity differently. The gap between alert-routing tools and coordination-first platforms becomes critical when you manage Kubernetes failures.

Table 1: Core capabilities comparison

| Feature | incident.io | PagerDuty | Opsgenie |

|---|---|---|---|

| Slack-native workflow | Full lifecycle via /inc commands | Notifications only, web UI required | Notifications only |

| Service Catalog depth | Automatic dependencies, owners, recent changes | Limited service directory | Basic service mapping |

| AI root cause analysis | Automates up to 80% of incident response | Add-on feature | Basic automation |

| Post-mortem automation | 80% auto-drafted from timeline | Manual reconstruction | Manual reconstruction |

Table 2: Setup and pricing comparison

| Feature | incident.io | PagerDuty | Opsgenie |

|---|---|---|---|

| On-call pricing | $20/user/month add-on | Included in Professional tier | Included |

| Total pricing | $45/user/month (Pro + on-call) | Contact sales | Contact sales |

| Multi-region support | Built-in | Enterprise tier | Yes |

| Pricing transparency | Public pricing | Hidden pricing | Hidden pricing |

The verdict for SRE teams

PagerDuty excels at alerting infrastructure. If your primary need is sophisticated alert routing, deduplication, and waking people up, PagerDuty's decades of investment in this domain show. However, PagerDuty can trigger incidents with in-line commands in Slack, but stops short of managing the full incident lifecycle in chat, leaving teams to toggle between tools during critical moments.

incident.io is purpose-built for coordination in cloud-native environments. We eliminate coordination overhead by handling the entire incident lifecycle inside Slack, from declaration through resolution. For teams where the bottleneck is coordination rather than alerting sophistication, our Slack-native architecture reduces MTTR by eliminating context-switching. Our AI SRE assistant automates investigation tasks that would otherwise require manual correlation of deployment history, alert patterns, and service dependencies.

Opsgenie is being sunset. Atlassian announced that new Opsgenie purchases stop June 4, 2025, and access ends April 5, 2027. Any organization evaluating Opsgenie faces mandatory migration in less than two years. Atlassian is consolidating Opsgenie capabilities into Jira Service Management, designed for IT service desk workflows rather than real-time SRE incident response.

Homegrown Slack bots break when Slack APIs change, lack capabilities like AI root cause analysis or automated post-mortems, and require ongoing maintenance that competes with product development. One customer noted: "It's tempting to build a Slack-based incident system yourself, don't... They have built a system with sane defaults and building blocks to customize everything".

Reducing cognitive load with Slack-native coordination

The mental overhead of coordinating a distributed systems incident often exceeds the technical challenge of fixing it. Automating the logistics lets SREs focus on problem-solving.

Automated incident channel creation with role assignment

When a Datadog monitor threshold triggers, we automatically create a dedicated Slack channel with a structured naming convention like #inc-2847-api-latency-spike. We page the on-call engineer from the affected service's schedule and auto-invite them. Service owners join based on Service Catalog configuration. The channel pins the triggering alert with full context, recent deployment history, and dependent services immediately visible.

Compare this to the manual process: someone sees an alert in a general #ops-alerts channel, manually creates a Slack channel with an inconsistent name, hunts through PagerDuty or a spreadsheet to find who is on-call, manually invites them, then starts pasting alert links. This manual coordination burns 12 minutes before technical troubleshooting begins.
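To make the structured naming convention concrete, here is a minimal Python sketch that turns an incident ID and title into a channel name like the one above. It is an illustration only, not incident.io's implementation: the function name `incident_channel_name` and the `max_len` parameter are hypothetical, and the 80-character cap reflects our understanding of Slack's channel-name limit.

```python
import re


def incident_channel_name(incident_id: int, title: str, max_len: int = 80) -> str:
    """Build a channel name such as inc-2847-api-latency-spike (hypothetical helper)."""
    # Lowercase the title and collapse every run of non-alphanumeric
    # characters into a single hyphen, then trim hyphens at the edges.
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    name = f"inc-{incident_id}-{slug}"
    # Truncate to the assumed length limit and avoid ending on a hyphen.
    return name[:max_len].rstrip("-")


if __name__ == "__main__":
    print(incident_channel_name(2847, "API latency spike"))
```

A deterministic name built from the incident ID means responders can guess the channel from the alert alone, which is the consistency the manual process lacks.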

"I appreciate how incident.io consolidates workflows that were spread across multiple tools into one centralized hub within Slack, which is really helpful because everyone's already there. It really helps teams manage and tackle incidences in a more uniform way." - Alex N on G2

Command-based workflow that matches operational speed

Slack slash commands execute at conversation speed. /inc severity critical escalates severity in two seconds. /inc assign @database-lead designates incident command instantly. We record every command in the timeline automatically, eliminating the designated note-taker role that pulls one engineer away from troubleshooting. Watch this demonstration of Slack-native incident management showing the full declare-to-resolve workflow without leaving Slack.

Automated status page updates

During a Kubernetes incident affecting customer-facing services, someone needs to update the public status page and notify internal stakeholders. This administrative work interrupts your incident commander's focus. We automate status page updates and stakeholder notifications through workflow triggers. When you escalate an incident to "critical" severity, a workflow can automatically post to the public status page and send a formatted update to the executive team's Slack channel.

For a walkthrough of these automated workflows, see our startup response demo showing incident declaration, escalation, and resolution in real-world scenarios.
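Conceptually, a severity-based workflow trigger is a small rule table: each rule names the lowest severity at which an action should fire. The Python sketch below shows that idea under stated assumptions. The severity scale, the `Rule` type, and the action names are all hypothetical placeholders, not incident.io's workflow configuration format.

```python
from dataclasses import dataclass

# Hypothetical severity scale, ordered from least to most severe.
SEVERITY_ORDER = ["minor", "major", "critical"]


@dataclass(frozen=True)
class Rule:
    min_severity: str  # lowest severity at which the action fires
    action: str        # placeholder action name


# Hypothetical rules mirroring the example in the text.
RULES = [
    Rule("major", "notify_internal_stakeholders"),
    Rule("critical", "post_public_status_page"),
    Rule("critical", "update_executive_channel"),
]


def actions_for(severity: str) -> list[str]:
    """Return every action whose threshold is at or below the given severity."""
    level = SEVERITY_ORDER.index(severity)
    return [r.action for r in RULES if SEVERITY_ORDER.index(r.min_severity) <= level]


if __name__ == "__main__":
    print(actions_for("critical"))
```

Keeping the rules as data rather than branching logic makes it straightforward to review who gets notified at each severity before an incident happens.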

Leveraging AI for root cause analysis in microservices

High-cardinality data in distributed systems makes finding the root cause like searching for a specific log line among millions. AI assistance that correlates code changes, alert patterns, and historical incidents accelerates investigation.

AI-powered code change correlation and automated fixes

Our AI SRE connects telemetry, code changes, and past incidents to fix issues faster. When an incident fires, the AI analyzes recent pull requests merged to production, correlates timestamps with alert onset, and surfaces the likely deployment that introduced the regression. The AI can even generate a fix and open a pull request directly from Slack. If the root cause is a configuration error or simple code bug, AI SRE drafts a remediation PR that your engineer can review and merge. Watch AI SRE in action demonstrating real-time root cause identification and fix generation.
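The timestamp-correlation step can be pictured with a deliberately simple heuristic: among changes merged shortly before the alert fired, flag the most recent one as the first suspect. The Python sketch below illustrates only that heuristic. It is not how AI SRE works internally, and the `Deployment` type, the `likely_culprit` function, the 30-minute window, and the sample change labels are all assumptions made for illustration.

```python
from datetime import datetime, timedelta
from typing import NamedTuple, Optional


class Deployment(NamedTuple):
    service: str
    change: str          # illustrative label, not a real PR reference
    merged_at: datetime


def likely_culprit(
    deployments: list[Deployment],
    alert_onset: datetime,
    window: timedelta = timedelta(minutes=30),
) -> Optional[Deployment]:
    """Return the latest deployment merged within `window` before the alert."""
    candidates = [
        d for d in deployments
        if timedelta(0) <= alert_onset - d.merged_at <= window
    ]
    # The change closest to alert onset is the first one worth inspecting.
    return max(candidates, key=lambda d: d.merged_at, default=None)


if __name__ == "__main__":
    onset = datetime(2026, 1, 26, 2, 0)
    history = [
        Deployment("payments", "change-a", datetime(2026, 1, 26, 1, 10)),
        Deployment("payments", "change-b", datetime(2026, 1, 26, 1, 45)),
        Deployment("checkout", "change-c", datetime(2026, 1, 26, 2, 5)),
    ]
    print(likely_culprit(history, onset))
```

A real investigation would also weigh service dependencies and alert patterns, since the most recent change is a starting hypothesis rather than a confirmed root cause.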

Automated investigation reducing MTTR

Our AI SRE automates up to 80% of incident response tasks by handling repetitive investigation work: pulling relevant Datadog metrics into the incident channel, identifying similar past incidents with comparable symptoms, extracting key decisions from call transcriptions, and suggesting next diagnostic steps based on service architecture. One customer reported: "incident.io saves us hours per incident when considering the need for us to write up the incident, root cause and actions".

Our AI understands service dependencies from the Catalog and applies that context to hypothesis generation. For a technical deep-dive, watch this Inside Traversal interview on AI SRE agents discussing architectural approaches to autonomous incident investigation.

Automating the post-incident review process

Post-mortems that take 90 minutes to reconstruct from scattered sources delay learning and frustrate engineers. Automated timeline capture and AI-assisted drafting reduce post-mortem completion time to 10 minutes.

Automatic timeline construction and AI-drafted post-mortems

We capture your timeline as the incident happens: every Slack message in the incident channel, every /inc command execution, every role assignment, every pinned Datadog graph. Scribe AI transcribes Zoom or Google Meet calls in real-time, extracting key decisions and flagging root causes mentioned verbally. When you type /inc resolve, we generate a post-mortem draft using this captured data. Engineers spend 10 minutes refining the narrative rather than 90 minutes reconstructing events from memory.

"Incident.io has dramatically improved the experience for calling incidents in our organization, and improved the process we use to handle them... 1-click post-mortem reports - this is a killer feature, time saving, that helps a lot to have relevant conversations around incidents (instead of spending time curating a timeline)" - Verified User on G2

Compliance-ready documentation and follow-up automation

SOC 2 and ISO 27001 auditors require complete incident documentation with timestamps, assigned roles, and resolution evidence. Automated capture eliminates documentation gaps. Our GDPR-compliant data retention allows you to retain and remove data in accordance with regulatory requirements. For organizations handling sensitive security incidents, we support private incidents with restricted access.

Post-mortem action items often languish in Google Docs because teams disconnect them from actual task tracking. We integrate with Jira and Linear to automatically create follow-up tickets with incident context pre-populated. When the post-mortem identifies "migrate payment service connection pooling to use adaptive sizing," that action item becomes a Jira ticket assigned to the payment team automatically.

90-day plan to reduce MTTR in cloud-native environments

Rolling out cloud-native incident management requires a phased approach that balances integration complexity with quick wins.

Days 1-30: Foundation setup and initial integrations

Install incident.io in 30 seconds by connecting your Slack workspace. Configure the Datadog integration by tagging monitors with service identifiers. If you run PagerDuty, connect it directly as an alert source to test on-call schedules without migrating alert providers. Import your service inventory into the Service Catalog, defining owners, dependencies, and Slack channels. Connect the Backstage integration if you already maintain a service catalog there. Run your first 3-5 real incidents through the platform and record their MTTR as your baseline.

"For our engineers working on incident, the primary interface for incident.io is slack. It's where we collaborate and where we were gathering to handle incident before introducing incident.io... That's where incident.io really shines: it allows to seamlessly nudge or suggest actions." - Alexandre R. on G2

Days 31-60: Workflow optimization and team training

Build workflows for your three most common incident types: database connection exhaustion, Kubernetes pod crashloops, and third-party API failures. Configure severity-based automation where critical incidents automatically page the executive on-call and post to the internal status page. Set up reminders and nudges to prompt post-mortem completion within 24 hours. Run a 30-minute training session focused on five commands: /inc declare, /inc assign, /inc escalate, /inc severity, and /inc resolve. Establish the expectation that post-mortems publish within 24 hours using AI-drafted timelines. Configure Jira integration to auto-create follow-up tickets.

Days 61-90: AI enablement and measurement

Activate AI-powered root cause analysis and investigation assistance. Configure AI SRE to analyze recent deployments and correlate them with alert onset. Test fix PR generation on non-critical incidents to build team confidence. Pull MTTR data from the Insights dashboard covering the full 90-day period. Compare days 1-30 against days 61-90 to measure improvement. Identify outlier incidents where MTTR remained high and investigate whether Service Catalog data was incomplete. Present findings to engineering leadership showing MTTR trends, incident volume by service, and time savings from automated post-mortems. Calculate ROI by comparing engineer hours saved against platform cost.
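If you export incident data and want to check the comparison yourself, the arithmetic is simple. The Python sketch below computes MTTR for days 1-30 and days 61-90 of a rollout and the percentage reduction between them. The function names and the `(detected, resolved)` tuple format are assumptions made for illustration, not an Insights export schema.

```python
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional

Incident = tuple[datetime, datetime]  # (detected_at, resolved_at), assumed format


def mttr_minutes(incidents: list[Incident]) -> Optional[float]:
    """Mean minutes from detection to resolution, or None with no incidents."""
    if not incidents:
        return None
    return mean((resolved - detected).total_seconds() / 60
                for detected, resolved in incidents)


def compare_phases(incidents: list[Incident], rollout_start: datetime):
    """Return (baseline MTTR, later MTTR, percent reduction) for the rollout."""
    baseline, later = [], []
    for detected, resolved in incidents:
        day = (detected - rollout_start).days + 1  # day 1 is the rollout date
        if 1 <= day <= 30:
            baseline.append((detected, resolved))
        elif 61 <= day <= 90:
            later.append((detected, resolved))
    before, after = mttr_minutes(baseline), mttr_minutes(later)
    if before is None or after is None:
        return before, after, None
    return before, after, (before - after) / before * 100


if __name__ == "__main__":
    start = datetime(2026, 1, 1)

    def incident(day_offset: int, minutes: int) -> Incident:
        detected = start + timedelta(days=day_offset, hours=3)
        return detected, detected + timedelta(minutes=minutes)

    sample = [incident(1, 60), incident(9, 40), incident(64, 20), incident(79, 30)]
    print(compare_phases(sample, start))
```

With only a handful of incidents per phase, a mean is sensitive to outliers, so it is worth reviewing the individual long-running incidents alongside the headline number.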

For visual demonstrations, watch incident.io supercharged with AI showing AI-assisted incident response in production scenarios.

Start reducing your MTTR today

Cloud-native architectures demand cloud-native incident response. The coordination overhead that burns 12 to 15 minutes per incident comes from inadequate tooling, not from your people or your process. When your Kubernetes cluster fails and 200 microservices are potentially affected, you cannot coordinate effectively using tools designed for three-server monoliths.

The shift from alert-routing to coordination-first platforms eliminates context-switching, automates timeline capture, and applies AI to the investigation work that currently overwhelms your SREs. Try incident.io to test Slack-native incident management with your Datadog or PagerDuty integration. Run your next five production incidents through the platform and measure the coordination time savings yourself. Or book a free demo to see how teams like Netflix and Etsy manage hundreds of incidents monthly without increasing headcount or burning out their on-call rotations.

Key terms glossary

MTTR (Mean Time To Resolution): Average time from incident detection to full resolution, measured in minutes. Industry benchmarks for cloud-native SaaS range from 30-60 minutes for P1 incidents.

Service Catalog: Registry of microservices including ownership, dependencies, recent deployments, and runbook links. Essential for auto-populating incident context in distributed systems.

Coordination tax: Time spent assembling teams, gathering context, and managing process during incidents rather than solving the technical problem. Typically 12-15 minutes per incident in cloud-native environments.

Ephemeral infrastructure: Kubernetes pods and containers designed to be replaced rather than repaired, living seconds to hours rather than months. Traditional SSH-based debugging does not work with ephemeral resources.

Slack-native: Architecture where the full incident lifecycle executes via chat commands, rather than chat serving only as a notification layer for actions taken in a separate web UI. Eliminates context-switching during high-pressure incidents.

AI SRE: Artificial intelligence assistant that triages alerts, correlates code changes with incident onset, suggests root causes, and can generate fix pull requests. Distinct from simple log correlation engines.

Timeline capture: Automatic recording of all incident actions, Slack messages, role assignments, and pinned graphs as events occur, eliminating manual post-mortem reconstruction from memory.

CrashLoopBackOff: Kubernetes status showing a pod repeatedly crashes and restarts, typically due to application errors or missing dependencies. Common incident trigger in containerized environments.
