How to build automated runbooks that reduce MTTR by 50%

Updated January 8, 2026

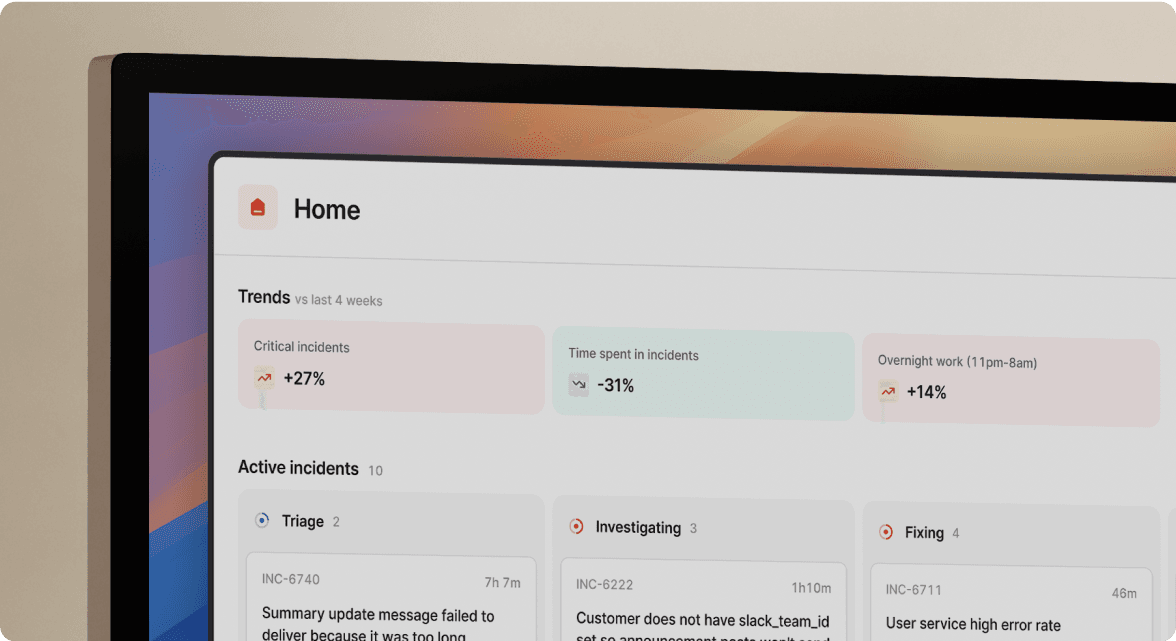

TL;DR: Automated runbooks reduce MTTR by replacing static documentation with executable workflows. Instead of reading a wiki page, the runbook automatically triggers diagnostics, assigns roles, and offers remediation buttons directly in Slack. To build one: identify high-frequency incidents, map the manual steps (triage, diagnose, fix), and use an automation platform to trigger these actions via webhooks or slash commands. Teams using this approach typically see MTTR improvements of 30-50% by eliminating context switching and manual toil.

Your runbook exists. It's in Confluence somewhere. Maybe Notion. The problem? During incidents, that runbook might as well not exist at all.

On-call engineers toggle between PagerDuty, Datadog, three Slack threads, and a Google Doc for notes. By the time they find the runbook link, it's either outdated, missing context, or requires copy-pasting commands into a terminal under extreme stress. This is why static documentation fails during incidents and why automation is the only path to meaningful MTTR reduction.

Why static runbooks are killing your MTTR

Every incident carries a hidden tax. Before any actual troubleshooting begins, your team pays a "coordination tax" of manual tasks: creating Slack channels, paging the right people, finding relevant dashboards, and locating documentation. You pay it on every incident, and research suggests it typically eats 10-15 minutes before anyone looks at the actual problem.

Static runbooks compound this problem in three ways:

- Context switching destroys focus. Every time you leave Slack to read Confluence, you lose momentum. The Google SRE book defines this type of work as "toil": manual, repetitive, automatable, tactical, and devoid of enduring value.

- Your documentation decays faster than your systems change. That runbook written six months ago? It probably references deprecated commands, old dashboard URLs, or services that no longer exist. Your engineers learn to ignore runbooks they can't trust.

- Reading isn't doing. Under stress, humans don't read carefully. They skim, miss steps, and make mistakes. A runbook that says "check the connection pool metrics" assumes the engineer knows where those metrics live, what "normal" looks like, and what to do if the numbers are wrong.

"The primary advantage we've seen since adopting incident.io is having a consistent interface to dealing with incidents. Our engineers already used slack as a response platform, but without the templated automation of channel creation/management, stakeholder updates & reminders, post-incident review pipeline etc., incidents often felt haphazard and bespoke." - Verified user review of incident.io

Better documentation won't solve this. You need to bring the instructions and actions directly to the engineer, inside the tool they're already using.

The anatomy of a high-performance automated runbook

Modern automated runbooks operate across three distinct layers. Understanding this structure helps you design workflows that actually reduce MTTR rather than just adding more complexity.

Layer 1: Trigger and triage

This layer handles the "assembly" phase that traditionally wastes significant time at the start of every incident. When an alert fires, automation should:

- Create a dedicated incident channel with a consistent naming convention

- Page the on-call engineer and relevant service owners

- Set initial severity based on the alert payload

- Post the triggering alert with full context (not just "CPU high")

Workflows in incident.io allow you to define conditional triggers based on specific criteria. For example, a Datadog alert with service: payments can automatically page the payments team and set severity to "high" because you know payments issues are customer-facing.
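The Layer 1 logic can be sketched as a pure function that turns an alert payload into a triage plan. This is a minimal Python sketch, not incident.io's Workflow configuration: the payload shape (monitor, message, tags.service), the ROUTING table, the rotation names, and the inc-<date>-<service> channel format are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical routing rules: service tag -> (rotation to page, initial severity).
# In a real setup these would come from a service catalog, not a hard-coded dict.
ROUTING = {
    "payments": ("payments-oncall", "high"),  # customer-facing, so start high
    "search": ("search-oncall", "medium"),
}
DEFAULT_ROUTE = ("platform-oncall", "low")


@dataclass
class TriagePlan:
    channel: str
    severity: str
    page: list[str] = field(default_factory=list)
    summary: str = ""


def triage(alert: dict, now: datetime | None = None) -> TriagePlan:
    """Turn a simplified alert payload into the Layer 1 actions."""
    now = now or datetime.now(timezone.utc)
    service = alert.get("tags", {}).get("service", "unknown")
    rotation, severity = ROUTING.get(service, DEFAULT_ROUTE)
    # Consistent naming convention: inc-<yyyymmdd>-<service>
    channel = f"inc-{now:%Y%m%d}-{service}"
    # Post the full alert context, not just the monitor title.
    summary = f"{alert['monitor']}: {alert.get('message', '(no details)')}"
    return TriagePlan(channel=channel, severity=severity, page=[rotation], summary=summary)
```

Keeping triage as a pure function (payload in, plan out) makes the routing rules easy to unit-test before any real paging happens; a separate step would then call your paging and Slack integrations with the plan.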

Layer 2: Diagnostics (the "read" phase)

Once the right people are in the room, they need context. This layer automatically fetches and displays:

- Recent deployment history for affected services

- Current metric graphs (error rates, latency, resource utilization)

- Active alerts on related services

- Links to relevant dashboards and previous similar incidents

You see this information in the incident channel automatically. No one on your team needs to remember which Datadog dashboard to check or where the deployment logs live. The Service Catalog in incident.io powers this by connecting services to their owners, runbooks, and dependencies, so workflows surface the right context for any affected service.
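To make the diagnostics layer concrete, here is a minimal sketch of how a bot might assemble the context message for an affected service. The SERVICE_LINKS mapping, the two-hour lookback, and the DeployEvent and AlertState shapes are hypothetical stand-ins for data you would pull from your catalog, CI/CD system, and monitoring tool.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class DeployEvent:
    service: str
    version: str
    at: datetime


@dataclass
class AlertState:
    service: str
    title: str
    active: bool


# Hypothetical catalog data: each service's dashboard and related services.
SERVICE_LINKS = {
    "checkout": {
        "dashboard": "https://dashboards.example.com/checkout",
        "related": ["payments", "inventory"],
    },
}


def diagnostic_context(service: str, deploys: list[DeployEvent],
                       alerts: list[AlertState], now: datetime,
                       lookback: timedelta = timedelta(hours=2)) -> list[str]:
    """Build the lines a bot would post into the incident channel."""
    entry = SERVICE_LINKS.get(service, {"dashboard": None, "related": []})
    scope = {service, *entry["related"]}
    # Recent deploys to the affected service or anything related to it, newest first.
    recent = sorted(
        (d for d in deploys if d.service in scope and timedelta(0) <= now - d.at <= lookback),
        key=lambda d: d.at, reverse=True)
    # Active alerts on related services hint at a wider blast radius.
    related = [a for a in alerts if a.active and a.service in entry["related"]]
    lines = [f"Diagnostics for {service}"]
    lines += [f"deploy: {d.service} {d.version} at {d.at:%H:%M}" for d in recent]
    lines += [f"alert: {a.service}: {a.title}" for a in related]
    if entry["dashboard"]:
        lines.append(f"dashboard: {entry['dashboard']}")
    return lines
```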

Layer 3: Remediation (the "write" phase)

This is where you gain the most power from automation and where human-in-the-loop patterns become essential. The remediation layer presents interactive options:

- Safe actions: Buttons to restart a specific pod, clear a cache, or trigger a canary rollback

- Approval gates: Actions that require confirmation before execution (e.g., "Scale down production cluster? [Approve/Deny]")

- Audit trails: Every action logged with who approved it and when
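The approval gate and audit trail fit in a small class. This is a minimal sketch under stated assumptions: RemediationAction, ApprovalGate, and the rule that a requester cannot approve their own action are illustrative choices, not a specific product's API. Every request, including blocked ones, lands in the audit log with who asked, who approved, and when.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class RemediationAction:
    name: str
    run: Callable[[], str]
    needs_approval: bool  # "safe" actions skip the gate


@dataclass
class AuditEntry:
    action: str
    requested_by: str
    approver: str | None
    outcome: str
    at: datetime


@dataclass
class ApprovalGate:
    log: list[AuditEntry] = field(default_factory=list)

    def request(self, action: RemediationAction, requested_by: str,
                approver: str | None = None) -> str | None:
        """Run the action if allowed; record every attempt either way."""
        if action.needs_approval and approver is None:
            self._record(action, requested_by, None, "blocked: awaiting approval")
            return None
        if action.needs_approval and approver == requested_by:
            # Assumed policy: nobody approves their own sensitive action.
            self._record(action, requested_by, approver, "blocked: self-approval")
            return None
        result = action.run()
        self._record(action, requested_by, approver, "executed")
        return result

    def _record(self, action: RemediationAction, requested_by: str,
                approver: str | None, outcome: str) -> None:
        self.log.append(AuditEntry(action.name, requested_by, approver, outcome,
                                   datetime.now(timezone.utc)))
```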

"Incident Workflows - The tool significantly reduces the time it takes to kick off an incident. The workflows enable our teams to focus on resolving issues while getting gentle nudges from the tool to provide updates and assign actions, roles, and responsibilities." - Verified user review of incident.io

Human oversight remains critical. As WorkOS engineering describes, "HITL systems are designed to embed human oversight, judgment, and accountability directly into the AI workflow." The goal isn't removing humans. It's removing the tedious parts so humans can focus on judgment calls.

5 steps to build your first automated runbook workflow

Don't try to automate everything at once. Start with one high-impact incident type and expand from there.

Step 1: Identify the right candidate

Focus on incident types that share these characteristics:

| Criteria | Good candidate | Poor candidate |
|---|---|---|
| Frequency | Happens multiple times per month | Once per quarter |
| Predictability | Clear pattern of symptoms | Unique each time |
| Resolution path | Well-understood fix | Requires deep investigation |
| Current state | Documented but manual | No existing process |

The Google Cloud team recommends starting by tracking where your team spends time on repetitive work. Common candidates include high memory alerts, failed health checks, certificate expirations, and deployment rollbacks.
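One rough way to apply the criteria table to real data is to score each incident type by how often it happens and how consistently it gets fixed the same way. The sketch below assumes you can export (incident_type, resolution) pairs from your incident tracker; the thresholds of two incidents per month and 80% resolution consistency are arbitrary starting points, and the "current state" criterion is left to human judgment.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def automation_candidates(incidents: list[tuple[str, str]], months: float,
                          min_per_month: float = 2.0,
                          min_consistency: float = 0.8) -> list[tuple[str, float, float]]:
    """Return (type, incidents_per_month, consistency) for incident types that
    are frequent and usually resolved the same way, most frequent first."""
    by_type: dict[str, Counter] = defaultdict(Counter)
    for kind, resolution in incidents:
        by_type[kind][resolution] += 1
    picks = []
    for kind, resolutions in by_type.items():
        total = sum(resolutions.values())
        per_month = total / months  # the "Frequency" row
        # Share fixed by the single most common resolution: a proxy for the
        # "Predictability" and "Resolution path" rows.
        consistency = resolutions.most_common(1)[0][1] / total
        if per_month >= min_per_month and consistency >= min_consistency:
            picks.append((kind, round(per_month, 2), round(consistency, 2)))
    return sorted(picks, key=lambda p: p[1], reverse=True)
```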

Step 2: Map the manual path

Before automating, document exactly what a human does today. Write down every click, command, and decision point:

- Alert fires in Datadog

- On-call checks PagerDuty to see alert details

- Creates Slack channel manually

- Pings the backend team

- Opens Datadog dashboard to check error rates

- (and so on...)

This exercise often reveals unnecessary steps and inconsistencies between team members. It also gives you a clear automation checklist.
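Writing the manual path down as data makes this audit mechanical. In the sketch below, which assumes you tag each step with the tool it happens in, counting tool changes exposes the context switching, and filtering out trigger and decision steps yields the automation checklist. The Step type and the final "decide whether to roll back" step are illustrative additions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Step:
    description: str
    tool: str
    kind: str = "action"  # "trigger", "action", or "decision" (needs a human)


# The manual path listed above, plus an assumed decision step at the end.
MANUAL_PATH = [
    Step("Alert fires", "Datadog", kind="trigger"),
    Step("Check alert details", "PagerDuty"),
    Step("Create incident channel", "Slack"),
    Step("Ping the backend team", "Slack"),
    Step("Check error-rate dashboard", "Datadog"),
    Step("Decide whether to roll back", "Slack", kind="decision"),
]


def context_switches(path: list[Step]) -> int:
    """Count how often the responder has to jump to a different tool."""
    return sum(1 for prev, cur in zip(path, path[1:]) if prev.tool != cur.tool)


def automation_checklist(path: list[Step]) -> list[str]:
    """Mechanical steps are automation candidates; decisions stay with people."""
    return [step.description for step in path if step.kind == "action"]
```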

Step 3: Define the trigger

You need to define a specific trigger event for your automation:

- Alert-based: Specific monitor from Datadog, Prometheus, or New Relic

- Webhook-based: GitHub deployment events, CI/CD failures

- Manual: Slash command like /inc declare with specific parameters

- Scheduled: Maintenance windows or capacity checks

incident.io Workflows support conditional logic so you can route different alert types to different runbooks. An alert with severity: critical triggers different automation than severity: warning.
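Conditional routing boils down to an ordered list of rules where the first match wins. The sketch below is a generic illustration, not incident.io's Workflow format: the event fields (severity, event) and the runbook names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class TriggerRule:
    source: str                      # "alert", "webhook", "manual", or "scheduled"
    matches: Callable[[dict], bool]  # condition on the event payload
    runbook: str                     # hypothetical runbook name


# Ordered rules: the first rule whose source and condition match wins.
RULES = [
    TriggerRule("alert", lambda e: e.get("severity") == "critical", "page-and-open-incident"),
    TriggerRule("alert", lambda e: e.get("severity") == "warning", "notify-slack-only"),
    TriggerRule("webhook", lambda e: e.get("event") == "ci_failure", "ci-failure-triage"),
    TriggerRule("manual", lambda e: True, "declared-incident"),
]


def route(source: str, event: dict) -> str | None:
    """Return the first matching runbook, or None if no rule applies."""
    for rule in RULES:
        if rule.source == source and rule.matches(event):
            return rule.runbook
    return None
```

Ordering matters: put the most specific rules first, and treat a None result as a signal to fall back to a default runbook or a human.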

Step 4: Build the "safe" automation first

Start with non-destructive actions that reduce coordination overhead:

- Auto-create the incident channel with consistent naming

- Page the right people based on service ownership

- Post diagnostic context (graphs, recent deploys, related alerts)

- Set reminders for status updates every 15 minutes

You save significant time per incident with these automations, and they carry minimal risk since they don't modify production systems. You can configure reminders and nudges in incident.io to prompt for updates without requiring manual calendar reminders.
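The 15-minute update nudge reduces to a small date calculation. Here is a minimal sketch, assuming you track each open incident's last status-update time; actually delivering the reminder (for instance as a Slack message) is left out.

```python
from __future__ import annotations

from datetime import datetime, timedelta

UPDATE_INTERVAL = timedelta(minutes=15)  # the cadence from the list above


def overdue_updates(last_update: dict[str, datetime], now: datetime,
                    interval: timedelta = UPDATE_INTERVAL) -> list[tuple[str, int]]:
    """Return (incident_id, minutes_overdue) for incidents that need a nudge,
    most overdue first."""
    overdue = []
    for incident_id, updated_at in last_update.items():
        due_at = updated_at + interval
        if now >= due_at:
            overdue.append((incident_id, int((now - due_at).total_seconds() // 60)))
    return sorted(overdue, key=lambda item: item[1], reverse=True)
```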

Step 5: Add human-in-the-loop remediation

Once your safe automation is proven, add remediation options with approval gates:

- Revert last deploy: Shows the diff, tags the author, offers a "Rollback" button that requires confirmation

- Scale resources: "Add 2 pods to payment-service? [Approve/Deny]"

- Restart service: "Restart api-gateway pod in us-east-1? [Approve/Deny]"
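If your remediation buttons live in Slack, a prompt such as "Add 2 pods to payment-service? [Approve/Deny]" can be expressed as a Block Kit payload. The block and element fields below (section, actions, button, style, confirm) follow Slack's Block Kit format; the action_id names and the value encoding are hypothetical, and handling the click (verifying the request and running the action) is out of scope for this sketch.

```python
from __future__ import annotations

import json


def approval_message(prompt: str, action_value: str) -> dict:
    """Build a Slack Block Kit message with Approve/Deny buttons.

    The Approve button carries a confirmation dialog, so executing the
    action takes two deliberate clicks.
    """
    confirm = {
        "title": {"type": "plain_text", "text": "Are you sure?"},
        "text": {"type": "plain_text", "text": prompt},
        "confirm": {"type": "plain_text", "text": "Run it"},
        "deny": {"type": "plain_text", "text": "Cancel"},
    }
    return {
        "text": prompt,  # plain-text fallback for notifications
        "blocks": [
            {"type": "section", "text": {"type": "mrkdwn", "text": prompt}},
            {
                "type": "actions",
                "elements": [
                    {"type": "button", "action_id": "remediation_approve",  # hypothetical id
                     "text": {"type": "plain_text", "text": "Approve"},
                     "style": "primary", "value": action_value, "confirm": confirm},
                    {"type": "button", "action_id": "remediation_deny",  # hypothetical id
                     "text": {"type": "plain_text", "text": "Deny"},
                     "style": "danger", "value": action_value},
                ],
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(approval_message("Add 2 pods to payment-service?",
                                      "scale:payment-service:+2"), indent=2))
```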

Relay.app describes the pattern well: "Sometimes there are steps in a workflow that simply cannot be automated because a person needs to do something in the real world, provide missing data, or provide an approval."

This approach lets your junior engineers safely execute remediation steps that would otherwise require a senior engineer to run manually.

3 real-world automated runbook examples for SRE teams

These examples show concrete implementations you can adapt for your environment.

Example 1: Database connection pool exhaustion

The scenario: Your application logs show "connection pool exhausted" errors. This happens every few weeks during traffic spikes or when a query goes rogue.

| Runbook component | Automation |
|---|---|
| Trigger | Alert on connection pool availability dropping below threshold (e.g., postgresql.pool.available < 5) |
| Auto-diagnostics | Fetch active query list, identify blocking queries, show connection count by application |
| Human action | Button: "Kill query PID 12345? [Yes/No]" with preview of the query |
| Post-resolution | Create follow-up ticket to investigate why the pool was exhausted |

Time saved: You eliminate the SSH step, manual query analysis, and command execution. Your engineer sees the problematic query in Slack and clicks one button.
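The auto-diagnostics row can be sketched against PostgreSQL's pg_stat_activity view and its pg_blocking_pids() function (available since PostgreSQL 9.6). This is a minimal sketch that assumes the rows arrive as dicts, for example from a database driver's dict cursor; analyze_pool and kill_statement are hypothetical helpers. It uses pg_cancel_backend, which cancels the running query and leaves the connection open; pg_terminate_backend would close the connection entirely.

```python
from __future__ import annotations

from collections import Counter

# Query a diagnostics bot could run to produce the rows analyzed below.
ACTIVITY_SQL = """
SELECT pid, application_name, state, query, pg_blocking_pids(pid) AS blocked_by
FROM pg_stat_activity
WHERE datname = current_database();
"""


def analyze_pool(rows: list[dict]) -> dict:
    """Summarize pg_stat_activity rows: connections per app and top blockers."""
    per_app = Counter(r["application_name"] or "(none)" for r in rows)
    # How many sessions each PID is blocking.
    blocked_counts = Counter(pid for r in rows for pid in r["blocked_by"])
    by_pid = {r["pid"]: r for r in rows}
    blockers = [
        {"pid": pid, "blocks": n, "query": by_pid[pid]["query"] if pid in by_pid else None}
        for pid, n in blocked_counts.most_common()
    ]
    return {"per_app": dict(per_app), "blockers": blockers}


def kill_statement(pid: int) -> str:
    # Only send this after a human clicks "Yes"; int() guards against injection.
    return f"SELECT pg_cancel_backend({int(pid)});"
```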

Example 2: Bad deploy rollback

The scenario: Error rates spike immediately after a deployment. This is one of the most common incident patterns and has a well-understood fix: rollback.

| Runbook component | Automation |
|---|---|
| Trigger | Webhook correlation: GitHub release event + error rate spike within 10 minutes |
| Auto-diagnostics | Link to PR, tag the merger, show the diff, display before/after error rates |
| Human action | Command: /inc rollback triggers CI/CD revert with approval |
| Post-resolution | Auto-draft post-mortem with deployment timeline |

Watch how incident.io handles automated incident resolution with AI SRE for a live demonstration of this pattern.
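The trigger in this example correlates two signals: a recent deploy and an error-rate spike. Here is a minimal sketch of that correlation, assuming deploy events have already been collected (for example from GitHub release webhooks) as Deploy tuples. The threefold spike factor and the 0.1% floor are arbitrary assumptions, not recommended values.

```python
from __future__ import annotations

from datetime import datetime, timedelta
from typing import NamedTuple


class Deploy(NamedTuple):
    service: str
    sha: str
    at: datetime


def is_spike(baseline_rate: float, current_rate: float,
             factor: float = 3.0, floor: float = 0.001) -> bool:
    """Crude spike test: current error rate is `factor` times the baseline.
    The floor stops a near-zero baseline from flagging every blip."""
    return current_rate >= factor * max(baseline_rate, floor)


def suspect_deploys(deploys: list[Deploy], spike_at: datetime,
                    window: timedelta = timedelta(minutes=10)) -> list[Deploy]:
    """Deploys that landed within `window` before the spike, newest first."""
    hits = [d for d in deploys if timedelta(0) <= spike_at - d.at <= window]
    return sorted(hits, key=lambda d: d.at, reverse=True)
```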

Example 3: Third-party outage communication

The scenario: A critical dependency (AWS, Stripe, Twilio) reports degraded service. You need to communicate internally and potentially to customers.

| Runbook component | Automation |
|---|---|
| Trigger | Webhook from third-party status page (or manual declaration) |
| Auto-actions | Create incident, draft customer communication, notify customer support team |
| Human action | Review and approve external communication |
| Post-resolution | Track third-party incident duration for vendor SLA discussions |

You can configure workflows to publish to your status page when specific conditions are met, eliminating the "forgot to update the status page" failure mode that plagues most incident processes.
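The key property of this runbook is that the external message stays a draft until a named person approves it. This sketch assumes a hypothetical VendorIncident record built from a status-page webhook; the status values and the mapping from status to customer wording are assumptions, and the actual status-page publishing call is left out. The duration helper supports the vendor SLA tracking row.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime


@dataclass
class VendorIncident:
    vendor: str
    component: str
    status: str                       # assumed values, e.g. "major_outage"
    started_at: datetime
    resolved_at: datetime | None = None

    def duration_minutes(self) -> int | None:
        """Outage length for vendor SLA discussions; None while still open."""
        if self.resolved_at is None:
            return None
        return int((self.resolved_at - self.started_at).total_seconds() // 60)


def draft_customer_update(inc: VendorIncident) -> dict:
    """Build a draft only; nothing here publishes anything."""
    impact = "unavailable" if inc.status == "major_outage" else "degraded"
    body = (f"Some features that depend on {inc.vendor} ({inc.component}) are "
            f"currently {impact}. We're monitoring and will post updates here.")
    return {"body": body, "approved_by": None}


def publish(draft: dict, approver: str | None) -> dict:
    """Refuse to publish external communication without a named approver."""
    if not approver:
        raise PermissionError("external communication needs human approval")
    return {**draft, "approved_by": approver}
```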

Automate your runbooks in Slack using incident.io

We built incident.io to eliminate the friction between your team and runbook automation. You get workflows that feel like a natural extension of Slack, not another tool to learn.

Stop wasting time on the wrong runbook for the wrong service

Workflows fire based on specific conditions, not just "any incident." This means you get exactly the right runbook for payments issues vs. database issues vs. deployment failures. You can trigger different automation based on:

- Service affected: incidents tagged service: payments get the payments runbook

- Severity level: Critical incidents get immediate paging, warnings get a Slack notification

- Time of day: After-hours incidents might page backup on-call

- Custom fields: Any metadata you attach to incidents
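Severity and time of day combine into a simple decision. This minimal sketch assumes business hours of 09:00 to 18:00, Monday to Friday, in UTC; the target names are hypothetical placeholders for a paging rotation and a Slack channel.

```python
from datetime import datetime, time, timezone

# Assumptions: business hours are 09:00-18:00 Monday to Friday, and the team
# works in UTC. Swap TEAM_TZ for a zoneinfo.ZoneInfo(...) zone if needed.
BUSINESS_START, BUSINESS_END = time(9, 0), time(18, 0)
TEAM_TZ = timezone.utc


def escalation_target(severity: str, at: datetime) -> str:
    """Pick a hypothetical notification target; `at` must be timezone-aware."""
    local = at.astimezone(TEAM_TZ)
    in_hours = local.weekday() < 5 and BUSINESS_START <= local.time() < BUSINESS_END
    if severity != "critical":
        return "slack:#team-alerts"  # warnings: notification only, no page
    return "page:primary-oncall" if in_hours else "page:backup-oncall"
```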

"With incident.io, managing incidents is no longer a chore due the automation that covers the whole incident lifecycle; from when an alert is triggered, to when you finish the post mortem. Since using incident.io people are definitely creating more incidents to solve any issues that may arise." - Verified user review of incident.io

Eliminate the "who owns this?" question that derails incidents

The incident.io Catalog connects your services to their owners, documentation, and dependencies. When an incident affects the "checkout-service," your automation can:

- Page the checkout team's on-call (not a generic rotation)

- Link to the checkout-specific runbook

- Show dependencies (payment gateway, inventory service)

- Display the right Datadog dashboard (not a generic overview)
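A catalog lookup like this is essentially a graph walk over service dependencies. This sketch uses an in-memory, hypothetical CATALOG with placeholder rotation names and paths; in practice the entries would come from incident.io's Catalog or another service registry. Breadth-first order lists direct dependencies (payment gateway, inventory service) before indirect ones.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class Service:
    name: str
    oncall: str
    runbook: str
    dashboard: str
    depends_on: list[str] = field(default_factory=list)


# Hypothetical catalog entries; rotation names and paths are placeholders.
CATALOG = {s.name: s for s in [
    Service("checkout-service", "checkout-oncall", "runbooks/checkout", "dash/checkout",
            ["payment-gateway", "inventory-service"]),
    Service("payment-gateway", "payments-oncall", "runbooks/payments", "dash/payments",
            ["fraud-check"]),
    Service("inventory-service", "inventory-oncall", "runbooks/inventory", "dash/inventory"),
    Service("fraud-check", "risk-oncall", "runbooks/fraud", "dash/fraud"),
]}


def all_dependencies(name: str) -> list[str]:
    """Transitive dependencies in breadth-first order, each listed once."""
    seen, order, queue = {name}, [], deque([name])
    while queue:
        entry = CATALOG.get(queue.popleft())
        if entry is None:  # dependency not registered in the catalog yet
            continue
        for dep in entry.depends_on:
            if dep not in seen:  # the seen set also protects against cycles
                seen.add(dep)
                order.append(dep)
                queue.append(dep)
    return order


def incident_context(name: str) -> dict:
    """Everything the workflow needs for the affected service."""
    svc = CATALOG[name]
    return {"page": svc.oncall, "runbook": svc.runbook,
            "dashboard": svc.dashboard, "dependencies": all_dependencies(name)}
```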

AI SRE: Dynamic runbooks without pre-written rules

Static automation requires you to anticipate every scenario. AI SRE acts as a dynamic runbook by:

- Correlating alerts across services to identify cascading failures

- Suggesting root causes based on recent code changes

- Summarizing context from past similar incidents

- Recommending next steps based on what worked before

See how AI SRE can resolve incidents while you sleep for a walkthrough of these capabilities, or watch how Bud Financial improved their incident response processes using incident.io automation.

Measuring the impact: KPIs for runbook automation

You need metrics to prove automation is working and identify opportunities for improvement.

MTTR (Mean Time To Resolution)

This is your north star. DORA research identifies MTTR as one of four key metrics that distinguish elite engineering teams. Track:

- Baseline MTTR before automation (typically 45-90 minutes for P1s)

- Current MTTR after automation (target: 30-50% reduction)

- MTTR by incident type to identify which runbooks need improvement

MTTA (Mean Time To Acknowledge)

MTTA measures the time from alert to acknowledgment, which reflects your automation's ability to reach the right person quickly. With good automation, you should target MTTA under 5 minutes.

Time to assemble

This measures the time from alert to "team working in channel with context." Manual assembly often takes 10-15 minutes. Effective automation can cut this dramatically.
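All three timing metrics fall out of four timestamps per incident. This sketch assumes you can export, for each incident, when the alert fired, when it was acknowledged, when the team was assembled in the channel with context, and when service was restored. It measures MTTR from the alert, matching the definition of MTTR as the time from detection to recovery.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class IncidentTimes:
    kind: str
    alerted: datetime
    acknowledged: datetime
    assembled: datetime  # team working in the channel with context
    resolved: datetime   # service restored


def minutes(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds() / 60


def kpis(incidents: list[IncidentTimes]) -> dict[str, float]:
    """Mean minutes from alert to acknowledgment, assembly, and resolution."""
    return {
        "mtta": mean(minutes(i.alerted, i.acknowledged) for i in incidents),
        "time_to_assemble": mean(minutes(i.alerted, i.assembled) for i in incidents),
        "mttr": mean(minutes(i.alerted, i.resolved) for i in incidents),
    }


def mttr_by_kind(incidents: list[IncidentTimes]) -> dict[str, float]:
    """MTTR per incident type, to spot which runbooks need work."""
    groups: dict[str, list[float]] = defaultdict(list)
    for i in incidents:
        groups[i.kind].append(minutes(i.alerted, i.resolved))
    return {kind: mean(values) for kind, values in groups.items()}


def reduction(before: float, after: float) -> float:
    """Percentage improvement, e.g. reduction(60, 30) == 50.0."""
    return round(100 * (before - after) / before, 1)
```

Comparing reduction(baseline_mttr, current_mttr) against a 30-50% target, and reviewing mttr_by_kind, shows which runbooks are pulling their weight.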

On-call sentiment

Qualitative but essential. Track through post-incident surveys:

- "How overwhelming was this incident?" (1-10 scale)

- "Did you have the context you needed?" (Yes/No)

- "Could a less experienced engineer have handled this?" (Yes/No)

Vanta reduced hours spent on manual processes after implementing automated workflows, improving both efficiency and team satisfaction.

Ready to convert your static documentation into automated workflows? Build your first automated runbook in Slack. Book a demo to see how teams like yours have reduced MTTR through workflow automation.

Key terminology

Toil: The Google SRE book defines toil as manual, repetitive, automatable, tactical work devoid of enduring value that scales linearly as a service grows. Eliminating toil is a core SRE practice.

MTTR: Mean Time To Resolution. The average time from incident detection to full recovery. DORA research uses MTTR as a key indicator of engineering team performance.

MTTA: Mean Time To Acknowledge. The time from alert to acknowledgment, measuring team responsiveness and alerting effectiveness.

Human-in-the-loop (HITL): An automation pattern that pauses workflows for human verification before executing sensitive actions. Essential for safe remediation automation.

Runbook: A compilation of routine procedures for managing and resolving specific incidents. Modern runbooks are executable workflows, not static documents.

