12 Opsgenie Data Export Gotchas (And How to Avoid Them During Migration)

Updated December 2nd, 2025

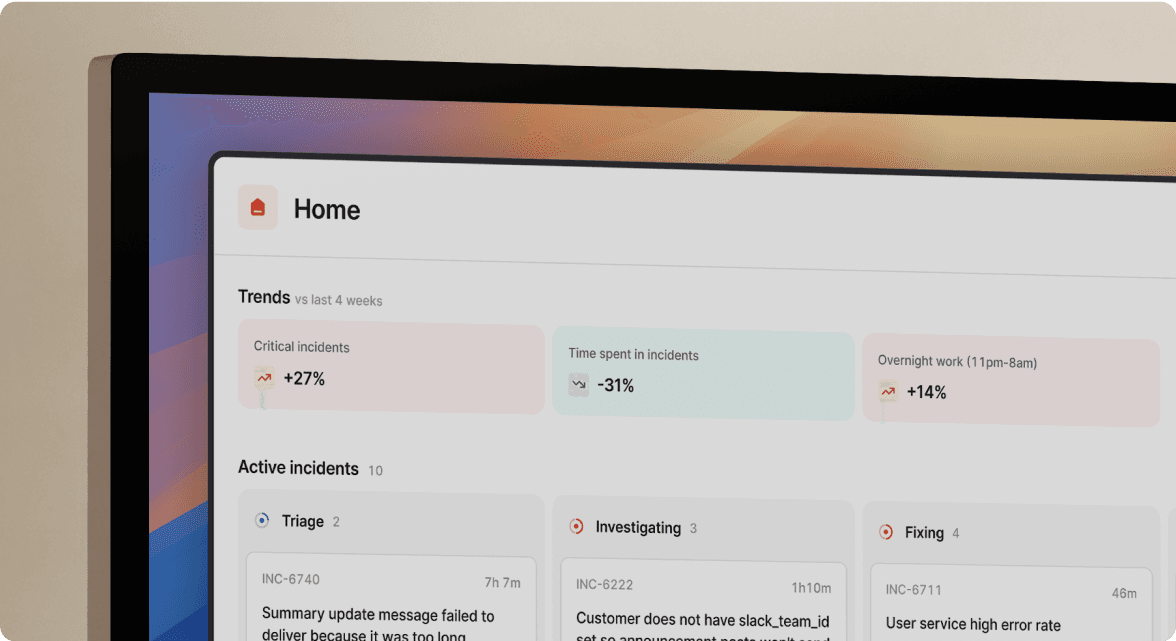

TL;DR: Migrating off Opsgenie before the April 2027 shutdown requires careful data extraction. The UI export only captures surface-level data like on-call schedules in iCalendar format. For a complete migration, use the Opsgenie REST API to extract alert history, routing rules, escalation policies, team structures, and integration configurations. The 12 most common gotchas include API rate limits that throttle bulk exports, user email mismatches between systems, routing logic that doesn't export via UI, and the details field requiring separate API calls per alert. We offer import tools that map Opsgenie schedules directly, making your migration smoother.

Atlassian announced the Opsgenie sunset in March 2025. New customer sales ended June 4, 2025, and the platform shuts down completely April 5, 2027. If you're managing on-call for 50-500 engineers, this deadline adds a migration project to your backlog, along with the risks that come with it.

The real risk isn't picking a new tool. It's losing years of incident history, misconfiguring your on-call rotations, or discovering three months after migration that your escalation policies never transferred correctly.

The Opsgenie UI export options won't give you everything needed for a complete migration. You can export alerts to CSV from the dashboard, but key fields like priority are missing. Incident history has no bulk UI export. Routing rules, escalation policies, integration webhooks, and team structures all require API extraction.

This guide walks through the 12 most common data export gotchas that engineering teams hit during Opsgenie migrations, provides methods for bulk extraction, and shows how to import your data into our platform without losing context.

Understanding the Opsgenie sunset timeline

Atlassian's shutdown timeline creates two critical windows for action.

June 4, 2025: End of sale. Opsgenie stopped accepting new customers. Existing customers can still purchase additional user licenses until the shutdown date, but plan upgrades and downgrades are no longer available.

April 5, 2027: End of life. The platform shuts down completely. All data becomes inaccessible unless you've exported it. Atlassian will not provide data retrieval services after this date.

Why act now: The closer you get to April 2027, the more migration support resources get saturated. Teams that wait until Q4 2026 face longer implementation queues and pressure to rush decisions. Starting your export process well before Q4 2026 gives you time to test parallel runs and validate data integrity without panic.

Historical incident data matters beyond nostalgia. Compliance audits require demonstrating incident response patterns, MTTR trend analysis depends on comparing historical performance, and post-mortem references link to specific alert IDs. Lose your alert history and those links break permanently.

The 12 data export gotchas

These are the specific failure modes that trip up engineering teams during Opsgenie migrations. Each represents a real scenario where "I thought I exported everything" turned into "we just lost critical data."

1. UI exports miss critical routing logic

Opsgenie's iCalendar export captures who is on-call and when, but omits the configuration that determines how alerts route to those people:

- Routing rules (which integration triggers which team)

- Escalation policies (who gets paged if first responder doesn't acknowledge)

- Conditional routing logic (P1 vs P2 alert handling)

Fix: Extract routing configurations via the Escalation API and Policy API endpoints as JSON before migrating schedules.
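A minimal sketch of that extraction, assuming the Escalation API's GET /v2/escalations and the team routing rules endpoint GET /v2/teams/{identifier}/routing-rules both return their results under a `data` key. `make_fetch` and `export_routing_config` are hypothetical helper names; the fetch uses only the standard library and does no rate limiting. Alert policies from the Policy API can be collected into the same dict in the same way.

```python
import json
import urllib.request

API_ROOT = "https://api.opsgenie.com/v2"


def make_fetch(api_key):
    """Return a fetch(path) function that GETs an Opsgenie v2 path as JSON."""
    def fetch(path):
        request = urllib.request.Request(
            API_ROOT + path, headers={"Authorization": f"GenieKey {api_key}"}
        )
        with urllib.request.urlopen(request, timeout=30) as response:
            return json.load(response)
    return fetch


def export_routing_config(team_ids, fetch):
    """Collect escalation policies and each team's routing rules into one dict."""
    config = {"escalations": fetch("/escalations").get("data", [])}
    # Routing rules are stored per team, so each team needs its own request
    config["routing_rules"] = {
        team_id: fetch(f"/teams/{team_id}/routing-rules").get("data", [])
        for team_id in team_ids
    }
    return config
```

For example, `export_routing_config(team_ids, make_fetch(api_key))` returns a plain dict you can `json.dump` to disk, with team IDs taken from GET /v2/teams. Passing `fetch` in, rather than calling HTTP directly, keeps the logic testable with a stub.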

2. API rate limits break bulk exports mid-stream

Opsgenie enforces runtime rate limits that return HTTP 429 errors when you exceed request thresholds. The /v2/alerts endpoint paginates at 100 alerts per page, so exporting 5,000 alerts requires 50 API calls. Fire them rapidly and your script crashes with incomplete data.

Fix: Implement exponential backoff in your scripts. When you receive a 429 error, wait 2 seconds, then 4, then 8 before retrying.
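A sketch of that retry loop, kept independent of any HTTP library. `RateLimited` is a hypothetical exception your own request wrapper would raise on a 429, and `sleep` is injectable so the delays can be checked without actually waiting. Raising after the last retry is deliberate: a silent partial export is exactly the failure this gotcha describes.

```python
import time


class RateLimited(Exception):
    """Hypothetical: raised by your request function when the API returns 429."""


def with_backoff(request_fn, max_retries=4, base_delay=2.0, sleep=time.sleep):
    """Call request_fn, retrying on RateLimited after 2s, 4s, 8s, ... delays."""
    for attempt in range(max_retries + 1):
        try:
            return request_fn()
        except RateLimited:
            if attempt == max_retries:
                raise  # give up loudly rather than return partial data
            sleep(base_delay * 2 ** attempt)
```

In production you may also want random jitter on each delay so parallel workers don't retry in lockstep.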

3. User email mismatches break team assignments

If your Opsgenie users have different email formats than your new platform (john@company.com vs. john.smith@company.com), automated user mapping fails. Schedules import but show "unassigned" for half your rotation.

Fix: Export users via the Users API, cross-reference against your new platform's directory, and create a mapping CSV linking Opsgenie user IDs to new platform user IDs before importing schedules.
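A minimal matching sketch, assuming Opsgenie users from GET /v2/users carry `id` and `username` (the username being the login email), and that you can export your new platform's directory as dicts with `id` and `email`. The `aliases` map is a hypothetical hand-maintained list for the john@ vs. john.smith@ cases that no rule can guess.

```python
import csv
import io


def normalize(email):
    """Lowercase and trim so case differences don't break matching."""
    return email.strip().lower()


def build_user_mapping(opsgenie_users, new_users, aliases=None):
    """Match Opsgenie users to new-platform users by email.

    Returns (rows, unmatched): mapping rows, plus emails needing manual review.
    """
    aliases = {normalize(k): normalize(v) for k, v in (aliases or {}).items()}
    by_email = {normalize(u["email"]): u["id"] for u in new_users}
    rows, unmatched = [], []
    for user in opsgenie_users:
        email = normalize(user["username"])
        target = by_email.get(aliases.get(email, email))
        if target is None:
            unmatched.append(email)
        else:
            rows.append({"opsgenie_id": user["id"], "opsgenie_email": email, "new_id": target})
    return rows, unmatched


def to_csv(rows):
    """Serialize mapping rows as the CSV used during schedule import."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["opsgenie_id", "opsgenie_email", "new_id"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()
```

Resolve everything in `unmatched` before importing schedules; each entry is a rotation slot that would otherwise show as unassigned.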

4. Complex rotation logic doesn't serialize

While on-call schedules can be exported as iCalendar files, complex rotation logic like "follow the sun" doesn't serialize into .ics format. You get names and dates, not the rules that generate those dates.

Fix: Use the Schedule API to pull full schedule configuration including rotation rules, handoff times, and override policies.
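A sketch of turning that configuration into rule rows, assuming the GET /v2/schedules/{identifier} response nests rotations under `data.rotations`, each with `type`, `length`, `startDate`, `participants`, and an optional `timeRestriction`. Check these field names against a real response from your account before relying on them.

```python
def summarize_rotations(schedule):
    """Flatten a schedule's rotations into rows describing the rotation rules.

    Accepts either the full API response or just its "data" object.
    """
    data = schedule.get("data", schedule)
    rows = []
    for rotation in data.get("rotations", []):
        rows.append({
            "schedule": data.get("name"),
            "timezone": data.get("timezone"),
            "rotation": rotation.get("name"),
            # e.g. type "weekly" with length 1: each participant covers one week
            "type": rotation.get("type"),
            "length": rotation.get("length", 1),
            # startDate anchors the handoff time for every shift in the rotation
            "start": rotation.get("startDate"),
            "participants": [
                p.get("username") or p.get("name") or p.get("type")
                for p in rotation.get("participants", [])
            ],
            # Time-of-day restrictions are typically how follow-the-sun is modeled
            "time_restriction": rotation.get("timeRestriction"),
        })
    return rows
```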

5. Alert pagination hits hard limits

The List Alerts API returns a 422 error when the sum of offset and limit exceeds 20,000 records. If you have more than 20,000 alerts to export, standard pagination breaks.

Fix: Use date-range filters in your query (e.g., createdAt>31-12-2022 AND createdAt<10-12-2023) to segment exports into smaller result sets that each stay under the 20k limit (source: Atlassian Community).
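A sketch of that segmentation, reusing the dd-MM-yyyy format from the example query. How the search treats date-only boundaries is an assumption here, so each window starts a day early: windows overlap rather than leave gaps, and `dedupe_by_id` removes the duplicates afterwards. Each string can be passed as the `query` parameter of List Alerts; the 30-day default is arbitrary, so shrink it for busy periods.

```python
from datetime import date, timedelta


def fmt(d):
    """Format a date as dd-MM-yyyy, matching the example query."""
    return d.strftime("%d-%m-%Y")


def date_window_queries(start, end, days=30):
    """Build createdAt range queries covering start..end in fixed-size windows."""
    queries = []
    cursor = start
    while cursor < end:
        window_end = min(cursor + timedelta(days=days), end)
        lower = cursor - timedelta(days=1)  # overlap by a day to avoid gaps
        queries.append(f"createdAt>{fmt(lower)} AND createdAt<{fmt(window_end)}")
        cursor = window_end
    return queries


def dedupe_by_id(alerts):
    """Drop alerts returned by two overlapping windows, keeping the first copy."""
    seen, unique = set(), []
    for alert in alerts:
        if alert["id"] not in seen:
            seen.add(alert["id"])
            unique.append(alert)
    return unique
```

For example, `date_window_queries(date(2023, 1, 1), date(2023, 3, 1))` produces two queries, the first being `createdAt>31-12-2022 AND createdAt<31-01-2023`.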

6. Integration credentials require regeneration

Team-level API keys used for integrations are Opsgenie-specific and don't transfer. You'll need to regenerate webhook URLs and API keys for every integration (Datadog, Prometheus, New Relic).

Fix: Create a complete inventory of every integration before starting. Document source system, alert type, and destination team as your reconfiguration checklist.
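A sketch of that checklist, assuming each item from GET /v2/integrations carries `name`, `type`, and `teamId`, and that you've built a team ID to name map from GET /v2/teams. The alert types and reconfigured columns are left blank for you to fill in by hand.

```python
import csv
import io


def integration_inventory(integrations, team_names):
    """Build a reconfiguration checklist CSV from a GET /v2/integrations listing."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["source_system", "integration", "destination_team",
                     "alert_types", "reconfigured"])
    # Sort by source system so all entries for one tool sit together
    for item in sorted(integrations, key=lambda i: (i.get("type", ""), i.get("name", ""))):
        team = team_names.get(item.get("teamId"), "(no team)")
        writer.writerow([item.get("type"), item.get("name"), team, "", ""])
    return buf.getvalue()
```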

7. Webhook URLs aren't fully exportable

Integration configurations can be listed via the Integrations API, but actual webhook URLs and authentication tokens are sensitive credentials that may not be fully exportable for security reasons.

Fix: Export configuration via GET /v2/integrations/{integrationId}, then manually verify webhook URLs in the source monitoring tool for regeneration.
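Field names in integration details vary by integration type, so rather than assume specific keys, this sketch walks the `data` payload of GET /v2/integrations/{integrationId} and lists every string value that looks like a URL. Anything it finds, and anything you expected but it didn't find (for example because it was redacted), goes on the manual verification list.

```python
def find_urls(node, path="data"):
    """Recursively collect (json_path, value) pairs for URL-like strings."""
    found = []
    if isinstance(node, dict):
        for key, value in node.items():
            found.extend(find_urls(value, f"{path}.{key}"))
    elif isinstance(node, list):
        for index, value in enumerate(node):
            found.extend(find_urls(value, f"{path}[{index}]"))
    elif isinstance(node, str) and node.startswith(("http://", "https://")):
        found.append((path, node))
    return found
```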

8. Escalation repeat logic may not transfer

Some escalation policies use repeat logic ("page primary, wait 5 minutes, page again") specific to Opsgenie's escalation engine. Other platforms handle repeats differently or not at all.

Fix: Audit escalation policies for repeat steps and time-based logic. Determine if your new platform supports equivalent patterns before importing.
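A sketch of that audit, assuming escalations from GET /v2/escalations have `rules` with `condition`, `delay.timeAmount`/`delay.timeUnit`, and `recipient.type`, plus an optional `repeat` block with `count` and `waitInterval`. The wait interval is assumed here to be in minutes; confirm against your own data.

```python
def audit_escalation(escalation):
    """Summarize the steps and repeat settings of one Opsgenie escalation."""
    steps = []
    for rule in escalation.get("rules", []):
        delay = rule.get("delay") or {}
        steps.append({
            "condition": rule.get("condition"),
            "delay": f'{delay.get("timeAmount", 0)} {delay.get("timeUnit", "minutes")}',
            "recipient": (rule.get("recipient") or {}).get("type"),
        })
    repeat = escalation.get("repeat") or {}
    count = repeat.get("count", 0)
    return {
        "name": escalation.get("name"),
        "steps": steps,
        # Policies with repeats need a destination that supports re-paging
        "repeats": count > 0,
        "repeat_count": count,
        "repeat_wait_minutes": repeat.get("waitInterval"),  # assumed minutes
    }
```

Running this over every escalation gives you a short list of policies with `repeats` set to check against your new platform's capabilities.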

9. Heartbeat monitors are easily forgotten

Opsgenie supports heartbeat monitoring where external systems ping the platform to prove they're alive. These configurations are separate from alert integrations and easy to overlook.

Fix: List all active heartbeats via API before migrating. Document expected interval and grace period, then reconfigure in your new platform.
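A sketch of that inventory, assuming GET /v2/heartbeats returns heartbeats under `data.heartbeats` with `interval`, `intervalUnit`, `enabled`, and an optional `ownerTeam`. It normalizes intervals to minutes so they're easy to re-enter in a destination that uses a single unit.

```python
UNIT_MINUTES = {"minutes": 1, "hours": 60, "days": 1440}


def heartbeat_checklist(response):
    """List heartbeats from a GET /v2/heartbeats response, intervals in minutes."""
    rows = []
    for hb in response.get("data", {}).get("heartbeats", []):
        unit = hb.get("intervalUnit", "minutes")
        rows.append({
            "name": hb["name"],
            "enabled": hb.get("enabled", False),
            # KeyError on an unexpected unit is intentional: check it by hand
            "interval_minutes": hb.get("interval", 0) * UNIT_MINUTES[unit],
            "owner_team": (hb.get("ownerTeam") or {}).get("name"),
        })
    # Enabled heartbeats first: these are actively monitoring something today
    return sorted(rows, key=lambda r: (not r["enabled"], r["name"]))
```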

10. Custom fields face schema mismatches

Opsgenie allows custom alert fields and freeform tags up to 50 characters. Your destination platform might have different field names, character limits, or schema requirements.

Fix: Export 50-100 recent alerts via API. Inspect details and tags fields. Map custom fields to equivalent fields in your destination platform's schema before bulk import.
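A sketch of that inspection over a sample of alerts fetched with full details. `tag_limit` should be set to your destination platform's tag length limit; nothing here assumes a particular value. Frequently used `details` keys are the ones worth mapping to dedicated fields.

```python
from collections import Counter


def profile_alert_fields(alerts, tag_limit):
    """Count custom details keys and flag tags longer than tag_limit."""
    detail_keys = Counter()
    long_tags = set()
    for alert in alerts:
        detail_keys.update((alert.get("details") or {}).keys())
        long_tags.update(t for t in alert.get("tags", []) if len(t) > tag_limit)
    return detail_keys.most_common(), sorted(long_tags)
```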

11. Point-in-time overrides get lost

When someone swaps an on-call shift, this creates a temporary override. The Schedule Override API can list all overrides for a schedule, but iCalendar exports only capture the following 12 months of the schedule. Overrides outside that window or created after export will be missed.

Fix: Use the Schedule Override API to export all active overrides separately. Re-export close to your cutover date to capture recent changes.
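A sketch of the filtering step, assuming items from GET /v2/schedules/{identifier}/overrides carry `alias`, `startDate`, and `endDate` as ISO 8601 timestamps. Run it again close to cutover so recently created swaps are included.

```python
from datetime import datetime, timezone


def parse_ts(value):
    """Parse an ISO 8601 timestamp such as 2025-12-01T09:00:00Z."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def pending_overrides(overrides, now=None):
    """Keep overrides that are active now or start later, sorted by start time."""
    now = now or datetime.now(timezone.utc)
    keep = [o for o in overrides if parse_ts(o["endDate"]) > now]
    return sorted(keep, key=lambda o: parse_ts(o["startDate"]))
```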

12. Permission models don't map directly

Opsgenie's permission model (admin, user, read-only) doesn't map one-to-one to other platforms' role-based access control. An Opsgenie "admin" might map to multiple separate roles in your new platform.

Fix: Document who has admin permissions today and what they do with those permissions. Map actions to specific roles in your new platform rather than blindly assigning equivalence.
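A small starting point for that audit, assuming each user from GET /v2/users has a `username` and a `role.name`. Grouping by role gives you the list of admins to ask about what they actually do; mapping those actions to new roles stays a manual decision.

```python
from collections import defaultdict


def users_by_role(users):
    """Group Opsgenie usernames by role name; users without a role go to 'unknown'."""
    groups = defaultdict(list)
    for user in users:
        role = (user.get("role") or {}).get("name", "unknown")
        groups[role].append(user["username"])
    return {role: sorted(names) for role, names in sorted(groups.items())}
```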

Step-by-step guide to exporting your Opsgenie data

You have two paths to extract data from Opsgenie. The hard way gives you complete control and works for any destination platform. The easy way uses our dedicated importer to automate schedule field mapping and data transformation.

Method 1: REST API export with Python

This method uses the Opsgenie REST API to programmatically extract all exportable data. You'll need an API key with full read and configuration access.

Generate your API key:

- Log into Opsgenie with administrator privileges

- Navigate to Settings > API key management

- Click "Add new API key"

- Assign these permissions: Read Access, Configuration Access

- Copy the API key immediately (Opsgenie only shows it once)

Export alerts with full field preservation:

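Here's a starting script that extracts alerts via the /v2/alerts endpoint while handling pagination, rate limits, and the per-alert details call. It needs the requests library and reads your key from the OPSGENIE_API_KEY environment variable.

```python
import json
import os
import time

import requests

API_KEY = os.environ["OPSGENIE_API_KEY"]  # fail fast if the key isn't set
BASE_URL = "https://api.opsgenie.com/v2/alerts"
HEADERS = {"Authorization": f"GenieKey {API_KEY}"}
MAX_RETRIES = 5


def get_with_backoff(url, params=None):
    """GET a URL and return its JSON, retrying HTTP 429 with exponential backoff."""
    for attempt in range(MAX_RETRIES):
        response = requests.get(url, headers=HEADERS, params=params, timeout=30)
        if response.status_code == 429:
            # Honor a numeric Retry-After header if present, else wait 2s, 4s, 8s...
            retry_after = response.headers.get("Retry-After", "")
            time.sleep(int(retry_after) if retry_after.isdigit() else 2 ** (attempt + 1))
            continue
        response.raise_for_status()  # surface 401/403/422 instead of saving partial data
        return response.json()
    raise RuntimeError(f"Still rate limited after {MAX_RETRIES} attempts: {url}")


def get_all_alerts(query=None):
    """Paginate through the /v2/alerts list endpoint, 100 alerts per page.

    Offset plus limit can't exceed 20,000, so pass a createdAt date-range
    query for large accounts (see gotcha 5).
    """
    all_alerts = []
    next_url = BASE_URL
    params = {"limit": 100, "sort": "createdAt", "order": "asc"}
    if query:
        params["query"] = query
    while next_url:
        data = get_with_backoff(next_url, params)
        all_alerts.extend(data.get("data", []))
        # paging.next is a full URL that already carries the query string
        next_url = data.get("paging", {}).get("next")
        params = None  # only needed for the first request
    return all_alerts


def get_alert_details(alert_id):
    """Fetch one alert, including its description and details fields."""
    # The list endpoint omits these fields, so each alert needs its own request
    return get_with_backoff(f"{BASE_URL}/{alert_id}").get("data", {})


def export_alerts(path="opsgenie_alerts.json"):
    """Export every alert with full details to a JSON file."""
    alerts = get_all_alerts()
    for alert in alerts:
        alert.update(get_alert_details(alert["id"]))
        time.sleep(0.1)  # modest pacing between per-alert calls; tune to your limits
    with open(path, "w") as f:
        json.dump(alerts, f, indent=2)
    return alerts


if __name__ == "__main__":
    print(f"Exported {len(export_alerts())} alerts")
```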

Export schedules and teams:

After extracting alerts, you need on-call schedules and team structures. Use these endpoints:

- GET /v2/schedules - Lists all schedules with names and IDs

- GET /v2/schedules/{identifier} - Gets full schedule configuration, including rotation rules and handoff times

- GET /v2/schedules/{identifier}/overrides - Lists the schedule's overrides

- GET /v2/teams - Lists all teams with names and IDs

- GET /v2/teams/{identifier} - Gets team details, including members

- GET /v2/teams/{identifier}/routing-rules - Lists the team's routing rules

Modify the script above to target these endpoints, adjusting the field extraction logic for schedule and team objects.
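A sketch of that adaptation, assuming each list endpoint returns every item in one response under `data` (add paging if yours doesn't). It uses a standard-library fetch with no rate limiting, so wrap it in backoff for large accounts; `make_fetch` and `export_schedules_and_teams` are hypothetical names.

```python
import json
import urllib.request

API_ROOT = "https://api.opsgenie.com/v2"


def make_fetch(api_key):
    """Return a fetch(path) function that GETs an Opsgenie v2 path as JSON."""
    def fetch(path):
        request = urllib.request.Request(
            API_ROOT + path, headers={"Authorization": f"GenieKey {api_key}"}
        )
        with urllib.request.urlopen(request, timeout=30) as response:
            return json.load(response)
    return fetch


def export_schedules_and_teams(fetch):
    """Fetch every schedule and team, then each one's full configuration."""
    export = {"schedules": [], "teams": []}
    for summary in fetch("/schedules").get("data", []):
        schedule = fetch(f"/schedules/{summary['id']}").get("data", {})
        # Overrides are served by their own endpoint, not the schedule itself
        schedule["overrides"] = fetch(f"/schedules/{summary['id']}/overrides").get("data", [])
        export["schedules"].append(schedule)
    for summary in fetch("/teams").get("data", []):
        export["teams"].append(fetch(f"/teams/{summary['id']}").get("data", {}))
    return export
```

Usage: `json.dump(export_schedules_and_teams(make_fetch(api_key)), f, indent=2)` writes a single file you can diff against a later re-export before cutover.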

Method 2: Our dedicated Opsgenie importer

If you don't fancy hacking around with scripts, we built import tools for teams moving off Opsgenie. Our importer pulls schedules directly from your Opsgenie instance via API.

What we import:

- On-call schedules (bulk import supported)

- Schedules that include teams as targets

- User mappings (we automatically link users via email where possible, and flag conflicts when we can't)

What requires manual setup/scripting:

- Escalation policies (not supported for Opsgenie imports)

- Team structures and routing rules

- Historical alert data

How it works:

- Connect your Opsgenie API key

- Select schedules to import from the On-call dashboard

- Review any flagged incompatible schedules (usually due to unidentified users)

- Manually create escalation policies using our interface

The importer handles pagination automatically and is a one-time operation: changes made in Opsgenie after import won't sync.

Watch our full platform walkthrough to see how schedules imported from Opsgenie appear in our on-call management interface. For a detailed migration playbook covering timeline and risk mitigation, see our Opsgenie alternative guide.

"Incident.io was easy to install and configure. It's already helped us do a better job of responding to minor incidents that previously were handled informally and not as well documented." - Geoff H., verified user

Comparing migration targets and alternatives

You're choosing between fundamentally different architectural approaches that will shape your incident response for the next 3-5 years.

| Platform | Migration Ease | On-Call Pricing | Slack-Native | Historical Data Import | Best For |
|---|---|---|---|---|---|
| incident.io | Opsgenie schedule importer (escalation policies and routing rules require manual setup) | $45/user/month (includes on-call) | Yes, entire workflow in Slack | Schedules only; alert history and routing logic require manual migration | Teams wanting unified incident management with AI automation |
| PagerDuty | Manual reconfiguration with migration support | $21-41/user/month + add-ons | No, web-first with Slack notifications | Requires custom API scripting | Enterprise teams needing maximum alerting customization |
| Jira Service Management | Atlassian's official migration path | Bundled with JSM (starts at $20/user/month) | No, service desk focused | Automated from Opsgenie via Atlassian tools | Teams already committed to Atlassian ecosystem |
| Splunk On-Call | Manual schedule rebuild required | Custom pricing | No, web-first | Limited, requires manual export from Opsgenie | Existing Splunk customers wanting consolidation |

Why teams choose us for Opsgenie migrations:

Reduce MTTR by coordinating incidents in Slack. Our Slack-native architecture means your team coordinates incidents where they already work, eliminating the context-switching tax that comes with web-first tools. One SRE manager described the impact:

"Incident.io is incredibly flexible and integrates smoothly with the tools we rely on. It makes it easy to collaborate at key moments, which helps us maintain SLAs and fix things quickly." - Verified user in Information Technology and Services

Automate up to 80% of incident response with AI. Our AI SRE assistant identifies root causes, suggests fixes, and generates post-mortems from captured timeline data. This represents a capability upgrade beyond what Opsgenie provided.

Total cost comparison for 120 users:

- Current Opsgenie cost: Approximately $19/user/month = $2,280/month ($27,360/year)

- Our Pro plan: $45/user/month = $5,400/month ($64,800/year)

- Net increase: $3,120/month ($37,440/year)

Value gained: You consolidate on-call, incident response, status pages, and post-mortem automation into one platform. Teams typically reclaim 25 engineer-hours per month on incident coordination. At a $150 loaded rate, that's $3,750/month in reclaimed productivity, which more than offsets the $3,120/month increase (a net gain of about $630/month).
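The same arithmetic as a small function, so you can plug in your own headcount, rates, and time savings. The article's inputs (120 users, $19 and $45 per user per month, 25 hours at $150) are assumptions for illustration, not benchmarks.

```python
def cost_comparison(users, old_rate, new_rate, hours_saved, loaded_rate):
    """Monthly and annual cost delta, plus the value of reclaimed engineer time."""
    old_monthly = users * old_rate
    new_monthly = users * new_rate
    increase = new_monthly - old_monthly
    reclaimed = hours_saved * loaded_rate
    return {
        "old_monthly": old_monthly,
        "new_monthly": new_monthly,
        "increase_monthly": increase,
        "increase_annual": increase * 12,
        "reclaimed_monthly": reclaimed,
        # Positive means reclaimed time is worth more than the added spend
        "net_monthly": reclaimed - increase,
    }
```

For example, `cost_comparison(120, 19, 45, 25, 150)` returns an `increase_monthly` of 3120 and a `net_monthly` of 630.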

For a detailed ROI analysis comparing us to PagerDuty, see our pricing guide.

Minimizing downtime during the cutover

Plan for a parallel run period where both Opsgenie and your new platform receive alerts simultaneously.

4-week parallel run strategy:

- Weeks 1-2: Configure and test - Set up your new platform with imported schedules and routing rules. Create test incidents manually to verify functionality without connecting monitoring integrations.

- Week 3: Dual delivery - Configure monitoring integrations to send alerts to both Opsgenie (primary, pages on-call) and your new platform (observer mode). Compare alert delivery timing.

- Week 4: Cutover - Flip primary alerting to your new platform. Keep Opsgenie in observer mode for 7 days to catch any routing issues.

- Week 5+: Decommission - If the new platform performs reliably for 2 weeks, disconnect Opsgenie integrations. Keep the account active for 30 days in case you need to reference historical data.

Each monitoring tool integration (Datadog, Prometheus, New Relic) requires webhook URL updates. Test webhook delivery before cutover by creating a dedicated test alert that fires every 5 minutes. Verify it appears in both platforms during the parallel run.

A platform engineering lead shared their migration approach:

"The onboarding experience was outstanding — we have a small engineering team (~15 people) and the integration with our existing tools (Linear, Google, New Relic, Notion) was seamless and fast less than 20 days to rollout." - Bruno D., verified user

Define rollback criteria before cutover. If any of these occur during weeks 3-4, revert to Opsgenie as primary:

- More than 5% of alerts fail to route to the correct team

- Any P1 alert takes longer than 5 minutes to deliver compared to Opsgenie baseline

- On-call engineers report missed pages or confusion during incident response
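A sketch that turns these criteria into a check you can run daily during weeks 3-4. The per-alert records are hypothetical: you'd assemble them while comparing platforms, with `routed_correctly`, `priority`, and delivery latency in seconds on each platform. An empty result means no criterion was tripped.

```python
def rollback_reasons(alerts, missed_page_reports=0,
                     max_misroute_ratio=0.05, max_p1_lag_seconds=300):
    """Return the rollback criteria tripped by parallel-run results.

    Each alert is a dict you build yourself: id, priority, routed_correctly,
    new_delivery_s and opsgenie_delivery_s.
    """
    reasons = []
    if alerts:
        misrouted = sum(1 for a in alerts if not a["routed_correctly"])
        if misrouted / len(alerts) > max_misroute_ratio:
            reasons.append(f"{misrouted}/{len(alerts)} alerts misrouted")
    # P1s arriving more than 5 minutes later than the Opsgenie baseline
    slow_p1 = [
        a["id"] for a in alerts
        if a["priority"] == "P1"
        and a["new_delivery_s"] - a["opsgenie_delivery_s"] > max_p1_lag_seconds
    ]
    if slow_p1:
        reasons.append("P1 alerts delayed beyond baseline: " + ", ".join(slow_p1))
    if missed_page_reports:
        reasons.append(f"{missed_page_reports} report(s) of missed pages or confusion")
    return reasons
```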

Having specific rollback criteria prevents panic decisions and ensures you make data-driven choices about proceeding.

Ready to migrate from Opsgenie without losing data?

The April 2027 deadline is fixed. Your data export doesn't need to be a last-minute scramble.

With incident.io you get a Slack-native incident management platform that unifies on-call, response coordination, status pages, and AI-powered post-mortems in one tool.

Your incident response depends on data you're about to lose. Book a demo to get peace of mind and kick off your migration.

Key terms glossary

Opsgenie sunset: Atlassian's scheduled shutdown of the standalone Opsgenie product. New sales ended June 4, 2025, with full platform shutdown April 5, 2027.

iCalendar export: Opsgenie UI feature that exports on-call schedules as .ics files for calendar integration. Contains only schedule dates and assigned users, not underlying rotation configuration or rules.

Routing rule: Configuration logic that determines which team receives an alert based on payload attributes like integration source, tags, or priority level. Routing rules don't export via Opsgenie UI and require API extraction.

Escalation policy: Workflow that defines who gets notified if an alert isn't acknowledged within a specified timeframe. Includes repeat steps, conditional branches, and multi-level escalation paths.

API rate limit: Opsgenie's throttling mechanism that restricts the number of API requests within a given time window. Exceeding the limit triggers HTTP 429 errors requiring exponential backoff and retry logic.
