Reducing our pager load

At incident.io, we pride ourselves on providing a great product to our customers.

We’re also a small team, so we move fast and (whisper it not) occasionally break things.

To mitigate the impact on our customers, we have our app set up so that every time our application raises an error (via Sentry), we get paged. It’s been this way since the very early days of incident.io, and it’s helped us find and fix bugs super responsively - often before the customer even knows they’ve happened.

But it’s not all good news: having such a sensitive pager means that, inevitably, it’s a lot noisier than we would like. The pager also has no concept of ‘working hours’: we have customers around the world, and even if we didn’t - it’s not like incidents are a 9-to-5 sort of business anyway.

As we mature, we’ll eventually leave this behind and move to a more conventional model: paging on error rates and a few critical paths rather than every single exception. However, we don’t have enough traffic yet for this to be reliable. Also, we like knowing when anything breaks - it’s kind of our superpower. It helps us keep the quality of the product really high, and gives us the confidence to ship quickly, knowing we can find and fix things fast if there are some sharp edges.

So, what do we do when the pager gets too loud?

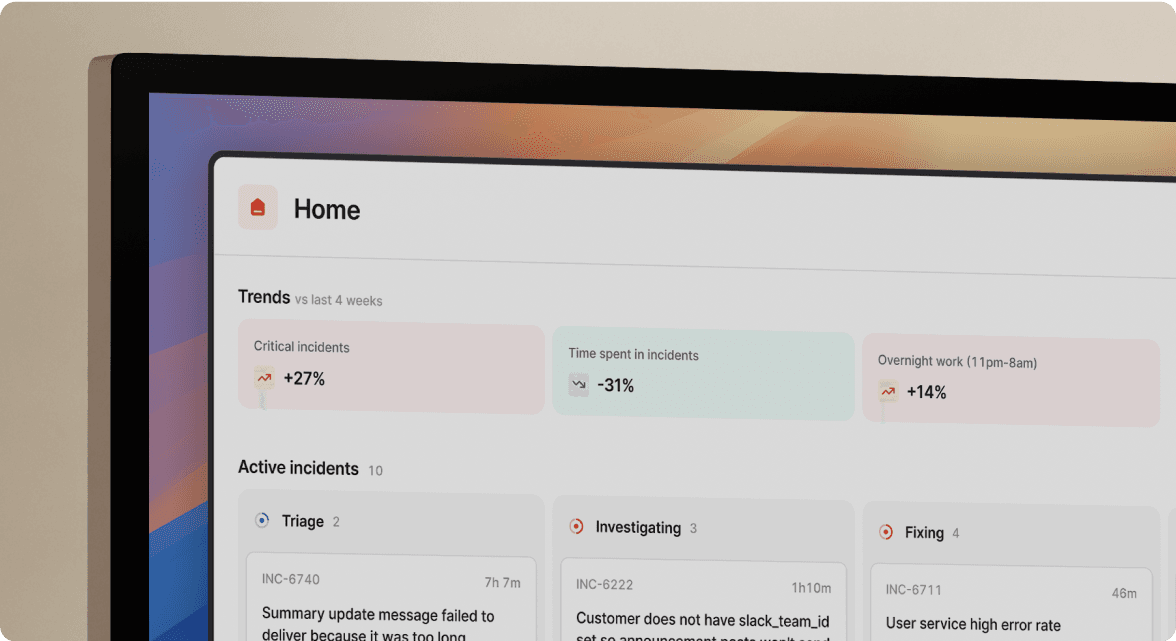

In mid-February, one of our engineers had a really bad week on the pager. Our pager load had increased, and they were woken up multiple times that week, which we considered unacceptable.

For the reasons explained above, we weren’t willing to let go of alerting on all our errors. So we set out to reduce our pager load in other ways.

To set the scene a bit more: we were looking for quick wins. There are many more sophisticated things we could do, but we wanted the low-hanging fruit: as much impact as possible for as little engineering time as we could spare. Not because we don’t think it’s important: if we’d needed to spend more time, we would have. But engineering time is at a premium, so we want to use it wisely.

So here’s what we did to reduce our pager load.

1: Expected errors

Our app talks to Slack, a lot. There are a few actions we do particularly regularly:

- Post a message to a channel

- Invite someone to a channel

- Get information about a channel

There are a number of ‘expected’ ways that these actions can fail: for example, if the channel is archived then we can’t post a message to it, or invite someone to it.

Most of the time, these should be treated as best effort (e.g. if we try to notify a channel that someone’s created an action, but the channel has been archived, that shouldn’t be considered an error). But of course, not always.

We use Go, and that means we explicitly return errors and each caller has to choose what to do with the error. We realised that this wasn’t nuanced enough for us: certain errors are due to expected situations (e.g. an archived channel) while others aren’t (e.g. a badly formatted message).

We chose to build helpers that return both a warning and an err, so the helper defines which errors are ‘probably fine’ and which are unexpected. This means a caller can choose to handle or ignore each one, depending on how ‘best effort’ it wants to be.

```go
// PostMessage tries to post a message to a given Slack channel (or
// user). It will return a warning for known issues (missing scope, archiving
// channel, auth issues) or an error for anything else. It should be used in
// favour of PostMessageContext in almost all contexts.
func PostMessage(
	ctx context.Context,
	sc slackclient.SlackClientExtended,
	channelID string,
	fallbackText string,
	messageOpts ...slack.MsgOption,
) (messageTs *string, warning error, err error) {
	messageOpts = append(messageOpts,
		slack.MsgOptionText(fallbackText, false))

	//lint:ignore SA1019 we want to allow this to be called only inside this helper
	_, messageTS, err := sc.PostMessageContext(
		ctx, channelID, messageOpts...)
	if err != nil {
		if slackerrors.IsChannelNotFoundErr(err) ||
			slackerrors.IsIsArchivedErr(err) ||
			slackerrors.IsAuthenticationError(err) ||
			slackerrors.IsMissingScopeErr(err) {
			return nil, err, nil
		}

		return nil, nil, err
	}

	return &messageTS, nil, nil
}
```
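To make the caller's side concrete, here is a minimal sketch of two callers making different choices about the same warning. Everything in it is illustrative rather than real code: `postMessage` is a hypothetical stand-in that only mimics the `(result, warning, err)` return shape, and `notifyBestEffort`, `mustNotify` and the channel IDs are made up for the example.

```go
package main

import (
	"errors"
	"fmt"
)

// errChannelArchived stands in for one of the "expected" Slack failures.
var errChannelArchived = errors.New("is_archived")

// postMessage is a hypothetical stand-in with the same
// (result, warning, err) return shape as the PostMessage helper.
func postMessage(channelID, text string) (ts *string, warning error, err error) {
	switch channelID {
	case "":
		return nil, nil, errors.New("channel ID is required")
	case "C_ARCHIVED":
		return nil, errChannelArchived, nil
	}
	stamp := "1700000000.000100"
	return &stamp, nil, nil
}

// notifyBestEffort logs warnings and carries on, but still returns
// unexpected errors to its caller.
func notifyBestEffort(channelID, text string) error {
	_, warning, err := postMessage(channelID, text)
	if err != nil {
		return fmt.Errorf("posting notification: %w", err)
	}
	if warning != nil {
		fmt.Println("skipping notification:", warning)
	}
	return nil
}

// mustNotify is for paths where the message has to land, so a warning
// is promoted to an error.
func mustNotify(channelID, text string) error {
	_, warning, err := postMessage(channelID, text)
	if err != nil {
		return fmt.Errorf("posting notification: %w", err)
	}
	if warning != nil {
		return fmt.Errorf("posting notification: %w", warning)
	}
	return nil
}

func main() {
	fmt.Println(notifyBestEffort("C_ARCHIVED", "action created"))
	fmt.Println(mustNotify("C_ARCHIVED", "incident declared"))
}
```

The same archived channel is a non-event for the first caller and a failure for the second, which is the nuance a single returned error could not express.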

We marked the underlying method as deprecated, so that staticcheck flags any use of it outside our new helpers (its SA1019 check) and no-one accidentally bypasses them.
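As a rough illustration of the mechanism, Go marks something as deprecated with a `Deprecated:` paragraph in its doc comment, and a `//lint:ignore SA1019` directive silences the check at the one call site that is allowed. The sketch below uses a hypothetical `slackClient` type and simplified signatures, and squeezes everything into one file; in a real codebase the deprecated method and the helper would normally live in separate packages, which is where the check earns its keep.

```go
package main

import "fmt"

// slackClient is a hypothetical stand-in for the wrapped Slack client.
type slackClient struct{}

// PostMessageContext posts a message without classifying failures.
//
// Deprecated: use PostMessage, which separates warnings from errors.
func (slackClient) PostMessageContext(channelID, text string) (string, error) {
	if channelID == "" {
		return "", fmt.Errorf("channel ID is required")
	}
	return "1700000000.000100", nil
}

// PostMessage is the one sanctioned caller of the deprecated method.
func PostMessage(sc slackClient, channelID, text string) (string, error) {
	//lint:ignore SA1019 this helper is the only place allowed to call it
	return sc.PostMessageContext(channelID, text)
}

func main() {
	ts, err := PostMessage(slackClient{}, "C123", "hello")
	fmt.Println(ts, err)
}
```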

2: Best effort paths

Certain paths in our application are considered ‘best effort’. One example is the code we use to nudge users to (for example) update a summary if it’s not present. Of course, we’d like it to work: it’s part of our product. But we don’t think someone should be woken up in the middle of the night if it’s broken.

We use Sentry to manage our exceptions, and PagerDuty to handle our on-call rota and escalate to an engineer. We’ve also done lots of work with Go errors to make them play nicely with Sentry.

We labelled these paths by setting a default Urgency on the context, which we review before sending our errors to Sentry. The urgency gets sent to Sentry, and our alerting rules mean only Urgency=page errors will wake someone up.

```go
// WithDefaultUrgency allows the caller to override the default urgency (which is
// page) for a particular code path.
func WithDefaultUrgency(ctx context.Context, urgency ErrorUrgencyType) context.Context {
	return context.WithValue(
		ctx,
		defaultUrgencyKey,
		urgencyContext{defaultUrgency: &urgency},
	)
}
```

You can see all the code we use to manage urgencies here.
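For a feel of how the other half might look, here is a minimal sketch of reading the urgency back off the context just before an error is reported. It is an assumption-laden illustration, not the linked code: `urgencyFromContext`, `exampleUrgencies`, the `contextKey` type and the `UrgencyLow` value are all hypothetical, and only `page` as the default comes from the description above.

```go
package main

import (
	"context"
	"fmt"
)

type ErrorUrgencyType string

const (
	UrgencyPage ErrorUrgencyType = "page"
	UrgencyLow  ErrorUrgencyType = "low" // hypothetical non-paging value
)

type contextKey string

const defaultUrgencyKey contextKey = "defaultUrgency"

type urgencyContext struct {
	defaultUrgency *ErrorUrgencyType
}

// WithDefaultUrgency overrides the default urgency for a code path.
func WithDefaultUrgency(ctx context.Context, urgency ErrorUrgencyType) context.Context {
	return context.WithValue(ctx, defaultUrgencyKey, urgencyContext{defaultUrgency: &urgency})
}

// urgencyFromContext (hypothetical) returns the urgency to tag an error
// with, falling back to page when the code path has not set one.
func urgencyFromContext(ctx context.Context) ErrorUrgencyType {
	uc, ok := ctx.Value(defaultUrgencyKey).(urgencyContext)
	if !ok || uc.defaultUrgency == nil {
		return UrgencyPage
	}
	return *uc.defaultUrgency
}

// exampleUrgencies compares an unlabelled path with a best-effort one.
func exampleUrgencies() (unlabelled, bestEffort ErrorUrgencyType) {
	ctx := context.Background()
	nudgeCtx := WithDefaultUrgency(ctx, UrgencyLow)
	return urgencyFromContext(ctx), urgencyFromContext(nudgeCtx)
}

func main() {
	unlabelled, bestEffort := exampleUrgencies()
	fmt.Println("unlabelled path:", unlabelled)
	fmt.Println("best-effort path:", bestEffort)
}
```

Defaulting to page when nothing is set keeps the behaviour safe: a path only stops waking people up once someone has deliberately labelled it.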

3: Transient errors

Lots of our application work is asynchronous, triggered from Pub/Sub messages. We integrate with a lot of third parties (Slack, Jira, PagerDuty etc.) and often receive transient errors, as is the nature of HTTP requests and integrations.

We investigated trying to identify specific errors as transient, but found that many of the client libraries we use don’t make that particularly simple.

Instead, we opted for a quick fix.

We changed our code so that it would only page once a message had failed to process more than 3 times. This felt like a fair balance between ‘we’ve given this a good shot’ and ‘something’s wrong and we’d like to know about it’.
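The rule itself is tiny. A minimal sketch, assuming the handler knows how many times the message has been attempted (the `attempt` counter, `urgencyForAttempt` and the `UrgencyLow` value are hypothetical names for illustration):

```go
package main

import "fmt"

type ErrorUrgencyType string

const (
	UrgencyPage ErrorUrgencyType = "page"
	UrgencyLow  ErrorUrgencyType = "low" // hypothetical non-paging value
)

// maxAttemptsBeforePage is how many failed attempts we tolerate quietly.
const maxAttemptsBeforePage = 3

// urgencyForAttempt decides how loudly a processing failure is reported.
// attempt is the 1-based count of times the message has been tried.
func urgencyForAttempt(attempt int) ErrorUrgencyType {
	if attempt > maxAttemptsBeforePage {
		return UrgencyPage
	}
	return UrgencyLow
}

func main() {
	// Simulate a message that keeps failing on every delivery.
	for attempt := 1; attempt <= 4; attempt++ {
		fmt.Printf("attempt %d failed, urgency=%s\n", attempt, urgencyForAttempt(attempt))
	}
}
```

Failures on earlier attempts are still reported, just without a page, so a genuinely transient error leaves a trail without waking anyone up.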

4: Demo accounts

Some of the pager noise was being driven by demo accounts, which our sales and customer success teams use to test and demonstrate parts of the product. As they were being re-used to demonstrate many different configurations, they would often encounter edge cases.

If anything was urgent, our team could escalate to the on-call engineer themselves, so we decided to stop paging if something went wrong with these accounts. The error is still raised, and we’d expect to review it during working hours, but no-one should be woken up unnecessarily.
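One way to picture this is as a last-minute downgrade applied just before the error is sent. The sketch below is illustrative only: the `Organisation` type, its `IsDemo` flag, `urgencyForOrganisation` and the `UrgencyLow` value are assumptions made for the example.

```go
package main

import "fmt"

type ErrorUrgencyType string

const (
	UrgencyPage ErrorUrgencyType = "page"
	UrgencyLow  ErrorUrgencyType = "low" // hypothetical non-paging value
)

// Organisation is a hypothetical account record with a demo flag.
type Organisation struct {
	Name   string
	IsDemo bool
}

// urgencyForOrganisation downgrades paging errors for demo accounts.
// The error is still reported, it just no longer wakes anyone up.
func urgencyForOrganisation(org Organisation, requested ErrorUrgencyType) ErrorUrgencyType {
	if org.IsDemo && requested == UrgencyPage {
		return UrgencyLow
	}
	return requested
}

func main() {
	demo := Organisation{Name: "Sales demo", IsDemo: true}
	customer := Organisation{Name: "A real customer"}

	fmt.Println(demo.Name, "->", urgencyForOrganisation(demo, UrgencyPage))
	fmt.Println(customer.Name, "->", urgencyForOrganisation(customer, UrgencyPage))
}
```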

So, did it work?

Well, yes. Of course there’s the consistent flow of ‘this shouldn’t have paged me’ style errors, which we fix as we encounter them. But overall, this was a step change in our pager load.

I’m sure we’ll have to run a similar project again as our pager load creeps up over time. For the time investment (one engineer for a short week), the rewards were excellent.
