Day in the life: Data Analyst at incident.io

At incident.io we’re a small team, moving fast. No two days are the same, but I’ve picked out a day that feels representative of what we’re working on, and what you could expect if you joined the team.

✅ 8:30 to 9:30 - Big code change wrap-up, and PR review

At incident, we work flexibly but most of the team come in around 9:00-9:30. I came in a little early on Friday because I was keen to ship a chunky code change to help us analyse how our product is being used.

Data is embedded in every team at incident. As a data team, we’re focusing on exposing data in a user-friendly way in Metabase to continue building a culture of self-served analytics in every part of the company. Take a look at these blog posts to find out more about how we think about data: A modern data stack for startups and Updating our data stack.

This means we do the heavy lifting upstream using dbt so that we have precomputed, easy-to-use metrics downstream. The code change I shipped added lots of product engagement metrics to our core organization-based tables, covering product features like workflows & generating post-mortems, and user actions like changing the severity of an incident. It felt awesome to hit merge on a big code change!

✅ 9:30 to 10:00 - Data team weekly planning

Every Friday, we catch up as a data team. It’s a pretty informal check-in where we go over how we’re feeling as a team, anywhere we need support, what’s working & what’s not. We also run through what we shipped the previous week, and what our goals are for the week ahead.

In our temperature check, we were both feeling great getting stuck into loads of work with our product & growth teams on exciting new launches and shipping some big code changes. We also couldn’t be more excited to grow the team.

We spoke about balancing infrastructure investments & building out our data models against helping to push the company forward day-to-day with new product launches.

✅ 10:00 to 10:30 - CI pipeline failure - oops

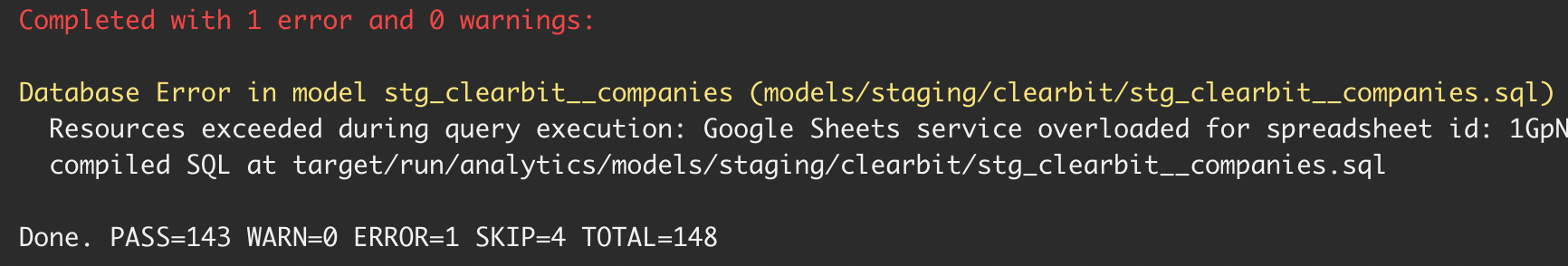

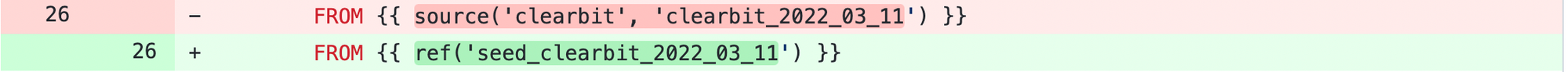

During planning, we got a notification that our pipeline failed! Uh oh - what’s happened here? Let’s take a look at the output in CircleCI:

We bumped into a known issue (mentioned in Google’s docs): BigQuery can be unreliable when querying Google Sheets stored in Drive once the sheets are relatively large or complex.

This data is Clearbit company data and doesn’t need to be real-time, so dbt has a nifty solution we can use: dbt Seeds. Seeds are version-controlled CSV files that are super easy to get up and running, so they’re a great way to get our pipeline moving again. We simply add the sheet as a CSV file to our seeds folder and reference the seed instead of the old table in our staging tables.
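As a rough illustration of that swap, here is a minimal Python sketch. It is not the code we actually shipped: the helper names, the `clearbit_companies` seed name, and the column names are all hypothetical. The only dbt feature it relies on is that a seed can be referenced from a model with `ref()`, just like any other model.

```python
import csv
from pathlib import Path


def write_seed(rows, seeds_dir, seed_name):
    """Write rows (a list of dicts) to <seeds_dir>/<seed_name>.csv and return the path."""
    seeds_dir = Path(seeds_dir)
    seeds_dir.mkdir(parents=True, exist_ok=True)
    seed_path = seeds_dir / f"{seed_name}.csv"
    with seed_path.open("w", newline="", encoding="utf-8") as handle:
        # The header row comes from the keys of the first record.
        writer = csv.DictWriter(handle, fieldnames=list(rows[0].keys()))
        writer.writeheader()
        writer.writerows(rows)
    return seed_path


def staging_model_sql(seed_name):
    """Return staging-model SQL that selects from the seed via dbt's ref()."""
    # Doubled braces in the f-string produce the literal {{ ... }} Jinja delimiters.
    return f"select * from {{{{ ref('{seed_name}') }}}}\n"


if __name__ == "__main__":
    # Hypothetical example row standing in for the exported sheet.
    example_rows = [{"domain": "example.com", "employees": "50"}]
    print(write_seed(example_rows, "seeds", "clearbit_companies"))
    print(staging_model_sql("clearbit_companies"))
```

Once the CSV is in the seeds folder, running `dbt seed` loads it into the warehouse, and the staging model reads from the seed rather than the Drive-backed table.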

✅ 10:30 to 11:00 - Data hiring sync

We sat down with our awesome head of talent, Dani, to talk about growing the team. Every part of incident is growing; it feels surreal how quickly things are changing. Building a world-class data team is top of mind (for obvious reasons), and on a personal level, I can’t wait to see the team grow.

We discussed how we’d get you to apply. From content to creating a world-class interview process, we want to pull every lever to talk to great people.

✅ 11:00 to 11:15 - Product launch check-in

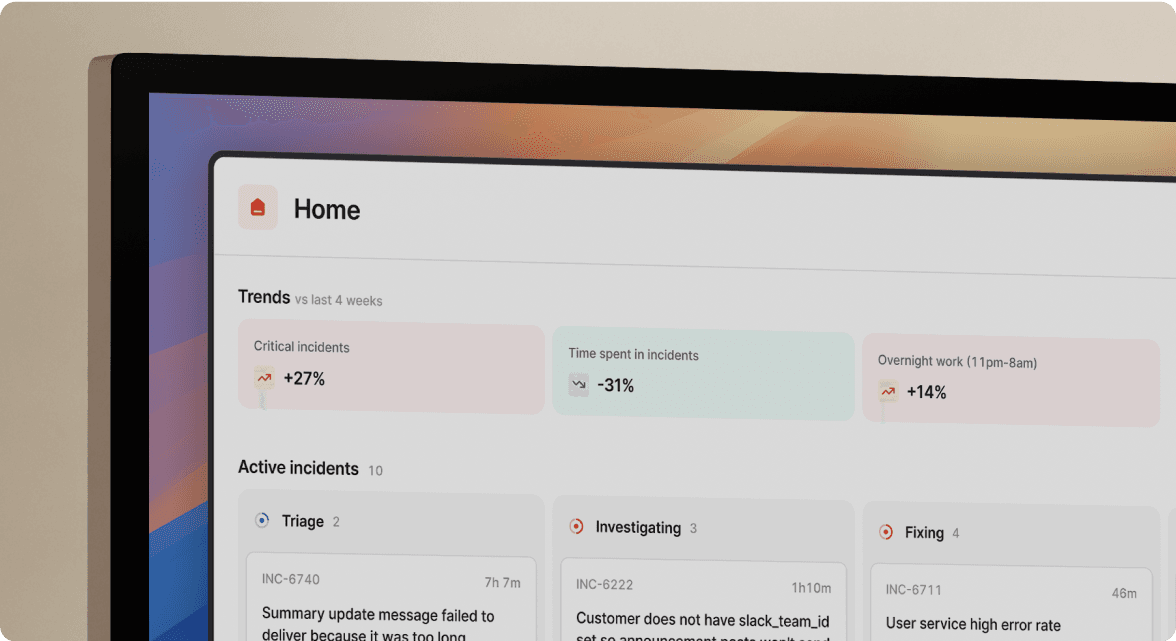

At incident, we’re building for the whole org from engineering and customer success to operations & legal, so when things go wrong our customers can be more resilient. That means we’re shipping a bunch of new features, speaking to customers, analysing our data, then iterating.

We’ve built out a playbook for how we use data for new launches that looks something like this:

1) Before: analyse the potential impact of a project & develop project scope

2) During: define what success looks like, how we collect the data & measure it

3) After: hold 2/4/6 week follow-ups reviewing our numbers, and do follow-up analysis to gauge how we iterate next

This particular check-in was step 2), where we decided what success looks like & how we’ll measure it.

✅ 11:30 to 12:00 - Insight of the week

Each week, everyone in the data team creates an ‘Insight of the week’. The idea is a stand-alone piece of analysis that ensures the surface area we’re covering spans the business beyond day-to-day projects. It’s something that makes you go ‘hmmmm’ and might inspire a more comprehensive piece of data analytics.

I used this time to think about what to look into, and how, ahead of the afternoon. I decided I’d focus on user-level retention metrics & how they interact with different product features.

✅ 12:00 to 12:30 - Donut coffee catch up

At incident we use Donut, which is a tool that plans a ‘water-cooler’ catch up each week. This week, I went for a lovely walk in the sun with Lisa.

✅ 12:30 to 1:30 - Walk to Whitecross market with the team to pick up some lunch

We often have a lunch train head over to Whitecross Market to pick up lunch. It’s a great way to spend some time with the team outside of a working context, stretch your legs, and there are good options for everybody.

We sat outside to eat lunch, and had a lovely break ahead of the afternoon!

✅ 1:30 to 2:00 - Swiss army knife Kingston product metrics request

First up after lunch, I looked into an ad hoc request for some product metrics that showcase the value of our product (e.g. How many tasks have we automated through workflows? How many post-mortem docs have we automatically generated?). We’ve come a huge way in such a short time as a company, and it was fun for a lot of the team to see how these metrics have boomed. One of our first engineers, Lawrence, said:

> When I joined we had no such thing as workflows. I guess I’m frozen in time when it comes to product stats, and I probably need to update my mental model.

✅ 2:00 to 4:00 - Analytics

Analytics here is focused on actions, and as a data team, we evangelise everyone bringing their domain expertise and contributing. This means we always share succinct actions with the entire team in our #data channel & build our visuals in Metabase, where anybody in the business can run with them and do their own analysis.

This piece focussed on user-level retention of our ‘responders’ (our product’s active users), by engagement with our integrations (e.g. PagerDuty) & product features (e.g. Workflows). As we scope new projects, it’s important to understand what’s already delivering value and double down on the adoption of these aspects of our product. I didn’t have time to write this piece up, so one for next week!
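To make the shape of that analysis concrete, here is a small Python sketch of splitting retention by feature usage. It is an illustration rather than our actual model: the records are made-up toy data, the field names are hypothetical, and "retained" is assumed to mean the responder was active again in the following period.

```python
from collections import defaultdict


def retention_by_feature(users, feature):
    """Return retention rates keyed by whether the user used `feature` (True/False)."""
    retained = defaultdict(int)
    total = defaultdict(int)
    for user in users:
        used = feature in user["features_used"]
        total[used] += 1
        # Assumption: a user counts as retained if active in the next period.
        retained[used] += int(user["active_next_period"])
    return {used: retained[used] / total[used] for used in total}


# Hypothetical toy records, for illustration only.
responders = [
    {"id": 1, "features_used": {"workflows", "pagerduty"}, "active_next_period": True},
    {"id": 2, "features_used": {"workflows"}, "active_next_period": True},
    {"id": 3, "features_used": set(), "active_next_period": False},
    {"id": 4, "features_used": {"pagerduty"}, "active_next_period": True},
    {"id": 5, "features_used": set(), "active_next_period": True},
]

rates = retention_by_feature(responders, "workflows")
```

Comparing `rates[True]` with `rates[False]` gives a first read on whether responders who engage with a feature stick around more often. A difference like this shows correlation, not that the feature causes retention.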

✅ 4:00 to 5:00 - Team time

Team time is dedicated time for catching up with the whole incident team. We talk about who’s joining the team, share any major announcements, and have a Q&A with Stephen, our lovely CEO. We also go through a product demo with our latest new features. The product velocity at incident is incredible, so it’s great to stay in touch with everything that’s happening across the company!

Each of the ‘disciplines’ then spends 3-5 minutes sharing a short update, including a temperature check and the most important thing they got done last week + what it’ll be next week.

✅ 5:00 to 5:30 - Planned what we’d put on our Smiirls

We’ve just bought two new Smiirls, so kicked off the debate on what we should show. Suggestions very welcome (edward@incident.io)!

✅ 5:30 -

After team time we stuck around the office & hung out as a team. Andy the Nintendo machine also crushed me on Super Smash Bros, but I can get over that.
