Best incident management tools for financial services: Regulatory and compliance requirements

Updated January 26, 2026

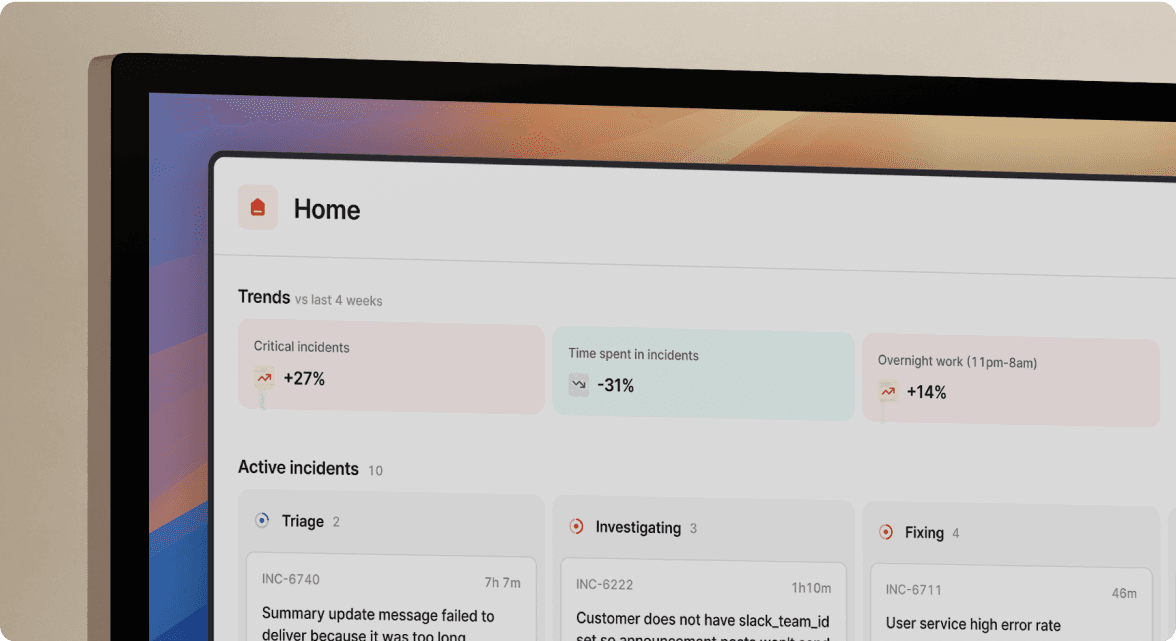

TL;DR: In financial services, you must prove how you fixed incidents while meeting strict deadlines. The OCC's 36-hour notification rule, DORA's 4-24 hour window, and SEC's 4-business-day disclosure create unprecedented time pressure. Manual post-mortem reconstruction from Slack scroll-back won't satisfy auditors demanding complete, immutable timelines. The solution is automating compliance as a byproduct of response through platforms that capture every action, decision, and timestamp automatically.

When your payment processing API fails at 2:47 AM, two clocks start ticking. The first is your MTTR. The second is the regulatory notification countdown. The OCC's 36-hour notification rule means missing the deadline adds regulatory scrutiny on top of customer impact. Spend too much time documenting for compliance, and MTTR suffers. This is the tension every FinTech SRE faces.

You need speed. But in financial services, you also need audit-ready documentation, regulatory notification, and complete evidence preservation. This guide covers the regulatory landscape (OCC, DORA, SEC), the specific technical requirements for FinTech incident response plans, and how we automate compliance without slowing down resolution.

What defines a "material" incident in financial services?

Not every bug triggers regulatory reporting. Understanding the threshold matters because over-reporting creates noise while under-reporting creates legal risk. If you're debugging at 3 AM and asking "do I need to notify the OCC?" here's how to decide.

According to 12 CFR Part 53, a computer-security incident is "an occurrence that results in actual harm to the confidentiality, integrity, or availability of an information system or the information that the system processes, stores, or transmits." This baseline definition is broad and covers anything from a brief loss of availability to a full breach. It does require actual harm, though: an attempted attack that fails, such as a blocked login attempt, does not qualify.

The regulatory trigger is narrower. A "notification incident" under OCC rules means "a computer-security incident that has materially disrupted or degraded, or is reasonably likely to materially disrupt or degrade" your ability to carry out banking operations or deliver products and services to a material portion of your customers, or operations whose failure would threaten US financial stability. The Federal Register guidance clarifies with examples: a large-scale DDoS attack disrupting customer account access for extended periods, or a hacking incident disabling banking operations for multiple hours.

In practice, here's the line:

Reportable incidents:

- Extended payment processing outage affecting customer transactions (materiality depends on duration and customer impact)

- Customer data breach exposing PII or account credentials

- Ransomware encrypting critical systems

- DDoS attack preventing customer login for extended periods

- Third-party service disruption materially impacting your services

Non-reportable incidents:

- Internal API latency spike resolved in 15 minutes

- Single microservice restart with no customer impact

- Planned maintenance causing brief downtime

- Failed deployment caught in staging

The judgment call is "material disruption." If customers can't transact, can't access accounts, or if sensitive data is compromised, assume it's reportable until proven otherwise. Learn more about incident severity frameworks in our full platform walkthrough.
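The rule of thumb above can be encoded as a conservative triage check that flags an incident for compliance review rather than deciding reportability on its own. This is a minimal sketch: the `IncidentSignals` fields and the `needs_notification_review` function are hypothetical names, and the final materiality determination remains a human judgment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IncidentSignals:
    """Yes/no facts a responder can answer during triage (hypothetical fields)."""
    customers_cannot_transact: bool
    customers_cannot_access_accounts: bool
    sensitive_data_compromised: bool


def needs_notification_review(signals: IncidentSignals) -> bool:
    """Conservative rule of thumb: any of these signals means the incident is
    treated as reportable until a compliance review concludes otherwise."""
    return (
        signals.customers_cannot_transact
        or signals.customers_cannot_access_accounts
        or signals.sensitive_data_compromised
    )


# Internal latency spike with no customer impact vs. payments unable to process.
print(needs_notification_review(IncidentSignals(False, False, False)))  # False
print(needs_notification_review(IncidentSignals(True, False, False)))   # True
```

Erring toward review keeps the decision with people who can weigh duration and customer impact, which the checklist alone cannot capture.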

The regulatory landscape: Notification windows and reporting mandates

Financial services incident response operates under multiple overlapping regulations. Missing a deadline isn't just embarrassing; it's a compliance violation.

US Banking: The 36-hour rule (OCC, FDIC, Fed)

The OCC's Computer-Security Incident Notification rule requires banks to notify their primary federal regulator as soon as possible and no later than 36 hours after determining that a notification incident occurred. The clock starts when you determine the incident is material, not when it began. The 36 hours are also calendar hours, so weekends don't pause the clock: if your team declares an incident Friday at 6 PM and determines at 9 AM Saturday that it meets notification criteria, the deadline is 9 PM Sunday.
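A short sketch of the deadline arithmetic, assuming all timestamps are recorded in one timezone (for example UTC); `occ_notification_deadline` is a hypothetical helper, not part of any regulator's tooling. It shows that the window is counted in calendar hours from the determination time, so a determination at 9 AM Saturday yields a 9 PM Sunday deadline.

```python
from datetime import datetime, timedelta

# Calendar hours: nights, weekends, and holidays all count toward the window.
OCC_NOTIFICATION_WINDOW = timedelta(hours=36)


def occ_notification_deadline(determined_at: datetime) -> datetime:
    """Latest time to notify the primary federal regulator, measured from when the
    bank determines a notification incident occurred (not from when it began)."""
    return determined_at + OCC_NOTIFICATION_WINDOW


declared_at = datetime(2026, 1, 23, 18, 0)   # Friday 6 PM: incident declared, clock not started
determined_at = datetime(2026, 1, 24, 9, 0)  # Saturday 9 AM: materiality determined
print(occ_notification_deadline(determined_at))  # 2026-01-25 21:00:00 (Sunday 9 PM)
```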

Banks can notify their supervisory office by email or phone, or through the BankNet portal. The notification doesn't require a complete root cause analysis, just acknowledgment that a material incident occurred and an initial impact assessment.

Bank service providers face a different standard. Under 12 CFR Part 53, a provider must notify affected bank customers "as soon as possible" once it determines that an incident has materially disrupted or degraded, or is reasonably likely to materially disrupt or degrade, covered services for four or more hours. There's no 36-hour grace period. If your infrastructure-as-a-service platform goes down at 2 AM and you determine the disruption to customer banks will last four hours or more, you notify them at that point, not 36 hours later.
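The provider trigger can be sketched as a duration check that fires on either the observed or the expected length of a disruption. This assumes materiality has already been established and that responders keep a running estimate of total duration; the function and parameter names are hypothetical.

```python
from datetime import timedelta

# Duration threshold for bank service providers under 12 CFR Part 53.
PROVIDER_THRESHOLD = timedelta(hours=4)


def provider_must_notify(elapsed: timedelta, expected_total: timedelta) -> bool:
    """True once a material disruption to covered services has lasted, or is
    reasonably likely to last, four or more hours. `expected_total` is the
    responders' current estimate of the disruption's full duration."""
    return elapsed >= PROVIDER_THRESHOLD or expected_total >= PROVIDER_THRESHOLD


# 45 minutes in, but recovery is estimated at six hours: notify now.
print(provider_must_notify(timedelta(minutes=45), timedelta(hours=6)))  # True
# 30 minutes in, fix expected within the hour: threshold not met yet.
print(provider_must_notify(timedelta(minutes=30), timedelta(hours=1)))  # False
```

Checking the estimate, not just elapsed time, is what keeps a provider from waiting out the full four hours before notifying.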

EU and UK: DORA and FCA requirements

For organizations with EU operations, the Digital Operational Resilience Act brings harmonized incident reporting. DORA's reporting framework establishes three stages: an initial notification within 4 hours of classifying the incident as major (and no later than 24 hours after becoming aware of it), an intermediate report within 72 hours of the initial notification, and a final report within one month of the latest intermediate report. This is significantly tighter than the OCC's 36-hour window. Incidents are classified as major based on seven criteria, including service criticality, client impact, reputational damage, and duration.
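The three DORA clocks can be sketched as below. This is a simplified model under stated assumptions: it ignores any weekend or holiday provisions that may apply to particular entities, treats "one month" as the same calendar day of the following month (clamped to that month's last day), and uses hypothetical function names.

```python
import calendar
from datetime import datetime, timedelta


def dora_initial_deadline(aware_at: datetime, classified_major_at: datetime) -> datetime:
    """Initial notification: 4 hours after classification as major,
    but never later than 24 hours after becoming aware of the incident."""
    return min(classified_major_at + timedelta(hours=4), aware_at + timedelta(hours=24))


def dora_intermediate_deadline(initial_sent_at: datetime) -> datetime:
    """Intermediate report: 72 hours after the initial notification was submitted."""
    return initial_sent_at + timedelta(hours=72)


def dora_final_deadline(last_intermediate_sent_at: datetime) -> datetime:
    """Final report: one month after the latest intermediate report.
    Assumption: same day next month, clamped to the month's last day."""
    ts = last_intermediate_sent_at
    year, month = (ts.year + 1, 1) if ts.month == 12 else (ts.year, ts.month + 1)
    day = min(ts.day, calendar.monthrange(year, month)[1])
    return ts.replace(year=year, month=month, day=day)


aware = datetime(2026, 1, 26, 8, 0)
# Classified quickly: the 4-hour limit binds.
print(dora_initial_deadline(aware, datetime(2026, 1, 26, 10, 0)))  # 2026-01-26 14:00:00
# Classified late: the 24-hour cap from awareness binds.
print(dora_initial_deadline(aware, datetime(2026, 1, 27, 6, 0)))   # 2026-01-27 08:00:00
print(dora_final_deadline(datetime(2026, 1, 31, 12, 0)))           # 2026-02-28 12:00:00
```

Taking the minimum of the two limits is the key design point: slow classification never extends the 24-hour outer bound.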

Publicly traded companies: SEC materiality disclosures

The SEC's cybersecurity disclosure rules require registrants to file Form 8-K disclosing material cybersecurity incidents within four business days of determining materiality. The SEC framework requires disclosure of "the material aspects of the incident's nature, scope, and timing, as well as its material impact or reasonably likely material impact." Unlike banking regulators focused on operational resilience, SEC disclosures emphasize investor materiality and financial impact.
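Because the SEC clock runs in business days, the filing deadline depends on weekends and holidays. A minimal sketch, assuming the caller supplies the federal holidays (none are built in) and that the determination date counts as day zero; `add_business_days` is a hypothetical helper.

```python
from datetime import date, timedelta


def add_business_days(start: date, days: int, holidays: frozenset = frozenset()) -> date:
    """Return the date `days` business days after `start`, skipping Saturdays,
    Sundays, and any dates in `holidays`. `start` itself is not counted."""
    current = start
    remaining = days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5 and current not in holidays:
            remaining -= 1
    return current


# Materiality determined Thursday, January 22, 2026.
print(add_business_days(date(2026, 1, 22), 4))  # 2026-01-28 (Wednesday)
# Determined Friday, January 16, 2026; Martin Luther King Jr. Day is Monday, January 19.
print(add_business_days(date(2026, 1, 16), 4, frozenset({date(2026, 1, 19)})))  # 2026-01-23
```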

Summary table:

| Jurisdiction | Regulation | Initial Deadline | Reporting Channel |
|---|---|---|---|
| US (Banks) | OCC/FDIC/Fed | 36 hours from determination | Supervisory office, BankNet |
| US (Bank Service Providers) | 12 CFR 53 | As soon as possible (4+ hour disruption) | Bank-designated contact |
| EU (Financial Entities) | DORA | 4 hours from classification as major, no later than 24 hours from awareness | Competent authority |
| US (Public Companies) | SEC Form 8-K | 4 business days from materiality determination | SEC EDGAR |

Core components of a FinTech-ready Incident Response Plan

You need documented, tested incident response procedures to pass regulatory exams. The NIST SP 800-61 framework provides the baseline, but FinTech environments require additional layers.

NIST SP 800-61 Revision 2 divides the incident response lifecycle into four phases: Preparation, Detection and Analysis, Containment/Eradication/Recovery, and Post-Incident Activity. (Revision 3, published in 2025, reorganizes this guidance around the NIST Cybersecurity Framework 2.0 functions.) Financial services teams add regulatory notification as a distinct workflow within the containment phase.

The non-negotiable components for FinTech incident response plans:

- Immutable timeline capture: Every action, decision, and status change recorded with timestamps and attribution. This is where most homegrown tools fail. Auditors specifically check for comprehensive logging mechanisms providing audit trails.

- Regulatory notification workflow: Automated prompts evaluating notification requirements. For banks, determining if it's a "notification incident" per OCC criteria. For EU entities, checking DORA major incident classification. For public companies, assessing SEC materiality.

- Evidence preservation procedures: Log retention, database snapshots, and configuration backups captured immediately upon incident declaration. SOC 2 compliance requires documented post-incident analysis to understand what happened, how it was remediated, and how to prevent recurrence.

- Status communication automation: Internal and external status pages updating automatically as incident status changes, ensuring transparent customer communication about service disruptions.
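One common way to make a timeline tamper-evident is hash chaining: each entry embeds the hash of the one before it. The sketch below illustrates the idea only; it is not how incident.io stores timelines, and the `Timeline` class is hypothetical. Hash chaining detects edits rather than preventing them, so in practice it is paired with access controls and write-once storage.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class Timeline:
    """Append-only incident timeline where each entry embeds the previous
    entry's SHA-256 hash, so any later edit breaks verification."""
    entries: list[dict] = field(default_factory=list)

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except "hash" itself, with keys in a stable order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, actor: str, action: str) -> dict:
        entry = {
            "at": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


timeline = Timeline()
timeline.append("incident-commander", "declared SEV1")
timeline.append("sarah", "restarted payments-api pods")
print(timeline.verify())  # True
timeline.entries[1]["actor"] = "someone-else"  # rewrite history
print(timeline.verify())  # False
```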

We automate these components through Workflows. Watch how Netflix scales incident response across thousands of microservices using workflow automation. One verified user in financial services confirms the value:

"The ease of use and the interface. It's so easy that for reporters across the company it's basically no training and for responders or users customizing workflows it's minimal... We can allow all business units to customize workflows for their specific process while having a consistent process overall across the company and we can keep track of any setting changes with audit logs going to DataDog." - Verified user on G2

Why generic incident tools fail in regulated environments

You're juggling PagerDuty for alerting, Slack for coordination, Jira for tracking, Google Docs for post-mortems, and Statuspage for customer updates. This tool sprawl creates compliance gaps and burns time: coordination overhead alone can take 10-15 minutes per incident just to assemble the team before troubleshooting starts.

The audit trail gap: Why manual entry isn't enough

Google Docs and Confluence pages are editable. Timestamps can be modified. Attribution is unclear. When an auditor asks "show me exactly who decided to roll back the deployment and at what time," a retrospectively written post-mortem from memory doesn't satisfy the requirement.

SOC 2 Type II audits check for "comprehensive monitoring and logging mechanisms to track system activities" and note that logs "provide an audit trail to help you piece together what happened after an incident." The audit examines whether incident response processes are documented, followed, and result in complete records.

Manual documentation fails because:

- Engineers write post-mortems 3-5 days after resolution when memories fade

- Critical decisions made in Slack threads get lost or summarized incorrectly

- Timestamps are approximate ("around 3 AM") rather than precise

- No clear record of who took which actions

- Editable formats allow post-incident modification (intentional or accidental)

We solve this through automatic timeline capture. Every Slack message in the incident channel, every /inc command, every role assignment, every workflow trigger gets recorded with millisecond timestamps and user attribution. The timeline is immutable. You can't edit history. When auditors ask for evidence, you export a complete, timestamped record generated during the incident, not reconstructed afterwards.

This automatic capture reduces post-mortem reconstruction time from 90 minutes to 10 minutes. You spend those 10 minutes refining AI-generated drafts instead of reconstructing timelines from memory.

One user describes the impact:

"The separation of functionality between Slack and the website is extremely powerful. This gives us the ability to have rich reporting and compliance controls in place without cluttering the experience and the workflow for the incident responders working to solve the incident during the live phase." - Joar S on G2

Data residency and encryption requirements

Financial services handle sensitive customer data subject to encryption requirements, data residency rules, and access controls. Generic collaboration tools don't provide the necessary security boundaries.

We provide SOC 2 certification, GDPR compliance, and encryption at rest and in transit. For enterprises, we support SAML SSO and SCIM provisioning to ensure incident access aligns with your corporate identity management.

Private incidents address a critical gap. When your security team investigates potential fraud or a data breach, you can't coordinate in a public Slack channel visible to 500 engineers. Our Private Incidents feature restricts channel access to authorized responders only. While alerts remain visible to maintain operational awareness, the incident channel, escalations created from the incident, and all response coordination stays restricted to authorized users. We maintain an audit trail of access grants and attempts. Read more about sensitive incident handling.

This matters for regulatory exams. When the OCC or FDIC reviews your incident response procedures during an audit, they expect controls preventing unauthorized access to sensitive incident data. Private channels with access logging satisfy this requirement. Ad-hoc Slack channels with 200 members don't.

How to automate compliance without slowing down response

Here's the core insight we've seen working with FinTech teams: the features that make incident response fast are the same features that make it compliant. Automated timeline capture saves you from manual documentation and simultaneously creates audit-ready records. This isn't compliance theater. It's compliance as a natural byproduct of efficient workflows.

Auto-capturing the timeline for immutable records

When a Datadog alert fires and triggers our workflow, we create a dedicated Slack channel, page the on-call engineer, pull in the service owner from your catalog, and start recording. Every subsequent action gets captured:

- Alert details and triggering conditions (imported from Datadog, Prometheus, etc.)

- /inc escalate command invoking additional responders

- Role assignments (Incident Commander, Communications Lead)

- Status updates posted via /inc update

- Pinned messages highlighting critical discoveries

- Zoom call start/end times with AI Scribe transcription

- Resolution timestamp via /inc resolve

The timeline is comprehensive. One engineer observes:

"incident.io allows us to focus on resolving the incident, not the admin around it. Being integrated with Slack makes it really easy, quick and comfortable to use for anyone in the company, with no prior training required." - Andrew J on G2

When the incident closes, you export a complete record showing exactly who did what and when. No 90-minute reconstruction session. No gaps where "we think Sarah restarted the pods around 3:15 AM but nobody documented it." We documented it automatically when Sarah ran the command.

For regulatory notifications, this matters critically. The OCC wants to know when you detected the issue, when you determined it was material, when you began containment, and when service was restored. Our timeline provides precise answers with evidence.
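With a captured timeline, answering those questions becomes a lookup rather than a reconstruction. A minimal sketch, assuming the timeline has already been exported as (timestamp, event kind) pairs; the event kind names and the `regulatory_milestones` helper are hypothetical, not incident.io's export format.

```python
from __future__ import annotations

from datetime import datetime

# Hypothetical event kinds; map your own timeline event types onto these.
MILESTONES = ("detected", "determined_material", "containment_started", "service_restored")


def regulatory_milestones(events: list[tuple[datetime, str]]) -> dict[str, datetime | None]:
    """Return the earliest recorded timestamp for each milestone (None if absent)."""
    found: dict[str, datetime | None] = {name: None for name in MILESTONES}
    for at, kind in sorted(events):
        if kind in found and found[kind] is None:
            found[kind] = at
    return found


events = [
    (datetime(2026, 1, 24, 2, 47), "detected"),
    (datetime(2026, 1, 24, 3, 5), "containment_started"),
    (datetime(2026, 1, 24, 3, 40), "determined_material"),
    (datetime(2026, 1, 24, 4, 10), "service_restored"),
]
m = regulatory_milestones(events)
print(m["determined_material"] - m["detected"])  # 0:53:00
print(m["service_restored"] - m["detected"])     # 1:23:00
```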

Using AI for root cause analysis in high-stakes environments

In FinTech, knowing why an incident happened is as important as fixing it, both for preventing recurrence and for regulatory reporting.

Our AI SRE capability automates up to 80% of incident response by correlating recent deployments, configuration changes, and infrastructure events to identify likely root causes. When your payment API starts throwing 500 errors at 3 AM, our AI checks recent code deployments, infrastructure changes, and dependency updates to surface the probable culprit. For a SEV1 incident, shaving 10 minutes off root cause identification means 10 fewer minutes of customer impact and 10 minutes closer to meeting your notification deadline.
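To make the correlation step concrete, here is a deliberately simple heuristic: keep changes that landed shortly before the alert and rank those touching the affected service first. This is a toy illustration under stated assumptions (a two-hour lookback, a known set of affected services, hypothetical `Change` records); it does not describe how incident.io's AI SRE works.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Change:
    at: datetime
    service: str
    kind: str  # e.g. "deploy", "config", "infra" (hypothetical labels)


def suspect_changes(
    changes: list[Change],
    alert_at: datetime,
    affected: set[str],
    lookback: timedelta = timedelta(hours=2),
) -> list[Change]:
    """Keep changes made within `lookback` before the alert, then rank changes to
    affected services first and, within each group, the most recent first."""
    window = [c for c in changes if alert_at - lookback <= c.at <= alert_at]
    return sorted(window, key=lambda c: (c.service not in affected, alert_at - c.at))


alert = datetime(2026, 1, 24, 3, 0)
changes = [
    Change(datetime(2026, 1, 23, 22, 0), "payments-api", "infra"),  # outside the window
    Change(datetime(2026, 1, 24, 2, 55), "search", "config"),
    Change(datetime(2026, 1, 24, 2, 40), "payments-api", "deploy"),
]
for c in suspect_changes(changes, alert, affected={"payments-api"}):
    print(c.at.time(), c.service, c.kind)
# 02:40:00 payments-api deploy
# 02:55:00 search config
```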

We also provide AI-powered post-mortem generation. Because we capture timelines automatically, our AI drafts an 80% complete post-mortem using actual event data rather than engineer recollections. You spend 10 minutes refining and adding context instead of 90 minutes writing from scratch.

One reviewer notes the workflow benefits:

"Clearly built by a team of people who have been through the panic and despair of a poorly run incident. They have taken all those learnings to heart and made something that automates, clarifies and enables your teams to concentrate on fixing, communicating and, most importantly, learning from the incidents that happen." - Rob L. on G2

Integrating with existing compliance and security tools

Financial services already use tools like ServiceNow for IT service management, Jira for task tracking, and Splunk for log aggregation. Rip-and-replace isn't realistic.

We integrate with Vanta to synchronize incident follow-ups with SOC 2, ISO 27001, and other security standards. When your Vanta audit requires evidence that incidents are tracked and remediated, our integration automatically syncs incident records and follow-up task completion.

For enterprises, audit logs stream to Splunk SIEM for centralized security monitoring. Every workflow edit, role change, and private incident access attempt gets logged and exportable for compliance review.

We also support internal and public status pages to keep employees and customers informed. When the OCC asks "how did you communicate service disruption to customers," you point to timestamped status page updates generated automatically from incident status changes.

90-day roadmap: Modernizing incident response for financial services

Moving from ad-hoc Slack channels and Google Docs to a compliance-first platform requires planning. Here's a realistic 90-day implementation timeline for a 100-person FinTech engineering team.

Days 1-30: Foundation and centralization

Start with the basics: "Establish clear roles and make sure everyone knows their responsibilities. Create simple documentation templates for incident tracking. Set up basic communication channels for incident coordination."

Actions this month:

- Install incident.io and connect it to Slack. Integrate existing monitoring and alerting tools (Datadog, Prometheus, PagerDuty) using the migration guides.

- Define incident severity levels (P0-P4) with clear criteria for regulatory reporting thresholds. Configure on-call schedules and escalation paths.

- Train your team on /inc slash commands and document incident roles (Incident Commander, Communications Lead, Technical Lead).

- Run 2-3 practice incidents using the sandbox feature to build muscle memory.

By day 30, your team should handle their first real incident using incident.io instead of creating ad-hoc Slack channels manually.

Days 31-60: Automation and workflows

Begin conducting post-mortems and "gradually automate manual processes as your team matures."

Actions this month:

- Build automated workflows triggering on SEV1 incidents to auto-page VP Engineering, create Jira tickets, and update internal status pages.

- Configure post-mortem templates specific to financial services with sections for regulatory notification evaluation and customer impact.

- Set up service catalog linking services to owners, runbooks, and dependencies.

- Create dedicated workflows for security incidents using Private Incidents.

By day 60, incidents should trigger automated response workflows requiring minimal manual coordination.
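The SEV1 and security workflows in this phase follow a trigger-and-actions pattern. In incident.io they are configured in the product rather than in code; the sketch below only models the pattern, and every name in it (`WorkflowRule`, `actions_for`, the action strings) is hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Incident:
    severity: str
    private: bool = False


@dataclass(frozen=True)
class WorkflowRule:
    name: str
    condition: Callable[[Incident], bool]  # when the rule fires
    actions: tuple[str, ...]               # what it does, in order


RULES = (
    WorkflowRule(
        name="sev1-escalation",
        condition=lambda inc: inc.severity == "SEV1",
        actions=("page:vp-engineering", "create:jira-ticket", "update:internal-status-page"),
    ),
    WorkflowRule(
        name="security-incident",
        condition=lambda inc: inc.private,
        actions=("restrict:channel-to-authorized-responders",),
    ),
)


def actions_for(incident: Incident, rules: tuple[WorkflowRule, ...] = RULES) -> list[str]:
    """Collect the actions of every rule whose condition matches, in rule order."""
    return [action for rule in rules if rule.condition(incident) for action in rule.actions]


print(actions_for(Incident("SEV1")))
print(actions_for(Incident("SEV2", private=True)))
```

Keeping conditions and actions as data means each business unit can add its own rules while every incident still flows through the same evaluation step.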

Days 61-90: Measurement and refinement

Focus on measuring progress: "Measure your progress, and continuously refine your approach based on what you learn from each incident."

Actions this month:

- Review MTTR and acknowledgment metrics in the Insights dashboard to identify process bottlenecks.

- Conduct a notification drill simulating a material incident to test your 36-hour reporting workflow end-to-end.

- Export sample timelines and post-mortems for mock audit review.

- Document ROI: time saved per incident, MTTR improvement, on-call satisfaction scores.

By day 90, you have a production-grade incident management system generating audit-ready documentation automatically and data proving the investment's value.
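MTTR itself is simple arithmetic over detection and resolution timestamps. The sketch below uses hypothetical incident data purely to show the calculation; `mttr` is an illustrative helper, and teams may define the start point differently (for example, alert time versus declaration time).

```python
from __future__ import annotations

from datetime import datetime, timedelta
from statistics import mean


def mttr(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Mean time to resolve: the average of (resolved_at - detected_at)."""
    seconds = [(resolved - detected).total_seconds() for detected, resolved in incidents]
    return timedelta(seconds=mean(seconds))


# Hypothetical (detected_at, resolved_at) pairs before and after rollout.
before = [
    (datetime(2026, 1, 5, 2, 47), datetime(2026, 1, 5, 4, 17)),  # 90 minutes
    (datetime(2026, 1, 9, 10, 0), datetime(2026, 1, 9, 11, 0)),  # 60 minutes
]
after = [
    (datetime(2026, 3, 2, 14, 0), datetime(2026, 3, 2, 14, 45)),  # 45 minutes
    (datetime(2026, 3, 6, 9, 0), datetime(2026, 3, 6, 9, 35)),    # 35 minutes
]
print(mttr(before), mttr(after))  # 1:15:00 0:40:00
print(f"MTTR improvement: {1 - mttr(after) / mttr(before):.0%}")  # MTTR improvement: 47%
```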

Watch how Bud Financial improved their incident response following a similar implementation timeline.

Proof of compliance readiness

When your next SOC 2 audit arrives or the OCC schedules a regulatory exam, you need evidence that incident response procedures exist, are followed, and create complete records.

We provide:

- Immutable audit logs tracking every configuration change, workflow edit, and private incident access attempt

- Complete timeline exports showing the full incident lifecycle with timestamps and attribution

- Post-mortems generated from actual event data rather than engineer recollection

- Integration with compliance platforms like Vanta to sync incident records with security standards

- Role-based access controls and SAML SSO ensuring incident access aligns with corporate identity policies

- Evidence of continuous improvement through Insights dashboards showing MTTR trends and incident volume patterns

One financial services user confirms the compliance value:

"We can allow all business units to customize workflows for their specific process while having a consistent process overall across the company and we can keep track of any setting changes with audit logs going to DataDog. It gives us the flexibility and oversight we need." - Verified User on G2

We don't just help you pass audits. We make compliance a natural output of your response process rather than a separate administrative burden.

Moving forward

Financial services incident management balances speed and safety. Regulatory deadlines create time pressure. Customer impact creates business pressure. Audit requirements create documentation pressure. Manual processes can't handle all three simultaneously.

The solution is automating the administrative overhead. We capture timelines automatically, prompt regulatory notifications based on severity, and generate audit-ready documentation from actual event data. You focus on resolution rather than paperwork. Compliance becomes a byproduct of efficient incident response instead of a distraction from it.

If your team handles incidents using ad-hoc Slack channels and reconstructs post-mortems from memory days later, you're one regulatory exam away from painful audit findings. Teams using incident.io reduce MTTR by up to 80% while automatically generating the audit trails regulators expect.

Try incident.io free and run your first incident with automated timeline capture and compliance-ready documentation. Or book a demo to see Private Incidents, audit logs, and regulatory notification workflows in action.

Key terminology

Material incident: Under OCC rules, a computer-security incident that materially disrupts or degrades banking operations, service delivery to customers, or threatens US financial stability. Triggers mandatory 36-hour notification requirement.

Financial Market Utility (FMU): According to the Federal Reserve, multilateral systems providing infrastructure for transferring, clearing, and settling payments, securities, or financial transactions. Designated FMUs include CHIPS, CME, and DTC.

Immutable audit trail: A complete incident record capturing every action, decision, and status change with precise timestamps and user attribution. It cannot be edited after creation and is required for regulatory compliance and post-incident investigation.

Notification incident: OCC term for computer-security incidents meeting materiality thresholds requiring regulator notification within 36 hours. Distinct from general security incidents not meeting reporting criteria.

DORA major incident: Under the Digital Operational Resilience Act, an incident classified as major based on service criticality, client impact, reputational damage, duration, geographic spread, data losses, or economic impact. Triggers an initial notification due within 4 hours of classification and no later than 24 hours after the entity becomes aware of the incident.
