Opsgenie Sunset Migration: 90-Day Jira + Slack On-Call Integration Plan

Updated December 23, 2025

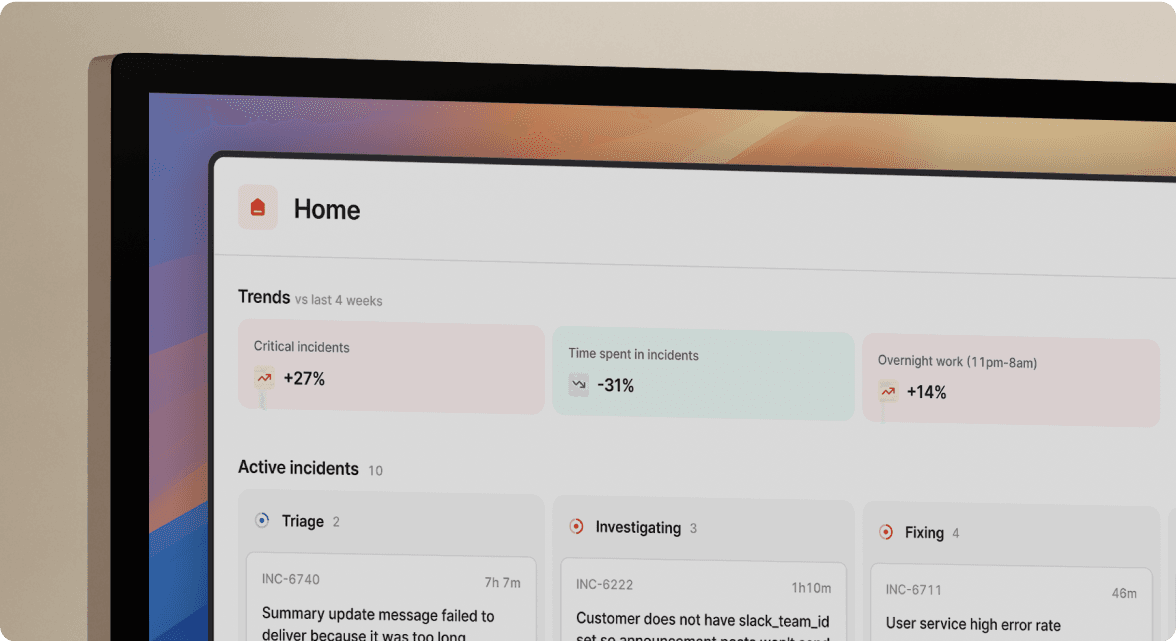

TL;DR: Atlassian is shutting down Opsgenie on April 5, 2027, which forces a migration decision. Don't just swap in another alerting tool like PagerDuty. Instead, consolidate on-call scheduling, incident response, and Jira tracking into Slack where your team already works. This 90-day plan eliminates the "swivel-chair" coordination tax that adds 10-15 minutes to every incident. incident.io's bi-directional Jira sync means engineers work in Slack while management gets the Jira reporting they need. The result: up to 80% faster MTTR, zero missed alerts during migration, and hundreds of engineer-hours reclaimed annually.

The hidden cost of "swivel-chair" incident response

When a P1 incident fires, the coordination overhead starts before troubleshooting does. Acknowledge the Opsgenie alert, manually create a Slack channel, paste the alert link, tag engineers, open Jira to create a ticket, update the status page. Twelve minutes of administrative work before anyone examines logs.

This coordination tax isn't a training problem. It's tool sprawl creating what the industry calls "swivel-chair" response: constant context switching between systems that delays actual incident resolution.

The data is stark. Workers lose an average of 51 minutes per week to tool fatigue, adding up to over 44 hours of lost time per year. For incident responders managing frequent escalations, more than 1 in 5 workers (22%) lose 2+ hours weekly. Interrupted tasks take twice as long and contain twice as many errors as uninterrupted work.

When your team handles 20 incidents monthly at a $190 loaded hourly cost, eliminating those 10-15 wasted minutes per incident saves up to $11,400 annually in coordination time alone (the 15-minute end of that range).
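The arithmetic behind that figure can be checked with a short sketch. The function name and parameters are illustrative, not part of any tool; the inputs are the ones quoted above (20 incidents a month, $190 loaded hourly cost).

```python
def annual_coordination_cost(minutes_per_incident: float,
                             incidents_per_month: int = 20,
                             loaded_hourly_cost: float = 190.0) -> float:
    """Yearly cost of per-incident coordination overhead, in dollars."""
    hours_per_year = minutes_per_incident * incidents_per_month * 12 / 60
    return hours_per_year * loaded_hourly_cost


low = annual_coordination_cost(10)   # 10 wasted minutes per incident
high = annual_coordination_cost(15)  # 15 wasted minutes per incident
print(f"${low:,.0f} to ${high:,.0f} per year")  # $7,600 to $11,400 per year
```

Swap in your own incident volume and loaded cost to see where your team falls in that range.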

The Opsgenie sunset on April 5, 2027 isn't a crisis. It's an opportunity to fix this broken workflow before Atlassian forces your hand.

Why Slack-native on-call beats the "alert-only" model

The old model separates alerting from coordination. PagerDuty or Opsgenie page you, Slack is where you coordinate, Jira is where you track follow-ups, Confluence is where post-mortems live, and Statuspage is where customers get updates. Five tools for one incident.

The Slack-native model moves the entire workflow into the platform where your team already collaborates. Alert fires from Datadog, incident.io automatically creates a dedicated channel, pulls in on-call responders based on your service catalog, starts capturing timeline data, and creates the Jira ticket without anyone lifting a finger.

Engineering teams report immediate adoption advantages. One team noted that "the Slack integration makes reporting and following incidents easy, which was the original reason for signing up", while another found that incident.io has "by far the quickest and most efficient way to get started with incident management" specifically because the tool disappears into their existing workflow.

The difference matters during actual incidents. Your engineers type /inc escalate platform-team in Slack, and incident.io handles the routing, updates the Jira ticket status, notifies the right people via SMS and phone, and logs every action automatically. No browser tabs. No remembering which tool does what. No coordination tax.

Phase 1: Establishing bi-directional sync between Jira and Slack (days 1-30)

The foundation of eliminating swivel-chair coordination is connecting your system of engagement (Slack) with your system of record (Jira) so updates flow automatically in both directions. This phase establishes the integration with parallel-run capability built in from day one.

Set up the Atlassian Marketplace integration

Use the Atlassian Marketplace app to connect your incident.io organizations directly to your Jira Cloud instances. You'll need Jira site admin permissions to install and configure the app. The setup takes 15-30 minutes.

During setup, head to Settings → Incident tickets in the incident.io dashboard. Before saving, add a template and configure any conditions for routing incidents to specific Jira projects.

Configure field mapping with conditional routing

When syncing information with Jira, specify project, issue type, and required fields using either static text or fields powered by variables. The real power is conditional routing. Map P0/P1 incidents to your "Production Incidents" Jira project with immediate escalation, while P2/P3 incidents route to standard development backlogs.

If there are no conditions specified, the system uses the first template in the list and creates a ticket for all incidents. For most teams, configuring 3-4 templates covers all incident types.
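As a mental model, template selection can be sketched as a first-match scan over an ordered list. This is an illustration only, not incident.io's implementation: the `TicketTemplate` class, the severity-based condition, the "Development Backlog" project name, and the issue types are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TicketTemplate:
    """Hypothetical stand-in for a Jira export template."""
    jira_project: str
    issue_type: str
    severities: set[str] = field(default_factory=set)  # empty set = no condition

    def matches(self, severity: str) -> bool:
        # A template without conditions accepts every incident.
        return not self.severities or severity in self.severities


def pick_template(templates: list[TicketTemplate],
                  severity: str) -> Optional[TicketTemplate]:
    """Return the first template whose condition matches, in list order."""
    for template in templates:
        if template.matches(severity):
            return template
    return None


templates = [
    TicketTemplate("Production Incidents", "Incident", {"P0", "P1"}),
    TicketTemplate("Development Backlog", "Bug", {"P2", "P3"}),
]
print(pick_template(templates, "P1").jira_project)  # Production Incidents
```

The sketch also shows why list order matters: an unconditional template placed first would catch every incident before the more specific ones are considered.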

Understand the status sync approach

Direct status synchronization is not available between incident.io and Jira, but you can implement a workaround using string custom fields and Jira automation rules. Create a string custom field in Jira to store incident status, configure incident.io's Jira export template to sync the status to this field, then use Jira automation rules to update the native status field.

This limitation matters less in practice because incident.io automatically syncs the incident ticket when the incident changes, keeping fields and variables current. Engineers work in Slack, not Jira, so the Jira ticket serves as the system of record for reporting rather than the live coordination interface.
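The decision the Jira automation rule has to encode in the status workaround is a simple lookup from the synced text to a workflow status. A minimal sketch follows; the incident status names and the Jira workflow statuses in the table are assumptions, so replace them with the values your own configuration uses.

```python
# Hypothetical mapping: incident status text (as written to the string custom
# field) -> Jira workflow status the automation rule should transition to.
STATUS_MAP = {
    "investigating": "In Progress",
    "fixing": "In Progress",
    "monitoring": "In Review",
    "closed": "Done",
}


def target_jira_status(custom_field_value: str, default: str = "To Do") -> str:
    """Normalise the synced text and look up the Jira status to move to."""
    return STATUS_MAP.get(custom_field_value.strip().lower(), default)


print(target_jira_status(" Fixing "))  # In Progress
```

Writing the table down before building the automation rule makes it easier to spot incident statuses that have no sensible Jira equivalent.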

Test with a pilot team

Run 5-10 test incidents with a small team. Verify that Slack updates reflect in Jira and follow-up tasks export correctly. Anyone with access to the chosen Jira project will be able to see the incidents, so validate permissions match your security requirements before rolling out company-wide.

Phase 2: Automating ticket creation and status updates (days 31-60)

Phase 1 established the connection. Phase 2 removes humans from the administrative loop entirely while maintaining zero-downtime capability through parallel alert routing.

Configure alert-triggered incident creation

Connect your monitoring tools (Datadog, Prometheus, New Relic) so that alerts automatically create incidents when something fires, removing unnecessary friction from the incident response process. Configure database latency alerts to automatically create a P1 incident, page the database on-call engineer, create a Jira ticket in the Infrastructure project, and notify the #database-oncall Slack channel.

One engineering team captures the value:

"incident.io makes handling crisis or live issues a breeze... The Slack integration lets our team not have to think about yet another service." - Verified user review of incident.io

Set up on-call schedule imports for parallel operation

incident.io supports importing schedules and escalation paths from both PagerDuty and Opsgenie, allowing you to run parallel systems during the migration period. This parallel-run approach is critical for zero-downtime migration.

To build schedules, head to On-call within the dashboard, go to Schedules and create a new schedule. You have options for Daily, Weekly, or Monthly rotations. For asymmetric shifts, create time intervals within hours, days, and weeks.

For global teams, advanced rotas support follow-the-sun coverage where different locations are on-call during different times of day. Configure your UK team to be on-call from 9 AM to 8 PM GMT daily, and your US team from 8 PM to 9 AM GMT, for 24-hour coverage.
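Before entering a split like this in the dashboard, it helps to confirm the windows leave no gap. The sketch below assumes both windows are expressed in GMT and that the handover happens exactly at 9 AM and 8 PM; the function and constant names are illustrative.

```python
from datetime import datetime, time, timezone

# Shift window from the example above, expressed in GMT/UTC.
UK_START = time(9, 0)
UK_END = time(20, 0)


def on_call_team(moment: datetime) -> str:
    """Return which team covers a timezone-aware instant ('UK' or 'US')."""
    gmt = moment.astimezone(timezone.utc).time()
    return "UK" if UK_START <= gmt < UK_END else "US"


handover = datetime(2026, 1, 5, 20, 0, tzinfo=timezone.utc)
print(on_call_team(handover))  # US
```

Pass timezone-aware datetimes only; a naive datetime would be interpreted in the local timezone of whatever machine runs the check.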

Validate notification routing across all channels

Test that SMS, phone calls, email, and Slack notifications reach the right people at the right time. Configure manual escalations for teams still using legacy tools. If you're mid-migration with some teams on PagerDuty or Opsgenie and others on incident.io, you can pick a Team and the system will figure out escalation paths based on what's configured for that team in Catalog.

Phase 3: Full migration and Opsgenie deprecation (days 61-90)

Phase 3 is the cutover, designed for zero missed alerts. Your goal: deprecate Opsgenie completely while maintaining 100% alert delivery continuity through a controlled parallel-run period.

The parallel-run approach means both Opsgenie and incident.io receive alerts simultaneously for 14 days. This validates routing parity, catches edge cases, and provides fallback protection. Because schedules and escalation paths were imported from Opsgenie in Phase 2, you can validate complete parity before deprecating legacy tools.

Export historical Opsgenie data

Use the Opsgenie API to retrieve incident details, alert history, and assignments in JSON format. For simpler alert data, CSV export is available from the dashboard. The GET Schedule API retrieves on-call schedules in JSON that you can convert to CSV.
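A minimal sketch of the JSON-to-CSV step is shown below. It assumes the Opsgenie schedule list endpoint (`GET /v2/schedules`) with `GenieKey` authentication and a response whose `data` list carries `id`, `name`, `timezone`, `enabled`, and `ownerTeam` fields; check these against the current API reference before relying on them. EU-hosted accounts use a different base URL. The column selection is an illustrative choice.

```python
import csv
import io
import json
import urllib.request

# Assumed endpoint for US-hosted accounts.
OPSGENIE_SCHEDULES_URL = "https://api.opsgenie.com/v2/schedules"


def fetch_schedules(api_key: str) -> dict:
    """Call the schedule list endpoint; the GenieKey header carries the API key."""
    request = urllib.request.Request(
        OPSGENIE_SCHEDULES_URL,
        headers={"Authorization": f"GenieKey {api_key}"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def schedules_to_csv(payload: dict) -> str:
    """Flatten the 'data' list of a schedules response into CSV text."""
    columns = ["id", "name", "timezone", "enabled", "owner_team"]
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    for schedule in payload.get("data", []):
        writer.writerow({
            "id": schedule.get("id", ""),
            "name": schedule.get("name", ""),
            "timezone": schedule.get("timezone", ""),
            "enabled": schedule.get("enabled", ""),
            # ownerTeam may be absent for schedules without a team.
            "owner_team": (schedule.get("ownerTeam") or {}).get("name", ""),
        })
    return buffer.getvalue()
```

In practice you would call `schedules_to_csv(fetch_schedules(api_key))` and write the result to a file kept alongside your other migration records.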

Build a mapping table that translates Opsgenie fields to incident.io's equivalents. Map P1 to Critical severity, P2 to High, and so on. This ensures reporting continuity when you show quarterly incident trends to leadership.
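The mapping table can start as a plain dictionary applied to the exported alerts. In this sketch only the P1 and P2 pairs come from the text above; the remaining Opsgenie priorities are deliberately left unmapped so they surface for a manual decision. The `priority` key on each alert record and the function name are assumptions for illustration.

```python
# Only these two pairs are taken from the text; extend the table with your
# own choices for the remaining Opsgenie priorities.
PRIORITY_TO_SEVERITY = {"P1": "Critical", "P2": "High"}


def translate_alerts(alerts: list[dict]) -> tuple[list[dict], list[dict]]:
    """Split exported alerts into (translated, needs_review)."""
    translated, needs_review = [], []
    for alert in alerts:
        severity = PRIORITY_TO_SEVERITY.get(alert.get("priority"))
        if severity is None:
            # No mapping yet: keep the record untouched for manual review.
            needs_review.append(alert)
        else:
            translated.append({**alert, "severity": severity})
    return translated, needs_review


done, pending = translate_alerts([
    {"id": "a1", "priority": "P1"},
    {"id": "a2", "priority": "P4"},
])
print(len(done), len(pending))  # 1 1
```

Keeping unmapped records in a separate list avoids silently assigning a default severity that would distort quarterly trend reports.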

Run parallel systems and validate parity

Keep both systems receiving alerts simultaneously for the full 14 days. Monitor for missed pages and routing failures, and document every edge case where alert routing behaves differently between systems. Recording the parallel run this way reduces compliance risk, because the migration is handled as a compliance project rather than just a tooling swap.
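One way to make the parity check concrete is to compare, per alert, who each system paged. The sketch below assumes you can export from both tools a mapping of some shared alert identifier to the set of responders paged; that identifier and the function name are hypothetical.

```python
def parity_report(opsgenie: dict[str, set[str]],
                  incidentio: dict[str, set[str]]) -> dict[str, list[str]]:
    """Compare alert_key -> responders paged, as recorded by each system."""
    # Alerts Opsgenie paged for but incident.io never saw.
    missed = sorted(set(opsgenie) - set(incidentio))
    # Alerts only incident.io paged for.
    extra = sorted(set(incidentio) - set(opsgenie))
    # Alerts both systems saw but routed to different people.
    mismatched = sorted(
        key for key in set(opsgenie) & set(incidentio)
        if opsgenie[key] != incidentio[key]
    )
    return {"missed": missed, "extra": extra, "mismatched": mismatched}


report = parity_report(
    {"db-latency": {"alice"}, "api-5xx": {"bob"}},
    {"db-latency": {"alice"}, "api-5xx": {"carol"}},
)
print(report)  # {'missed': [], 'extra': [], 'mismatched': ['api-5xx']}
```

Running a comparison like this daily during the 14 days gives you a written record of every divergence, which doubles as cutover evidence.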

Cutover and archive Opsgenie

Once you've validated 100% parity in alert delivery and escalation routing, cut over entirely. Archive your Opsgenie account but maintain read-only access for 30 days so you can reference historical incidents if questions arise during post-mortems. Proactively account for vacation time and configure overrides so shift swaps don't break during the cutover period.

How incident.io unifies on-call, Jira, and Slack automatically

The unified platform eliminates the tool sprawl that created coordination overhead in the first place.

When an alert fires, incident.io automatically creates a dedicated channel, assigns an incident lead, pulls in the service owner from your catalog, and starts capturing timeline data. Engineers manage everything with slash commands: /inc escalate, /inc assign, /inc severity critical. The timeline capture is automatic: every Slack message, role assignment, and status update gets recorded.

Information on the Jira ticket such as title, assignee, and completion is synced back to incident.io, updating follow-ups automatically. Engineers never leave Slack to update tickets. Product managers reviewing Jira never wonder if ticket status reflects reality.

For on-call scheduling, incident.io's solution includes Alerts, Schedules, Escalation Paths, and Notifications across SMS, phone, email, Slack, and mobile app. When a team member goes on vacation, create one-time overrides without modifying your current schedule. For global teams, advanced rotas support follow-the-sun coverage where different locations handle different times of day.

This matters for compliance. If your SOC 2 auditor asks "show me the complete incident timeline with every action taken," you export the Jira ticket with full timeline intact. No manual reconstruction. No gaps in the audit trail.

Comparison: Native Jira integration vs. incident.io vs. custom bots

| Feature | Native Jira Cloud App | Custom Script/Bot | incident.io |
|---|---|---|---|
| Setup time | 15-30 minutes | 2-4 weeks development | 1-2 days operational |
| Bi-directional sync | Limited (comments only) | Manual configuration required | Automatic field sync |
| On-call routing | Not included | Manual integration with PagerDuty/Opsgenie | Built-in schedules and escalations |
| Auto-create incident channels | No | Yes (with custom code) | Yes (out of the box) |
| Post-mortem export | Manual | Custom implementation | Auto-drafted from timeline |
| Maintenance required | None (vendor-managed) | High (breaks on API changes) | None (vendor-managed) |
| Cost | Free (included with Jira) | Engineer time ($40-80k/year) | $45/user/month with on-call |

The native Jira Cloud for Slack app provides basic functionality: preview issues when mentioned, create tickets, see notifications. But it lacks true bi-directional sync where Slack actions update Jira fields automatically, and it doesn't support on-call routing or incident coordination workflows.

Custom bots give you infinite flexibility but require ongoing maintenance. Based on analysis of multiple GitHub repositories implementing Jira-Slack bots, typical implementations require manual field mapping that breaks whenever Jira or Slack change their APIs.

One team's experience captures the tradeoff:

"It's tempting to build a Slack-based incident system yourself, don't. incident.io has a very responsive and competent team. They have built a system with sane defaults and building blocks to customize everything." - Verified user review of incident.io

Why incident.io won't be the next Opsgenie

The "another vendor sunset" fear is real after Atlassian's Opsgenie deprecation announcement. Here's why incident.io represents a different bet:

We raised a $62M Series B in April 2025 with 600+ customers including Netflix, Etsy, and Vercel. We're not a feature inside a larger suite that can be sunsetted. Incident management is our entire business. We ship 200+ features quarterly on a public roadmap, and we maintain 99.99% uptime SLA with SOC 2 Type II certification.

For teams burned by vendor lock-in, we provide complete data export via API so you're never trapped. But our customer retention rate suggests teams don't leave once they consolidate their incident stack into Slack.

Cost comparison: Opsgenie to incident.io migration

For a 100-person team, the math is straightforward:

Current Opsgenie spend: ~$40,000/year (typical for 100 users with on-call)

incident.io Pro plan: $45/user/month × 100 users × 12 months = $54,000/year

But the effective cost drops when you account for consolidation:

- Eliminate separate status page tool: Statuspage.io costs $29-99/month ($348-1,188/year saved)

- Eliminate custom bot maintenance: Engineering time maintaining Jira-Slack scripts costs 40-80 hours/year at $190 loaded hourly = $7,600-15,200/year saved

- Reclaim coordination overhead: 15 minutes saved per incident × 20 incidents/month × 12 months × $190/hour loaded cost = $11,400/year value

Net effective cost, using the low end of the status page and bot-maintenance ranges: $54,000 - $19,348 = $34,652/year, below your current Opsgenie spend while consolidating three tools into one.
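The net figure can be reproduced with a few lines, which also makes it easy to substitute your own numbers. The function and parameter names are illustrative; the defaults use the low end of each quoted range, and the $11,400 coordination value corresponds to 15 minutes saved per incident.

```python
def net_effective_cost(seats: int = 100,
                       price_per_user_month: float = 45,
                       status_page_monthly: float = 29,
                       bot_hours_per_year: float = 40,
                       coordination_minutes: float = 15,
                       incidents_per_month: int = 20,
                       loaded_hourly_cost: float = 190) -> float:
    """List price minus the three consolidation savings, in dollars per year."""
    list_price = seats * price_per_user_month * 12
    savings = (
        status_page_monthly * 12                      # separate status page tool
        + bot_hours_per_year * loaded_hourly_cost     # custom bot maintenance
        + coordination_minutes * incidents_per_month * 12 / 60 * loaded_hourly_cost
    )
    return list_price - savings


print(f"${net_effective_cost():,.0f}")  # $34,652
```

With the high end of the status page and bot-maintenance ranges ($99/month and 80 hours/year) the same function returns $26,212.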

The result: 30% lower MTTR and zero missed alerts

The consolidated platform delivers measurable improvements across three dimensions.

MTTR reduction through eliminated coordination overhead

Teams report significant MTTR improvements after migration. Favor reduced MTTR by 37%. Buffer saw a 70% reduction in critical incidents. The reduction comes from eliminating the 10-15 minutes of coordination overhead per incident. For a 100-person team handling 20 incidents monthly, coordination savings alone total $11,400 annually at typical loaded hourly costs.

Engineer hours reclaimed for proactive work

Post-mortems that previously took 90 minutes of manual reconstruction now take 10 minutes to refine because the timeline and key decisions are already captured. Multiply that 80-minute savings across 20 incidents per month, and your team reclaims 26+ hours monthly for reliability improvements instead of documentation archaeology.

Alert delivery continuity during migration

The parallel-run migration approach means zero missed pages during cutover. Engineering teams from 2 to 2,000 have migrated to incident.io On-call successfully using this approach, with no incidents attributed to migration issues.

Key integrations and their benefits

The platform connects to your existing stack rather than replacing it:

- Monitoring tools (Datadog, Prometheus, New Relic, Sentry, Grafana) trigger automatic incident creation based on alert conditions

- Task tracking (Jira, Linear, GitHub Issues) receive automatic follow-up tasks with full incident context

- Documentation (Confluence, Notion, Google Docs) receive exported post-mortems with complete timelines

- Status pages: incident.io includes status pages in all paid plans, whereas PagerDuty users need separate Statuspage.io subscriptions

Security and compliance considerations

Incident management platforms touch sensitive production data and must meet enterprise security requirements.

SOC 2 Type II certification

incident.io maintains SOC 2 Type II certification, covering security, availability, processing integrity, confidentiality, and privacy controls. The certification requires independent audit of controls over a 6-12 month observation period.

For teams maintaining their own SOC 2 compliance, this playbook treats migration as a compliance project, not just a tooling swap. Document your parallel-run period, maintain audit trails during cutover, and retain incident data for at least 3 years to cover multiple audit cycles.

GDPR and data residency

incident.io complies with GDPR, UK GDPR, Data Protection Act 2018, and Privacy and Electronic Communications Regulations 2003. The platform includes role-based access control, audit logs, and encryption at rest and in transit to meet security and compliance needs. Enterprise plans add SAML/SCIM for centralized identity management.

Jira permission inheritance

When exporting incidents to Jira, anyone with access to the chosen Jira project can see them. For security incidents or sensitive production issues, use incident.io's private incidents feature (available on Pro and Enterprise plans) to restrict Slack channel access, then route to private Jira projects with limited permissions.

Ready to consolidate your incident stack?

The Opsgenie sunset by April 2027 gives you 15 months to migrate, but waiting until Q4 2026 puts you at risk if the migration hits unexpected complications. Starting now gives you time to validate in parallel before the deadline.

Schedule a demo to see how incident.io supports a structured 90-day migration plan. Start with Phase 1’s bi-directional Jira sync, add Phase 2’s alert automation, and complete Phase 3’s parallel-run validation with zero missed alerts.

For teams handling the Opsgenie migration, download the migration tools that import schedules and escalation paths directly. We’ve helped engineering teams from 2 to 2,000 migrate to incident.io On-call using parallel-run configurations that validate parity before deprecating legacy tools.

Terminology

Two-way sync (bi-directional sync): A data connection where updates in one tool (Slack) reflect in the other (Jira) and vice versa. Fields such as title, assignee, and follow-up completion sync automatically without manual copying; incident status relies on the custom-field workaround described in Phase 1.

Slack-native: Workflows that occur entirely within the Slack interface via slash commands and channel interactions, without requiring a web browser. The tool feels like using Slack rather than using a separate application that sends notifications to Slack.

MTTR: Mean Time To Resolution measures the average time from when an incident is detected to when it's fully resolved and normal service is restored. Reducing MTTR by eliminating coordination overhead is the primary goal of consolidating incident management tools.

Swivel-chair coordination: The practice of manually copying information between multiple tools during incident response, named after the physical act of swiveling between computer screens. This context switching adds 10-15 minutes of overhead per incident.

Parallel run: Operating two systems simultaneously during a migration period to ensure no data loss and provide a fallback option if issues arise. For incident management, this means both Opsgenie and incident.io receive alerts during the validation period.

Escalation path: A predefined sequence of notifications and escalations that determines who gets paged if the primary on-call engineer doesn't acknowledge an alert within a specified timeframe. Essential for 24/7 coverage and weekend incidents.
