Why we're hiring AI Engineers

Over the last 9 months, we’ve been building some of the most ambitious AI-native features in our product. Agents that can investigate incidents in real time. Systems that identify likely root causes. AI that writes exec-ready summaries without being prompted. Natural language interfaces that let engineers ask questions like “what changed before this broke?” and get useful answers.

To do this, we had to fundamentally re-evaluate how we built AI products at incident.io. We built a new kind of infrastructure: one that supports orchestrating complex agents, running evals, and putting safety rails around probabilistic systems.

In the 3.5 years we’ve been building incident.io, we’ve always hired Product Engineers. Now we’re launching a new role, AI Engineer, to go further, faster. Why are we changing our approach?

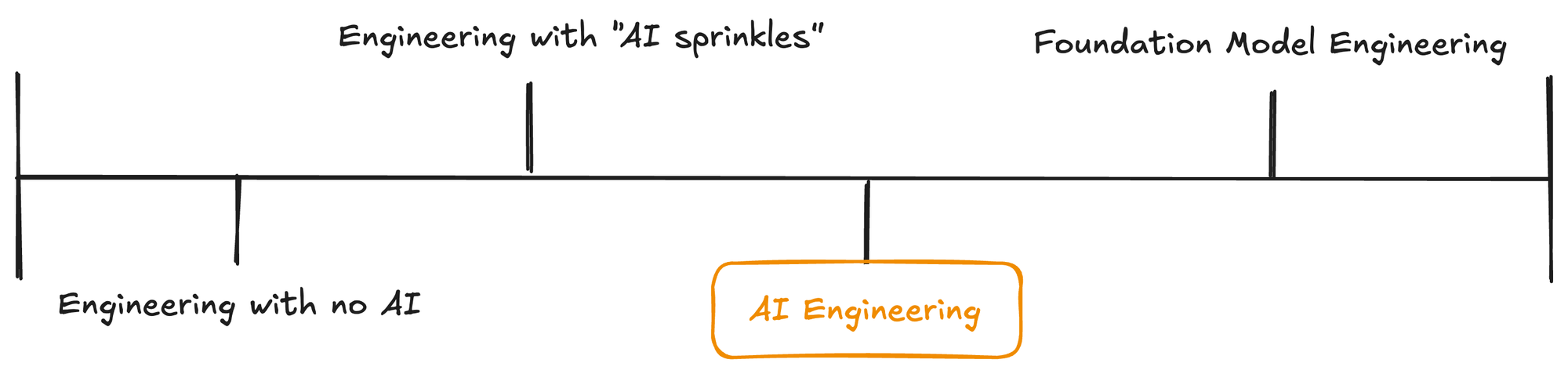

The spectrum of AI in product development

AI shows up in software in different ways, and where you sit on that spectrum changes your job significantly. Here’s one way to think about it:

Engineering with no AI

Purely deterministic software. Logic you control end-to-end. No LLMs, no prompts, just code that does exactly what you tell it to. We've all been doing this for years.

Engineering with “AI sprinkles”

This is where most companies with existing products start.

You take a small part of the product, send some context to an LLM, and slot the result back into the existing product. This is usually a “delight” moment, but not fundamental or critical product functionality. Maybe you’re automatically pre-filling a field, summarising unstructured content, or generating suggestions.

It works. But if the LLM went down, most of your core product would still function just fine. The use of AI is delightful, but fundamentally additive—not essential.

Over time, I think this kind of work will be subsumed into normal product development and be “a given” for most of us.

AI Engineering

This is where things start to feel different. You’re now building with probabilistic systems, where outcomes can vary a lot, and determinism doesn’t apply.

A lot of traditional software engineering practice relies on deterministic execution, and that foundation suddenly feels much less solid. Unit tests don’t really apply, integration tests aren’t predictable, and performance is often a bottleneck over which we have little control.

Instead, we’re in a world of evals, backtesting frameworks, speculative execution optimisations and benchmarks on tone of voice and sentiment. The list goes on.
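To make the shift from unit tests to evals concrete, here’s a minimal sketch in Python. Instead of asserting one exact output, an eval runs a set of labelled cases many times and scores the pass rate against a threshold. Everything here is hypothetical: `fake_root_cause_model` stands in for a real LLM call, and `run_eval`, `CASES` and the 0.8 threshold are illustrative names and values, not incident.io’s internal eval framework.

```python
import random
from typing import Callable


# Hypothetical stand-in for an LLM call. Like a real model, it doesn't
# always give the same answer for the same input.
def fake_root_cause_model(incident: str, rng: random.Random) -> str:
    if "deploy" in incident and rng.random() < 0.9:
        return "bad_deploy"
    return "unknown"


# Hypothetical labelled eval cases: (incident description, expected root cause).
CASES = [
    ("deploy at 14:02, then 500s spiked", "bad_deploy"),
    ("error rate jumped right after a deploy", "bad_deploy"),
    ("disk filled up on db-1", "unknown"),
]


def run_eval(
    model: Callable[[str, random.Random], str],
    cases: list[tuple[str, str]],
    trials: int = 20,
    seed: int = 0,
) -> float:
    """Run every case `trials` times and return the fraction of runs that matched."""
    rng = random.Random(seed)  # seeded so an eval run is reproducible
    passed = 0
    for incident, expected in cases:
        for _ in range(trials):
            if model(incident, rng) == expected:
                passed += 1
    return passed / (len(cases) * trials)


# Gate on a pass-rate threshold rather than an exact-match assertion.
PASS_THRESHOLD = 0.8  # illustrative value, not a recommendation
score = run_eval(fake_root_cause_model, CASES)
print(f"pass rate {score:.2f}: {'PASS' if score >= PASS_THRESHOLD else 'FAIL'}")
```

The key design choice is the threshold: a unit test fails on a single wrong answer, whereas an eval accepts that a probabilistic system will sometimes miss and tracks whether the pass rate holds up as prompts and models change.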

It’s not just ‘prompts’. You’re often building the infrastructure around them, too: versioning, orchestration, tools for debugging. You create the system that lets the agent learn, improve, and behave predictably over time, often doing work at the frontier of what is possible with foundation models.

The output here is world-class product, not research papers.

Foundation Model Engineering

You’re training models from scratch, building the underlying infrastructure others use. You likely work at a foundation model company like OpenAI or Anthropic, or at a larger organisation that can afford to train its own.

This is deep research work. The focus is on model architecture, training data, and scaling. The output here looks more like models, papers, and benchmark results.

Why we’re betting on “AI Engineering”

AI Engineering is a fundamentally different kind of software development. It’s not traditional product engineering, and it’s not ML research: it’s something in between. The industry is starting to coalesce around that idea too.

When it comes to building AI-native products, the biggest skills gap isn’t in model training or infra. It’s in AI Engineering: taking foundation models as a new addition to our toolkit, and using them to build reliable, real-world, world-class product.

To do this well, you don’t need a PhD, but you do need strong product and engineering instincts, a deep interest in LLMs, and a willingness to rethink how software gets built.

We’re putting a name to this role because we want to speak clearly to the kind of people we’re looking for. Not everyone wants to work at the point where AI is used directly to build world-class product, but some people do. And those people are going to have the most fun and impact here. So we’re calling it what it is, and hiring for it directly.

Existing engineers can absolutely learn this. Our own team already has (and we’ve written about it). We’ve taken people who were already exceptional product engineers and watched them become exceptional AI engineers. They’ve built their own eval frameworks, orchestration engines, and debugging tools and are now shipping work that would’ve been unimaginable here a year ago.

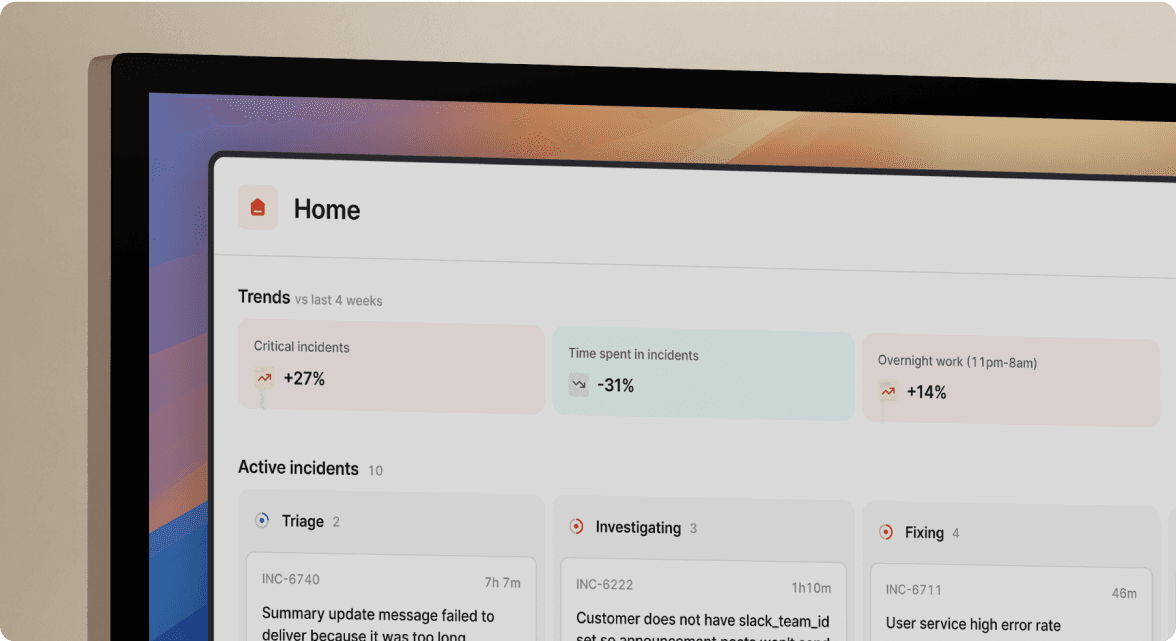

What AI Engineering looks like at incident.io

We’ve made a big internal shift, spending the last year levelling up how we use AI, both internally and in our product. We’ve gone from a simple prompt and a test harness to a world-class internal platform: one that helps us run and evaluate LLMs, orchestrate complex agents, and build safety checks around the edges.

Right now, we’re actively building an agent that investigates incidents, working out what’s wrong and why by analysing code, past incidents, logs, monitoring data and more.

Next, we’ll extend the agent to show responders how best to fix incidents, or even offer to fix them on their behalf. Either way, we’ll help responders resolve incidents much, much faster, just as their most experienced colleagues would.

Crucially, we’re not just building prototypes; we’re building production-grade products used during real incidents. We work with some of the best and brightest AI labs and product companies in the world, counting companies from Lovable and LangChain to OpenAI, Intercom and Netflix among our customers.

We’re hiring AI Engineers, starting today

The demand is real. The use cases are real. The infra is in place. And what we think we can achieve keeps increasing.

Given that, we’re meaningfully increasing our investment in AI Engineering today, with multiple roles now open to join our in-office team here in London and work at the frontier of agentic incident response.

You’d be a great fit if you:

- Are already deep in the world of AI: you’re reading the blog posts, following model updates closely and playing with new tools as they drop, to see how they can improve what we build and how we build it.

- Want to apply this knowledge to ship world-class, never-built-before products (rather than write papers: impressive, just not our focus!)

- Like working with messy, probabilistic systems and making them feel safe and reliable

- Care about user experience, product craft, and customer impact

- Want to build not just AI products, but also the infra and tooling that support them

If you’ve been on your own version of that journey, or you’ve been waiting for a team like this to join, this could be the perfect role at the perfect time. We’d love to chat! Learn more about the role here.
