Practical lessons for AI-enabled companies

We went live with our first set of AI-enabled features a few months ago.

Needless to say, we learned a lot along the way, as this was the first time we had experimented with generative AI.

Here, I'll share some of what we've learned as we’ve grappled with using LLMs to power new products at incident.io. This will be most applicable to companies at the application layer: those that are AI-enabled rather than AI companies.

You have to invest in your team upfront

With so much excitement to build with AI, where do you even start?

Honestly, it can be a little overwhelming, with hundreds of possibilities floating around—so let me share how we tackled this conundrum and what we learned.

Our first lesson was that running several short experiments is a good way to discover what's technically possible. The goal here is to iterate quickly, so sometimes a Jupyter Notebook is enough to get a feel for what's possible before building something you can ship to users.

We experimented with RAG, embeddings, multi-shot prompts, code generation, function calling, and others to build conviction in the team about where AI-powered features would be most compelling. We treated our first release similarly, as we wanted to understand what new AI-enabled features would have the biggest impact on our users.

We shipped four features that helped us cover a lot of ground. Doing this helped us build a breadth of knowledge about what’s possible early so we could move fast later.

- Suggested Summaries & Follow-ups: These features helped us leverage all the context we have about incidents, powered by GPT-4, JSON mode, and some clever prompting. With these tools, we could turn unstructured data into structured data and work smoothly with our native product features.

- Related Incidents: One of the most popular features in this set, Related Incidents suggests past incidents that could have similar root causes. This helps teams learn from them and improve response processes. We built it using embeddings and vector databases.

- Insights and analysis: Like having ChatGPT in our product, this lets you go from natural language to metrics, graphs, and Insights. This feature taught us about natural language interfaces and assistants.
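To make the Related Incidents idea a little more concrete, here is a minimal sketch of similarity search over embeddings. It is not our production code: the incident IDs, the tiny 3-dimensional vectors, and the `top_k` and `min_score` parameters are all made up for illustration. Real embeddings come from a model and have hundreds or thousands of dimensions, and a vector database would normally do the ranking for you.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def related_incidents(query_vec, past, top_k=2, min_score=0.8):
    """Return up to top_k past incident IDs, most similar first, above min_score."""
    scored = [(cosine_similarity(query_vec, vec), incident_id)
              for incident_id, vec in past.items()]
    scored = [(score, incident_id) for score, incident_id in scored if score >= min_score]
    scored.sort(reverse=True)
    return [incident_id for _, incident_id in scored[:top_k]]


# Hypothetical embeddings of past incidents (toy values, not model output).
PAST = {
    "INC-101": [0.9, 0.1, 0.0],
    "INC-102": [0.0, 1.0, 0.1],
    "INC-103": [0.8, 0.2, 0.1],
}

# Hypothetical embedding of the incident currently being handled.
print(related_incidents([1.0, 0.1, 0.0], PAST))
```

The `min_score` cutoff matters as much as the ranking: suggesting nothing is usually better than suggesting an unrelated incident.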

It's equally important to invest in tooling

We quickly realized that investing in tools and developer experience up front was as important as investing in the team. This allowed us to experiment faster and test more ideas.

One prominent example to highlight the importance of this:

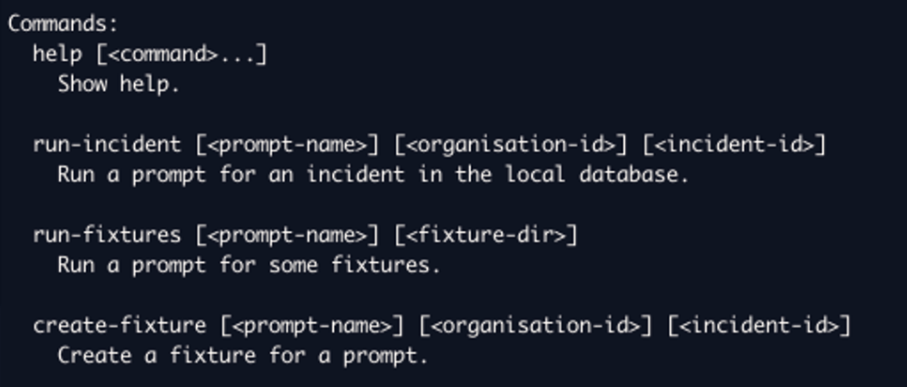

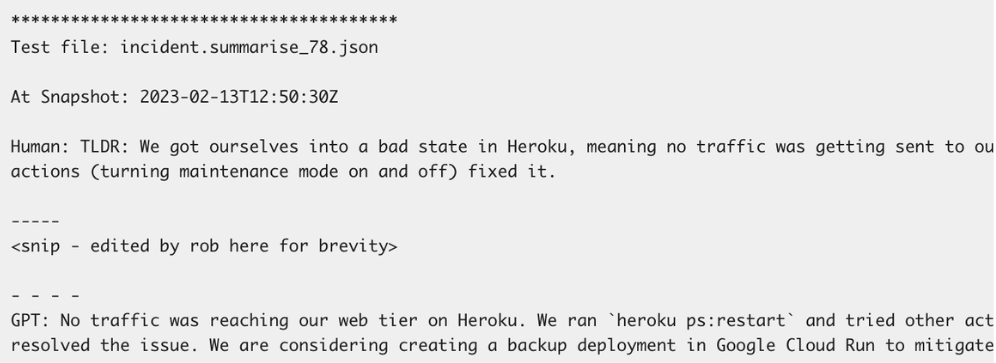

We built a CLI tool that could run against OpenAI over a set of example incidents: good examples where the model did well and bad examples where it struggled.

This was essentially a fixture test.

Then, during development, we reran those examples repeatedly to make our outputs more predictable.
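If you want a feel for what a fixture test like this might look like, here is a rough sketch. It is an assumption-heavy illustration rather than our actual CLI: the `Fixture` shape, the `must_mention` check, and `fake_summarize` (a stand-in for a real model call) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Fixture:
    name: str
    incident_text: str
    must_mention: list[str]  # phrases a good summary should contain


def run_fixtures(summarize: Callable[[str], str],
                 fixtures: list[Fixture]) -> dict[str, list[str]]:
    """Map each fixture name to the phrases missing from its summary (empty = pass)."""
    results = {}
    for fx in fixtures:
        summary = summarize(fx.incident_text).lower()
        results[fx.name] = [p for p in fx.must_mention if p.lower() not in summary]
    return results


def fake_summarize(text: str) -> str:
    # Stand-in for a real model call: just returns the first sentence.
    return text.split(".")[0] + "."


FIXTURES = [
    Fixture("db-outage",
            "Primary database ran out of disk space. Writes failed for 20 minutes.",
            ["database", "disk"]),
    Fixture("buried-cause",
            "Customers reported slow checkouts. The cause was an expired TLS certificate.",
            ["certificate"]),
]

for name, missing in run_fixtures(fake_summarize, FIXTURES).items():
    print(name, "PASS" if not missing else f"FAIL (missing: {missing})")
```

Swapping `fake_summarize` for a function that calls your model gives you a quick regression check every time you change a prompt.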

Thanks to this tooling, our summarization feature took ~1.5 weeks to build, and we could iterate faster. Fast-forward to today and our AI features are used by more than 95% of customers monthly.

Things have changed quite a bit since we built this feature, and there are now many more good tools to help with use cases like this. As an aside, you should definitely consider buying rather than building (e.g., context.ai).

Have a principled product

The general idea here is that AI isn’t all that different from good old-fashioned software. You should still have principles about building products your customers love, which will help you narrow down from a long list of ideas to valuable products.

Here are a few you should keep in mind that proved invaluable to us:

- AI is ready for prime time, but keep a human in the loop: At least for now, our customers don’t want agents to complete tasks independently. They want powerful automation to enable their productivity. Realizing this helped us narrow down what we should build and how we should build it.

- AI doesn’t mean chatbot: Everybody wants to implement a chatbot/co-pilot/revolutionary new UI, which might be the future. But for now, users want familiar interfaces with less manual work. Subtly automating existing workflows is the way to get killer adoption and, critically, retention for features in 2024. This extends into the future, where systems will be more agentic and can take on bigger chunks of work autonomously.

AI is a powerful tool, so use it effectively in development

Large language models (LLMs) are powerful tools for your product, but you should also use them during development.

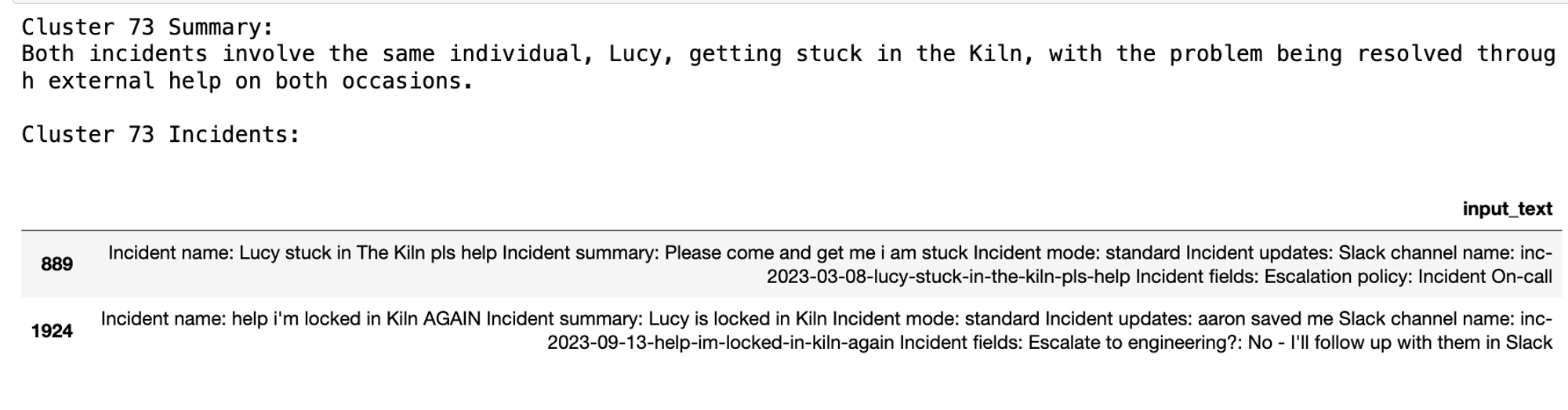

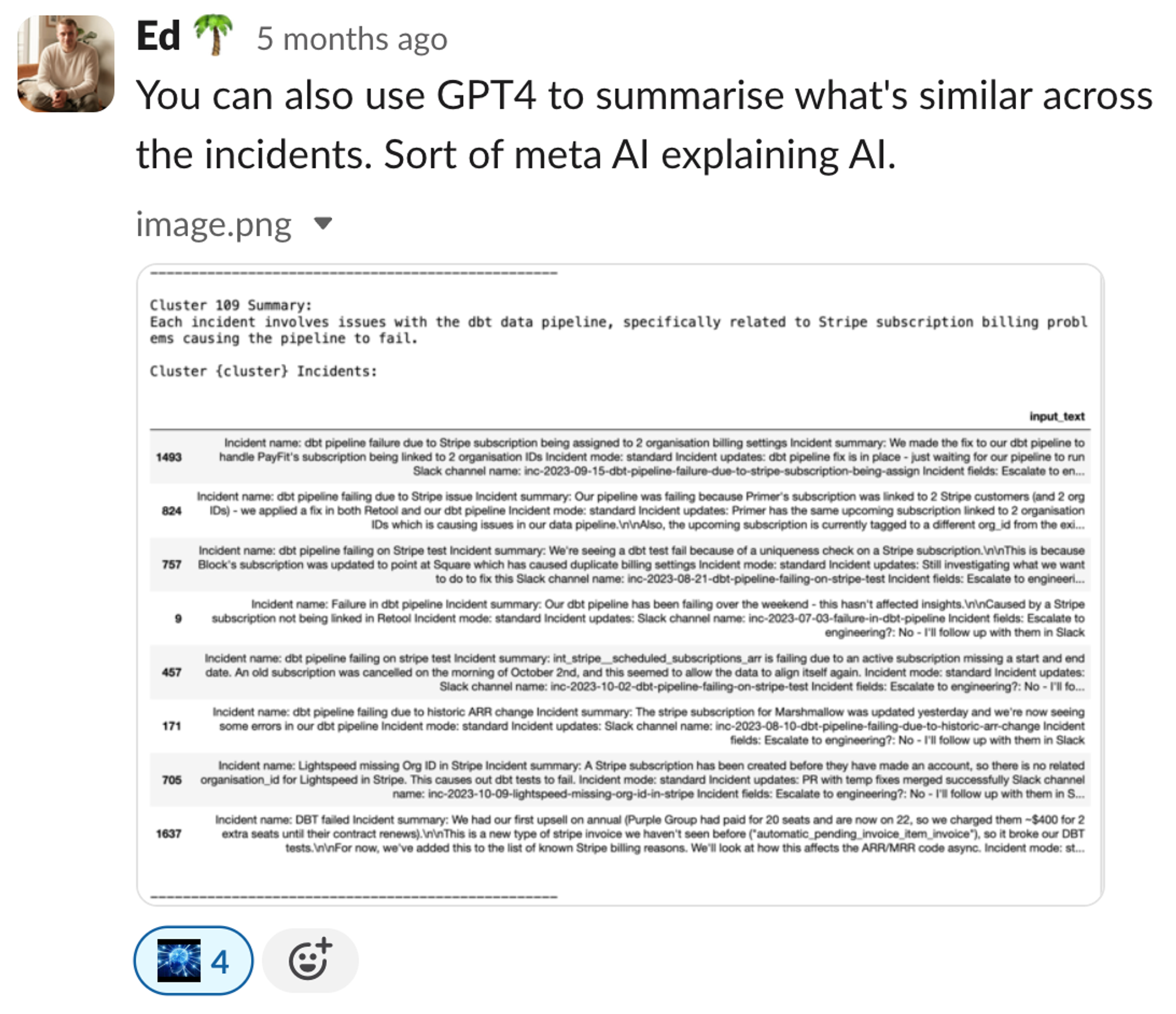

While building Related Incidents, we used clustering and GPT-4 to explain cluster similarities. This is just one example, but LLMs are fantastic partners for prompt engineering, interpretability, and more!
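As a rough idea of how this can work, the sketch below groups incidents by cluster and builds a prompt asking a model to explain what each group has in common. The incident titles, cluster IDs, and prompt wording are hypothetical, and the clustering step and the model call are left out on purpose.

```python
from collections import defaultdict


def group_by_cluster(assignments):
    """Map cluster id -> list of incident titles, preserving input order."""
    clusters = defaultdict(list)
    for title, cluster_id in assignments:
        clusters[cluster_id].append(title)
    return dict(clusters)


def build_explain_prompt(titles):
    """Build a prompt asking a model what the incidents in one cluster share."""
    bullet_list = "\n".join(f"- {t}" for t in titles)
    return (
        "These incidents were grouped together by a clustering algorithm.\n"
        "In one or two sentences, explain what they have in common.\n\n"
        f"{bullet_list}"
    )


# Hypothetical output of a clustering step: (incident title, cluster id).
ASSIGNMENTS = [
    ("Checkout API returning 500s", 0),
    ("Expired TLS certificate on gateway", 1),
    ("Payment service timeouts", 0),
]

clusters = group_by_cluster(ASSIGNMENTS)
prompt = build_explain_prompt(clusters[0])
print(prompt)  # send this to the model of your choice
```

Reading the model's explanation next to each cluster is a cheap way to sanity-check whether the clusters mean anything before you build on them.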

Foundation models change every week—bet on the platform

Foundation models and developer tooling are changing so fast that you probably shouldn’t focus too much on any single detail. Focus instead on the big picture and on moving quickly.

Here are some of the observations we made with this in mind:

- Our features have been live for ~6 months, and we’ve already benefited significantly from new model versions.

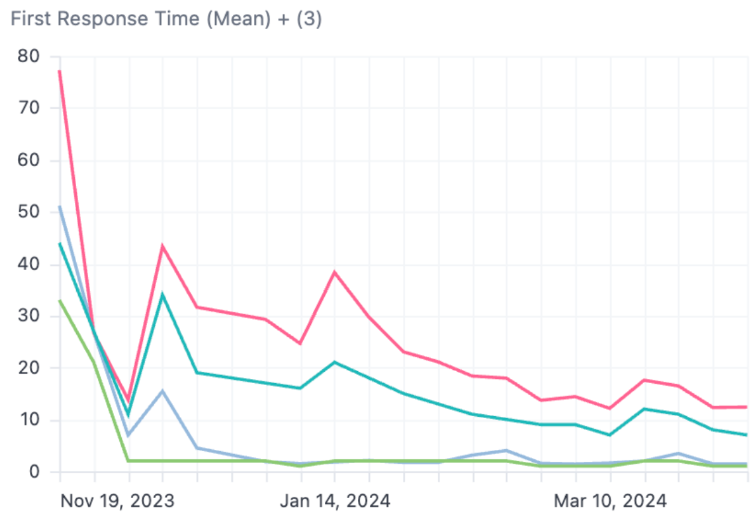

- Things that feel slow now won’t be soon. We’ve seen response time and cost fall dramatically. Things will be more real-time. You can see that on the graph below, which is the response time for the Assistants API from OpenAI.

- We had one funny example with our analytics and insights-focused Assistant. We’d done a ton of engineering work to get from prompt to insight, building complex analytics models with dbt and lots of instructions/prompt engineering. But we eventually found the best results with one flat table and the OpenAI Assistants API with code interpreter.

- Building a lot of complexity on top of these systems will quickly become legacy overhead. This might create roadblocks and stop you from benefiting as much from the billions of dollars behind OpenAI and all the other wonderful companies building in this space.

Launch in phases, be close to customers

Finally, launch in phases. This is often helpful in product development, particularly with AI-enabled features, where many unknowns exist. Here's an anecdote from our experience that highlights why this is so critical:

We had a support request about "mistranslation in Portuguese," which surprised us because we hadn't built multi-language support for most areas of our product yet, so everything should've been in English.

It turns out that our AI features were working in all languages, depending on their input data. It's pretty incredible that we got it for free, but catching it before GA enabled valuable QA and gave us some control over that experience.

The reality is that these systems are so new, you’re likely to bump into things you don’t expect.

Don't wait

My final thought: don't wait.

Today, our AI features are amongst our most loved, with >95% of customers using them every month. The potential to make something people want with AI is huge, and I bet you can improve your product in ways you don't expect.

That said, it's still so early. Foundation models change weekly, and we're discovering new user experiences, like Devin from Cognition AI. But if you start now, you could be a leader in your industry.
