Don't count your incidents, make your incidents count

We can't have more than two major incidents per quarter.

It happens all the time: senior folks at your company feel like things are out of control, and they attempt to improve the situation by counting how many incidents you're having.

And it's not an unreasonable approach — on the surface, the number of incidents seems like a great measure for how well things are going.

Whilst setting targets might work in some organizations, it's worth asking whether they provide the signal you expect, and whether you've thought through the implications. We've had this conversation more times than we can count, so here are a few tips on how to navigate the situation.

Fewer incidents doesn't mean things are better

The absence of incidents doesn’t mean your systems are reliable or things are safe. I've worked in teams where we've had months of smooth sailing, followed by intense periods of seemingly everything being on fire. Nothing materially changed between the two periods. Deeper analysis showed that the many contributing factors were present throughout. We just got lucky: the perfect storm of latent errors and enabling conditions didn’t occur during the quiet months.
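To make the role of luck concrete, here's a minimal sketch. It assumes, purely for illustration, that major incidents arrive independently at a steady long-run average of two per quarter (a Poisson model). Neither the model nor the figure comes from real data, and `poisson_pmf` and `RATE` are names invented for the example.

```python
import math


def poisson_pmf(k: int, rate: float) -> float:
    """Probability of exactly k events when the long-run average is `rate`."""
    return math.exp(-rate) * rate**k / math.factorial(k)


# Assumption: a long-run average of two major incidents per quarter.
RATE = 2.0

# Chance of a completely quiet quarter.
p_quiet = poisson_pmf(0, RATE)

# Chance of more than two incidents: one minus P(0) + P(1) + P(2).
p_over_target = 1 - sum(poisson_pmf(k, RATE) for k in range(3))

print(f"Quarter with no incidents: {p_quiet:.1%}")
print(f"Quarter with more than two: {p_over_target:.1%}")
```

Under these assumptions, about 13.5% of quarters pass with no incidents at all and about 32.3% see more than two, even though nothing about the underlying system has changed.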

More incidents is no bad thing

Incidents aren't an evil we need to stamp out. In many cases, they're the cost of doing business. We shouldn't encourage failure, but despite our best efforts to maintain high levels of service, surprises will catch us out. When done right, a healthy culture of declaring incidents can be a superpower. I want my teams to feel comfortable sharing when things may be going wrong, to be excellent at responding when they do, and to democratise knowledge and expertise after the fact.

Targets can drive the wrong behaviour

I’ve seen people arguing over whether something is or isn’t an incident because they don’t want to reset the “days since incident” counter. Equally, I’ve seen engineers waste time during an incident trying to justify a minor severity rating, rather than major, because they don't want it to count against the company target.

As stated in Goodhart's Law, "when a measure becomes a target, it ceases to be a good measure". If you set a low target with severe consequences, you'll probably meet it, whether that means suppressing reporting, arguing over labels, or some other counterproductive measure.

Targeted or not, you're not in control

The vast majority of incidents are outside of our control. At best, a “no incident” goal is un-actionable and ignored. At worst, it can alter behaviour to the detriment of the organization.

If you were set a target of not spilling a drink for a year, what would you do differently? Nobody sets out to spill a drink, and when it happens it’s not because you’re careless; it’s just random chance sprinkled with misfortune. Pick a better target, like not running with drinks.

There are better alternatives to counting incidents

So you've convinced your leadership team it might be a bad idea, but to seal the deal they're after an alternative. What can you offer in return?

The best advice is to understand their motivations for the goal. For example, is there a lack of trust between leadership and engineering? Is that fuelled by them seeing incidents, but not seeing the analysis and follow-up that happens afterwards? Perhaps a target around the number of incidents that didn't have a debrief would help.

Whatever the motivation, here are a few options you might want to consider.

Measure what you actually care about

You don't really care about the number of incidents. You care about what that number means: lost revenue, customer satisfaction, or the quality of the service you provide. Incidents are just a useful proxy.

Instead, measure the thing you actually care about, like service uptime, the number of times PII was shared, or the number of failed payments. These are tangible measures that can be targeted and improved.
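As a rough illustration, measures like these reduce to simple ratios. The sketch below assumes hypothetical inputs (minutes of downtime in a period, and counts of attempted and failed payments). The function names and figures are made up for the example rather than taken from any real system.

```python
def availability(downtime_minutes: float, period_minutes: float) -> float:
    """Fraction of the period during which the service was up."""
    if period_minutes <= 0:
        raise ValueError("period_minutes must be positive")
    return 1 - downtime_minutes / period_minutes


def failure_rate(failed: int, attempted: int) -> float:
    """Fraction of attempts that failed; 0.0 when nothing was attempted."""
    return failed / attempted if attempted else 0.0


# Hypothetical figures for a 30-day month.
MINUTES_IN_30_DAYS = 30 * 24 * 60

uptime = availability(downtime_minutes=43.2, period_minutes=MINUTES_IN_30_DAYS)
payment_failures = failure_rate(failed=120, attempted=48_000)

print(f"Uptime: {uptime:.2%}")
print(f"Failed payments: {payment_failures:.2%}")
```

Unlike an incident count, each of these numbers moves only when customers are actually affected, which makes them safer to set a target against.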

Measure the value you get from incidents

If you can accept that incidents are unavoidable surprises, why not measure how well your org is using them to improve?

We suggest writing debrief documents that are used to educate, holding sessions to discuss them, and ensuring you're seeing follow-up actions through to completion. If you do all of the above, you’re likely getting your money's worth.

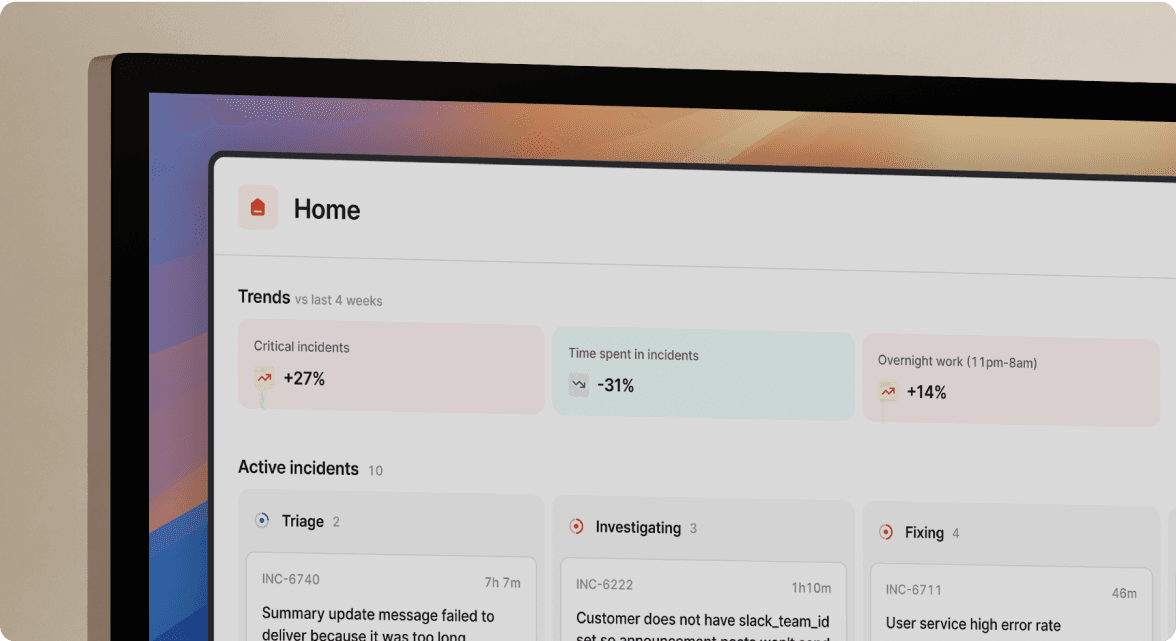
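If you want to put numbers on this, here's one possible sketch. It assumes each incident is recorded with whether a debrief was held and how many follow-up actions were raised and completed. The `Incident` record, its field names, and the sample data are all hypothetical, not an incident.io data model.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    """Hypothetical record; the field names are assumptions for this sketch."""
    name: str
    debrief_held: bool
    actions_total: int
    actions_done: int


def debrief_coverage(incidents: list[Incident]) -> float:
    """Share of incidents that had a debrief."""
    if not incidents:
        return 0.0
    return sum(i.debrief_held for i in incidents) / len(incidents)


def action_completion(incidents: list[Incident]) -> float:
    """Share of follow-up actions, across all incidents, that are complete."""
    total = sum(i.actions_total for i in incidents)
    done = sum(i.actions_done for i in incidents)
    return done / total if total else 0.0


# Made-up data for one quarter.
quarter = [
    Incident("Checkout latency", debrief_held=True, actions_total=4, actions_done=3),
    Incident("Expired certificate", debrief_held=True, actions_total=2, actions_done=2),
    Incident("Queue backlog", debrief_held=False, actions_total=4, actions_done=1),
]

print(f"Debriefs held: {debrief_coverage(quarter):.0%}")
print(f"Follow-ups completed: {action_completion(quarter):.0%}")
```

Both figures reward declaring incidents and learning from them, so they're much harder to game by simply reporting less.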

Give them the metrics they want, with the context they need

If you can't convince people not to target the number of incidents, why not provide the metrics they want but with the context they need to understand the full picture?


Rather than "we had 5 major incidents", share the contributing factors and risks, the commonalities and differences, and what's being done to improve. It's relatively easy to take the heat out of a number by providing some qualitative context. There’s also a great post on the Learning from Incidents blog about this.

If you've got any pro tips of your own, we'd love to hear them! Send us an email at hello@incident.io, or find us on Twitter at @incident_io.

I'm one of the co-founders, and the Chief Product Officer here at incident.io.
