Best incident management tools for security operations: Coordinating security incidents

Updated January 26, 2026

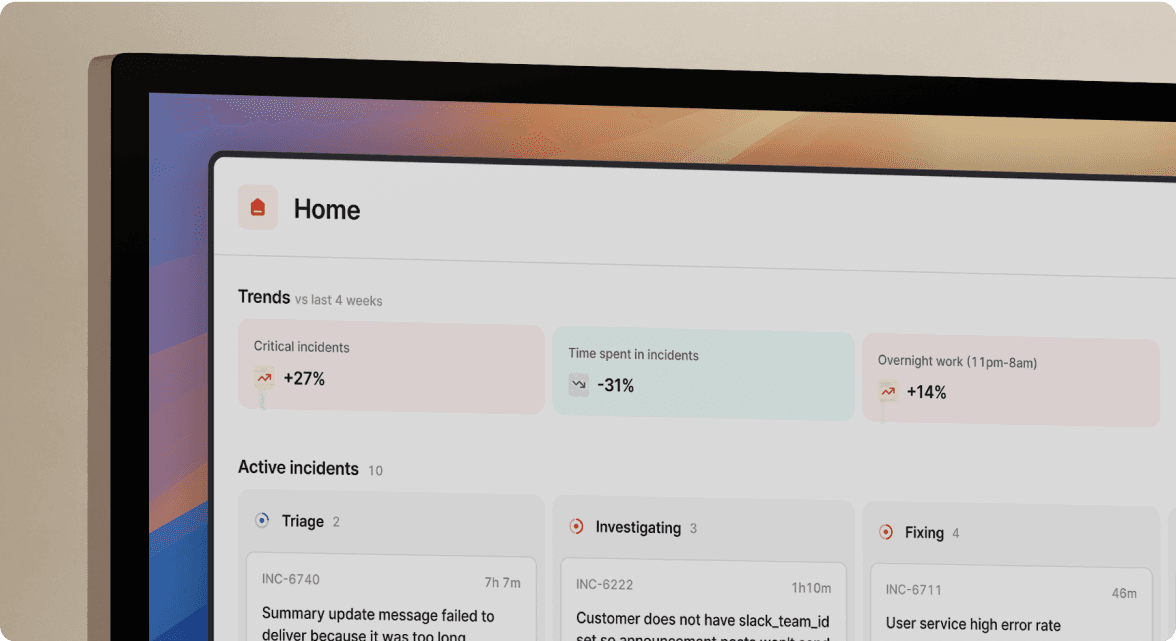

TL;DR: You need fundamentally different workflows for security incidents compared to operational outages. Your SIEM detects threats in milliseconds, but you often spend hours coordinating the response. This coordination gap is where most breaches spiral out of control. Security operations demand private incident channels, immutable audit trails, and automated service-to-owner mapping. Tools built for operational incidents leave sensitive breach details exposed in public Slack channels, creating compliance nightmares and reputational risks.

Security incident response breaks down at the coordination layer. Your SIEM detects unauthorized database access in milliseconds, but the next 45 minutes unfold in chaos. The SOC analyst pages the on-call engineer via personal text, creates a Slack channel, then realizes they just exposed "potential data breach" to 300 employees in a public workspace. Legal needs to be involved, but nobody has their contact details at hand. Three months later, during the forensic audit, you reconstruct the timeline from scattered DMs, half-remembered Zoom calls, and incomplete notes. Detection worked perfectly. Coordination failed catastrophically.

Security incident response requires you to coordinate people, process, and technology simultaneously. Frameworks like NIST and SANS define the steps; execution is where most teams struggle. We'll walk through how to coordinate cross-functional breach response involving Legal, Communications, and Engineering without leaking sensitive data.

What is security operations incident management?

Security Operations Incident Management (SOIM) is how you combine people, processes, and technology to detect, analyze, and respond to cybersecurity threats. According to NIST SP 800-61 Rev 2, you need incident response capability for rapidly detecting incidents, minimizing loss and destruction, mitigating exploited weaknesses, and restoring IT services.

SANS defines incident response as the structured process of identifying, managing, and mitigating cybersecurity incidents to minimize damage, recover operations, and prevent future occurrences.

The historical context

Modern security incident management traces back to 1981, when Ian Murphy broke into AT&T's computers and changed the clocks that determined billing rates. The real catalyst came in 1988, when Robert Tappan Morris launched the Morris Worm from MIT's network; it became one of the first distributed Internet attacks to gain mainstream attention. DARPA responded by asking the Software Engineering Institute at Carnegie Mellon University to create the CERT Coordination Center (CERT/CC), laying the foundations for how we handle security incidents today.

IMT vs CSIRT: Understanding the distinction

The Computer Security Incident Response Team (CSIRT) is a centralized function that handles cyber incident response and management activities specifically, focusing on cybersecurity threats like malware infections, data exfiltration, and unauthorized access.

An Incident Management Team (IMT) typically handles all major incidents, including operational outages like database failures. In mature organizations, the CSIRT operates as a specialized subset within the broader IMT. Our platform supports both teams using the same tool stack with different permission models.

The security incident response lifecycle: NIST vs SANS frameworks

Two dominant models guide security incident response. Understanding both helps you structure workflows that satisfy compliance requirements while maintaining coordination speed.

NIST's four-phase model

NIST SP 800-61 Rev 2 segments incident response into four phases:

- Preparation: Establish incident response capability with policies, tools, and training. Build your CSIRT, define escalation paths, and integrate detection systems.

- Detection and Analysis: Identify and analyze security incidents through monitoring and investigation. Your SIEM correlates events, but analysts make the final threat determination.

- Containment, Eradication, and Recovery: Limit damage by isolating affected systems, remove threats from your environment, and restore systems to normal operations with verification.

- Post-Incident Activity: Conduct lessons learned sessions, update runbooks, and implement improvements to prevent recurrence.

The NIST model emphasizes iteration. You might cycle between Detection and Containment multiple times as you uncover the full breach scope.
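To make that iteration concrete, here is a minimal sketch that models one reading of the NIST lifecycle as a small state machine. The `Phase` enum, the `ALLOWED` transition table, and the `IncidentLifecycle` class are hypothetical names for illustration, not part of NIST or any product. The key design choice is that Containment is allowed to loop back to Detection and Analysis, so a growing breach scope is recorded rather than rejected.

```python
from enum import Enum


class Phase(Enum):
    PREPARATION = "Preparation"
    DETECTION_ANALYSIS = "Detection and Analysis"
    CONTAIN_ERADICATE_RECOVER = "Containment, Eradication, and Recovery"
    POST_INCIDENT = "Post-Incident Activity"


# Allowed transitions. Containment can loop back to Detection and Analysis
# when responders uncover more of the breach scope; Post-Incident Activity
# feeds improvements back into Preparation.
ALLOWED = {
    Phase.PREPARATION: {Phase.DETECTION_ANALYSIS},
    Phase.DETECTION_ANALYSIS: {Phase.CONTAIN_ERADICATE_RECOVER},
    Phase.CONTAIN_ERADICATE_RECOVER: {Phase.DETECTION_ANALYSIS, Phase.POST_INCIDENT},
    Phase.POST_INCIDENT: {Phase.PREPARATION},
}


class IncidentLifecycle:
    """Tracks one incident's path through the phases (hypothetical helper)."""

    def __init__(self) -> None:
        # An individual incident starts when something is detected.
        self.phase = Phase.DETECTION_ANALYSIS
        self.history = [self.phase]

    def advance(self, target: Phase) -> None:
        if target not in ALLOWED[self.phase]:
            raise ValueError(f"cannot move from {self.phase.value!r} to {target.value!r}")
        self.phase = target
        self.history.append(target)


lc = IncidentLifecycle()
lc.advance(Phase.CONTAIN_ERADICATE_RECOVER)  # isolate the first compromised host
lc.advance(Phase.DETECTION_ANALYSIS)         # a second host turns up in the logs
lc.advance(Phase.CONTAIN_ERADICATE_RECOVER)
lc.advance(Phase.POST_INCIDENT)
print(" -> ".join(p.name for p in lc.history))
```

The recorded `history` doubles as evidence that the team revisited analysis when new scope appeared, which is exactly what a post-incident review wants to see.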

SANS Institute's six-step framework

The SANS incident response process provides more granular tactical guidance:

- Preparation: Build organizational security policy and assemble your CSIRT with defined roles.

- Identification: Monitor IT systems and detect security incidents through SIEM correlation and IDS alerts.

- Containment: Perform short-term containment (isolate infected hosts) and long-term containment (implement temporary fixes).

- Eradication: Remove malware, close unauthorized access points, and eliminate the root cause.

- Recovery: Restore affected systems to production and verify functionality through monitoring.

- Lessons Learned: Document the incident, review it with the team soon after resolution, and implement improvements.

NIST combines Containment, Eradication, and Recovery into one phase, while SANS separates them for clearer tactical execution. Both are widely used as reference frameworks when documenting incident response processes for SOC 2, ISO 27001, and PCI DSS audits.
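If your runbooks follow SANS but an auditor asks about NIST phases, a simple lookup table lets one incident record answer both. This is a minimal sketch of the correspondence described in this section; `SANS_TO_NIST` and `sans_steps_for` are hypothetical names.

```python
# SANS step -> NIST SP 800-61 Rev 2 phase. Dict order follows the SANS sequence.
SANS_TO_NIST = {
    "Preparation": "Preparation",
    "Identification": "Detection and Analysis",
    "Containment": "Containment, Eradication, and Recovery",
    "Eradication": "Containment, Eradication, and Recovery",
    "Recovery": "Containment, Eradication, and Recovery",
    "Lessons Learned": "Post-Incident Activity",
}


def sans_steps_for(nist_phase: str) -> list[str]:
    """Return the SANS steps that roll up into one NIST phase, in SANS order."""
    return [step for step, phase in SANS_TO_NIST.items() if phase == nist_phase]


print(sans_steps_for("Containment, Eradication, and Recovery"))
```

Tagging each timeline entry with its SANS step and deriving the NIST phase from this table keeps a single source of truth instead of two parallel taxonomies.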

Security incidents vs operational incidents: Why the workflow differs

Your database failover and your data breach both qualify as "incidents," but you need opposite approaches to manage them. Understanding these differences prevents catastrophic mistakes like discussing sensitive breach details in public channels.

Privacy and access control: Default open vs default closed

Operational incidents follow a "default open" model. When your API response times spike to 5000ms, you want every engineer who might help jumping into the incident channel. Broad visibility accelerates troubleshooting.

Security incidents demand "default closed" access. When your SOC analyst confirms unauthorized database access, you need a private channel visible only to the CSIRT, Legal counsel, Communications lead, and specific remediation engineers. Leaking breach details to your 300-person Slack workspace triggers panic, premature public disclosure, and compliance violations.

Our Private Incidents feature creates invite-only channels automatically, restricting visibility to designated responders.

"incident.io allows us to focus on resolving the incident, not the admin around it. Being integrated with Slack makes it really easy, quick and comfortable to use for anyone in the company, with no prior training required." - Andrew J on G2

Legal and compliance timelines: Nice-to-have vs mandatory

Operational incidents have internal SLA targets. Missing your 45-minute MTTR target by 10 minutes doesn't trigger regulatory fines.

Security incidents operate under strict legal deadlines. GDPR Article 33 requires notifying the supervisory authority without undue delay and, where feasible, within 72 hours of becoming aware of a personal data breach, unless the breach is unlikely to result in a risk to individuals' rights and freedoms. California law requires notifying affected California residents within 30 calendar days of discovering the breach and, if the breach affects more than 500 residents, submitting a sample notice to the Attorney General within 15 calendar days of notifying those residents.

Your incident coordination platform must provide immutable timestamps showing exactly when you became aware of the breach. Our automated timeline capture creates audit trails that satisfy regulators.

Stakeholder coordination: Engineering team vs cross-functional crisis management

When your Kubernetes cluster runs out of memory, your SRE team handles it. Coordination stays within Engineering.

Security breaches require cross-functional coordination. Your response team includes the SOC analyst who detected the breach, forensic specialists analyzing logs, engineers closing the vulnerability, Legal counsel determining breach notification requirements, Communications preparing customer statements, and executives making containment decisions with business impact.

Chain of custody and forensic preservation

Operational incidents focus on resolution speed. You restart the pod, clear the cache, rollback the deployment. Preserving every log line matters less than restoring service.

Security incidents require forensic-grade evidence preservation. Before you wipe that infected host, you need disk images, memory dumps, and complete logs with verified timestamps. This evidence might appear in legal proceedings months later.

We built automated timeline generation with validated timestamps that create the chain of custody auditors and legal teams require.
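Forensic evidence is usually protected with cryptographic hashes: you hash an artifact when it is collected and re-check the hash at every hand-off. The sketch below shows that general technique with Python's standard `hashlib`; the `EvidenceItem` record and the `collect` and `transfer` helpers are hypothetical and are not how any particular product stores evidence.

```python
import hashlib
import os
import tempfile
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class EvidenceItem:
    path: str
    sha256: str
    collected_by: str
    collected_at: datetime
    # Custody log entries: (when, who, action)
    custody: list[tuple[datetime, str, str]] = field(default_factory=list)


def sha256_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large disk images do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def collect(path: str, collector: str) -> EvidenceItem:
    now = datetime.now(timezone.utc)
    item = EvidenceItem(path, sha256_file(path), collector, now)
    item.custody.append((now, collector, "collected"))
    return item


def transfer(item: EvidenceItem, to: str) -> None:
    # Re-hash on every hand-off so any change to the artifact is detected.
    if sha256_file(item.path) != item.sha256:
        raise RuntimeError(f"hash mismatch for {item.path}: evidence may be altered")
    item.custody.append((datetime.now(timezone.utc), to, "received"))


# Usage with a throwaway file standing in for a disk image.
with tempfile.NamedTemporaryFile(delete=False, suffix=".img") as tmp:
    tmp.write(b"disk image bytes")
item = collect(tmp.name, "soc-analyst")
transfer(item, "forensics")
```

A mismatch does not say who changed the artifact, only that the custody record can no longer vouch for it, which is the signal Legal needs before relying on that evidence.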

Common security incident categories

Different security incidents require distinct response playbooks. Understanding these categories helps you build workflows that trigger appropriate containment measures automatically.

Malware and ransomware infections: Isolate infected hosts immediately to prevent lateral movement. Ransomware escalates to executive leadership because ransom payment decisions carry legal and ethical implications. The FBI's Internet Crime Complaint Center coordinates with victims during ransomware incidents affecting critical infrastructure.

Data exfiltration and unauthorized access: Determine what data was accessed, whether it was copied externally, and whether it contains PII or PHI. If PII was accessed, GDPR's 72-hour notification clock starts immediately. Your incident workflow must prompt Legal involvement to assess breach notification requirements.

Insider threats and credential misuse: Employee credentials used from impossible travel locations indicate potential insider threats or credential compromise. Private incident channels prevent alerting the suspected insider during investigation.

Phishing campaigns and credential harvesting: Determine campaign scope quickly—how many employees received the phishing email, who clicked, and whether credentials were entered. Coordinate Security alerts, IT credential resets, and Communications company-wide warnings in shared incident channels.
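One way to make these categories "trigger appropriate containment measures automatically" is a lookup from category to first actions and escalation roles. The sketch below is hypothetical: the category keys, action strings, and role names are illustrative starting points drawn from the descriptions above, not a standard taxonomy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Playbook:
    first_actions: tuple[str, ...]
    escalate_to: tuple[str, ...]


# Hypothetical defaults drawn from the categories above; tune to your runbooks.
PLAYBOOKS = {
    "malware": Playbook(
        ("isolate infected hosts",),
        ("Security Lead",),
    ),
    "ransomware": Playbook(
        ("isolate infected hosts",),
        ("Security Lead", "Executive Leadership", "Legal Counsel"),
    ),
    "data_exfiltration": Playbook(
        ("identify accessed data", "check for external copies", "check for PII/PHI"),
        ("Security Lead", "Legal Counsel"),
    ),
    "insider_threat": Playbook(
        ("verify sign-in locations", "keep invite list to need-to-know"),
        ("Security Lead",),
    ),
    "phishing": Playbook(
        ("count recipients, clicks, and credential entries", "reset affected credentials"),
        ("Security Lead", "IT", "Communications Lead"),
    ),
}


def playbook_for(category: str) -> Playbook:
    """Look up a playbook, failing loudly on unknown categories."""
    try:
        return PLAYBOOKS[category]
    except KeyError:
        raise ValueError(f"no playbook for category {category!r}") from None


print(playbook_for("ransomware").escalate_to)
```

Failing loudly on an unknown category is a deliberate choice: a silent default could route a novel attack to the wrong people.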

The role of the SOC in incident coordination

Security Operations Centers detect threats that engineering teams must remediate. This handoff between detection and resolution is where most breaches spiral out of control.

Alert fatigue: The silent killer

A Trend Micro survey found 51% of SOC teams feel overwhelmed by alert volume, spending over 25% of their time on false positives. Research shows 71% of SOC personnel experience burnout. When your SOC analyst hits hour 10 of a 12-hour shift, they're more likely to dismiss real threats as noise.

According to the 2024 KPMG cybersecurity survey, 30% of security leaders reported alert fatigue as one of their top challenges.

The coordination gap: From detection to remediation

Your SIEM detects a SQL injection attempt in milliseconds. But the minutes that follow unfold in slow motion. The SOC analyst must figure out which engineering team owns the vulnerable endpoint, find the on-call engineer's contact information, page them, explain the technical details, and hand off context.

During this coordination gap, attackers move laterally through your network. IBM's Cost of a Data Breach Report 2025 puts the global average breach cost at $4.44 million, down from $4.88 million in the 2024 report, a decline IBM largely attributes to faster detection and containment driven by security AI and automation.

In IBM's 2024 report, healthcare organizations had the costliest breaches for the 14th consecutive year, at an average of $9.77 million.

"I appreciate how incident.io consolidates workflows that were spread across multiple tools into one centralized hub within Slack... It really helps teams manage and tackle incidences in a more uniform way." - Alex N on G2

Bridging the gap with service catalog

The solution lies in mapping technical assets to owners before incidents occur. Our Service Catalog connects every API endpoint, database, and microservice to its owning team and on-call schedule. When your SIEM alerts on suspicious activity targeting the payment gateway, we automatically page the payments team engineer and create a private incident channel with relevant context.

The SOC analyst no longer hunts for contact information or explains which service is affected. The right engineer joins a channel pre-populated with the triggering alert, service information (owners, dependencies, runbooks), and an auto-assigned incident lead.
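Underneath, this kind of routing is a lookup from the asset named in an alert to its owning team and on-call schedule. The sketch below illustrates the idea with an in-memory dictionary; `Service`, `Alert`, `CATALOG`, `route`, and the `example.com` runbook URLs are all hypothetical and do not reflect the Service Catalog's actual data model or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Service:
    name: str
    owning_team: str
    on_call_schedule: str
    runbook_url: str


@dataclass(frozen=True)
class Alert:
    title: str
    target_service: str  # assumed to come from a SIEM field or tag


# Hypothetical catalog entries, maintained before any incident happens.
CATALOG = {
    "payment-gateway": Service(
        "payment-gateway", "payments", "payments-primary",
        "https://runbooks.example.com/payment-gateway",
    ),
    "orders-db": Service(
        "orders-db", "database", "database-primary",
        "https://runbooks.example.com/orders-db",
    ),
}


def route(alert: Alert) -> Service:
    """Resolve the alert's target to an owner; unknown assets go to security on-call."""
    service = CATALOG.get(alert.target_service)
    if service is None:
        # Never drop an alert just because the asset is missing from the catalog.
        return Service(alert.target_service, "security", "security-primary", "")
    return service


owner = route(Alert("Suspicious queries against checkout", "payment-gateway"))
print(owner.owning_team, owner.on_call_schedule)
```

The fallback matters as much as the happy path: uncatalogued assets are common, and an alert that resolves to nobody recreates the coordination gap.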

Top incident management tools for security teams

Security teams juggle detection, automation, and coordination. Understanding which tool category handles each function prevents expecting your SIEM to coordinate human response.

Tool category comparison

| Platform Category | Primary Function | Use Case | Example Tools |
|---|---|---|---|
| SIEM | Threat detection through log correlation | "Is something bad happening?" | Splunk, Datadog Security |
| SOAR | Automated response playbooks | "Can we fix this automatically?" | Palo Alto XSOAR, Tines |
| Coordination | Human collaboration and audit trails | "How do we organize the response?" | incident.io |

SIEM (Security Information and Event Management) systems collect security event data from many sources, then correlate events and recognize patterns to surface potential security issues. Popular platforms include Splunk Enterprise Security, IBM QRadar, and Datadog Security Monitoring.

SOAR (Security Orchestration, Automation, and Response) platforms focus on automating incident response processes. SOAR enables security teams to reduce response time through automated playbooks, API integrations, and workflow orchestration. Popular platforms include Palo Alto Cortex XSOAR, Splunk SOAR, and Tines.

Incident coordination platforms handle the human layer. We don't detect threats or automate forensic analysis. We coordinate the humans who respond to threats your SIEM detected and remediate vulnerabilities your SOAR platform can't automatically patch.

These categories complement each other. Your SIEM alerts on the SQL injection attempt. Your SOAR platform automatically blocks the attacking IP and runs a vulnerability scan. Your coordination platform assembles Legal, Engineering, and SOC in a private channel to determine breach scope and notification requirements.

"Without incident.io our incident response culture would be caustic, and our process would be chaos. It empowers anybody to raise an incident and helps us quickly coordinate any response across technical, operational and support teams." - Matt B. on G2

How to coordinate security breaches using incident.io

We built specific features for security operations that require privacy, audit trails, and cross-functional coordination. Here's how to coordinate breach response from detection through post-mortem without leaking sensitive data.

Step 1: Automatic private incident creation from security alerts

When your Datadog Security Monitoring or Splunk alert fires, our alert routing automatically creates a private incident based on alert severity or tags. Set up a rule: "Any alert with tag security-breach creates a private incident visible only to CSIRT members."

The incident channel is invite-only by default. It doesn't appear in your Slack channel list for regular employees. The breach remains contained to need-to-know personnel from the first second.

Step 2: Automated responder assembly through service catalog

The private channel opens with the triggering alert, but who should join? Our Service Catalog integration maps technical components to owners and on-call schedules. If the alert indicates database access anomalies, we automatically page the database team's on-call engineer.

For security incidents, you can define additional responder roles beyond the technical owner. Add "Security Lead," "Legal Counsel," and "Communications Lead" as standard roles for any incident tagged as a potential breach. These stakeholders join automatically.
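Assembling responders is then a matter of combining the technical owner from the catalog with a fixed set of breach roles. In this hypothetical sketch, the `potential-breach` tag, the `role_holders` mapping, and `assemble_responders` are illustrative names; the role titles come from this step.

```python
BREACH_ROLES = ("Security Lead", "Legal Counsel", "Communications Lead")


def assemble_responders(
    owner_on_call: str, tags: set[str], role_holders: dict[str, str]
) -> dict[str, str]:
    """Map role -> person. The technical owner always joins; breach roles join when tagged."""
    responders = {"Technical Owner": owner_on_call}
    if "potential-breach" in tags:
        for role in BREACH_ROLES:
            person = role_holders.get(role)
            if person is None:
                # A breach without Legal or Comms coverage should fail visibly.
                raise LookupError(f"no one assigned to required role {role!r}")
            responders[role] = person
    return responders


team = assemble_responders(
    "dana (database on-call)",
    {"potential-breach"},
    {"Security Lead": "sam", "Legal Counsel": "lee", "Communications Lead": "cam"},
)
print(team)
```

Raising when a required role is unstaffed surfaces gaps in coverage at incident creation, rather than hours later when Legal is needed.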

Step 3: Guided workflows for breach assessment

Once responders assemble, they need to determine scope quickly. Our workflow automation prompts the incident lead with breach-specific custom fields that populate your post-mortem automatically:

- "Was PII or PHI accessed? (Required for GDPR/HIPAA assessment)"

- "Geographic location of affected users? (Determines notification requirements)"

- "Timestamp of initial unauthorized access? (Starts regulatory clock)"

- "Has the vulnerability been patched? (Required before resolution)"

Legal counsel sees these responses in real-time and makes notification decisions without scheduling separate meetings. The 72-hour GDPR clock is ticking.

Step 4: Real-time timeline capture for forensic accuracy

During breach response, we capture everything automatically: every status update, role assignment, Slack message, participant action. When someone mentions "we found the SQL injection in the payment endpoint at 3:47 AM," that gets timestamped and added to the timeline.

For incident calls, our Scribe feature transcribes Google Meet or Zoom conversations, extracting key decisions and flagging root cause mentions. When Legal asks three months later "when exactly did you become aware PII was accessed?", you have an immutable timeline with precise timestamps.

"The customization of incident.io is fantastic. It allows us to refine our process as we learn by adding custom fields, severity types or workflows to tailor the tool to our exact needs." - Nathael A on G2

Step 5: Secure post-mortem generation with access controls

When you resolve the incident, we auto-generate a post-mortem using captured timeline data, transcribed notes, and breach assessment responses. The post-mortem respects the same privacy controls as the incident itself: only CSIRT members can access it initially.

You can then selectively share sanitized versions with broader teams for learning. The executive summary goes to leadership, technical details go to Engineering for remediation, and the complete forensic timeline stays restricted to Legal and Security for regulatory proceedings.
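Selective sharing works best as default-deny: each post-mortem section lists the audiences allowed to see it, and anything unlisted stays hidden. This hypothetical sketch encodes the split described above; the section keys, audience names, and `view_for` are illustrative assumptions, with Legal and Security keeping the complete record.

```python
# Which audiences may read each section. Unlisted sections are hidden from everyone.
SECTION_AUDIENCES = {
    "executive_summary": {"leadership", "legal", "security"},
    "technical_details": {"engineering", "legal", "security"},
    "forensic_timeline": {"legal", "security"},
}


def view_for(audience: str, postmortem: dict[str, str]) -> dict[str, str]:
    """Return only the sections this audience is allowed to read."""
    return {
        section: text
        for section, text in postmortem.items()
        if audience in SECTION_AUDIENCES.get(section, set())
    }


pm = {
    "executive_summary": "Contained within 4 hours; notification assessed.",
    "technical_details": "SQL injection in payment endpoint; parameterized queries added.",
    "forensic_timeline": "03:47 UTC initial access ...",
    "draft_notes": "unreviewed",
}
print(sorted(view_for("engineering", pm)))
```

Because `draft_notes` has no entry in the table, nobody sees it, which is the safe failure mode for a document containing breach details.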

Step 6: Compliance-ready audit trail export

For SOC 2, ISO 27001, or GDPR audits, you need complete incident documentation with immutable timestamps. Our audit trail capability provides exactly what auditors require: who was notified when, who joined the incident channel, what decisions were made, what actions were taken, and when you contained the breach.

Export the complete timeline with validated timestamps that satisfy regulatory requirements. Unlike manually constructed records that auditors question, our automated capture creates defensible evidence that you followed proper breach response procedures.

Key takeaways for security leaders

You need fundamentally different coordination approaches for security incidents compared to operational incidents. The "default open" model that accelerates operational troubleshooting creates unacceptable risks for breach response. Your platform must support private, invite-only channels that restrict sensitive information to need-to-know personnel while maintaining ChatOps coordination speed.

The coordination gap between detection and remediation is where breaches become catastrophic. Service catalog mapping that automatically pages the right engineers eliminates the manual hunting that adds critical minutes to every incident.

Compliance deadlines are unforgiving. GDPR's 72-hour notification window requires precise timestamps showing when you became aware of the breach. Immutable audit trails generated automatically during the incident satisfy auditors more effectively than reconstructed timelines written from memory weeks later.

The convergence of SecOps and SRE is accelerating. Your security team and engineering team increasingly share tools, workflows, and on-call rotations. Choosing platforms that serve both teams with appropriate privacy controls reduces procurement complexity while improving coordination during critical incidents.

Ready to see how Private Incidents and Service Catalog mapping work for security operations? Book a demo and we'll walk you through a breach scenario from detection through post-mortem, showing exactly how Legal, SOC, and Engineering coordinate in private channels.

Key terms glossary

CSIRT (Computer Security Incident Response Team): A centralized organizational function that handles cybersecurity incident response and management activities specifically, as distinct from broader incident management teams.

SIEM (Security Information and Event Management): Systems that collect security event data from various sources, correlating events and recognizing patterns that indicate anomalous activity for threat detection.

SOAR (Security Orchestration, Automation, and Response): Platforms focused on automating incident response processes through pre-defined playbooks, API integrations, and workflow orchestration.

Chain of custody: A documented record of who collected, handled, and transferred each piece of forensic evidence, and when, required for legal proceedings and regulatory investigations following security breaches.

Private incident: An incident managed in an invite-only channel visible only to designated responders, preventing sensitive breach information from leaking to the broader organization.

Alert fatigue: A condition where SOC analysts become desensitized to security alerts due to overwhelming volume and high false positive rates, leading to missed genuine threats.
