Recapping SEV0 San Francisco 2025

Earlier this week, we gathered in San Francisco for our second SEV0—almost a year after our very first event. SEV0 has always been about shining a light on the biggest challenges (and opportunities) in incident response. Last year, we were still talking about the fundamentals: blameless culture, strong processes, and lessons from the best in reliability.

This year felt different. AI has moved from background noise to front and center in every conversation, every team, everywhere. And it’s no longer a question of if it will change incident response, but how fast.

We’re standing on the edge of a shift that will reshape how engineering teams detect, respond to, and learn from incidents. So what does the future of incident management look like when humans and machines work side by side? That was the question at the heart of SEV0 2025.

Keynote: Humans, machines, and the future of incident response

Stephen Whitworth (CEO, incident.io)

incident.io was born nearly four years ago out of frustration with the status quo of incident response. What started at a kitchen table has grown into a 100-person team, all driven by the same obsession: reliability. Because at the end of the day, that’s what customers really want. Incidents aren’t the goal—reliability is.

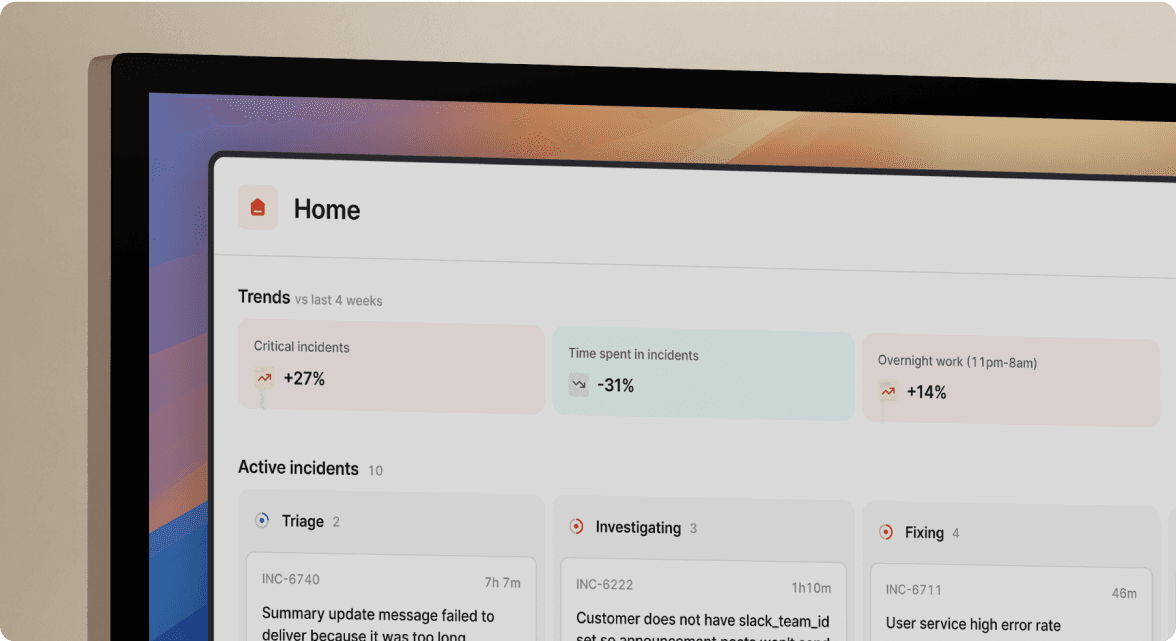

We believe AI enables a step-change in reliability. It frees humans to think strategically, slashes resolution times, and automates the grunt work that eats into engineers’ days. Tasks that once took hours—like documenting incidents or drafting postmortems—now take seconds.

Deterministic automation only goes so far. AI brings context, intelligence, and agency into the loop—transforming how we build, respond, and recover. That’s why earlier this year we reorganized the entire company around AI agents, and in July, unveiled AI SRE: an always-on assistant that triages issues, uncovers root causes, and generates fixes—often before you even open your laptop.

The goals are ambitious: cut downtime by 80%, eliminate alert fatigue, and keep builders building—not firefighting.

But AI alone isn’t the full story. Incidents are about more than just fixing problems. You need communication, coordination, and continuous improvement—all of which require a strong, end-to-end platform. Splitting incident management and AI across separate tools is an artificial distinction. They’re better together.

AI SRE also gets stronger with every incident, much like an engineer finding their footing on a new team. It’s not just about more logs or traces—it’s about learning in context, inside the platform that holds the entire incident lifecycle.

Reliability has always been our north star. Now, with capital, an amazing team, and world-class customers, we’re in the best possible position to make AI-powered reliability the new standard.

The evolution of incident management

Martin Smith (Principal Architect/SRE, NVIDIA)

A simple, but uncomfortable truth? You will always have incidents, and your customers will always see them. No matter how much effort you throw at prevention, outages are inevitable. The real question isn’t whether customers see them—it’s how they experience them.

Reliability is no longer just an infrastructure metric—it’s a product feature. When your site goes down, what customers see next—the transparency of your communication, the choices you give them, and the fallbacks you design—becomes part of your brand.

Martin illustrated this with a personal story. Ahead of hurricane season, his local power company sent actual line workers door to door with updates on planned repairs. It wasn’t a marketing push; it was radical transparency from the people doing the work. That proactive approach turned a disruption into a positive experience—and gave customers the chance to prepare.

We’re seeing this shift everywhere. Regulators fined Southwest not just for its December 2022 meltdown, but for failing to communicate with customers in real time. In South Africa, utilities integrate load shedding schedules into apps, treating planned outages as part of the customer experience. And in the October 2021 Meta outage, the impact went far deeper than downtime: millions relied on the platform for communication, safety, and basic needs.

The takeaway: customers (and regulators) care as much about the incident experience as they do about the outage itself.

For Martin, that means weaving incident response into product design. Product managers should sit alongside responders, writing “incident requirements” the way they would product requirements. Teams should use postmortems not just to fix systems, but to ask: what could we build into the product itself to make future incidents better for customers?

Think status pages that confirm “it’s not just you,” read-only modes to keep people working, or even AI agents that communicate directly with users while engineers resolve the issue.

“Your incident management is part of your product, your user experience, just like any other feature.”

By reframing incidents this way, organizations can move from damage control to building trust—even in their toughest moments.

From error to insight: human factors in incidents

Molly Struve (Staff SRE, Netflix)

Molly brought the focus back to the human side of incident response. While post-incident reviews often dive deep into technical causes, human factors are just as critical—and often overlooked.

Molly’s passion for the subject comes from a background in aerospace engineering, where she studied how pilot error contributes to aviation accidents. Roughly 70–80% of those accidents are caused by human mistakes, but instead of ignoring them, aviation has transformed safety by leaning in—studying human–machine interactions and redesigning cockpits to make pilots more effective.

Software, she argued, needs its own “engineering cockpit.” Today, that cockpit is Slack, observability dashboards, and deployment tools. The way these systems present signals can be the difference between efficient operations and a full-blown incident. Just like aviation, we need to treat human factors as a core part of reliability.

The challenges

Molly outlined a few cultural hurdles that keep teams from embracing human factors:

- Automation bias – Software systems feel like they “run themselves,” so we forget that humans are still essential.

- Overemphasis on blamelessness – In trying to avoid blame, teams sometimes avoid looking at human contributions altogether, missing opportunities to improve.

- The “human error” stigma – Mistakes are inevitable, but treating them as shameful stops teams from learning.

The opportunities

When we do examine human factors, the payoff is huge. One mistake can reveal systemic issues—like confusing tooling or unclear processes—that affect many teams. Fixing these has high ROI, creating safer environments and making engineers more confident and effective. Confident engineers move faster, take smarter risks, and ultimately prevent more incidents.

Molly gave practical strategies to make this real: bake human factors into post-incident reviews, use “5 Whys” to explore the human angle, and model vulnerability by sharing your own mistakes as opportunities for system improvement.

Her closing reminder was simple but powerful:

“Every human decision reveals insight into your system. When humans struggle, your system is speaking—and if you’re listening, the gains can be massive.”

Human factors aren’t a distraction from technical analysis—they’re the secret weapon. By treating our engineering cockpit with the same care as aviation, we can build safer, more reliable, and more productive systems for everyone.

Our data disappeared and (almost) nobody noticed: incident lessons learned

Michael Tweed (Principal Software Engineer, Skyscanner)

Michael shared the story of what became known internally as “the iOS data incident”—a week-long rollercoaster that started with a casual Slack message on a Monday morning and ended with millions of lost (and then suddenly spiking) events. The root cause: a change to legacy Objective-C dependency code that broke a required transformation in Skyscanner’s data emission SDK. Instead of sending events, the SDK queued them indefinitely. When the fix was released, every cached event was suddenly sent at once, producing massive dips and spikes in key datasets.

The impact was broad. Experimentation, marketing, and partner datasets all went haywire, raising questions from every corner of the business. And while the technical fix was relatively simple, the investigation exposed painful gaps in monitoring, testing, and process. Automated tests for data emission stopped at the SDK boundary and missed the broken transformation. Code quality tooling for Objective-C had been disabled to save CI time. Dashboards existed but had no alerts, and default data alert thresholds were so wide they never fired. With adoption curves delaying the effect, it took six days for manual detection—and longer still for an incident to be formally declared.

The lessons were stark. Invest in true end-to-end tests, not just local checks. Keep documentation close to the code and review it regularly. Prune dashboards that no one actively uses, and never rely on default alerting thresholds. Pay closer attention to release adoption curves. And most importantly, don’t hesitate to declare a triage incident—faster coordination could have shortened the cycle dramatically.
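
To make the point about default alert thresholds concrete, here is a minimal sketch of a volume check that compares the latest event count against a recent baseline and flags both dips and spikes. It is an illustration only, not Skyscanner's tooling: the function name `volume_alert`, the `tolerance` parameter, and the sample counts are all hypothetical.

```python
from statistics import median


def volume_alert(history, current, tolerance=0.2):
    """Compare `current` to the median of `history`.

    Returns "dip" or "spike" when the relative change reaches `tolerance`
    (an assumed, deliberately tight value), otherwise None.
    """
    if not history:
        raise ValueError("history must contain at least one observation")
    baseline = median(history)
    if baseline == 0:
        # Any traffic at all is a spike relative to an empty baseline.
        return "spike" if current > 0 else None
    change = (current - baseline) / baseline
    if change <= -tolerance:
        return "dip"
    if change >= tolerance:
        return "spike"
    return None


# Hypothetical daily event counts for one dataset.
daily_events = [1_000_000, 1_020_000, 990_000, 1_010_000, 1_005_000]

print(volume_alert(daily_events, 700_000))    # events queued, not sent
print(volume_alert(daily_events, 1_600_000))  # cached events flushed at once
print(volume_alert(daily_events, 1_015_000))  # a normal day
```

The useful property is that the threshold is chosen per dataset and checked in both directions, so a silent queue-up and the later flush would each trip it.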

AI played a mixed role. It helped with data analysis, allowing stakeholders to write impact queries quickly and freeing engineers to focus on fixes. But “AI-enabled” anomaly detection failed to flag the problem, and language models couldn’t identify the legacy Objective-C issue even in hindsight.

Still, there were bright spots. Cross-business collaboration kicked in immediately once the incident was declared, with engineers self-organizing to chase down different failure points. Communication stayed centralized in the incident Slack channel, reducing noise and building alignment. And thanks to a strong no-blame culture, nobody pointed fingers—even though the blast radius was huge. Everyone focused on fixing, learning, and preventing the next one.

In the end, corrective actions fixed the data flow and backfilled the missing events. Preventative actions improved alerting and updated runbooks. Strategically, Skyscanner committed to removing all legacy Objective-C code from its iOS codebase. For Michael, the biggest takeaway was that every layer of tooling had reported “green,” yet the system was failing silently. Incidents like this prove the value of digging deeper, strengthening observability, and designing for resilience.

Claude Code for SREs

Kushal Thakker (Member of Technical Staff, Anthropic)

Kushal opened with a striking stat: according to the 2024 DORA DevOps report, 76% of developers use AI daily, yet SRE teams are still bound to traditional 1:10 engineer-to-system ratios. Development is speeding up, but ops support hasn’t kept pace. His point: we can’t hire our way out—we need AI to augment SREs.

That’s where Claude Code comes in. Built for SRE realities, it runs in the terminal, integrates with existing tools, improves as models advance, and uses a permission-based design so humans stay in control while the system handles the heavy lifting.

To show this in action, Kushal walked through the dreaded 3 a.m. page. Instead of responders scrambling through dashboards and logs, Claude Code auto-collects metrics, checks commits, and assembles context before asking permission to act: scaling a cluster, reverting a commit, or paging the on-call engineer. The human shifts from manual investigation to decision-making, cutting recovery times and easing fatigue.
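
The "ask before acting" pattern can be sketched in a few lines. This is a generic illustration of a human approval gate, not Claude Code's actual permission system: `run_with_approval`, the `approve` callback, and the audit log format are all assumptions made for the example.

```python
from typing import Callable, List, Optional


def run_with_approval(
    description: str,
    action: Callable[[], str],
    approve: Callable[[str], bool],
    audit_log: List[str],
) -> Optional[str]:
    """Run `action` only if the human-supplied `approve` callback agrees.

    Every decision is appended to `audit_log` so it can be reviewed later.
    """
    if not approve(description):
        audit_log.append(f"DENIED: {description}")
        return None
    result = action()
    audit_log.append(f"APPROVED: {description} -> {result}")
    return result


# Hypothetical usage: the responder approves a revert but declines a scale-up.
log: List[str] = []
reverted = run_with_approval(
    "revert latest commit", lambda: "reverted", lambda d: True, log
)
scaled = run_with_approval(
    "scale cluster to 12 nodes", lambda: "scaled", lambda d: False, log
)
print(log)
```

In a real setup the `approve` callback would be a prompt to the on-call engineer. The point of the sketch is only that the action is never invoked without an explicit yes.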

He extended this to monitoring: Claude Code can analyze service code, generate Prometheus configs, Grafana dashboards, and alert rules, and even draft docs automatically. Integrated with GitHub Actions, it delivers monitoring from day one, with fewer blind spots and faster onboarding.

Beyond incidents, automation opportunities span audits, infra management, and compliance—with hooks and subagents handling repetitive work while humans keep oversight. Kushal acknowledged AI won’t replace engineering; it adds new responsibilities like permission engineering, refining prompts, and integrating tools.

As he put it: AI won’t eliminate incidents, but it can flip the role of the SRE—from bottleneck to empowered decision-maker.

Boundary cases: technical and social challenges in cross-system debugging

Sara Hartse (Software Engineer, Render)

Sara shared stories from Render, a platform-as-a-service company that sits between its users and upstream infrastructure providers. That position makes debugging especially complex—when customers see failing or slow requests, the root cause could lie anywhere along the chain: user application, Render’s platform, or an upstream provider. The challenge is not just technical but also social: knowing when to push deeper, when to escalate, and how to collaborate across those system boundaries.

She illustrated this through three incidents with identical symptoms but very different causes. In the first, a major customer escalated quickly to engineering, bypassing support. The team assumed the issue was with Render or an upstream provider, but experiments revealed the user’s own application was hanging up WebSocket connections. The takeaway: escalation paths matter, and engineering biases toward “platform problems” can actually slow root-cause discovery compared to the support team’s practiced triage.

In the second, a single user reported high latency on requests. The instinct was to blame the user’s app, but new tracing infrastructure showed the issue came from lock contention inside Render’s own proxies. CPU profiling eventually tied it to a background task, and eliminating that contention fixed the problem. Here, the bias was reversed: assuming “one user report = user issue.” The real lesson was that different customers have different tolerances for latency, and investing in boundary-level observability—like distributed traces—was the breakthrough.

The third incident looked the most like a Render failure: widespread slow and failing requests across multiple customers. After exhausting theories about proxy issues, Kubernetes networking, and VM bandwidth limits, the team dug deeper with packet captures. The captures showed elevated packet loss during the spikes, which pointed back to their upstream provider. Armed with specific TCP data and clear debugging steps, Render reopened the support case, and the provider traced the problem to network congestion caused by another customer. They installed more capacity and the issue disappeared.

What tied all these incidents together was not just technical detective work but the human factors of debugging across organizational boundaries. Render’s engineers had to check their own biases, collaborate with support teams and upstream providers, and rely on systematic elimination backed by hard evidence. As Sara put it, the hardest part of boundary incidents is that you can’t just inspect logs or attach a debugger to someone else’s system—you have to build trust, share data, and make your case.

Her closing reminder: debugging at the seams requires both technical rigor and social coordination. Observability, process discipline, and strong relationships are as important as the tools themselves.

SEV me the trouble: pre-incidents at Plaid

Derek Brown (Head of Security Engineering, Plaid)

Derek opened with a provocative question: if most incidents are predictable, why don’t we treat them that way? Even if we can’t anticipate the exact outcome, we still control how changes are released, the levers we have to mitigate risk, and how ready we are to respond. The problem, he argued, is cultural—engineers are optimists, and rarely imagine that their change might cause the next incident.

Traditional pre-mortems are one approach, but they’re too heavyweight to be a default behavior. Instead, Plaid has introduced the idea of the pre-incident: a lightweight, low-friction practice designed to nudge rigor and prepare responders before changes go live.

The process is simple. An operator fills out a short pre-incident questionnaire outlining the change, the systems impacted, and how it will be tested, monitored, and rolled out. A dedicated Slack channel is spun up automatically, and updates are shared there in real time. If something goes wrong, the operator can instantly escalate into a full-blown incident with all the context already in place. By design, it’s meant to be a knee-jerk habit, reinforced through training and culture.
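
As a rough sketch of the shape such a record might take, the questionnaire fields described above map naturally onto a small data structure that can be escalated with its context intact. This is not Plaid's implementation: the class name `PreIncident`, its field names, and the `escalate` payload are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class PreIncident:
    """A lightweight record filed before a risky change goes live."""

    change_summary: str
    systems_impacted: List[str]
    test_plan: str
    monitoring_plan: str
    rollout_plan: str
    updates: List[str] = field(default_factory=list)
    escalated_at: Optional[datetime] = None

    def post_update(self, message: str) -> None:
        # Stands in for a message posted to the dedicated channel.
        self.updates.append(message)

    def escalate(self) -> dict:
        """Turn the pre-incident into an incident payload, keeping all context."""
        self.escalated_at = datetime.now(timezone.utc)
        return {
            "summary": self.change_summary,
            "systems": list(self.systems_impacted),
            "context": list(self.updates),
            "declared_at": self.escalated_at.isoformat(),
        }


# Hypothetical usage.
pre = PreIncident(
    change_summary="Rotate database credentials",
    systems_impacted=["payments-api"],
    test_plan="Dry run in staging",
    monitoring_plan="Watch auth error rate",
    rollout_plan="One region at a time",
)
pre.post_update("Region 1 rotated, error rate flat")
pre.post_update("Region 2 rotated, auth errors rising")
incident = pre.escalate()
print(incident["context"])
```

Because the updates travel with the record, whoever picks up the escalated incident starts with the change description and the timeline already in hand.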

So does it work? Derek was candid: measuring impact is tricky. Because engineers self-select when to file a pre-incident, the data carries a selection bias, since people are more careful when they already suspect risk. And with a small sample size, it’s hard to draw strong statistical conclusions. Still, the early signs are promising. Year-to-date, Plaid logged 224 pre-incidents, and only 3 of them (about 1.3%) became actual incidents. In two of those three cases, the operator caught issues early and rolled back quickly, with recovery well below the typical MTTR. In the third, visibility from the pre-incident let someone outside the change identify a downstream issue faster than they otherwise would have.

Perhaps most surprisingly, pre-incidents haven’t slowed development—they’ve sped it up. With a standardized way to communicate changes, teams no longer need to spin up ad-hoc processes. Risk is reduced, confidence is higher, and response is faster when things do go wrong.

Derek closed with practical advice for any team interested in adopting the practice: start by copying the pre-incident questionnaire, adapt it for your organization, and bake it into culture through training and retrospectives. Then build the tooling to make monitoring pre-incidents part of the normal investigative workflow. In his words, pre-incidents turn optimism into preparedness—helping teams catch issues before they become incidents at all.

Navigating disruption: Zendesk’s migration journey to incident.io

Anna Roussanova (Engineering Manager, Zendesk)

Anna told the story of one of the highest-stakes migrations you can imagine: moving 1,200 engineers, 150 scrum teams, 5,000 monitors, and more than a dozen alert sources—all in just ten weeks. The scale alone was daunting, but the real challenge was ensuring the migration didn’t create organizational burnout or derail other initiatives already in motion.

The key, she explained, was a clear set of guiding principles. Zendesk anchored the project on two north stars: first, there would be one source of truth to avoid the lax guidelines and ad-hoc configurations that had left their old system in disarray; and second, the migration had to minimize work for other teams, reducing friction and making it as efficient as possible.

A small expert team drove the effort: two engineers focused on architecture and build, a product manager owned communication, a program manager managed tasks, and a rotating group of SRE helpers filled in gaps. With organizational backing, they had the permission to pull in SMEs across the company, apply 80/20 solutions to cover the bulk of routing and scheduling needs, and lean on long-standing relationships to recruit early adopters.

Communication, Anna emphasized, was the glue that held it all together. Public Slack announcements explained why the migration was happening and reassured engineers that the work required would be minimal. Detailed documentation—step-by-step guides, FAQs, and updated troubleshooting resources—gave teams confidence. But most of the heavy lifting came from targeted Slack bot DMs, which nudged on-call users and engineering managers at exactly the right time. The mix of broad announcements, thorough documentation, and hyper-targeted messaging ensured the right people got the right information in the right way.

The result: every single engineering team migrated efficiently by the go-live date.

Looking back, Anna shared three lessons. First, build real-time reports for migration progress earlier—having visibility into monitor migration was invaluable late in the project. Second, engage edge-case users sooner, especially non-engineering teams who were touched by the system but not as connected to the core migration effort. And third, always include a buffer—Zendesk gave themselves four weeks between go-live and contract expiration, and they needed every bit of it.

The big takeaway was that scale alone doesn’t sink migrations—misalignment does. With clear principles, a focused team, and a communication strategy that combined broad visibility with precise nudges, Zendesk pulled off a migration that looked impossible on paper.

What the Real Housewives taught me about retros

Paige Cruz (Principal Developer Advocate, Chronosphere)

Paige began with a stat from the 2024 Catchpoint State of SRE survey: nearly half of respondents said the area their organizations most needed to improve was emphasizing learning versus fixing. Too often, she argued, incident retros devolve into reliability theater—reports written, action items logged, and the same cycle repeated, without deeper understanding. Her premise: incident retros should be designed for learning, not just fixing. And to show what that looks like, she turned to an unlikely source of wisdom: the Real Housewives.

At first glance, the worlds couldn’t be more different. Reality TV thrives on drama, gossip, and suspect evidence, while engineering prides itself on logs, metrics, and root causes. But Paige drew a sharp comparison: both reunions and retros are structured meetings where colleagues come together, review the “incident,” and recount their perspectives. The difference is intent—Bravo produces for drama, while facilitators should produce for learning.

Through the infamous “Hawaii Incident” feud between Kyle and Camille, Paige highlighted facilitation lessons. Andy Cohen’s role mirrors a retro facilitator’s: replaying the timeline, inviting perspectives, and carrying learnings forward. Where he succeeded was in creating a shared artifact (a montage), giving each side airtime, and uncovering context that shaped their interpretations. Where he failed was in blame-driven, trapping questions—phrased to corner, not to reveal. In retros, that approach polarizes instead of illuminating.

Instead, Paige argued, facilitators hold narrative power. By setting the right vibe, starting with a recap, seeking multiple perspectives, and asking open-ended questions—“What surprised you?” “How did the system respond differently than expected?” “What actions do you feel good about?”—they can surface organizational dynamics that never make it into a root cause writeup. Psychological safety is essential: when people feel safe to share pressures, emotions, and blind spots, retros generate empathy and true resilience.

Her closing reminder was clear: retros aren’t about discovering a single objective truth. They’re about weaving together multiple perspectives into a richer understanding of how systems work, how they fail, and how teams coordinate under stress. As Paige put it, the choice is yours: will your retros produce reliability theater—or resilience?

Security without speed bumps: making the secure path the intuitive path

Dennis Henry (Productivity Architect, Okta)

Dennis framed his talk around a simple truth: humans aren’t malicious when they work around security controls—they’re just trying to get work done. When the login prompt appears again and again, engineers optimize for flow: sticky notes with passwords, reused tokens, or disabled prompts. This isn’t rebellion; it’s survival. And when security creates friction, people will always route around it.

He defined this “security friction” as anything that taxes limited human attention: interruptions, context switches, delayed workflows. And he showed how “strong” controls can backfire. Push-based MFA invites fatigue attacks that end in accidental approvals. Forced password churn creates weaker passwords. Security fatigue leads to risky defaults. The lesson: bad friction amplifies risk. Good friction, on the other hand, provides strong defense with minimal burden, such as phishing-resistant MFA, device-bound signals, and secure-by-default workflows.

Case studies underscored the point. The 2022 Uber breach showed how attackers exploit push-bombing; resilient defenses like number matching, contextual prompts, and especially WebAuthn/passkeys close that gap. Docker once stored credentials in plaintext config files; adding credential helpers and just-in-time tokens reduced long-lived secrets. And at Okta, the team built Okta Credential Manager (OCM)—a tool that transparently mints short-lived credentials without changing existing workflows. Engineers use the same commands they always have, but behind the scenes, OCM eliminates copy-paste tokens, reduces credential sprawl, and improves auditability. The secure path becomes the default path.
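
The idea of replacing long-lived secrets with short-lived ones can be illustrated with a small sketch. It does not describe how OCM works internally. The names `ShortLivedCredential` and `mint_credential`, and the 15-minute default lifetime, are assumptions chosen for the example.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ShortLivedCredential:
    token: str
    expires_at: float  # Unix timestamp, in seconds

    def is_valid(self, now: Optional[float] = None) -> bool:
        """True while the credential has not yet reached its expiry time."""
        current = time.time() if now is None else now
        return current < self.expires_at


def mint_credential(
    ttl_seconds: int = 900, now: Optional[float] = None
) -> ShortLivedCredential:
    """Create a random token that expires `ttl_seconds` after issuance."""
    issued_at = time.time() if now is None else now
    return ShortLivedCredential(
        token=secrets.token_urlsafe(32),
        expires_at=issued_at + ttl_seconds,
    )


# Hypothetical usage with a fixed clock so the behaviour is easy to follow.
cred = mint_credential(ttl_seconds=60, now=1_000.0)
print(cred.is_valid(now=1_030.0))  # still inside the 60-second window
print(cred.is_valid(now=1_060.0))  # expired exactly at the deadline
```

A wrapper that mints a credential like this on each command invocation is one way the engineer's workflow could stay unchanged while copied or leaked tokens lose their value quickly.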

Dennis emphasized that defaults matter far more than training. Google’s auto-enrollment into 2SV cut account takeovers by half, while Microsoft reports MFA makes accounts 99.9% less likely to be compromised. If the sunny path is fast and reliable, shadow paths disappear. UX in internal tooling, he argued, is a security control.

He closed with a clear playbook: start by hardening MFA with number matching and deprecating SMS, pilot passkeys to build organizational muscle, and invest in helpers that remove secret-copying from daily workflows. Track leading indicators like onboarding time, login failures, prompts per day, and presence of long-lived tokens. Pair them with DORA metrics to show business impact.

The message was simple: resilience is secure flow. Lower auth toil, faster response, fewer workarounds, fewer incidents, and stronger culture. Treat friction reduction as a core resilience investment, measure it like product work, and make the secure path the easy path.

In conclusion

Across every talk at SEV0, one theme stood out: incidents aren’t just technical failures to be fixed. They’re opportunities to rethink how we work, whether that’s reshaping incident management as part of the product experience, embracing the human factors that shape every response, or reimagining SRE workflows with the help of AI. From Plaid’s pre-incidents to Zendesk’s massive migration, from Okta’s low-friction security to Skyscanner’s hard-won lessons, the message was clear: AI is accelerating a shift toward faster, more resilient, and more human-centered incident response. And this is just the beginning. We can’t wait to continue the conversation, and see what new lessons emerge, this October at SEV0 in London. 🔥
