Industry Interviews: Uma Chingunde, Vice President of Engineering at Render

Who are you, and what do you do?

I’m Uma Chingunde, and I’m Vice President of Engineering at Render. We’re the fastest way to host your apps and websites. We’re based in San Francisco and other places, and although we’re still quite a small team, we work with customers all across the world.

I’ve always worked in infrastructure myself, having started my career at VMware, where I was working on some of the world’s most popular virtualization platforms. Eventually, I worked my way up to become a consumer of the virtualization stack as an engineer!

While I’ve worked with many different teams over the years, Stripe was one of the largest I supported as a manager. I was there before joining Render, and my team at Stripe was responsible for managing some of the core infrastructure, specifically Compute.

How did you get involved with incident management?

Mostly through my time at Stripe. The company was growing really quickly when I joined, and it kept growing throughout my time there.

Although it probably goes without saying, customers depend on Stripe’s infrastructure to manage some of their most critical services, which is why incident management was pretty central to their culture. They had a very rigorous program, complete with weekly incident reviews.

I was working with a lot of experienced folks from companies that had also scaled up, such as Uber and Twitter. Although I came in when a lot of people had already done much of the hard work, we all shared a great deal of responsibility around incident management. We had a shared rotation for what we called Incident PM (Incident Commander in other places). I was on it for quite a while and saw incidents firsthand. Because we were growing rapidly and many people had already been through that kind of growth, they knew what to expect and were looking for ways to strengthen the foundations. There had also been some very interesting incidents in the early days that had built up the organizational muscle memory, which meant there were a lot of great tools and people to learn from.

When an incident occurred at Stripe, the engineers who owned that part of the stack would be the first to get paged. I learned that the most important thing for a manager was not to get in people’s way. In other words, let the experts do what they do best, and help out where you can! We learned by observing without interfering. There was a big focus on how to behave during an incident, which I think is one of the most important things of all.

What was the most difficult incident you were involved in?

A period of time comes to mind, as opposed to one specific incident!

We were migrating our service-to-service communication within Stripe to a tool called Envoy. We were going through an intense testing period during the rollout. During this phase, we encountered a combination of bugs in Envoy, as well as in how we’d configured it. Should anything go wrong, we could end up with large parts of Stripe being down.

Inevitably, we encountered a few incidents, some with broad impact and some sweaty-palms moments. Foundational infrastructure comes with a huge amount of responsibility. When we were dealing with those incidents, I found that having a blameless culture helped enormously. Getting the overall systems into better shape quickly took a lot of team effort.

What stands out as the most successful incident you were involved in?

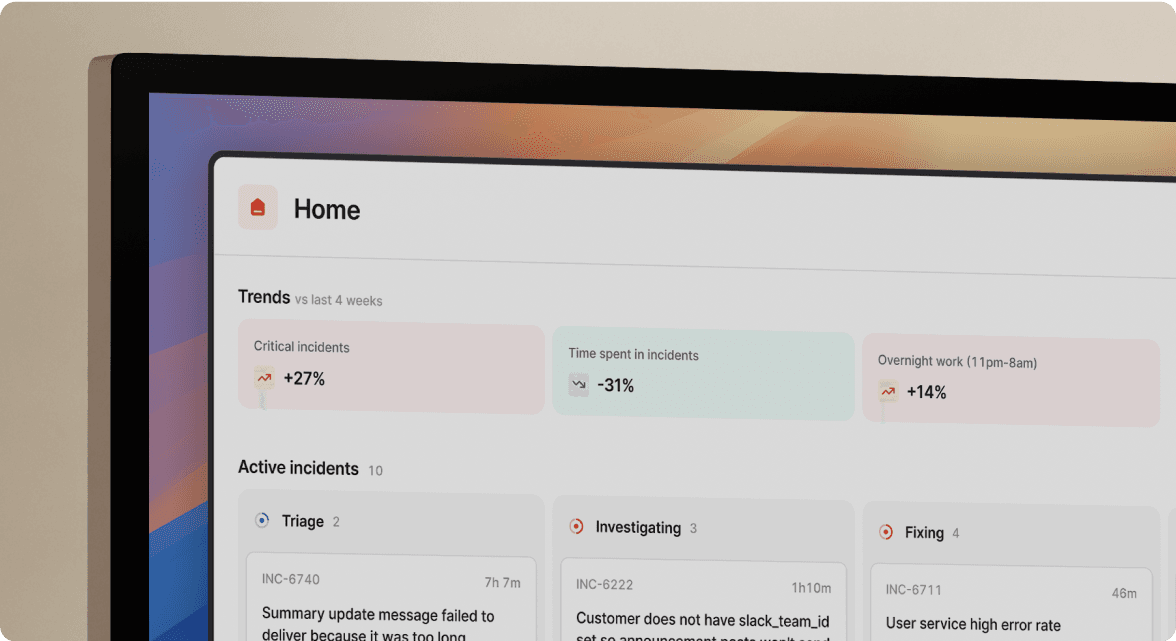

Good question! In general, good incidents are the ones where you understand what’s gone wrong and you can respond quickly. Recently at Render, we were going through a phase of adding more observability to our services, to make them easier to understand and debug, alongside the introduction of incident.io.

We encountered an incident where everything worked in lock-step. With our improved observability, someone noticed users were experiencing issues and opened an incident. Another person noticed the incident had been declared and immediately flagged a recent database change that could have caused the problems. Within minutes, we’d rolled back the change and the impact was over. Without the improvements on both fronts, this could easily have been hours of impact. It’s nice to get validation that what you’re working on is helping, even if it takes an incident to do so.

What led you to invest time and effort into incident management when you could have focused on other areas of the business?

When you’re providing an infrastructure product, reliability and security are the most important things. To achieve high standards here, it’s important to focus on the basics: getting better at dealing with anything that threatens your uptime or security. Incidents are going to happen whether you’re prepared or not, so it just makes sense to have something in place that removes the cognitive overhead of processes people would otherwise need to keep in their heads.

Developing a robust incident management process might feel like an overhead, but it’s really something that saves you time and effort down the line. Every incident you don’t need to open is downtime avoided and happier users. Adopting good tooling here is literally saving us time: we’re immediately able to move faster.

And finally, if you were managing an incident, and everything’s burning, what’s the one song you’d have on in the background?

Actually, I personally prefer to have no music in the background if I’m trying to concentrate on something! But that aside, you should let the engineers themselves pick the music, since they are the ones in the driver’s seat if there’s an incident. If you’re not a direct responder, and more in a management role like I am, you don’t get to pick the music! But, if it were my choice, I’d prefer silence. 🤫
