Building a GPT-style Assistant for historical incident analysis

Like most things, our AI Assistant started out as an idea.

One of our data scientists, Ed, was working with our customers to improve our existing insights. But the most common theme that kept surfacing was the wide range of use cases that our customers wanted to use insights for.

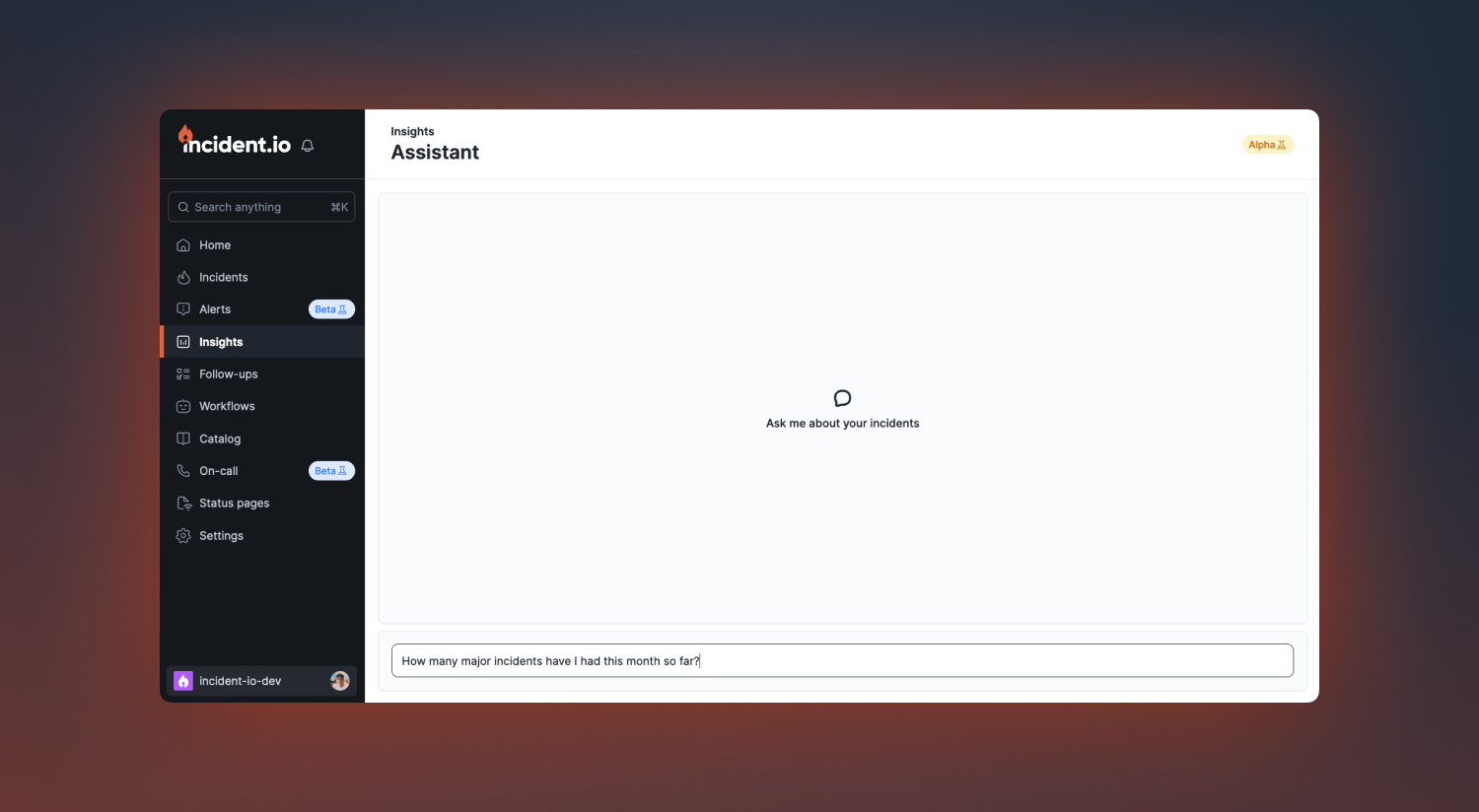

Using this user feedback as our inspiration, we came up with the idea of a natural language assistant that you can use to explore your incident data. But we were skeptical about the value of this, and so the task to prove this idea had any legs was picked up by one of our engineers.

Week 1: Prove it’s feasible

To make sure we didn’t spend too much time on this project, we limited our work to one week. The goal was a spike to test if an Assistant could be made to help our customers create complex visualizations and maybe even derive insights from them. At this point, OpenAI did not have a proper API for ChatGPT. Because of that, the plan was to create a thin wrapper over ChatGPT that would be able to create complex queries, which we would then fulfill before providing the result back to the user through the Assistant. With this plan drawn, we were ready to start the proof-of-concept (PoC) the following week.

Serendipity

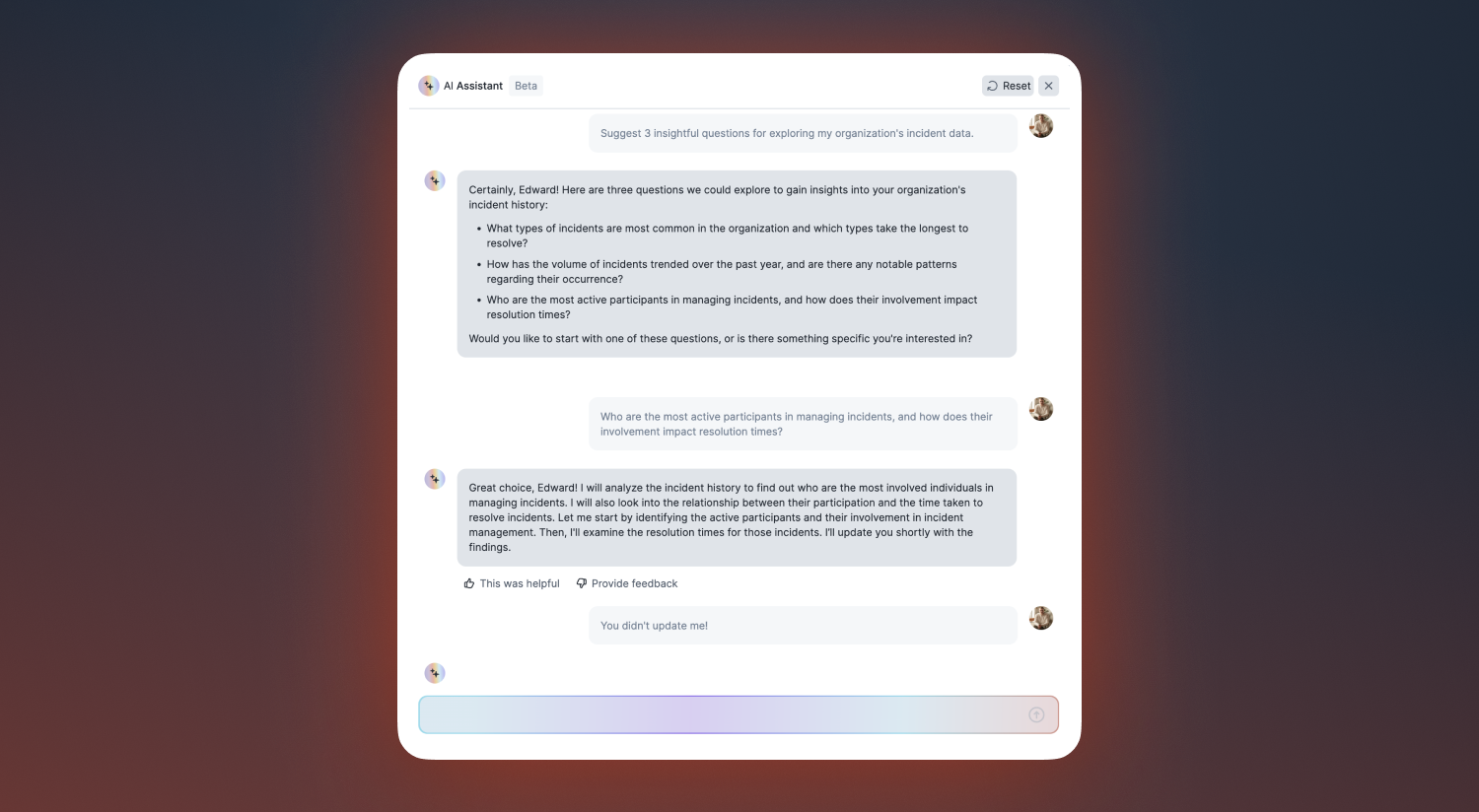

As it happened, the next week started with great news: OpenAI released their now well-known Assistants API. Among its many features, we could now use it to create segregated assistants, each with their own context, and use them to visualize data. This opened new possibilities for us, since these assistants have more intimate knowledge of your incident data, and we can rely on the Assistant not just for visualization but to infer meaning and relationships from that data.

The week started in full force with a single engineer and one of our champion customers offering their muscle as the first tester of our alpha Assistant.

The result was the most bare-bones implementation we could come up with. It was ugly and slow, really slow, sometimes taking up to 50s to respond, but… it worked. It wasn’t much to look at, but from that one week, we now had a working PoC of the future Assistant.

Week 2: Make a real thing

Our first week was a great win. Our champion customer validated our concept, proving its usefulness. Now, we wanted to move on and make it a real feature. The project was scaled up to include three engineers, one designer, and a two-week timeline. This is where I come into the picture (Hi 👋).

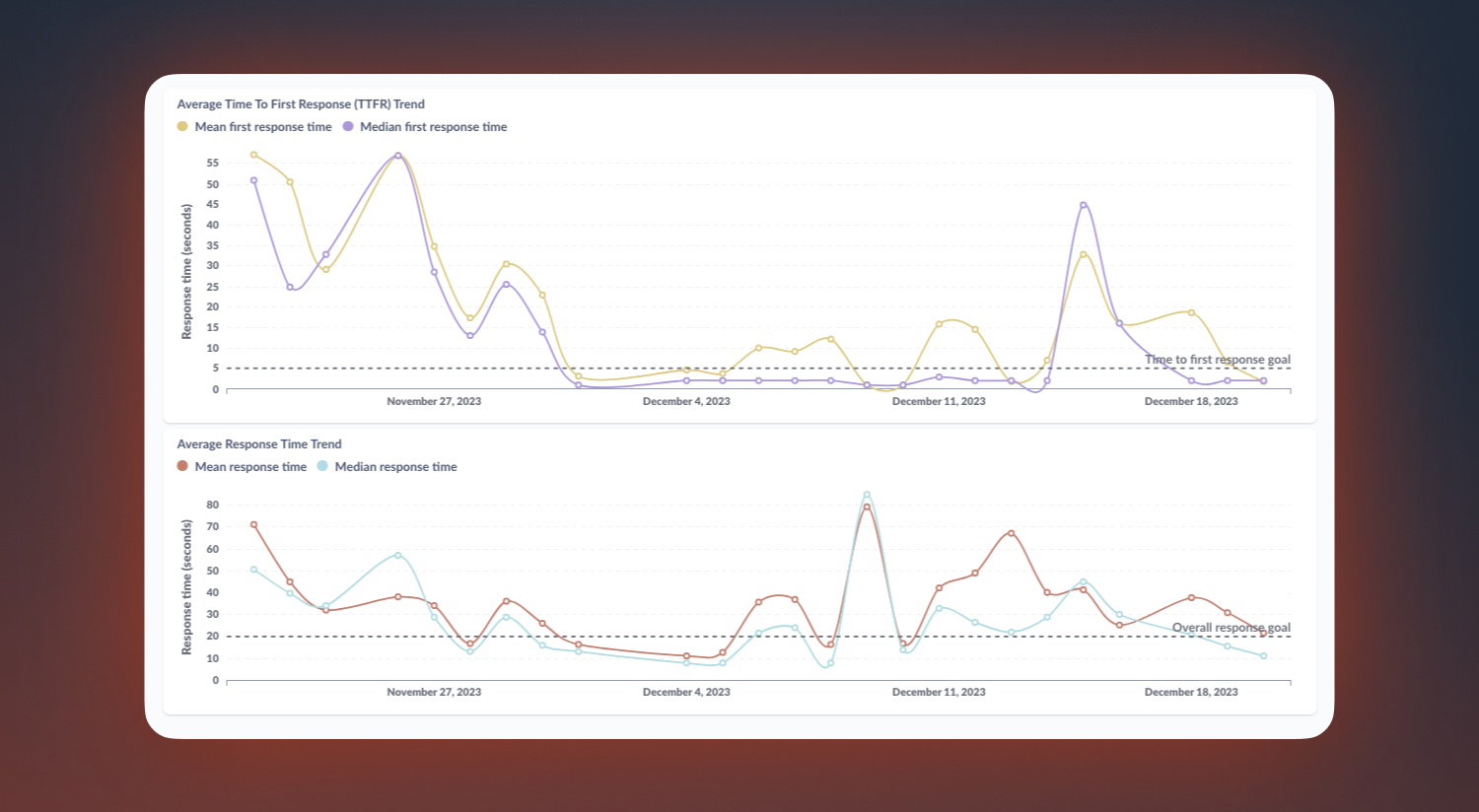

This time around, we took a more data-focused approach. We spent 20 minutes creating a Metabase dashboard to track and measure two main data points for our Assistant: performance and error rate.

Performance

For performance, the target was to get the Assistant to respond in under 5s for the first message and under 20s on average for all messages. This was a tall order from our current 50s average response times, and one that we weren’t even sure was feasible. It was a balancing act: if the Assistant doesn’t do a good job, its speed is irrelevant. However, if it’s not prompt enough, it becomes more of a task scheduler than an Assistant you converse with.

Error rate

Errors took many forms, but the most common ones were the Assistant failing to parse the dataset file, or hallucinating by confirming an action and then never completing it. Alongside performance, this was a top priority to get right for our Assistant to be useful.
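As a rough illustration of how these two data points could be computed from logged Assistant messages, here is a minimal sketch. It is not the dashboard's actual query: the `MessageLog` record, its field names, and the `summarize` helper are all hypothetical, and only the 5s and 20s targets come from the text above.

```python
from dataclasses import dataclass
from statistics import mean

FIRST_MESSAGE_TARGET_S = 5.0  # target from the post: first reply in under 5s
AVERAGE_TARGET_S = 20.0       # target from the post: under 20s on average


@dataclass
class MessageLog:
    """One logged Assistant reply (hypothetical shape, for illustration only)."""
    thread_id: str    # conversation the message belongs to
    index: int        # 0 for the first message in a thread
    latency_s: float  # seconds until the Assistant replied
    failed: bool      # True if the run errored or skipped a promised action


def summarize(logs: list[MessageLog]) -> dict:
    """Compute the two tracked data points: performance and error rate."""
    first_latencies = [m.latency_s for m in logs if m.index == 0]
    first_avg = mean(first_latencies)
    overall_avg = mean(m.latency_s for m in logs)
    return {
        "first_message_avg_s": first_avg,
        "overall_avg_s": overall_avg,
        "error_rate": sum(m.failed for m in logs) / len(logs),
        "meets_targets": (
            first_avg < FIRST_MESSAGE_TARGET_S and overall_avg < AVERAGE_TARGET_S
        ),
    }


# Made-up sample data: two threads with two messages each.
logs = [
    MessageLog("t1", 0, 4.0, False),
    MessageLog("t1", 1, 22.0, False),
    MessageLog("t2", 0, 3.0, False),
    MessageLog("t2", 1, 11.0, True),
]
summary = summarize(logs)
print(summary)
```

Tracking first-message latency separately from the overall average matters here because the two targets differ: a single slow follow-up can stay within the 20s average while a slow first reply would already break the 5s goal.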

A lot of work went into the prompt at this stage. It is safe to say that in the span of this project, we went from 0 to 100 on the topic of prompt engineering.

Among our more notable changes was moving from JSON to CSV for the dataset we give each user's assistant. For reasons we don't fully understand, the Assistant handles CSV much better, and the switch drastically improved our error rate.
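To make the JSON-to-CSV change concrete, here is a minimal sketch using only Python's standard library. The function name `incidents_json_to_csv` and the sample fields (`id`, `severity`, `duration_minutes`) are assumptions made up for illustration; the real dataset schema and conversion code are not shown in this post.

```python
import csv
import io
import json


def incidents_json_to_csv(raw_json: str) -> str:
    """Convert a JSON array of flat incident objects into CSV text."""
    incidents = json.loads(raw_json)

    # Collect the union of keys in first-seen order, so rows with missing
    # fields still line up under the right header.
    fieldnames: list[str] = []
    for incident in incidents:
        for key in incident:
            if key not in fieldnames:
                fieldnames.append(key)

    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=fieldnames, restval="", lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(incidents)
    return buffer.getvalue()


# Hypothetical sample data; the second incident has no duration.
sample = json.dumps([
    {"id": "INC-1", "severity": "major", "duration_minutes": 42},
    {"id": "INC-2", "severity": "minor"},
])
csv_text = incidents_json_to_csv(sample)
print(csv_text)
```

One visible difference in this sketch is that CSV states each field name once in the header, whereas JSON repeats it for every record, so the same data takes fewer characters. Whether that is related to the improvement we saw is a guess, not something we measured.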

The rest of the effort went into optimizing the Assistant and squashing bugs left and right. We started off with the GPT-4 model, which was great but slow, then switched to GPT-3.5, a much faster option but with a heavy hit to response quality, before finally settling on GPT-4 and focusing on refining the prompt along with some UX quality-of-life tweaks.

I’m happy to say that by the end of the second week, our metrics were looking a lot better, with both under our set targets. It was time to move to the next phase of the project.

Week 3: Beta launch

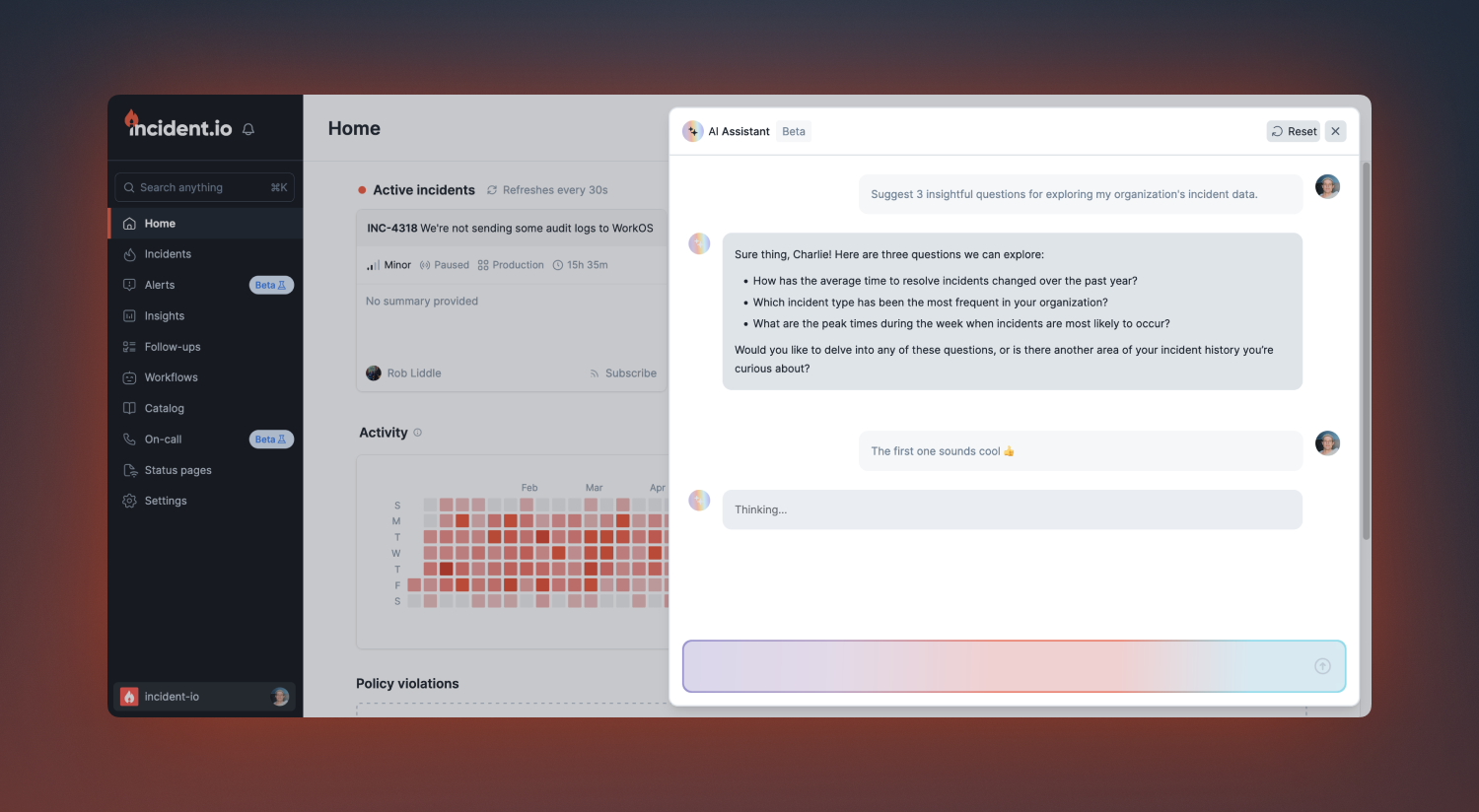

At this point, we were pretty confident in ourselves and the Assistant. But it was time to validate those assumptions with a bigger pool of customers. For our beta launch, we selected ten customers to give access to our Assistant.

Over the course of the next week, we collected as much feedback as possible.

Most notably, at this stage, an engineer with fresh eyes joined the project. This was a great help, since until then we had mainly focused on feature development, and his refactoring helped clean up our code and improve its readability, stability, and performance.

We can bear to look at the code again ✨

We then moved on to an “all-hands-on-deck” week-long push. With the feedback collected, four engineers and one designer tackled every piece of customer and internal feedback we could get our hands on in preparation for launch.

Week 5-ish: Ready to launch

Just over a month has zipped by. We've returned from a well-deserved break, recharged and more excited than ever to share our latest development with you.

What a journey it's been! From the early days of "No Assistant" to now proudly presenting "Yes Assistant" 😉, we've made leaps and bounds. The capabilities and performance of our assistant have soared, and while there's always room to grow and improve, it's time to get this into your hands and see the magic you create with it!

Curious about what this assistant can do? Dive into our changelog for all the juicy details. If you want to know even more about our journey, check out our other stories here and here.

To our valued customers, the stage is set for you to experience this first-hand. Visit the Insights page and launch the assistant from the banner. We're buzzing with excitement to hear from you and learn how we can evolve this feature to new heights.
