Alert fatigue solutions for DevOps teams in 2025: What works

Alert fatigue is a critical challenge for DevOps teams: they receive thousands of alerts each week, and most are noise that slows response and contributes to outages. AI-driven approaches and deliberate alert management can significantly reduce the problem.

Understanding alert fatigue and its business impact

What is alert fatigue?

Alert fatigue occurs when teams are overwhelmed by excessive alerts, leading to desensitization and reduced responsiveness to critical incidents. For instance, an engineer bombarded with 47 alerts during a shift may dismiss a genuine database failure as mere noise.

This desensitization undermines system reliability, creating blind spots when maximum visibility is essential.

How does it affect response times and team health?

Noisy alerts increase mean time to resolution (MTTR) and raise burnout risk. Research shows teams receive over 2,000 alerts weekly, of which only 3% need immediate action. Critical alerts get buried in the rest, so they are missed and outages run longer.

The financial impact is significant: unplanned downtime costs organizations an average of $5,600 per minute, and chronic alert fatigue adds recruitment and retention costs on top.

Key industry statistics that illustrate the problem

| Metric | Percentage | Impact |
|---|---|---|
| Alerts ignored daily | 67% | Critical issues missed |
| False positive rate | 85% | Wasted investigation time |
| Teams with alert overload | 74% | Reduced response effectiveness |
| MSPs struggling with tool integration | 89% | Duplicate alert sources |
| Analysts overwhelmed by context gaps | 83% | Slower triage decisions |

These statistics show that alert fatigue is a systemic issue in DevOps organizations, particularly among managed service providers (MSPs), 89% of which struggle with tool integration.

Why alerts explode: the five dominant root causes

Over-sensitive static thresholds

Static thresholds trigger alerts based on fixed values, ignoring legitimate variations. For example, a static CPU threshold of 80% might alert during normal traffic spikes. AI-driven adaptive thresholds adjust based on historical patterns, reducing false positives.

Duplicate and redundant monitoring tools

Tool proliferation leads to overlapping coverage, resulting in multiple alerts for a single issue. Conduct a tool audit to:

- List all monitoring tools

- Create an overlap matrix

- Identify redundant areas

- Consolidate tools to avoid duplicates
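
The overlap-matrix step of the audit can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the tool names and the signals each one covers are hypothetical placeholders for your own inventory.

```python
from itertools import combinations

# Hypothetical inventory: tool name -> set of signals it monitors.
inventory = {
    "tool_a": {"cpu", "memory", "http_latency"},
    "tool_b": {"http_latency", "error_rate"},
    "tool_c": {"cpu", "disk"},
}


def overlap_matrix(tools):
    """Return {(tool_x, tool_y): shared signals} for every overlapping pair."""
    overlaps = {}
    for a, b in combinations(sorted(tools), 2):
        shared = tools[a] & tools[b]
        if shared:
            overlaps[(a, b)] = shared
    return overlaps


for pair, shared in overlap_matrix(inventory).items():
    print(pair, sorted(shared))
```

Each pair that appears in the result is a candidate for consolidation, since both tools can alert on the same underlying signal.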

Poor prioritization & tiering

Treating all alerts equally causes low-impact issues to compete with critical ones. Implementing tiered escalation policies allows engineers to focus on urgent matters. A signal-to-noise KPI can help assess prioritization effectiveness.

Lack of contextual information

Analysts often feel overwhelmed due to insufficient metadata in alerts. Missing context delays resolution as engineers gather necessary information. Modern alerting platforms can enrich alerts with context from service catalogs and deployment data.
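
As a rough sketch of what enrichment means in practice, the function below attaches ownership and deployment context to an alert before it reaches an engineer. The catalog structure, field names, and sample values are all assumptions made for illustration; they do not describe any particular platform's schema.

```python
# Hypothetical lookup tables standing in for a service catalog
# and a deployment history feed.
SERVICE_CATALOG = {
    "checkout": {"owner": "payments-team", "runbook": "runbooks/checkout.md"},
}
RECENT_DEPLOYS = {
    "checkout": ["v1.4.2"],
}


def enrich(alert, catalog=SERVICE_CATALOG, deploys=RECENT_DEPLOYS):
    """Return a copy of the alert with owner, runbook and deploy context."""
    service = alert.get("service")
    entry = catalog.get(service, {})
    return {
        **alert,
        "owner": entry.get("owner", "unknown"),
        "runbook": entry.get("runbook"),
        "recent_deploys": deploys.get(service, []),
    }


print(enrich({"service": "checkout", "name": "error_rate_high"}))
```

An alert for a service missing from the catalog still passes through, with the owner marked as unknown, which itself highlights a catalog gap worth fixing.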

Flapping and noisy seasonal patterns

Flapping alerts frequently trigger and resolve without actionable information. Seasonal traffic patterns can also create false positives. Implementing hysteresis and time-based suppression can mitigate this noise.
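
Hysteresis can be illustrated with a small state machine: the alert fires above one threshold but only resolves below a lower one, so a metric hovering around a single value does not flap. The thresholds (80 and 70) and the sample readings are assumptions chosen for the example, and time-based suppression is not covered here.

```python
class HysteresisAlert:
    """Fire above `high`; resolve only once the value drops below `low`."""

    def __init__(self, high, low):
        if low >= high:
            raise ValueError("low must be below high")
        self.high = high
        self.low = low
        self.firing = False

    def update(self, value):
        """Return 'fire', 'resolve', or None when the state is unchanged."""
        if not self.firing and value > self.high:
            self.firing = True
            return "fire"
        if self.firing and value < self.low:
            self.firing = False
            return "resolve"
        return None


alert = HysteresisAlert(high=80, low=70)
samples = [78, 82, 79, 81, 77, 83, 69]
events = [alert.update(v) for v in samples]
print(events)
```

With these samples the alert fires once and resolves once. A single static threshold at 80 would have fired three times over the same readings.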

Immediate tactics to cut noise and boost signal

Tune thresholds with dynamic baselines

Dynamic baselines move alerting from reactive to predictive by learning what normal behavior looks like. The process includes:

- Collect baseline data: Use at least 30 days of historical metrics.

- Train model: Analyze patterns and set confidence bands.

- Monitor drift: Continuously update baselines with evolving behavior.
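
The three steps above can be sketched with a simple statistical baseline. This is one possible approach, assuming a band of the mean plus or minus `k` standard deviations; production systems typically use richer models and far more data than the ten hypothetical CPU readings shown here.

```python
from collections import deque
from statistics import mean, stdev


def confidence_band(history, k=3.0):
    """Return (lower, upper) as mean +/- k standard deviations."""
    mu = mean(history)
    sigma = stdev(history)
    return mu - k * sigma, mu + k * sigma


def is_anomalous(value, history, k=3.0):
    """True when value falls outside the learned confidence band."""
    lower, upper = confidence_band(history, k)
    return value < lower or value > upper


# Hypothetical CPU readings (%) for the same hour on previous days.
# A bounded deque keeps only the most recent readings.
history = deque([62, 65, 70, 68, 64, 66, 71, 69, 63, 67], maxlen=10)

print(is_anomalous(74, history))  # inside the learned band
print(is_anomalous(85, history))  # outside the band

# Monitor drift: appending a new reading evicts the oldest one,
# so the band follows gradual changes in behavior.
history.append(72)
```

Note that a static threshold of 70% would have fired on the reading of 71 in the history, even though it sits well within normal variation for this service.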

Implement tiered escalation policies

A three-tier escalation model aligns alert severity with appropriate responses:

Low tier: Non-urgent issues

- Route to dedicated Slack channels

- No immediate paging

- 4-hour response SLA

Medium tier: Attention required within hours

- Send to team channels with notifications

- Escalate if unacknowledged after 30 minutes

- 1-hour response SLA

High tier: Critical issues

- Page on-call engineer immediately

- Auto-invite service owners

- 15-minute response SLA
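
The three tiers can be expressed as a small routing table. The sketch below encodes the SLAs and the 30-minute escalation rule described above; the channel names and the `Policy` structure are hypothetical and would map onto whatever your alerting platform provides.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class Policy:
    channel: str             # where the alert is delivered (hypothetical names)
    page_on_call: bool       # whether the on-call engineer is paged
    response_sla: timedelta  # expected time to first response


POLICIES = {
    "low": Policy("slack:#alerts-low", False, timedelta(hours=4)),
    "medium": Policy("slack:#team-alerts", False, timedelta(hours=1)),
    "high": Policy("pager:on-call", True, timedelta(minutes=15)),
}

MEDIUM_ESCALATION_AFTER = timedelta(minutes=30)


def route(severity):
    """Look up the policy for a severity tier."""
    return POLICIES[severity]


def should_escalate(severity, unacknowledged_for):
    """Medium alerts escalate after 30 minutes without acknowledgement."""
    return severity == "medium" and unacknowledged_for >= MEDIUM_ESCALATION_AFTER


print(route("high"))
print(should_escalate("medium", timedelta(minutes=45)))
```

Keeping the tiers in data rather than scattered across alert rules makes it easier to review and adjust them during audits.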

Consolidate alerts via correlation engines

Alert correlation engines group related notifications, reducing volume and providing richer context. Instead of multiple alerts, teams receive a single notification indicating "web service degradation" with all related symptoms.
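
A very simplified form of correlation groups alerts by service and time proximity. The sketch below assumes each alert carries a `service`, a `name`, and a timestamp in seconds, and uses a five-minute window; real correlation engines also use topology and dependency data, which this example ignores.

```python
def correlate(alerts, window_seconds=300):
    """Group alerts for the same service that arrive within
    window_seconds of the first alert in the group."""
    groups = []
    open_groups = {}  # service -> group currently accepting alerts
    for alert in sorted(alerts, key=lambda a: a["ts"]):
        group = open_groups.get(alert["service"])
        if group is None or alert["ts"] - group["start"] > window_seconds:
            group = {
                "service": alert["service"],
                "start": alert["ts"],
                "symptoms": [],
            }
            open_groups[alert["service"]] = group
            groups.append(group)
        group["symptoms"].append(alert["name"])
    return groups


# Hypothetical alert stream (timestamps in seconds).
alerts = [
    {"service": "web", "name": "high_latency", "ts": 0},
    {"service": "web", "name": "error_rate", "ts": 40},
    {"service": "db", "name": "slow_queries", "ts": 60},
    {"service": "web", "name": "cpu_saturation", "ts": 90},
    {"service": "web", "name": "high_latency", "ts": 1000},
]

for group in correlate(alerts):
    print(group["service"], group["symptoms"])
```

Here five raw alerts collapse into three notifications, and the first "web" notification carries all three related symptoms.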

Automate common remediation steps

Automating routine fixes can quickly resolve alerts while alleviating on-call burdens. incident.io workflows enable safe automation through:

- Approval gates

- Dry-run mode

- Rollback capabilities

- Audit logging
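
To make these four safeguards concrete, here is a generic sketch of how they fit together around a remediation step. It is not incident.io's workflow API; the function name, its parameters, and the example actions are all hypothetical.

```python
def run_remediation(action, rollback, *, approved, dry_run=True, audit_log=None):
    """Run `action` behind an approval gate, with dry-run support,
    rollback on failure, and an audit trail of every decision."""
    log = audit_log if audit_log is not None else []
    if not approved:
        log.append("blocked: approval missing")
        return "blocked"
    if dry_run:
        log.append("dry-run: action not executed")
        return "dry-run"
    try:
        action()
        log.append("executed")
        return "executed"
    except Exception as exc:
        log.append(f"failed: {exc}")
        rollback()
        log.append("rolled back")
        return "rolled-back"


def failing_restart():
    # Hypothetical remediation step that fails.
    raise RuntimeError("service did not come back up")


def restore_previous_state():
    # Hypothetical rollback step.
    print("restoring previous state")


audit = []
outcome = run_remediation(
    failing_restart, restore_previous_state,
    approved=True, dry_run=False, audit_log=audit,
)
print(outcome, audit)
```

Defaulting `dry_run` to `True` means a caller has to opt in explicitly before anything is changed, which is a reasonable starting point while trust in the automation is still being built.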

AI-driven triage: turning alerts into actionable insights

Contextual AI pre-investigation (AI SRE)

AI SRE enhances alert triage by automatically gathering relevant context, reducing manual investigation time by up to 40%. This includes:

- Recent logs

- Deployment history

- Service ownership details

- Historical incident patterns

Predictive anomaly detection and early warning

Generative AI anomaly detection identifies deviations before they trigger alerts, allowing proactive intervention. The system analyzes multiple data streams to catch issues early when remediation is easier.

Automated fix suggestions with safety checks

AI-generated remediation scripts suggest fixes while ensuring human oversight. Key safety features include:

- Confidence scoring

- Approval-only execution

- Canary testing

- Automatic rollback

Building trust: transparency, explainability, and human-in-the-loop

Trust in AI systems requires transparency and clear human intervention paths. Essential features include:

- Confidence scores

- Reasoning displays

- Decision audit trails

- Manual override options

This hybrid model leverages AI efficiency while preserving human expertise for complex situations.

Designing a sustainable alert-management lifecycle

Continuous audit and signal-to-noise KPI

Sustainable alert management involves ongoing measurement. The signal-to-noise KPI (actionable alerts divided by total alerts) helps teams set goals, with targets of >30% actionable alerts indicating effective tuning.
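
The KPI is simple enough to compute directly. The sketch below uses the figures quoted earlier in this article (roughly 2,000 alerts per week with 3% actionable, so about 60 actionable alerts) purely as sample input; the function names are illustrative.

```python
def signal_to_noise(actionable, total):
    """Actionable alerts divided by total alerts, as a fraction."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= actionable <= total:
        raise ValueError("actionable must be between 0 and total")
    return actionable / total


def meets_target(ratio, target=0.30):
    """True when the ratio exceeds the target (30% by default)."""
    return ratio > target


ratio = signal_to_noise(actionable=60, total=2000)
print(f"{ratio:.0%} actionable, target met: {meets_target(ratio)}")
```

Tracking this number per alert source, not just in aggregate, makes it easier to see which rules are responsible for most of the noise.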

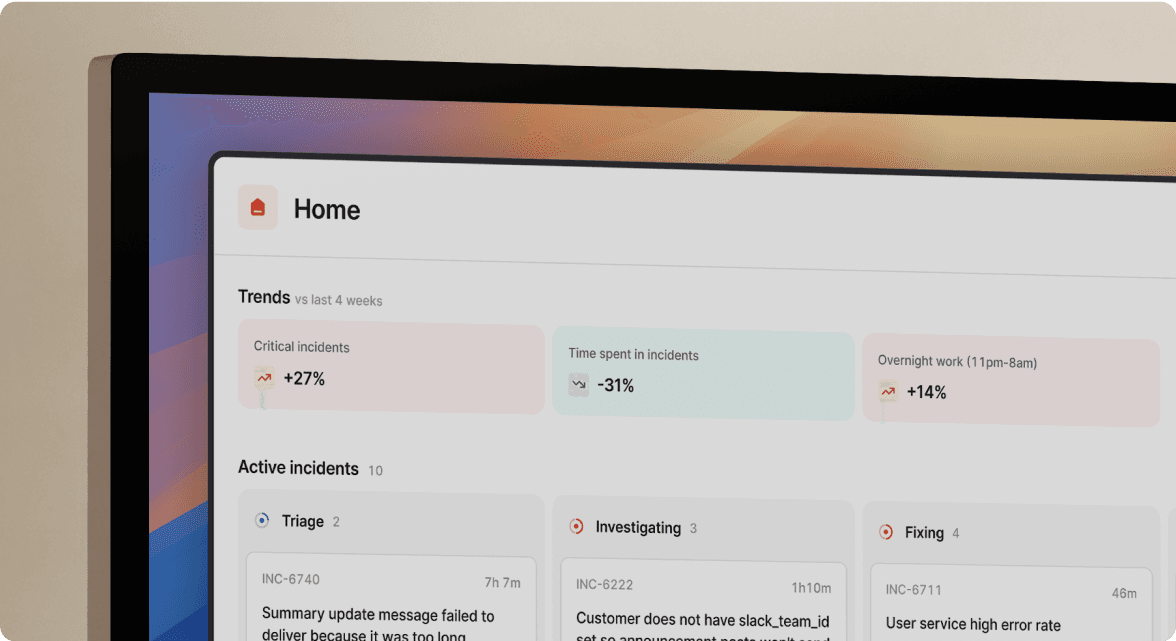

Quarterly audits using incident.io's dashboards enable systematic improvements by reviewing alert trends and identifying noisy alert sources.

Embedding AI SRE into Slack/Teams workflows

Integrating AI SRE into communication channels streamlines workflows and reduces context switching. Key enhancements include:

- Auto-invite functionality

- Channel tagging

- Action buttons for remediation

- Status updates for stakeholders

Post-incident learning loops and automated documentation

Automated documentation captures incident learnings through:

- Timeline generation

- Pre-populated post-mortem templates

- Action item tracking

Linking tickets to service catalog entries creates a knowledge base for future incident response.

Team-wellness practices: balanced on-call rotation and burnout monitoring

Alert management must address human factors to prevent burnout. Effective practices include:

- Rotation limits

- Recovery windows

- Load balancing

- Escalation support

Surveys and dashboards track burnout trends, enabling proactive intervention.

Frequently asked questions

How do I know if my alerts are too noisy?

If fewer than 10% of your alerts are actionable, you likely have significant noise. Healthy systems typically achieve 30-50% actionable rates. Monitor team feedback for additional tuning signals.

What's the safest way to automate remediation without breaking compliance?

Use incident.io's workflow engine to automate low-risk fixes with approval gates, complete audit logs, and rollback mechanisms. Gradually build confidence while maintaining oversight for high-impact changes.

Can AI replace my on-call engineer?

AI SRE enhances triage and suggests fixes but cannot replace human judgment for high-impact decisions. AI serves as a force multiplier, improving efficiency while leveraging human expertise.

How often should I review and retune alert thresholds?

Review thresholds quarterly and after any significant change. Dynamic baselines automate much of the routine tuning, so quarterly reviews can focus on AI performance and feedback from incidents.

My stack uses multiple monitoring tools. How do I avoid duplicate alerts?

Utilize incident.io's correlation engine to consolidate alerts, mapping each tool to a single service. Create a monitoring inventory to identify overlaps and de-duplicate alerts effectively.

What metrics should I use to prove ROI after reducing alert fatigue?

Track reductions in MTTR, improvements in the signal-to-noise ratio, decreased on-call hours, and team satisfaction through surveys. Monitoring retention rates for on-call engineers also provides insights into ROI.

If AI suggests a fix that fails, what's the fallback?

Implement a rollback step that automatically reverts changes and notifies the engineer. The system should monitor key metrics post-fix and trigger rollbacks if necessary, while maintaining human escalation paths.
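
One way to picture this fallback is a post-fix check that watches a key metric and reverts if it degrades. The sketch below is an assumption-laden illustration: the function name, the single-metric check, and the callbacks for rollback and notification are all hypothetical.

```python
def verify_fix(metric_samples, threshold, rollback, notify):
    """Check post-fix metric samples; roll back and notify the engineer
    as soon as any sample exceeds the threshold."""
    for sample in metric_samples:
        if sample > threshold:
            rollback()
            notify(f"fix reverted: metric {sample} exceeded {threshold}")
            return False
    return True


messages = []
reverted = []

# Hypothetical error-rate samples (%) collected after applying the fix.
healthy = verify_fix(
    [0.4, 0.6, 2.5],
    threshold=1.0,
    rollback=lambda: reverted.append(True),
    notify=messages.append,
)
print(healthy, messages)
```

The notification step matters as much as the rollback: the engineer stays informed and can escalate if the automatic revert does not restore service.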
