October wiggle week: Platform improvements, game days, and new blog content

October 15, 2024

Aside from shipping new product features left and right each week, on occasion we like to take the opportunity to invest in other areas of our platform and our team that are equally important. For instance, we've run two backlog crushes this year (in January and May) that have focused on bug fixes, performance improvements, polish, and other small feature requests.

This week our engineering team spent time making a few significant under-the-hood improvements to make our application even more resilient, reliable, and easier and quicker for us to build on.

We also spent time in an area core to incident.io—our own incident response. The team ran game days throughout the week for several different critical scenarios to practice how we respond to incidents on our platform. After each scenario, engineers who were part of the response then went through a debrief to understand:

- What went well?

- What could we improve?

- What could we do in our own product to make responding to incidents even better for us and our customers?

Finally, both our data and engineering teams did a lot of writing for our blog. Stay on the lookout for some great technical content dropping in the coming weeks, covering topics like observability, debugging, our own infrastructure stack, building a good data culture, and more.

Here are the highlights from throughout the week:

TypeScript performance

Our web dashboard is a big React app written in TypeScript, with almost 300,000 lines of code. Recently, it's been getting slower to work on and test on our computers.

This slowdown means it takes us longer to add new features, which we want to avoid. We believe our engineers should be able to work quickly without waiting for the computer to catch up.

We spent a few days working on this problem and made big improvements. We cut the type-checking time for our most complex files from over 4 seconds to just milliseconds. We also reduced the time it takes to process all our code from 40 seconds to 27 seconds.

To keep things running smoothly, we've added checks to spot any future slowdowns. We've also found more ways to make our work even faster and more efficient in the future.

Chat message developer experience

Sending messages to users and channels is an incredibly important part of incident.io. It’s not just important that this is reliable, but it’s also important that those messages are easy for our engineers to write, test and iterate on.

Behind the scenes, we have a chat library, which is designed to make sending a message easy. Earlier this year, we shipped a Teams integration, and it had been a while since we took a step back to see whether the library was still serving us well.

Three of us spent a few days migrating some of our older Slack-only code over to the chat library. We’d migrate something, sit and chat about the things that felt either confusing or high-friction, and then go and try to fix them. We managed to get rid of some of our old Slack code, polish up some of our existing messages, and make life easier for incident.io message writers of the future.
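To illustrate the general idea of a provider-agnostic chat library, here is a minimal sketch in Go. It is not our actual library: `Message`, `Renderer`, `SlackRenderer`, `TeamsRenderer`, and `RenderAll` are all hypothetical names invented for this example. It assumes a message is described once and then rendered per provider, so the author never writes Slack-only formatting.

```go
package main

import "fmt"

// Message is a hypothetical provider-agnostic description of a chat message.
type Message struct {
	Title string
	Body  string
}

// Renderer turns a Message into text formatted for one chat provider.
type Renderer interface {
	Render(m Message) string
}

// SlackRenderer formats for Slack, where bold text uses single asterisks.
type SlackRenderer struct{}

func (SlackRenderer) Render(m Message) string {
	return fmt.Sprintf("*%s*\n%s", m.Title, m.Body)
}

// TeamsRenderer formats using standard Markdown, where bold text uses
// double asterisks and paragraphs are separated by a blank line.
type TeamsRenderer struct{}

func (TeamsRenderer) Render(m Message) string {
	return fmt.Sprintf("**%s**\n\n%s", m.Title, m.Body)
}

// RenderAll renders one message for every registered provider, keyed by
// provider name. The message author only writes the Message once.
func RenderAll(m Message, renderers map[string]Renderer) map[string]string {
	out := make(map[string]string, len(renderers))
	for name, r := range renderers {
		out[name] = r.Render(m)
	}
	return out
}
```

With this shape, supporting another provider means adding one more `Renderer` rather than touching every message, and each message can be tested without calling any chat API.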

Database library upgrades

Having a performant and reliable connection to our database lays the foundation for the rest of our application. It ensures everyone has a snappy experience in our dashboard, mobile app, and Slack or Microsoft Teams. We also want to make sure that engineers can work at speed, with most of the low-level work taken care of by our tooling, making it easy for everyone to ship a product experience that feels instant.

To interact with our database on our servers, we rely on two libraries, Gorm and pgx. Gorm takes care of querying our database, while pgx handles the actual network connection to the database. Over the last year, we had fallen behind on the versions of these libraries and also knew we had an opportunity to improve our use of pgx to become more performant and resilient.

We spent a couple of days upgrading these libraries to their latest versions in our codebase, to gain any benefits that had been contributed recently. Then, we spent some time replacing our existing use of pgx with a variant that reduces the number of connections we need to make to our database. The result is that we end up slightly more performant, and even better able to handle traffic spikes than before.
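One common way to reduce the number of database connections is to pool them, so that requests reuse idle connections instead of opening new ones. pgx ships a pooling package (`pgxpool`), although this post does not say which variant we adopted. The sketch below uses only the Go standard library to show the idea. `Conn`, `Pool`, and their methods are hypothetical stand-ins, not the pgx API.

```go
package main

import (
	"errors"
	"sync"
)

// Conn stands in for a real database connection (hypothetical type).
type Conn struct{ ID int }

// ErrPoolExhausted is returned when every connection is in use.
// A production pool would usually wait for a release instead of failing.
var ErrPoolExhausted = errors.New("pool exhausted")

// Pool hands out at most max connections and reuses idle ones.
type Pool struct {
	mu     sync.Mutex
	idle   []*Conn
	opened int
	max    int
}

// NewPool creates a pool that will open at most max connections.
func NewPool(max int) *Pool { return &Pool{max: max} }

// Acquire returns an idle connection if one exists, and only opens a
// new connection when none is idle and the limit has not been reached.
func (p *Pool) Acquire() (*Conn, error) {
	p.mu.Lock()
	defer p.mu.Unlock()
	if n := len(p.idle); n > 0 {
		c := p.idle[n-1]
		p.idle = p.idle[:n-1]
		return c, nil
	}
	if p.opened >= p.max {
		return nil, ErrPoolExhausted
	}
	p.opened++
	return &Conn{ID: p.opened}, nil
}

// Release puts a connection back so a later Acquire can reuse it.
func (p *Pool) Release(c *Conn) {
	p.mu.Lock()
	defer p.mu.Unlock()
	p.idle = append(p.idle, c)
}

// Opened reports how many connections have been opened in total.
func (p *Pool) Opened() int {
	p.mu.Lock()
	defer p.mu.Unlock()
	return p.opened
}
```

In this sketch, a burst of sequential requests that each acquire and release a connection opens only one connection in total, and the `max` limit caps how many the database ever sees during a traffic spike.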

Game days

We also ran three game days, putting all our engineers through their paces responding to simulated major incidents.

As a team, we deal with small incidents all the time, but we (thankfully) rarely have a chance to test our incident response for more severe incidents affecting our whole product. These typically involve coordinating larger teams of responders and communicating clearly with stakeholders, all whilst keeping a cool head.

Game days offer us the opportunity to practice our response in a safe environment. Through this, we’ve improved our incident process, and even gained a few new insights into our own product!

You can read all about our thoughts on game days in the incident response guide, or find out more about the last time we ran them.

🚀 What else we’ve shipped

Bug fixes

- We'll now order your alert attributes in Slack messages according to the order in your configuration

- We'll no longer show the Acknowledge button in Slack on expired escalations

- We'll error with the correct information when trying to create an override for a non-on-call responder in the API

- Fixed an issue where we were overly truncating some field descriptions in Slack modals

- Fixed an issue where pinned images in Slack weren't being added to the incident timeline

- Fixed a bug that prevented promotion of a Catalog attribute to a type

- Fixed an issue where some integrations were showing up multiple times on the page

Improvements

- When extracting metadata from an alert payload, we'll either show you the last successful result or the full alert payload if you encounter a JavaScript error

- Increased the limit for bulk-creating catalog entries

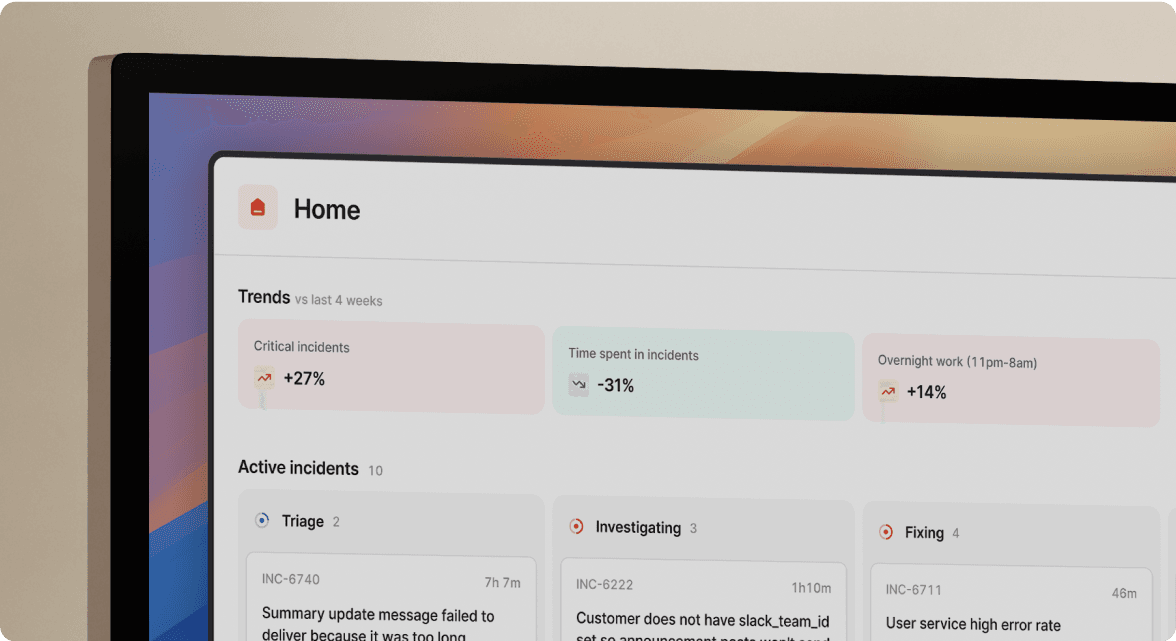

- Improved how we calculate incident workloads within insights
