Industry Interviews: Colm Doyle, Incident Commander at Slack

Welcome to our Industry Interviews series, where we speak to a variety of interesting people about all things incident management.

Who are you, and what do you do?

My name’s Colm Doyle (@colmisainmdom), and I head up Developer Advocacy at Slack, based in Dublin, Ireland. I’m also part of what we call the major incident commander rotation at Slack.

How did you end up as an incident commander?

It’s a funny story! When we were back in the physical office, I literally sat with my back to V Brennan, the Director of Engineering, Core Infrastructure for Slack.

Her team ran many pager rotations, and one of them was the incident commander rotation. One of the people who ran incident command at Slack came over to the Dublin office to run training, and V recommended I do it. I was interested because incident management is something developers ask about a lot, and you’ve got folks like incident.io trying to help. It’s a popular topic on the Slack platform.

However, back in 2019, my life was a series of airports and conferences. It wasn’t the right time to be carrying a pager. So I said, look, keep me on the back burner.

Then, COVID happened and I was stuck at home a lot! As they were figuring out the staffing of the rotation, I put my hand up and said, well, I'm here now, I’m not traveling as much, let me do it. So seven billion years into the pandemic, I’m on the IC rotation every Wednesday during business hours!

Is this the first time you’ve carried the pager, or were you involved in incidents in a previous life?

Before Slack, I was an early employee at a startup called Kitman Labs. When I started there, I ran their mobile team and by the end of it, I was running the entire engineering team. We didn’t really have a formal incident response process at the start, but I’d get called whenever things went wrong.

Before that I worked at Facebook. I wasn't part of Facebook's incident commander rotation, but I was occasionally a responder for an incident, and would also participate in weekly postmortem reviews — we called them SEV reviews.

Nice, that’s a great overview of how you got here. Switching things up to talk about your experience running incidents. Is there a single incident that stands out in your mind as being particularly memorable?

I think I've been relatively lucky. I don't know that I've ever had to run an incident where I had cold sweats. But one stands out in my mind that happened near the start of my incident commander rotation at Slack. The details of what actually went wrong escape me at this point, but I remember how I was feeling.

It was a SEV-1 incident: the highest severity at Slack. The whole service went down pretty rapidly. Normally, we run all of our incidents through Slack with our dedicated tooling. However, in this case, we were hard down and unable to do that. We had to fall back to Zoom; our bot tooling wasn’t available, and I lost a lot of the conveniences I had grown to rely on. Meanwhile, everything was going 100 miles an hour.

Responding to an incident on Zoom is very different to responding in Slack. It’s less chaotic in Slack, as people can respond in threads. But if you’re on a call, people tend to start talking over each other, which can be stressful. I remember calling on people by name, like a teacher!

When you start as an incident commander at Slack, you shadow the primary. Once you feel comfortable, you become the primary, and then you have a shadow. In this incident, I was being shadowed by an incredibly talented engineer named Laura Nolan. Thankfully, I had Laura open in a separate window, and she encouraged and supported me through the process.

I was still new to the command rotation at this point, and I distinctly remember the seniority of the people involved in this incident. We had Cal Henderson (CTO), the VP of Customer Service, and various other important people from Slack. I was intimidated less by the technical details of the incident, and more by the seniority and number of people on the call.

I got a nice note from Cal, our CTO, after the incident. But that's the one that I have scar tissue over!

That’s a good segue into dealing with stress. Slack is a significant product that powers a decent part of the world’s productivity. Being the first responder for that service must be stressful. How do you try and stay relaxed on the Wednesdays that you carry the pager?

My stress levels are binary. I’m either not stressed at all, or I am extremely stressed. I have managed to not let Slack incidents be one of the things that stresses me, with the exception of the incident I was just talking about.

I don’t have an engineering role at Slack, which I think gives me an advantage in incident response. It's rare that I will commit code to our production code base, which is probably best for everyone. But it puts me in a position where I can be slightly detached from the whole thing: I know I'm not going to be the person who fixes the incident. It doesn’t stress me out because I know I'm leaning on other people, and those people take incident response very seriously. Every engineer is trained on how to be an incident responder, and Slack in general has a good culture of helping out.

I only have to carry a pager during business hours, but I stress about responding as quickly as possible. Our SLAs for response are 15 minutes, but the school run for my kids takes 15 minutes, so that’s always in my mind.

I would say the thing that stresses me most is paging people to wake them up; it makes me feel bad! This is changing, as Slack has really embraced the whole digital-first, remote kind of thing. We don't phrase it as, you know, we're a remote company or we're a co-located company. We just talk about everything being digital-first. We’re now moving away from being so concentrated on the West Coast to being distributed around the world, which means I need to wake people up less.

It sounds like you’ve got your stress levels under control, which is fantastic. When the pager does go off, do you have any rituals for getting you into ‘incident mode’?

My overriding memory of getting paged is the noise: I have PagerDuty on so many of my devices, and because of how they're configured, it’s legitimately terrifying when I get paged. Everything goes off at once!

I don’t really have any ritual when that does happen though. I like to arrange my windows correctly before I get into anything. I’ll get a Slack window up, a Zoom window up, get in the zone and be calm. It’s really important that an incident commander projects a certain amount of calm into the incident. You need to drive urgency, but you need to remain calm at the same time.

In a really bad incident, I’d probably open a Red Bull — that’s my particular vice. I don't drink tea or coffee and it just seems inappropriate to drink hot chocolate during an incident.

What does the incident commander process look like at Slack?

I take a weekly shift on my rotation, but the average is one shift every two weeks, lasting around 6 hours. We run a follow-the-sun rotation at Slack. Folks in Melbourne will carry the pager, then hand off to Pune, India, then hand off to us, and we’ll hand off to the US.

We have different rotations to handle different severities of incidents. You’ll start your rotation handling SEV-3/4s, which are covered during business hours. You’ll then graduate to major incident commander for SEV-1/2s. We also have separate incident commander rotations for different parts of the business; for example, IT has one, Security has one, and so on. The Major Incident Commander rotation is at the top of the pyramid: if there’s an incident and no-one knows where it goes, it goes to the Major Incident Commander.
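To make the tiers concrete, here is a minimal Python sketch of how a page might be matched to a rotation. The rotation names, the `route_page` function, the `TEAM_ROTATIONS` mapping, and the rule that SEV-1/2 always go to the Major Incident Commander even when a team-specific rotation exists are all illustrative assumptions, not Slack's actual tooling.

```python
from enum import Enum
from typing import Optional


class Rotation(Enum):
    # Hypothetical rotation names based on the tiers described in the interview.
    IC = "incident commander (SEV-3/4)"
    MAJOR_IC = "major incident commander"
    IT = "IT incident commander"
    SECURITY = "security incident commander"


# Hypothetical: business areas that run their own IC rotation.
TEAM_ROTATIONS = {"it": Rotation.IT, "security": Rotation.SECURITY}


def route_page(severity: int, owning_team: Optional[str] = None) -> Rotation:
    """Return the rotation to page for an incident (illustrative rules only)."""
    if severity not in (1, 2, 3, 4):
        raise ValueError(f"unknown severity: SEV-{severity}")
    # Assumption: SEV-1/2 always go to the major incident commander.
    if severity <= 2:
        return Rotation.MAJOR_IC
    # Areas such as IT or Security handle their own lower-severity incidents.
    if owning_team in TEAM_ROTATIONS:
        return TEAM_ROTATIONS[owning_team]
    # Nobody knows where it goes: top of the pyramid.
    if owning_team is None:
        return Rotation.MAJOR_IC
    # Everything else is a SEV-3/4 for the business-hours IC rotation.
    return Rotation.IC
```

With these rules, `route_page(3, "security")` returns `Rotation.SECURITY`, while an unowned `route_page(4)` escalates to `Rotation.MAJOR_IC`. Checking severity before ownership is one design choice among several; an organization could just as reasonably let a team's own rotation keep its SEV-1s.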

What does the first 5 minutes look like after you get paged?

After the terrifying cacophony of PagerDuty, I’ll jump into a Slack channel that has been auto-generated with the standard naming convention #assemble-YYMMDD-some-words, or sometimes into a standing Zoom call that is always open and being recorded. Our customer support team also has a rotation for assisting with incidents as customer liaison, and they’ll hop in as well.

I'll get a quick download from the person that paged me about what they’re seeing, which helps me assign a severity to the incident.

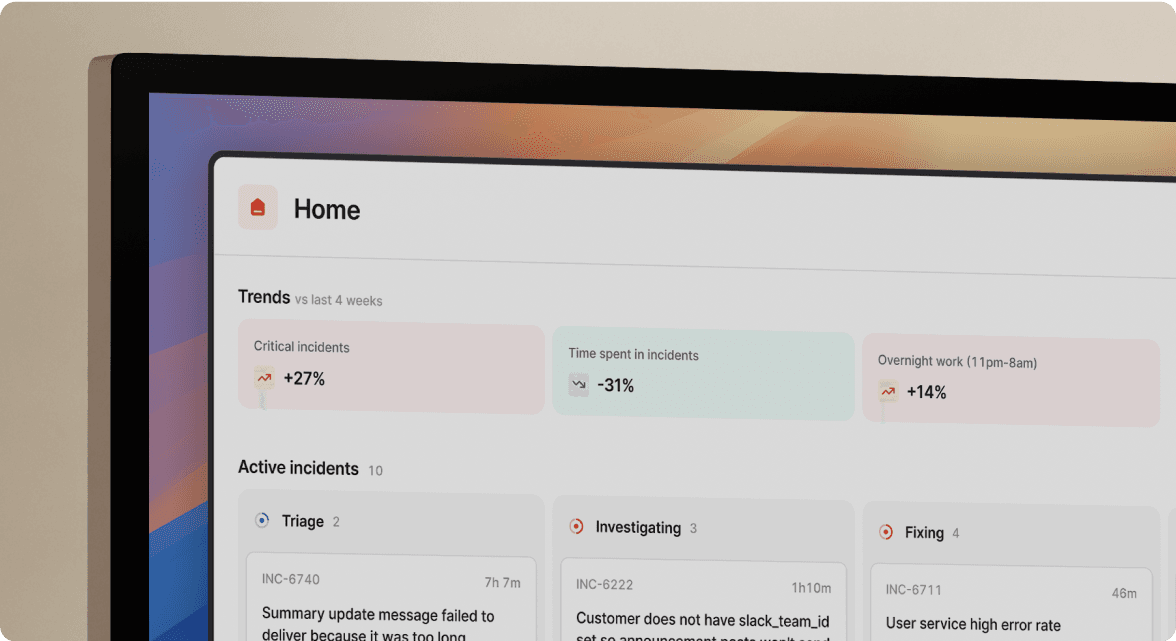

At that point, I’ll declare an incident using our tooling, which is all in Slack. It’ll rename the channel to #incd-YYMMDD-[ticket-number]-some-words, invite certain people into it, create a JIRA ticket to track follow up work, and announce the incident into a central incidents channel.
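The renaming step can be sketched in a few lines of Python. This assumes the exact `#assemble-YYMMDD-some-words` and `#incd-YYMMDD-[ticket-number]-some-words` shapes described above; the regex, the `declared_channel_name` helper, and the example ticket ID are hypothetical and not Slack's actual bot.

```python
import re

# Hypothetical pattern for the auto-generated channel: #assemble-YYMMDD-some-words
ASSEMBLE_RE = re.compile(r"^#assemble-(\d{6})-([a-z0-9-]+)$")


def declared_channel_name(assemble_name: str, ticket: str) -> str:
    """Rename #assemble-YYMMDD-words to #incd-YYMMDD-ticket-words."""
    match = ASSEMBLE_RE.match(assemble_name)
    if match is None:
        raise ValueError(f"not an assemble channel: {assemble_name!r}")
    date, words = match.groups()
    # Slack channel names are lowercase, so normalise the ticket ID as well.
    return f"#incd-{date}-{ticket.lower()}-{words}"
```

For example, `declared_channel_name("#assemble-210315-login-errors", "INC-42")` returns `"#incd-210315-inc-42-login-errors"`, keeping the original date and description so the channel stays searchable after the incident is declared.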

Right after that, I’ll complete the first CAN report (CAN stands for Conditions, Actions and Needs). We’ll identify what we need to do, assign owners, work on them, and fill out CAN reports along the way. Rinse and repeat until the incident is over!
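A CAN report is essentially a small structured record, so a sketch helps show what gets filled in on each cycle. The field names, the `Action` and `CANReport` types, and the plain-text layout below are assumptions for illustration, not Slack's template.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Action:
    description: str
    owner: str  # every action gets an explicit owner
    done: bool = False


@dataclass
class CANReport:
    conditions: str  # what is happening right now
    actions: List[Action] = field(default_factory=list)  # what is being done
    needs: List[str] = field(default_factory=list)  # what the response is missing

    def render(self) -> str:
        """Format the report as plain text for posting to the incident channel."""
        lines = [f"Conditions: {self.conditions}", "Actions:"]
        for a in self.actions:
            status = "done" if a.done else "in progress"
            lines.append(f"  - {a.description} (owner: {a.owner}, {status})")
        lines.append("Needs:")
        lines.extend(f"  - {n}" for n in self.needs or ["none"])
        return "\n".join(lines)
```

Each pass through the loop would produce a fresh report with updated conditions, completed actions marked done, and any new needs, so that someone joining late can read the latest report instead of the whole channel history.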

Sounds like you folks really have your stuff in order when it comes to incident management. What does the training process look like?

As I mentioned earlier, we have shadowing for rotations, so you can learn how the pros do it. Additionally, we have some formal training which is based heavily on the PagerDuty incident response training, with our own customisation. It covers much of what you’d expect: why incident command is important, why we have severities, and so on. But the common theme behind it is that with great power comes great responsibility. You have essentially unlimited power as an Incident Commander at Slack!

We then do a mock incident, which is a lot of fun. We're asked not to talk about what happens in that mock incident, because we don’t want to spoil the surprise for people going through the training! But you can imagine what it is — Dungeons & Dragons style incidents with people throwing curve balls.

The root of what we’re learning is the incident command system that was developed by California firefighters in the 1970s. The broad brush strokes of incident command at Silicon Valley technology companies all hail from this. We do have to retrain people out of terminology specific to other companies (e.g. Incident Manager On-Call at Facebook vs. Incident Commander at Slack), because the last thing you want in an incident is confusion and a lack of clarity.

What’s hard for you being an IC at Slack?

I think Slack has generally got it right. There are tweaks we could make around the edges, and we do continually.

One weakness is that we are very reliant on our own tooling. We target high nines for uptime, and we hit them. But when Slack goes hard down, like in the incident I discussed, response gets harder. However, what’s the alternative? Having an IRC server sitting there that goes rusty? You're so used to everything working in a certain way, and we optimize everything for it.

At the end of the day, though, incident response is all about expecting the unexpected, so we have detailed runbooks stored in off-Slack systems that are accessible even if Slack itself is unavailable. It’s not as good as the tooling we’ve built for ourselves, but it’s more than enough to make sure that we can get the system back into a healthy state for ourselves and our customers.

I think we could definitely do a better job of diversifying our incident commander bench — that’s something that the incident response team would love. I think I'm the only non-engineer on our major Incident Commander rotation. We’d love to have more salespeople, more customer support people, or more communications people. There’s no reason that you have to be a software engineer to be able to be an Incident Commander. Like any aspect of life, more diverse viewpoints create more interesting and better systems.

I totally agree with you. From that incredibly important statement, to something more flippant for us to end with. If you’re in an incident and everything’s on fire, what would be the one song you’d choose to have on in the background to pick your spirits up?

Something really, really dark about everything burning down would probably be my choice, just because I tend to have relatively dark humor. But if I wanted something more uplifting, I’d probably go with Harder, Better, Faster, Stronger by Daft Punk.

Good choice! It’s been a pleasure speaking to you, Colm!

Thank you for having me!
