Better security for your app's secrets

The incident-io/core application uses a mixture of environment variables, config files and secrets stored in Google Secret Manager to configure the app. This is a reference guide to all the parts that make up this flow.

What is config?

Application config comes in several forms:

1. Non-sensitive config, such as GCP_PROJECT_ID or GITHUB_APP_NAME

2. Sensitive config, like STRIPE_API_KEY or GITHUB_WEBHOOK_SECRET

3. Runtime configuration, like DATABASE_URL or MEMCACHIER_PASSWORD

Both (1) and (2) make sense to track alongside the code that uses them and, crucially, we would expect them to have the same value in every instance of an application environment. If we deployed the staging environment onto two infrastructures, say Heroku and Google Cloud Run as part of a migration, this config would be identical between those two instances.

Runtime configuration (3) differs in that it’s specific to the infrastructure: different instances of the same environment might be given different DATABASE_URL values, as might different roles within one instance (perhaps we should give cron a DATABASE_URL that points at a replica, rather than the primary).

Where do we store it?

The incident-io/core application includes configuration files for each environment it is deployed into (at time of writing, staging and production) which you can find at config/environments/<env>.yml, each parseable into a Config structure defined in the code.

💡 Config files mostly track non-sensitive (1) and sensitive (2) config, and only include runtime (3) if it makes sense to assert on it being present when booting.

Config is a simple structure of FIELD_NAME to value, with some optional struct tags that opt values into additional validation.

A small sample is:

```go
type Config struct {
	APP_ENV           string `config:"required"` // type 1 (non-sensitive)
	APP_ROOT          string                     // type 3 (runtime)
	ACCESS_TOKEN_SALT Secret                     // type 2 (sensitive)
	// ...
}
```

A slice of the production configuration file looks like this:

```yaml
# config/environments/production.yml
---
APP_ENV: production
ATLASSIAN_OAUTH_CLIENT_ID: OEr2GKQfh8rHiqKVtFPWRiK2dAJ20FTd
ATLASSIAN_OAUTH_CLIENT_SECRET: secret-manager:projects/65058793566/secrets/app-atlassian-oauth-client-secret/versions/1
```

How do we load and use config values?

When the application needs any of these config values, it reads them from a package singleton (e.g. config.CONFIG.APP_ENV) that is populated at application boot by a call to config.Init.

Key things to know about the loading of config:

- We first load the appropriate configuration file into a blank config.Config.

- Then we override any config values that are set as environment variables: if you have an APP_ENV environment variable set, it will take precedence over whatever was in the configuration file.

- Next we look at any Secret values, which until this point will be in the form secret-manager:<reference>. This means the value is stored in Google Secret Manager, usually within the same Google project that the app runs under, and we’ll set the config value to whatever we access at that reference in Secret Manager.

After this, the config is loaded and ready to be used. Other runtime values (3) might be loaded directly via os.Getenv if needed.
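The sample Config tags APP_ENV with `config:"required"`. Assuming "required" simply means the field must be non-empty once loading has finished (the real validation rules aren’t described here), a boot-time check could use reflection along these lines. The validate function and this cut-down Config are hypothetical.

```go
package main

import (
	"fmt"
	"reflect"
)

// Config is a hypothetical cut-down config struct; only the
// config:"required" tag convention comes from the app's sample.
type Config struct {
	APP_ENV  string `config:"required"`
	APP_ROOT string
}

// validate returns an error naming every field tagged config:"required"
// that still holds its zero value after loading.
func validate(cfg any) error {
	v := reflect.ValueOf(cfg)
	if v.Kind() == reflect.Pointer {
		v = v.Elem()
	}
	t := v.Type()
	var missing []string
	for i := 0; i < t.NumField(); i++ {
		field := t.Field(i)
		if field.Tag.Get("config") == "required" && v.Field(i).IsZero() {
			missing = append(missing, field.Name)
		}
	}
	if len(missing) > 0 {
		return fmt.Errorf("missing required config: %v", missing)
	}
	return nil
}

func main() {
	fmt.Println(validate(&Config{APP_ROOT: "/app"}))    // missing required config: [APP_ENV]
	fmt.Println(validate(&Config{APP_ENV: "production"})) // <nil>
}
```

Failing at boot like this is what makes it worth listing a runtime (3) value in a config file: the app refuses to start rather than failing later on first use.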

What is Secret Manager and how do we use it?

Google Secret Manager can be used to securely store secret material. It stores secret material under a secret name, and each secret can have multiple secret versions.
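Config files refer to a specific secret version by its Secret Manager resource name, such as projects/65058793566/secrets/app-atlassian-oauth-client-secret/versions/1 from the production file. As a small sketch (parseSecretVersion and SecretVersionRef are hypothetical names, not part of the app or of Google’s client library), splitting such a name into its project, secret and version looks like this:

```go
package main

import (
	"fmt"
	"strings"
)

// SecretVersionRef holds the parts of a Secret Manager version name.
type SecretVersionRef struct {
	Project string // project ID or number
	Secret  string // secret name
	Version string // version number, or an alias such as "latest"
}

// parseSecretVersion splits a name of the form
// projects/{project}/secrets/{secret}/versions/{version}.
func parseSecretVersion(name string) (SecretVersionRef, error) {
	parts := strings.Split(name, "/")
	if len(parts) != 6 || parts[0] != "projects" || parts[2] != "secrets" || parts[4] != "versions" {
		return SecretVersionRef{}, fmt.Errorf("not a secret version name: %q", name)
	}
	for _, p := range []string{parts[1], parts[3], parts[5]} {
		if p == "" {
			return SecretVersionRef{}, fmt.Errorf("empty segment in %q", name)
		}
	}
	return SecretVersionRef{Project: parts[1], Secret: parts[3], Version: parts[5]}, nil
}

func main() {
	ref, err := parseSecretVersion("projects/65058793566/secrets/app-atlassian-oauth-client-secret/versions/1")
	if err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", ref)
}
```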

Developers are able to access Secret Manager via the Google Cloud Console or through APIs.

As a quickstart:

- All Google services exist within ‘projects’. Each incident-io/core environment has its own project, so incident-io-staging and incident-io-production for the primary environments, along with incident-io-dev-<name> for each developer’s environment (you set this up during onboarding).

- If you are managing secrets for an environment, you must do so from within that environment’s Google project.

- When managing secrets, never delete a secret version. Secret versions are intended to be immutable: deleting one would make it impossible to roll back to an older app version that references it.

- Developers are granted permission to manage secrets in all projects, so you should be able to access anyone’s Secret Manager instance.

- Service accounts are granted only limited access to secrets, scoped to a single project: staging cannot read production’s secrets, or those of any other project.
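The rule against deleting secret versions is easiest to see with a toy model. The sketch below is an in-memory stand-in for one project’s Secret Manager instance, not the real service or its client library; store, addVersion and access are hypothetical. Adding a version creates the secret if needed and only ever appends, so an older release pinned to version 1 keeps resolving after a rotation.

```go
package main

import (
	"fmt"
	"strconv"
)

// store is a hypothetical in-memory model of one project's secrets.
// Versions are only ever appended; there is deliberately no delete.
type store struct {
	secrets map[string][]string // secret name -> payloads; index i holds version i+1
}

func newStore() *store { return &store{secrets: map[string][]string{}} }

// addVersion creates the secret if it does not exist yet, appends a new
// version and returns that version's number.
func (s *store) addVersion(name, payload string) int {
	s.secrets[name] = append(s.secrets[name], payload)
	return len(s.secrets[name])
}

// access resolves a pinned version number. Old numbers keep working
// because nothing is ever removed.
func (s *store) access(name, version string) (string, error) {
	n, err := strconv.Atoi(version)
	versions := s.secrets[name]
	if err != nil || n < 1 || n > len(versions) {
		return "", fmt.Errorf("secret %q has no version %q", name, version)
	}
	return versions[n-1], nil
}

func main() {
	s := newStore()
	v1 := s.addVersion("app-example-secret", "old-value")
	v2 := s.addVersion("app-example-secret", "rotated-value")
	// A release pinned to version 1 still resolves after the rotation.
	old, _ := s.access("app-example-secret", strconv.Itoa(v1))
	cur, _ := s.access("app-example-secret", strconv.Itoa(v2))
	fmt.Println(old, cur)
}
```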

Most interactions with Google Secret Manager will happen through incident-io/core/server/cmd/config, which is a CLI that provides wrappers for common operations.

```
usage: config [<flags>] <command> [<args> ...]

Manage configuration files for the app

Flags:
  --config-file=CONFIG-FILE  The configuration file to load

Commands:
  show [<flags>]
    Loads and shows a configuration file

  create-secret --project=PROJECT --field-name=FIELD-NAME
    Create a new version of a secret in Google Secret Manager. Will create secret if it does not already exist.
```

What is the security model?

The secret material our app uses is extremely valuable, and an obvious target for attackers. We’ve taken steps to protect these secrets that go beyond our normal procedures, which comes with two important and competing realisations:

- We have become much more secure against accidental exposure or malicious efforts to extract our secrets

- Applying these protections has created several new opportunities to impact our availability

Until now, very little could change outside of our control that might lead to significant downtime, but even small things – such as Heroku changing their IP ranges – may now lead to extended outages, especially if we don’t understand the setup.

We can mitigate these risks by having everyone read this article, which explains what we’ve configured, why, and how it might bite us.

Separating secret material from our app runtime

We now separate secret material from our app runtime, which puts another step between someone accessing the environment variables and getting access to the secrets.

- Context
  - We run our app in Heroku, which encourages provisioning config as environment variables.
- Threats
  - Anyone who gains access to Heroku, whether through a security incident or by socially engineering Heroku credentials, would see every secret.
  - A third-party dependency used within our app is compromised and posts the contents of os.Environ to an attacker.
  - Engineers accidentally expose the environment by dumping the process.

Our solution is to place secrets inside Secret Manager and grant read access to them only to a Google Service Account associated with the app. By necessity, we continue to provision the Google Service Account credentials to the Heroku compute via an environment variable, but this still provides us with several benefits:

- Even if an attacker accesses our environment, they would need to understand how to use the Google Service Account credentials to access Secret Manager data (we have other mitigations for this, see below)

- We can revoke these credentials if we ever learn of a breach, which means an attacker would need to use them quickly, and we could prove through Secret Manager audit logs whether they accessed anything, and what they accessed.

- Accidental exposure of environment variables no longer provides secret material wholesale.

Restricting secret access using access levels

With just Google IAM and putting secrets in Secret Manager, we ensure compromising our application environment is less likely to expose the values of the secrets, as we limit the possibility of access to a small window between a breach and when we rotate the Google Service Account credentials.

That small window is still concerning, though: a motivated attacker could pull our secrets in that time, and we’d be unable to cut off access if they did (they’d have a copy of the secrets, which is game over).

To restrict this, we’ve created a security perimeter in our Google Cloud Platform account that represents the locations we expect to receive requests from, and limited access to Secret Manager to appropriate combinations of source and IAM credentials.

Before we go into details:

- Google Access Context Manager is the Google service that provides Access Policies, which are containers for constructs like Security Perimeters or Access Levels.

- Access Policies are scoped either to the entire Google Cloud account (as in, every single project) or to a single project.

- Access Levels are rules that apply to traffic incoming to Google Cloud services (such as Pub/Sub, or Secret Manager) based on dimensions of the request, usually source IP range but could also be things like “from a corporate Google Chrome instance with screen lock enabled”.

- Security Perimeters are analogous to a firewall for Google Cloud services. You create a perimeter and say which Access Levels are permissible, then provide ingress (incoming traffic) rules that allowlist which Access Levels and IAM credential pairs are permitted to access which services.

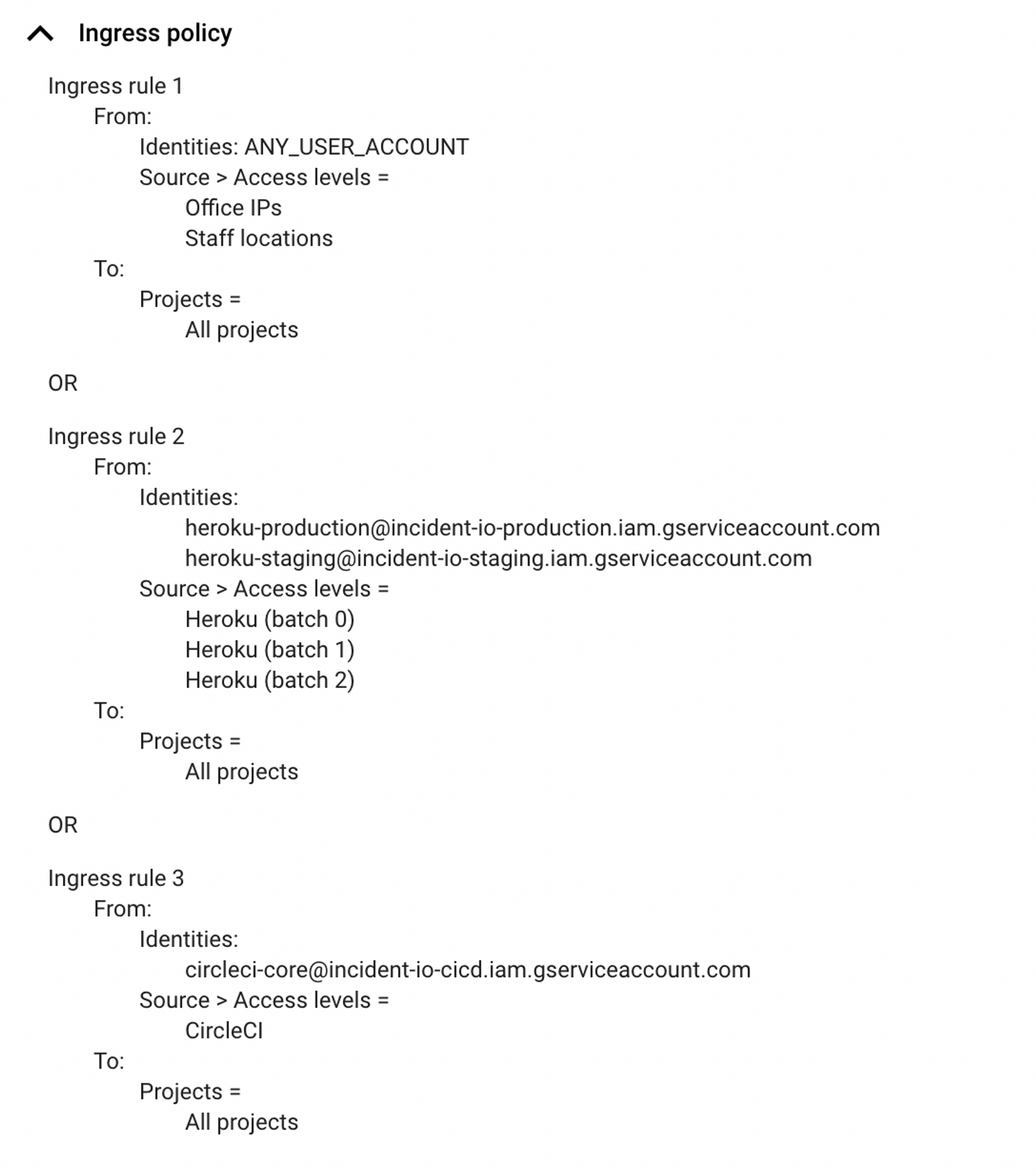

To protect us against leaking of our Google Service Account credentials, we have:

- Created a “Restrict Secret Manager” Security Perimeter inside our global account Access Policy, which is applied to projects running incident-io/core and specifies the permissible Secret Manager access.
- The perimeter references several Access Levels that we’ve defined to fit our needs:
  - Office IPs: the stable IP addresses of our physical offices
  - CircleCI: the static egress IPs published by CircleCI, which we can use from our CI jobs
  - Staff locations: the regions we expect staff to work from (currently the UK and US)
  - Heroku (batch N): the IP addresses of eu-west-1, the AWS region Heroku runs our infrastructure from

The ingress policy below shows how we restrict specific patterns of access and pair them with IAM credentials:

The result is that:

- If we ever leak the credentials externally, accessing Secret Manager would only be permissible from within eu-west-1, which is likely to confound anyone who tried.

- Should someone leak their Google user credentials, access will only be permitted from within the UK or US, removing the majority of problem countries that attacks usually originate from.

Finally, whoever stole the credentials is likely to try accessing our secrets before realising these security rules are in place. We can set up alerts for any security policy violations so we hear about them as soon as they happen, shrinking the window of opportunity even further.
