incident.io on Ship It!

A few weeks ago, Stephen and I had the pleasure of talking to Gerhard Lazu on Changelog’s Ship It! podcast. We chatted about incident workflows, our relentless focus on simplicity, and how we use incident.io when we have incidents ourselves.

If you haven’t listened already, you can find the podcast here. It’s well worth subscribing too; it’s always a great listen!

In the meantime, here’s a summary to whet your appetite!

Your website talks about playing the leading role, not all the roles. What do you mean by this?

When things go wrong, you get paged and dropped into a blank space where you need to define a process on the spot.

At this point there’s a lot you need to do: let people know what’s going on, SSH into servers, start investigating, and so on.

There are a number of different roles you need to play, and by default you’re responsible for taking them all on.

With incident.io you get to encode your process in the calm light of day, and when you’re paged at 2am you can leave the process management to us, and focus on the problem at hand.

What does the ideal incident flow look like to you?

It depends! There’s no one size fits all approach here, but we believe there's a set of core defaults that every company should follow.

We often think about this on a scale borrowed from the issue-tracking world, running from Jira to Linear. At one end you have Jira, which is endlessly flexible and does pretty much whatever you need. It’s very powerful, but it pushes all the decision-making and control onto the operator. At the other end of the spectrum there’s Linear, our issue tracker of choice. It’s opinionated about how things should work, and if that fits your model it’s a vastly better experience, taking undifferentiated thinking off your plate.


In a similar vein, we’re building incident management with opinions. We’re opinionated about incidents having leads, defining severities and providing structured updates. Zooming out slightly, we believe there are a few principles that everyone should follow:

- Keeping the context in one place so it’s easy to see what’s happened

- Defining clear roles so it’s obvious who is responsible for what

- Having a structured way to coordinate actions so nobody duplicates work or unwittingly does something to make things worse

- Ensuring good communication, whether that’s between those involved in managing the incident, broadcasting internally to stakeholders, or sharing with your customers publicly.

But we’re also aware that every company is different, so each one needs to be able to imprint its own process. What we’ve built is a tool that encodes these foundations while letting you shape the specifics to your needs.

One thing that attracted me to incident.io is the simplicity. The flow of this product felt polished and simple.

We’re really pleased that’s been noticed. Simplicity isn’t an accident here; it’s very much an active product choice.

Have you heard the quote often attributed to Mark Twain?

“I didn’t have time to write you a short letter, so I wrote you a long one.”

Building something simple takes time, it takes effort, and it relies on an extensive understanding of the domain.

We want it to be true that you can install incident.io and start running better incidents with almost zero learning curve. At its core, that means you only need to know a single slash command to get going, and you’ll find yourself in an incident channel. At the end of the day it’s just Slack, so it feels familiar, but by running things through us you get a lot for free: things like automatic timeline curation and nudges to provide updates.

Over time, we help you adopt more of the product by providing contextual information and nudges. When someone creates an action, for example, we can subtly let other folks know how they did it.

In general we’re building with a layered product approach. On day one, you can find value by following your process in one of our channels. As you explore more of the product you’ll find more and more power when you need it.

How do you use incident.io?

It’s a bit like Inception.

For some people, incidents are major events: “the building is burning down”, and they happen every six months (yes, that was our actual example 😂). Hopefully not that specific incident, but you get the gist!

We think of an incident as an interruption that pulls you away from what you were doing because it demands a level of urgency. That might be a bug investigation, a suspicion that something isn’t working as expected, or a small outage. We even think incidents are useful for planned work like complex deployments.


We use the product regularly, and often think of incidents like notebooks. When a Sentry error appears in Slack, we’ll declare an incident, dive into the details and share findings as we go. It works really well and means everyone has a clear understanding of what was done and the conclusion we came to.

With incident.io there's essentially no cost to declaring an incident so there’s no good reason not to use them liberally.

What does your production setup look like?

Our production setup is intentionally simple (seeing the theme here?!)

incident.io runs as a single Go binary on Heroku, with Postgres as the backing store. All testing and deployment is managed by CircleCI, with the exception of our Terraform infrastructure code, which is deployed using Spacelift.

We use Google Cloud’s operations suite for logging and tracing, Grafana Cloud for visualisation, and Sentry for error tracking. It’s a really great setup, and one we’ll blog about in detail in the future!

And no microservices?

No! Internally, our code is broken into services, so there are specific services for dealing with actions or assigning roles, for example, but with everything in one binary we’ve vastly simplified our production operations.
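To make the “services inside one binary” idea concrete, here is a minimal Go sketch of the pattern. It is not incident.io’s code: `Store`, `ActionsService`, `RolesService` and `App` are hypothetical names, and an in-memory map guarded by a mutex stands in for Postgres. Each service owns one area of the domain, and `NewApp` wires them together in a single process, so they call each other as plain Go values rather than over the network.

```go
package main

import (
	"fmt"
	"sync"
)

// Store is a hypothetical in-memory stand-in for the shared Postgres database.
type Store struct {
	mu      sync.Mutex
	actions map[string][]string          // incident ID -> action descriptions
	roles   map[string]map[string]string // incident ID -> role -> user
}

func NewStore() *Store {
	return &Store{
		actions: make(map[string][]string),
		roles:   make(map[string]map[string]string),
	}
}

// ActionsService owns everything to do with incident actions.
type ActionsService struct{ store *Store }

func (s *ActionsService) Create(incidentID, description string) {
	s.store.mu.Lock()
	defer s.store.mu.Unlock()
	s.store.actions[incidentID] = append(s.store.actions[incidentID], description)
}

// List returns a copy so callers cannot mutate the stored slice.
func (s *ActionsService) List(incidentID string) []string {
	s.store.mu.Lock()
	defer s.store.mu.Unlock()
	return append([]string(nil), s.store.actions[incidentID]...)
}

// RolesService owns role assignment (lead, comms and so on).
type RolesService struct{ store *Store }

func (s *RolesService) Assign(incidentID, role, user string) {
	s.store.mu.Lock()
	defer s.store.mu.Unlock()
	if s.store.roles[incidentID] == nil {
		s.store.roles[incidentID] = make(map[string]string)
	}
	s.store.roles[incidentID][role] = user
}

// Holder reports who holds a role, and whether anyone does.
func (s *RolesService) Holder(incidentID, role string) (string, bool) {
	s.store.mu.Lock()
	defer s.store.mu.Unlock()
	user, ok := s.store.roles[incidentID][role]
	return user, ok
}

// App wires every service into a single process sharing one store.
type App struct {
	Actions *ActionsService
	Roles   *RolesService
}

func NewApp() *App {
	store := NewStore()
	return &App{
		Actions: &ActionsService{store: store},
		Roles:   &RolesService{store: store},
	}
}

func main() {
	app := NewApp()
	app.Roles.Assign("INC-1", "lead", "alice")
	app.Actions.Create("INC-1", "Roll back the deploy")
	lead, _ := app.Roles.Holder("INC-1", "lead")
	fmt.Println(lead, app.Actions.List("INC-1"))
}
```

Running it prints `alice [Roll back the deploy]`. The boundaries between services still exist in the code, but there is only one thing to deploy, monitor and scale.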

How do you think about runbooks?

With incident.io we're building rails for you to follow at your org. We’ve already tackled the first thing on that journey which is nudges in incident channels to help you follow the process we’ve laid out.

We’ve just started work on a feature we’re calling workflows, which will let you define exactly how incidents should be run in your organization. For example: when an incident is marked as affecting the platform, page a platform engineer, send an email, and create five default actions that need to be done. That looks a bit like a runbook.
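A workflow like the one described could be modelled as a trigger condition plus a list of steps. The Go sketch below assumes a hypothetical shape (`Incident`, `Step`, `Workflow`, `Plan` and `platformWorkflow` are illustrative names, not the actual workflows feature), and it only plans the steps rather than paging anyone or sending email. The target names and the five default action titles are placeholders.

```go
package main

import "fmt"

// Incident holds the fields a workflow can match on (hypothetical).
type Incident struct {
	ID                string
	AffectedComponent string
}

// Step is one thing a workflow does when it fires.
type Step struct {
	Kind   string // "page", "email" or "create_action" in this sketch
	Target string
}

// Workflow pairs a trigger condition with the steps to run.
type Workflow struct {
	Name    string
	Matches func(Incident) bool
	Steps   []Step
}

// Plan collects the steps of every workflow whose condition matches.
func Plan(workflows []Workflow, inc Incident) []Step {
	var steps []Step
	for _, wf := range workflows {
		if wf.Matches(inc) {
			steps = append(steps, wf.Steps...)
		}
	}
	return steps
}

// platformWorkflow encodes the example: page, email, five default actions.
func platformWorkflow() Workflow {
	steps := []Step{
		{Kind: "page", Target: "platform-oncall"},
		{Kind: "email", Target: "stakeholders@example.com"},
	}
	defaults := []string{
		"Confirm customer impact",
		"Post an initial status update",
		"Check recent deploys",
		"Review platform dashboards",
		"Schedule a post-incident review",
	}
	for _, title := range defaults {
		steps = append(steps, Step{Kind: "create_action", Target: title})
	}
	return Workflow{
		Name:    "platform incidents",
		Matches: func(inc Incident) bool { return inc.AffectedComponent == "platform" },
		Steps:   steps,
	}
}

func main() {
	workflows := []Workflow{platformWorkflow()}
	inc := Incident{ID: "INC-42", AffectedComponent: "platform"}
	for _, s := range Plan(workflows, inc) {
		fmt.Printf("%s -> %s\n", s.Kind, s.Target)
	}
}
```

For an incident affecting `platform`, `Plan` returns seven steps (the page, the email and the five default actions); for any other component it returns none. Keeping the condition as a function makes it straightforward to match on other incident fields later.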

We have Makefiles to help us follow procedures. Could they be automated? Yes. Should they be automated? We don’t know. How do you think about this?

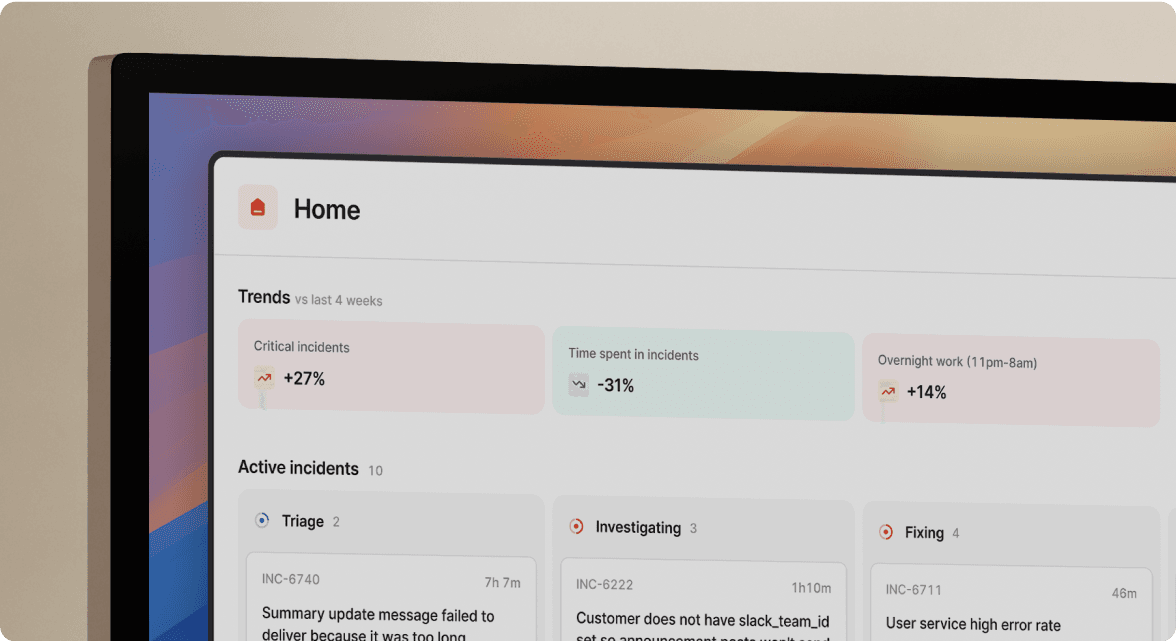

Fundamentally, what we’re building is a structured store of information, taking data from Slack, Zoom, PagerDuty and so on. That gives you queryable information you can interrogate, and you can imagine how powerful that would be.

We’re planning to make all of this available so you can pull it back out into your own data repos too.
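As a rough illustration of what “queryable” could mean, the Go sketch below treats each incident as a structured record and computes the mean time to resolve per severity. The `Record` fields, the `MeanTimeToResolve` and `sampleRecords` helpers, and the sample data are all made up for the example; they are not incident.io’s data model or export format.

```go
package main

import (
	"fmt"
	"time"
)

// Record is a hypothetical, simplified incident record assembled from
// tools such as Slack or PagerDuty.
type Record struct {
	ID       string
	Source   string // where the incident was declared, e.g. "slack"
	Severity string
	Declared time.Time
	Resolved time.Time
}

// MeanTimeToResolve groups records by severity and returns the average
// time between declaration and resolution for each group.
func MeanTimeToResolve(records []Record) map[string]time.Duration {
	totals := make(map[string]time.Duration)
	counts := make(map[string]int)
	for _, r := range records {
		totals[r.Severity] += r.Resolved.Sub(r.Declared)
		counts[r.Severity]++
	}
	means := make(map[string]time.Duration, len(totals))
	for sev, total := range totals {
		means[sev] = total / time.Duration(counts[sev])
	}
	return means
}

// sampleRecords returns made-up data for the example.
func sampleRecords() []Record {
	base := time.Date(2022, 1, 1, 9, 0, 0, 0, time.UTC)
	return []Record{
		{ID: "INC-1", Source: "slack", Severity: "major", Declared: base, Resolved: base.Add(90 * time.Minute)},
		{ID: "INC-2", Source: "pagerduty", Severity: "major", Declared: base, Resolved: base.Add(30 * time.Minute)},
		{ID: "INC-3", Source: "slack", Severity: "minor", Declared: base, Resolved: base.Add(10 * time.Minute)},
	}
}

func main() {
	means := MeanTimeToResolve(sampleRecords())
	// Iterate in a fixed order because Go map iteration order is random.
	for _, sev := range []string{"major", "minor"} {
		fmt.Println(sev, means[sev])
	}
}
```

With the sample data this prints `major 1h0m0s` and `minor 10m0s`. Once incidents live in a structured form, questions like this become a few lines of code instead of an afternoon of scrolling through Slack.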

If you have questions about any of this or want to find out more about incident.io, we’d love to chat. Join us in the incident.io Community!

I'm one of the co-founders, and the Chief Product Officer here at incident.io.
